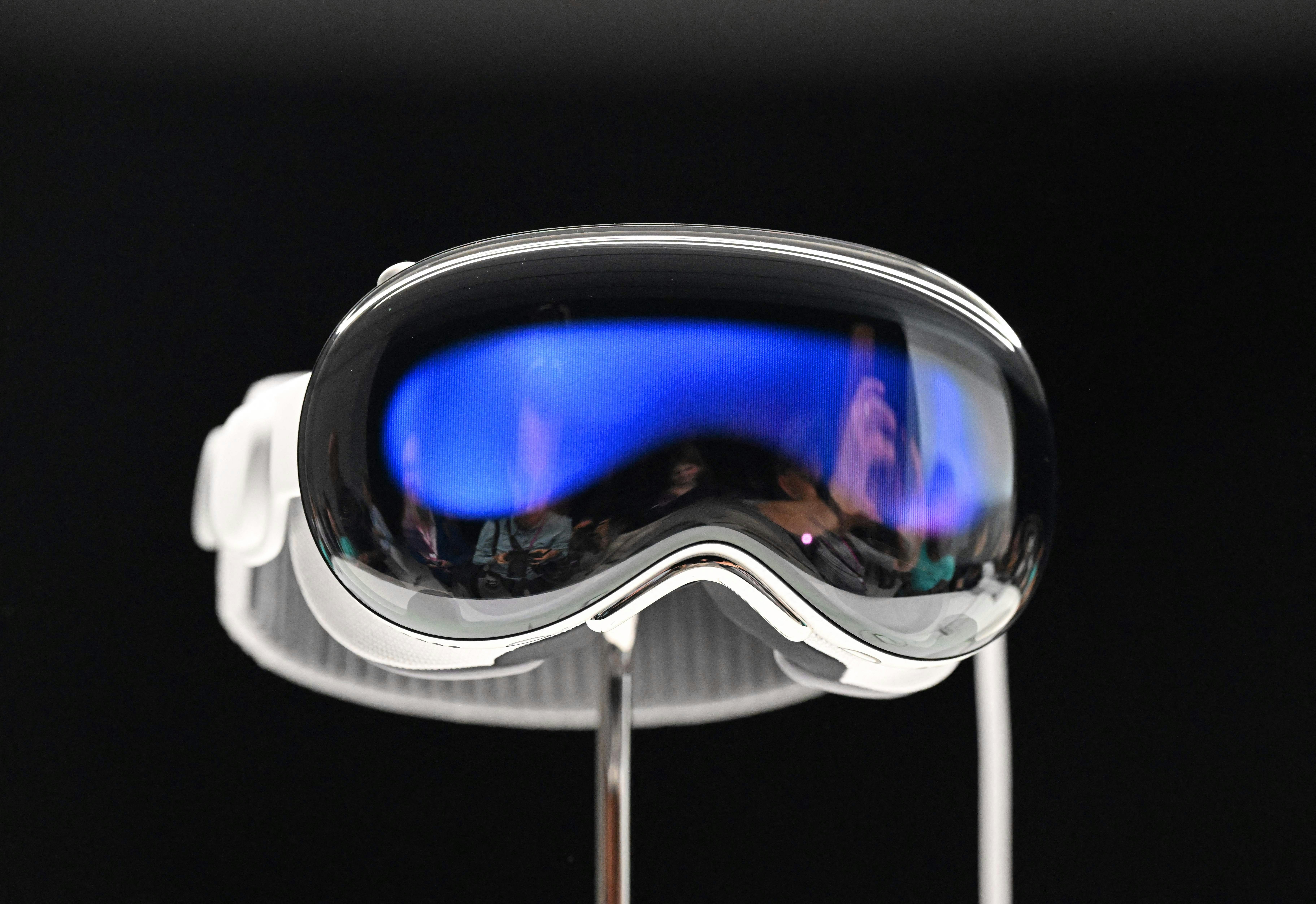

If there was one thing Apple could be counted on to do in introducing the Vision Pro “spatial computer,” it was sanding off most, if not all, of the rough edges of the mixed-reality headsets that came before it. Based on our first hands-on with the device, the yet-to-be-released headset offers the clearest possible passthrough, interface elements with the least amount of artifacting, and smooth, intuitive interactions.

It’s a level of polish mirrored in Meta’s Quest 3, which, counterintuitively, is in many ways more “pro” than the company’s own Quest Pro. With a dedicated depth sensor for setting up roomscale experiences, full-color passthrough for mixed reality, and a sharper display with a resolution of 2,064 × 2,208 pixels per eye, the Quest 3 makes a strong case that mixed reality can be fun and even useful for getting work done (if giant virtual monitors are your thing).

Neither headset is the end goal, of course. Shrinking these computers into something resembling glasses, or sunglasses, is still what both companies are working toward, and it’s a challenge that will likely force compromises in how we interact with them. You can only fit so many downward-facing cameras into something as small as a pair of glasses, after all. Luckily, the device many of us already wear on our wrists could help.

Double Tap Is the Start

Double Tap, introduced on the Apple Watch Series 9 and enabled by the extra Neural Engine boost that comes with the smartwatch’s new S9 SiP (System in a Package), is not the revolutionary feature Apple makes it out to be. It’s a convenience, a “nice to have.” But navigating the small interfaces of watchOS 10 and tapping buttons without even touching the screen hints at where Apple could go.

The Apple Watch has long been a passive collector of information, a heart rate monitor and step tracker that was, for a time, required to use Apple Fitness+. But the potential of Double Tap, along with the more complex accessibility features Apple has built into its smartwatch over the years, such as AssistiveTouch’s pinch and clench gestures, suggests the Apple Watch could work just as well as an input device.

According to Apple, the Neural Engine in the S9 SiP processes accelerometer, gyroscope, and optical heart rate sensor data with a new machine learning algorithm that lets it detect the “tiny wrist movements and changes in blood flow when the index finger and thumb perform a double tap.” Given how tightly connected Apple’s products are, it doesn’t seem like much of a leap for those same sensors to power a Double Tap-esque feature for navigating the interface of your phone, tablet, laptop, or spatial computer.

Meta’s Smartwatch Controller

If any part of this “smartwatch as controller” idea sounds familiar, it’s because Meta has regularly suggested it would like to do something similar with its own hardware. The company hopes to use EMG, or electromyography (a method of using sensors to record the electrical activity of skeletal muscles), to detect the intention to move. That would let it register swipes, taps, and other hand gestures without you having to actually perform them, provided you’re wearing the right sensors.

According to Bloomberg, Meta’s efforts got as far as designing and producing a version of a smartwatch it had planned to release earlier this year. The watch, codenamed “Milan,” featured the required EMG sensors and two cameras: one for video calls while wearing the watch and another that would let the device detach from its band and act as an action camera of sorts. The device was ultimately shelved because of technical issues, but Meta could certainly bring the work it did on those sensors to another product, especially if it plans to introduce a more fully featured version of its smart glasses.

Connecting the Dots

Voice control will always have a place in augmented, virtual, and mixed reality hardware, but for these devices to become simpler to use, more compact, and ideally cheaper, hardware compromises will have to happen. Apple is reportedly dropping the Vision Pro’s exterior display from future, cheaper versions of the headset, and it seems entirely feasible that it could offload further costs by making the Apple Watch a requirement for unlocking the more immersive control methods the headset has to offer. If not for the Vision Pro, then for whatever glasses-like product the company releases 10 years from now.