Update: Siri's new features may not arrive all at once, and the full update may not appear until spring 2025.

Apple Intelligence finally got its proper announcement during the WWDC 2024 keynote, but the potential show-stealer is Siri's new intelligence. Apple's voice assistant is getting smarter than ever, with new actions based on personal context, ushering in a new era that aims to simplify the tasks you do on your Apple devices.

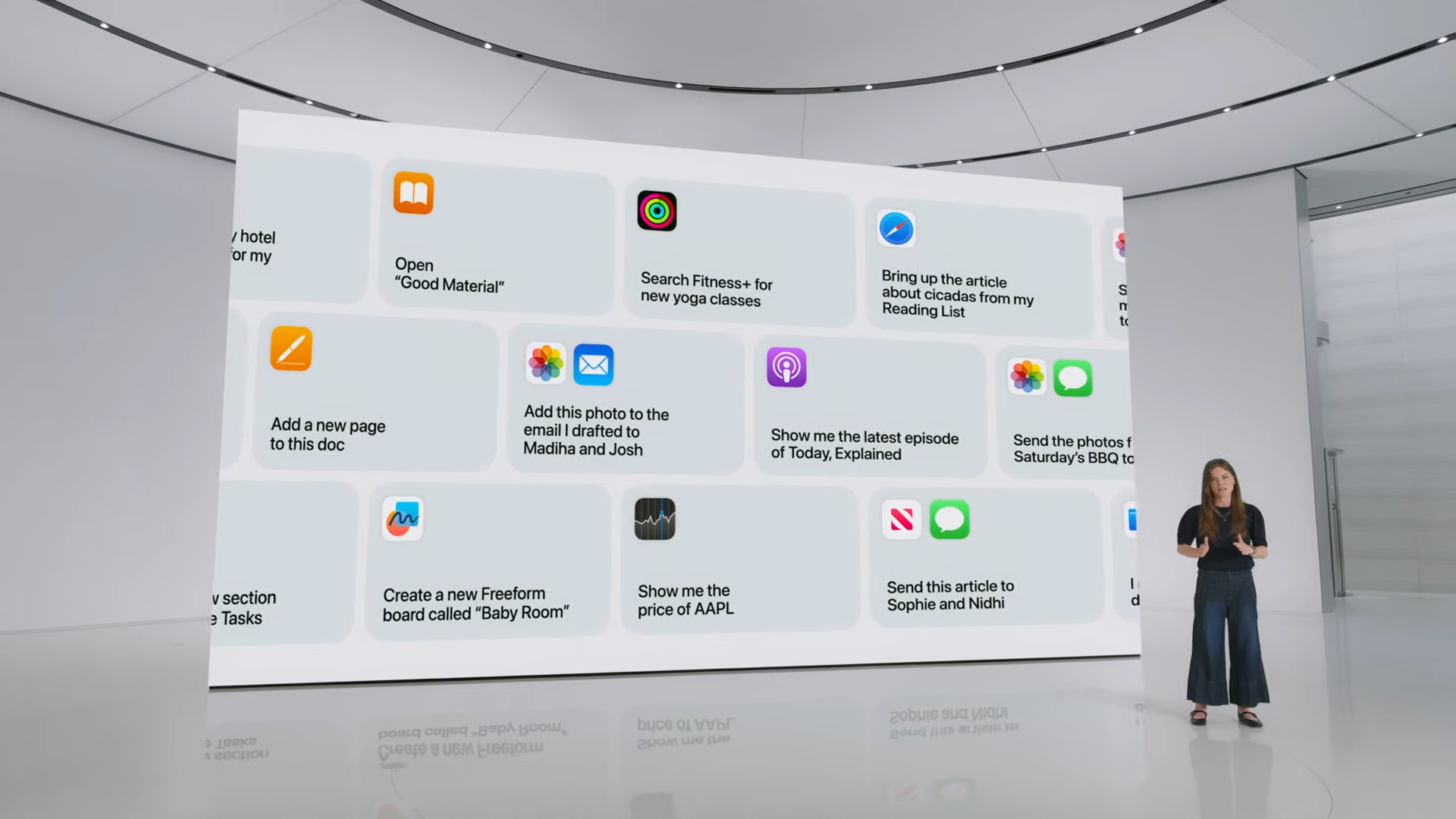

What's really impressive are the in-app actions that let Apple Intelligence work across a number of apps, so Siri can interact with them on your behalf. It's truly like having a personal assistant working for you.

Here's a quick look at what the revamped Siri can do.

Apple's Craig Federighi summed up Apple Intelligence best: "We are embarking on a new journey to bring you intelligence that understands you." Siri is undoubtedly getting turbocharged with Apple Intelligence, which will become available as part of a beta for iOS 18, iPadOS 18, and macOS Sequoia later this fall. However, these new Siri features will only be supported on the iPhone 15 Pro, iPhone 15 Pro Max, and iPads and Macs powered by an M1 chip or later.

New Siri visual animation

Siri powered by Apple Intelligence is getting a brand-new design, though you can still summon it by voice. Instead of the circular icon and animation we've grown used to when accessing Siri on iPhone and other Apple devices, you'll see a glowing light that wraps around the entire edge of the screen and pulsates to indicate it's listening. This makes it much more obvious that you've invoked Apple's assistant.

Maintains conversation context

Rather than treating every voice command as a fresh request, Siri can now maintain conversation context, so it understands your follow-up questions and responses. This makes conversing with Siri more natural, as the assistant knows what you're referring to based on your previous interactions.

For example, if you ask for the weather report for a particular location and follow that up with a request like "Tell me how to get there," Siri will know where "there" is.

You'll be able to tell whether Siri is still listening or awaiting a response from the new animation, which continues to play on screen.

Type to Siri

Apple's WWDC 2024 keynote also demoed a way to interact with Siri without using your voice. Type to Siri lets you double-tap at the bottom of your iPhone's screen and type questions, requests, and actions for the assistant. It's perfect for moments when you don't want others to overhear your requests, making Siri interactions much more discreet.

On-screen awareness

Whichever app you're using, Siri with Apple Intelligence is smart enough to understand what's on screen and act on it. For example, if a friend texts you their new home address, you can tell Siri to update that contact's address. Thanks to on-screen awareness, it will know which contact you're referring to.

In-app actions

Without a doubt, the coolest new Siri feature with Apple Intelligence is in-app actions, which let Siri carry out your commands inside many apps. The demo shown at WWDC 2024 really brings home the power of this feature, with Siri taking action across a number of apps.

You can also follow up with further requests and Siri will carry them out, saving you from doing all of that yourself. In Apple's demo, the user asks Siri to find photos of a specific person at a specific location, then asks it to edit a photo to make it pop. Tasks like these normally take several steps, but in the demo Siri with Apple Intelligence handles them smoothly, all just by asking.

App Intents API for third party support

And finally, Apple is giving developers access to these new Siri capabilities through its App Intents API. This lets developers expose their own apps' actions to Siri, giving the assistant even more actions to perform, so these features aren't siloed in Apple's native apps.

This should greatly speed up tasks that would otherwise take several steps to accomplish. For example, you can tell Siri to take a long-exposure photo in a third-party camera app like Pro Camera by Moment. Support like this will give Siri more functionality than ever before.