From Doe v. Snap, Inc., filed Monday, following and repeatedly quoting Justice Thomas's separate statement respecting denial of certiorari in Malwarebytes, Inc. v. Enigma Software Grp. USA, LLC (2020):

Jennifer Walker Elrod, Circuit Judge, joined by Smith, Willett, Duncan, Engelhardt, Oldham, and Wilson, Circuit Judges, dissenting from denial of rehearing en banc:

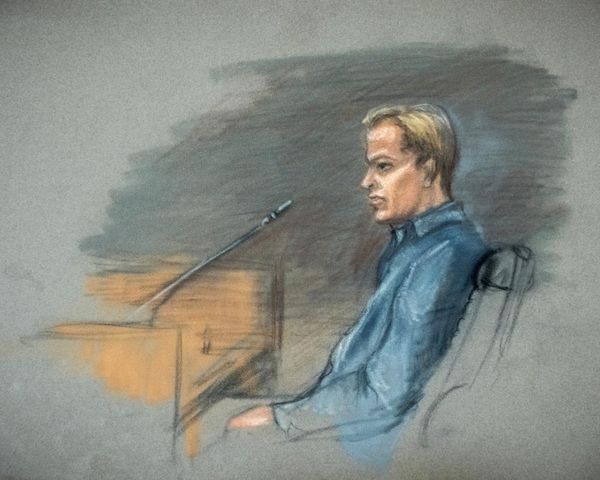

John Doe was sexually abused by his high school teacher when he was 15 years old. {Doe's teacher, Bonnie Guess-Mazock, pleaded guilty to sexual assault.} His teacher used Snapchat to send him sexually explicit material. Doe sought to hold Snap, Inc. (the company that owns Snapchat) accountable for its alleged encouragement of that abuse. Bound by our circuit's atextual interpretation of Section 230 of the Communications Decency Act, the district court and a panel of this court rejected his claims at the motion to dismiss stage.

The en banc court, by a margin of one, voted against revisiting our erroneous interpretation of Section 230, leaving in place sweeping immunity for social media companies that the text cannot possibly bear. That expansive immunity is the result of "[a]dopting the too-common practice of reading extra immunity into statutes where it does not belong" and "rel[ying] on policy and purpose arguments to grant sweeping protection to Internet platforms." Declining to reconsider this atextual immunity was a mistake.

[1.] The analysis must begin with the text. Section 230 states in relevant part that "[n]o provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider." 47 U.S.C. § 230(c)(1). It further prohibits interactive computer services from being held liable simply for restricting access to "material that the provider or user considers to be obscene, lewd, lascivious, filthy, excessively violent, harassing, or otherwise objectionable …" or for providing individual users with the capability to filter such content themselves. 47 U.S.C. § 230(c)(2). In other words, Section 230 closes off one avenue of liability by preventing courts from treating platforms as the "publishers or speakers" of third-party content. {Publishers are traditionally liable for what they publish as if it were their own speech. Distributors are liable for illicit conduct that they had knowledge of.} Sub-sections (c)(1) and (c)(2) say nothing about other avenues to liability such as distributor liability or liability for the platforms' own conduct.

In fact, Section 502 of the Communications Decency Act expressly authorizes distributor liability for knowingly displaying obscene material to minors. This includes displaying content created by a third party. It strains credulity to imagine that Congress would simultaneously impose distributor liability on platforms in one context, and in the same statute immunize them from that very liability.

Without regard for this text and structure, and flirting dangerously with legislative purpose, our court interpreted Section 230 over a decade ago to provide broad-based immunity, including against design defect liability and distributor liability.

"Courts have also departed from the most natural reading of the text by giving Internet companies immunity for their own content." For example, our circuit previously held that Section 230 protects platforms from traditional design defect claims. See Doe v. MySpace, Inc., (5th Cir. 2008) ("[Plaintiffs'] claims are barred by the CDA, notwithstanding their assertion that they only seek to hold MySpace liable for its failure to implement measures that would have prevented Julie Doe from communicating with" her eventual attacker.). This is notably different from the Ninth Circuit's interpretation, which has allowed some design defect claims to pass the motion to dismiss stage. See Lemmon v. Snap, Inc. (9th Cir. 2021) (holding that Snap is not entitled to immunity under Section 230 for claims arising out of the "'predictable consequences of' designing Snapchat in such a way that it allegedly encourages dangerous behavior").

Immunity from design defect claims is neither textually supported nor logical because such claims fundamentally revolve around the platforms' conduct, not third-party conduct. Nowhere in its text does Section 230 provide immunity for the platforms' own conduct. Here, Doe brings a design defect claim. He alleges that Snap should have stronger age-verification requirements to help shield minors from potential predators. He further alleges that because "reporting child molesters is not profitable," Snap "buries its head in the sand and remains silent." Product liability claims do not treat platforms as speakers or publishers of content. "Instead, Doe seeks to hold Snap liable for designing its platform to encourage users to lie about their ages and engage in illegal behavior through the disappearing message feature."

That our interpretation of Section 230 is unmoored from the text is reason enough to reconsider it. But it is unmoored also from the background legal principles against which it was enacted.

Congress did not enact Section 230 in a vacuum. Congress used the statutory terms "publisher" and "speaker" against a legal background that recognized the separate category of "distributors." Just a year prior to the enactment of the Communications Decency Act, for example, a New York state court held an internet message board liable as a publisher of the defamatory comments made by third-party users of the site, declining to treat the platform as a distributor. The distinction is relevant because distributors are only liable for illegal content of which they had or should have had knowledge. Section 230 merely directs courts not to treat platforms as publishers of third-party content….

Congress drafted a statute precluding a particular avenue to liability, while leaving others, such as design defect and distributor liability, untouched. Our court upset that balance, leaving plaintiffs like Doe without recourse for a host of conduct Congress did not include in the text.

[2.] Deviation from statutory text is often justified by some using an appeal to the needs of a changing world. Our jurisprudence on Section 230 perhaps shows why such attempts at judicial policymaking are as futile as they are misguided. For here, our atextual transformation of Section 230 into a blunt instrument conferring near-total immunity has rendered it particularly ill-suited to the realities of the modern internet.

As the internet has exploded, internet service providers have moved from "passive facilitators to active operators." {Large, modern-day internet platforms are more than willing to remove, suppress, flag, amplify, promote, and otherwise curate the content on their sites in order to cultivate specific messages.} They monitor and monetize content, while simultaneously promising to protect young and vulnerable users. For example, Snap itself holds itself out to advertisers as having the capability to target users based on "location demographics, interests, devices … and more!"

Today's "interactive computer services" are no longer the big bulletin boards of the past. They function nothing like a phone line. Rather, they are complex operations offering highly curated content. Section 230 defines information content providers as "any person or entity that is responsible, in whole or in part, for the creation or development of information provided through the Internet or any other interactive computer service." Where platforms take this content curation a step further, so as to become content creation, they cannot be shielded from liability.

Power must be tempered by accountability. But this is not what our circuit's interpretation of Section 230 does. On the one hand, platforms have developed the ability to monitor and control how all of us use the internet, exercising a power reminiscent of an Orwellian nightmare. On the other, they are shielded as mere forums for information, which cannot themselves be held to account for any harms that result. This imbalance is in dire need of correction by returning to the statutory text. Doe alleges that Snap monitors content in order to "prohibit … explicit content." Where such oversight results in knowledge of illegal content, platforms should not be shielded from liability as distributors.

[3.] … [I]t is once again up to our nation's highest court to properly interpret the statutory language enacted by Congress in the Communications Decency Act. "Paring back the sweeping immunity courts have read into § 230 would not necessarily render defendants liable for online misconduct. It simply would give plaintiffs a chance to raise their claims in the first place." Doe's claims have been denied under the Communications Decency Act at the motion to dismiss stage, without even the chance for discovery. Importantly, we have categorically barred not only Doe, but every other plaintiff from litigating their claims against internet platforms. Before granting such powerful immunity, "we should be certain that is what the law demands." I am far from certain.

I'm not sure that this is right, but it struck me as an important opinion, especially given the number of its signatories, and it may increase the chances that the Supreme Court will agree to hear the case.

The post Seven Fifth Circuit Judges Argue for Reading § 230 Narrowly appeared first on Reason.com.