Runway AI, the generative AI company whose video editing tools were critical to making "Everything Everywhere All at Once," has made parts of its toolkit available to the public — so anyone can turn images, text or video clips into 15-second reels.

Why it matters: There's grave and justifiable concern about AI's potential for abuse. But the flip side is that tools like Runway's are unleashing all manner of artistic creativity and giving rise to new AI-generated art forms.

Driving the news: Runway just granted public access to its image-to-video model, Gen-1, and, in a more limited release, to its text-to-video product, Gen-2.

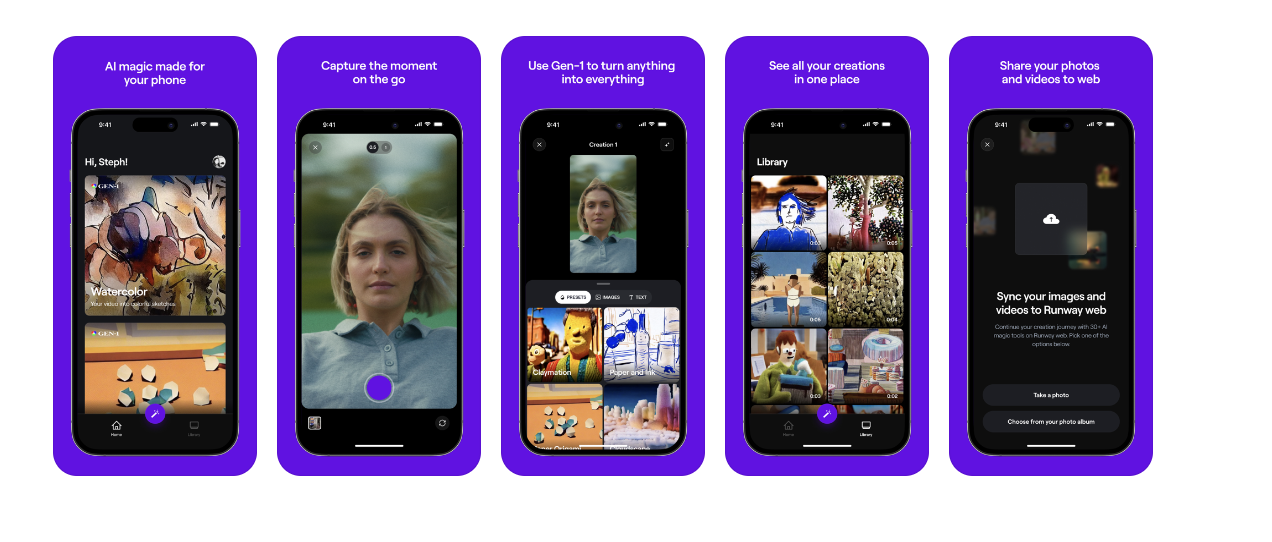

- Gen-1 is available on a mobile app that lets users take or upload a picture or video and instantly generate a short video clip based on it — applying filters like "Claymation" or "Cloudscape."

- Gen-2, which Runway says is the first publicly available text-to-video product, is available now in limited form via chat platform Discord (and will soon be available more widely).

- These fun and highly preliminary features are just a small, public-facing snapshot of the powerful suite of back-end tools that has drawn video professionals to Runway's products like cats to catnip.

Backstory: Runway was a co-creator of Stable Diffusion, a breakthrough text-to-image generator.

- Runway's creative suite has been a loosely kept secret among filmmaking cognoscenti, who use it to save countless hours on editing tasks traditionally done by hand.

- The company's AI Magic Tools let users edit and generate content in dozens of ways — by removing backgrounds from videos, erasing and replacing parts of a photo, or blurring out faces or backgrounds, for example.

What they're saying: Runway's technology is like "having your own Hollywood production studio in your browser," Cristóbal (Cris) Valenzuela, the company's CEO, tells Axios.

- Its tools — and others, like ChatGPT — are "democratizing content creation" and "reducing the cost of creation to almost zero," he said.

Where it stands: Runway's tools have been used to help produce "The Late Show with Stephen Colbert," build sequences for MotorTrend's "Top Gear America," design shoes for New Balance, and make videos for Alicia Keys.

- And they're a growing staple in creating visual effects (VFX) for amateur and professional films.

To foster such work, Runway started up an AI film festival this year that drew hundreds of submissions.

- "I think we're just at the beginning of this new way of storytelling," says Valenzuela, who founded Runway in 2018 after art school at New York University.

- The film festival was a "manifestation of the volume of creativity that's been emerging with all of these new techniques," he says.

Case study: For the Oscar-winning film "Everything Everywhere All at Once," VFX artist Evan Halleck used Runway's Green Screen Background Remover for one of the "moving rocks" scenes.

- He told Variety he wishes he had discovered Runway's tools sooner, as they automate otherwise laborious and time-consuming processes.

- "In the scene where Michelle Yeoh’s Evelyn flips through multiverses in seconds, all 300 shots were handmade," Variety reports.

- Halleck told Variety: "I look back and I wish I had that for when we were working on it, instead of spending weeks working on it and photoshopping aliens."

At "The Late Show with Stephen Colbert" (which has just gone dark because of the Hollywood writers' strike), Runway's editing tools compress "a workflow that used to take them six hours of work into six minutes of work," says Valenzuela.

- In addition to the Green Screen tool, "Colbert" uses Inpainting, which allows film editors to remove (or "paint over") objects in videos.

The big picture: Virtual production and AI are upending cinematography, and DIY tools like Runway's offer an everyman alternative to heavyweights like George Lucas-founded Industrial Light & Magic.

- While Meta and Google have demonstrated text-to-video generative AI projects, they haven't released any public tools.

- Image-generation engines such as DALL-E, Midjourney and Stable Diffusion help users create still artwork — while Runway is focused on video.

Yes, but: The threat level from Hollywood deepfakes and other manipulations made through generative AI is just starting to be understood.

- There are calls in Congress to regulate AI, and an open letter circulating among top technologists urges a pause in development of the technology.

For his part, Valenzuela says it's too early to apply the brakes.

- "I think the key thing that we need to do right now is listen and discuss," he said. "The same set of questions were being asked when Photoshop was invented."

The bottom line: Valenzuela says he's animated by the act of putting Runway's creative tools in the hands of storytellers everywhere.

- "I'm creating a guitar, and I'm giving it to Jimi Hendrix," he said.