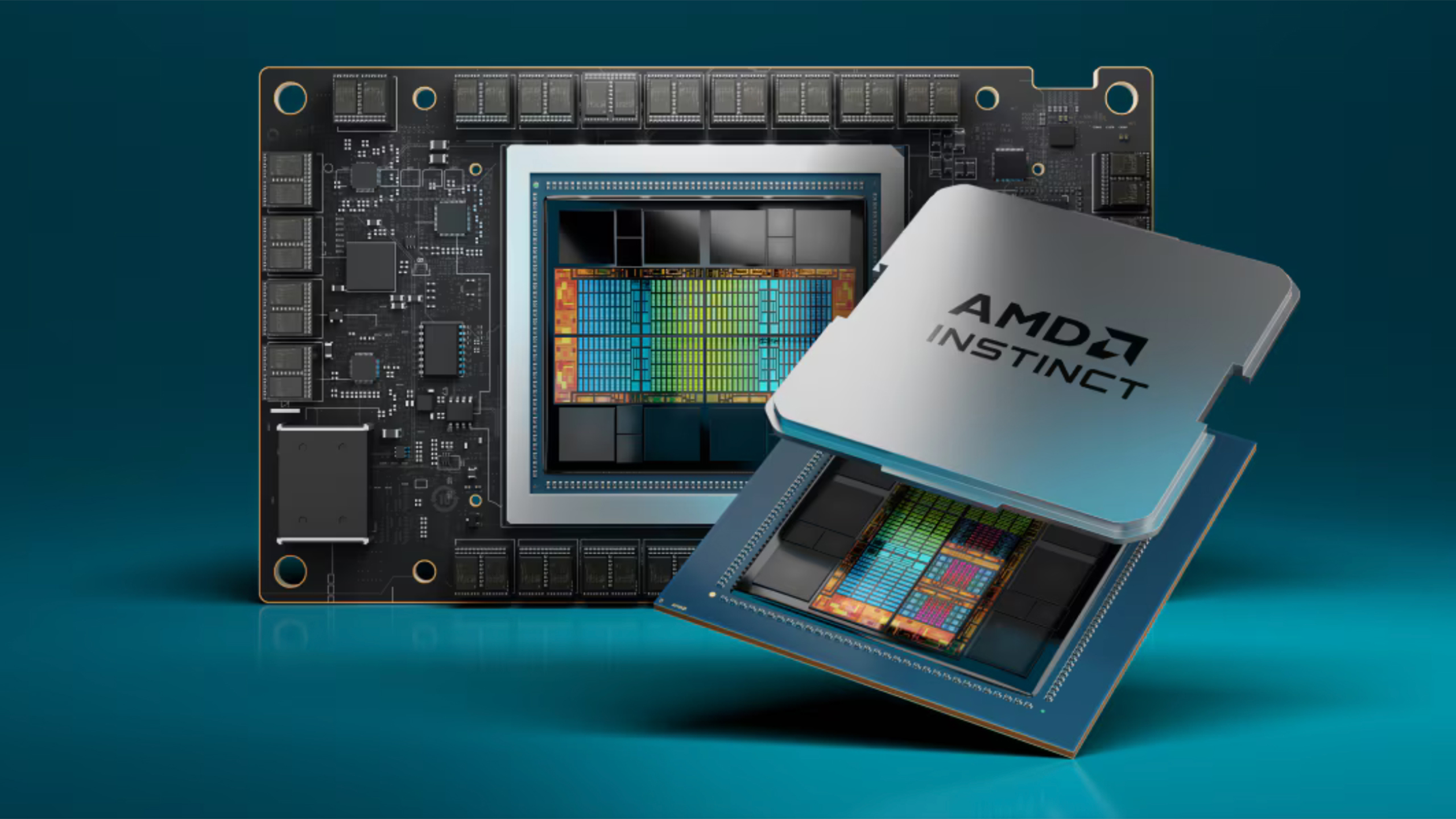

AMD has announced ROCm 6.3, which brings several updates to the ROCm ecosystem. The latest iteration of the open-source stack adds SGLang, FlashAttention-2, and a Fortran compiler, among other features.

SGLang is a new runtime in ROCm 6.3 that purportedly improves latency, throughput, and resource utilization by optimizing "cutting-edge" generative AI models for AMD's in-house Instinct GPUs. AMD claims SGLang achieves up to 6x higher performance on large language model inference. It ships with pre-configured Docker containers that use Python to accelerate AI, multimodal workflows, and scalable cloud backends.

FlashAttention-2 is the next iteration of FlashAttention, which reduces the memory usage and compute demands of Transformer AI models. FlashAttention-2 purportedly delivers up to a 3x speedup over version one in both the forward and backward passes, shortening AI model training time.
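To give a sense of where the memory savings come from, here is a minimal pure-Python sketch of the tiling and "online softmax" idea that the FlashAttention family is built on. It is an illustration only, not AMD's ROCm kernel: the function names `naive_attention` and `tiled_attention` and the `block_size` parameter are hypothetical, and the code handles a single query vector on the CPU. The tiled version walks through the keys and values one block at a time and keeps only a running maximum, a running sum, and an accumulator, so the full row of attention scores never has to be held at once.

```python
import math


def naive_attention(q, keys, values):
    """Reference: softmax(q . K^T / sqrt(d)) . V for one query vector."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    dim_v = len(values[0])
    return [
        sum(w * v[j] for w, v in zip(weights, values)) / total
        for j in range(dim_v)
    ]


def tiled_attention(q, keys, values, block_size=2):
    """Same result, computed block by block with an online softmax.

    Hypothetical illustration: only one block of scores exists at a time.
    """
    scale = 1.0 / math.sqrt(len(q))
    running_max = float("-inf")    # largest score seen so far
    running_sum = 0.0              # softmax denominator, relative to running_max
    acc = [0.0] * len(values[0])   # unnormalized weighted sum of values

    for start in range(0, len(keys), block_size):
        k_block = keys[start:start + block_size]
        v_block = values[start:start + block_size]
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in k_block]

        # Rescale earlier partial results to the new running maximum.
        new_max = max(running_max, max(scores))
        correction = math.exp(running_max - new_max)
        running_sum *= correction
        acc = [a * correction for a in acc]

        # Fold the current block into the running totals.
        for s, v in zip(scores, v_block):
            w = math.exp(s - new_max)
            running_sum += w
            acc = [a + w * x for a, x in zip(acc, v)]
        running_max = new_max

    return [a / running_sum for a in acc]
```

Calling `tiled_attention(q, keys, values, block_size=b)` with any positive `b` should match `naive_attention(q, keys, values)` up to floating-point rounding. Production kernels apply the same rescaling trick to whole tiles of queries in fast on-chip memory.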

AMD has added a Fortran compiler to ROCm 6.3, enabling users to run legacy Fortran-based applications on AMD's modern Instinct GPUs. The compiler features direct GPU offloading through OpenMP for scientific workloads, backward compatibility that lets developers keep writing Fortran code for existing legacy applications, and simplified integration with HIP kernels and ROCm libraries.

Multi-node FFT support enables high-performance distributed fast Fourier transform (FFT) computations in ROCm 6.3. This feature purportedly simplifies multi-node scaling, reducing complexity for developers and allowing workloads to scale across massive datasets.
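For readers unfamiliar with distributed FFTs, the sketch below shows one common way such a computation can be split up, the "slab" decomposition of a 2D transform. It is a single-process simulation in plain Python, not the ROCm API: the functions `fft`, `dft2`, and `fft2_slab` and the `num_nodes` parameter are hypothetical, the "nodes" are just Python lists, and the grid is assumed to be square with a power-of-two side length divisible by `num_nodes`. Each simulated node transforms its own rows, the data is transposed (the step that would be an all-to-all exchange between real nodes), and each node transforms its rows again.

```python
import cmath


def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def transpose(m):
    return [list(row) for row in zip(*m)]


def dft2(grid):
    """Direct O(N^4) 2D DFT, used only as a correctness reference."""
    n, m = len(grid), len(grid[0])
    return [
        [
            sum(
                grid[r][c] * cmath.exp(-2j * cmath.pi * (u * r / n + v * c / m))
                for r in range(n)
                for c in range(m)
            )
            for v in range(m)
        ]
        for u in range(n)
    ]


def fft2_slab(grid, num_nodes):
    """2D FFT via slab decomposition across simulated nodes (hypothetical)."""
    n = len(grid)
    assert n % num_nodes == 0, "rows must divide evenly across nodes"
    per_node = n // num_nodes

    def scatter(rows):
        return [rows[i * per_node:(i + 1) * per_node] for i in range(num_nodes)]

    def gather(slabs):
        return [row for slab in slabs for row in slab]

    # Step 1: each node runs 1D FFTs over the rows it owns.
    slabs = [[fft(row) for row in slab] for slab in scatter(grid)]

    # Step 2: global transpose. On a real cluster this is the all-to-all
    # communication step; here it is simulated with gather + transpose.
    slabs = scatter(transpose(gather(slabs)))

    # Step 3: each node runs 1D FFTs over its new rows (the old columns).
    slabs = [[fft(row) for row in slab] for slab in slabs]

    # Transpose back so the result uses the original row/column layout.
    return transpose(gather(slabs))
```

For a small grid such as 4x4, `fft2_slab(grid, 2)` should agree with `dft2(grid)` up to rounding error. In practice, most of the engineering difficulty in a multi-node FFT lies in the transpose step, since it moves nearly all of the data across the network.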

ROCm 6.3 also enhances the computer vision libraries rocDecode, rocJPEG, and rocAL, adding support for the AV1 codec, GPU-accelerated JPEG decoding, and better audio augmentation.

ROCm is an open-source stack of software and drivers designed to run on AMD Instinct GPUs. The platform aims to enable or improve enterprise GPU-accelerated applications in areas such as high-performance computing (HPC), AI and machine learning, communication, and more.