Critics have accused the European Commission of seeking to end encrypted communications after the EU’s executive body unveiled strict regulations for messaging apps intended to fight the spread of child sexual abuse imagery.

Under the proposed regulations, messaging services and web hosts would be required to search for, and report, child abuse material – even on end-to-end encrypted messaging services such as Apple’s iMessage and Facebook’s WhatsApp, whose messages cannot currently be scanned in this way.

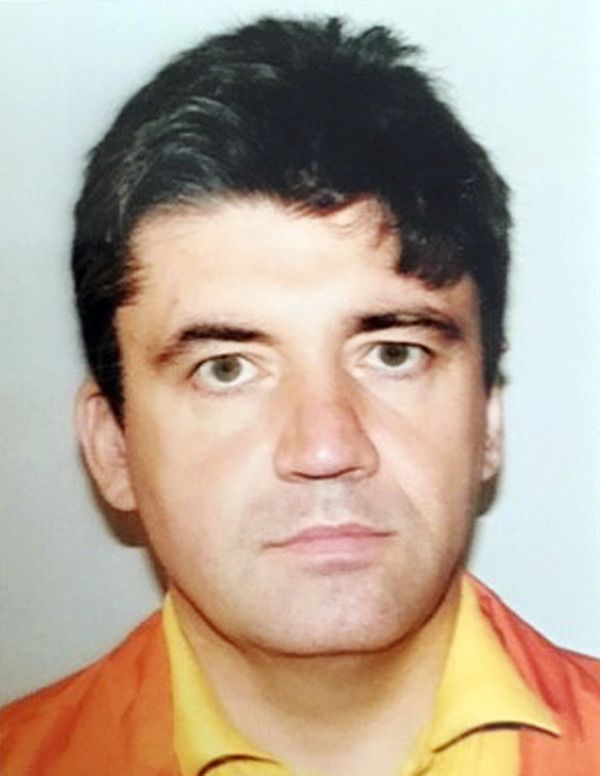

“Detection, reporting and removal of child sexual abuse online is urgently needed to prevent the sharing of images and videos of the sexual abuse of children, which retraumatises the victims often years after the sexual abuse has ended,” said Ylva Johansson, the EU’s commissioner for home affairs, on Thursday.

“Today’s proposal sets clear obligations for companies to detect and report the abuse of children, with strong safeguards guaranteeing privacy of all, including children,” she added.

Andy Burrows, head of child safety online at the National Society for the Prevention of Cruelty to Children (NSPCC), welcomed the proposals, which he described as an “impressively bold and ambitious” attempt to “systemically prevent avoidable child abuse and grooming, which is taking place at record levels”.

He added: “If approved, it will place a clear requirement on platforms to combat abuse wherever it takes place, including in private messaging where children are at greatest risk.”

But the plans have also been criticised as an attack on privacy. “Private companies would be tasked not just with finding and stopping distribution of known child abuse images, but could also be required to take action to prevent ‘grooming’ or suspected future child abuse,” said Joe Mullin of the Electronic Frontier Foundation (EFF), a privacy group.

“This would be a massive new surveillance system, because it would require the infrastructure for detailed analysis of user messages.”

The regulation does not technically require providers to turn off end-to-end encryption entirely. Instead, it allows providers to apply automated techniques to scan for abusive material on users’ devices – a compromise that supporters say preserves privacy while also helping deter child abuse.

It is a similar approach to that proposed – but indefinitely postponed – by Apple, when the company suggested scanning photos before they were uploaded to its cloud storage.

Apple’s approach, however, only scanned photos for examples of existing child abuse imagery. The commission’s proposal also requires the detection of newly created images, and even of active grooming, which is far harder to achieve reliably with existing automated systems without compromising privacy.

It also suggests that app stores, like those run by Apple and Google, should be involved in enforcing the requirements, pulling apps that don’t comply with the scanning obligations.

Patrick Breyer, German MEP for the Pirate party, called the proposals “fundamental rights terrorism against trust, self-determination and security on the internet”.

He added: “The proposed chat control threatens to destroy digital privacy of correspondence and secure encryption.

“Scanning personal cloud storage would result in the mass surveillance of private photos. Mandatory age verification would end anonymous communication.”

Breyer also criticised the proposal for omitting what he called an overdue obligation on law enforcement agencies to report and remove known abusive material online, and for failing to provide Europe-wide standards for effective prevention measures, victim support and counselling, or effective criminal investigations.

“This Big Brother attack on our mobile phones, private messages and photos with the help of error-prone algorithms is a giant step towards a Chinese-style surveillance state,” Breyer said.