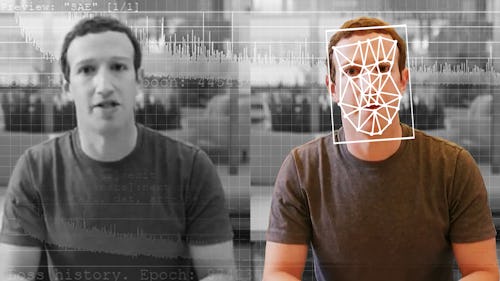

Some of the earliest online trickery, from the mid-'90s through the early aughts, arrived in the form of fraudulent emails meant to dupe users into giving away personal information. As phishing proliferated, spam filters for personal email improved, and scammers had to set their sights on other, larger targets. Now, as technology has advanced and we’ve come to craft identities online, more nuanced gimmicks have emerged with deepfake technology.

Since Gal Gadot’s face was superimposed onto a porn video in late 2017, questions about the darker implications of this sort of tech have arisen: will trust erode in the digital media the public relies on for its daily share of information? Accordingly, it’s been critical to gauge whether people are able to spot a deepfake. A recent study published in PNAS suggests that this ability is... lacking.

One conclusion from the study:

“Synthetically generated faces are more trustworthy than real faces. This may be because synthesized faces tend to look more like average faces which themselves are deemed more trustworthy. Regardless of the underlying reason, synthetically generated faces have emerged on the other side of the uncanny valley.”

What did the study involve?

The project comprised three experiments. Using generative adversarial networks (GANs), which synthesize content by pitting two neural networks against each other, researchers created 400 different faces, representing four ethnic groups (Black, Caucasian, East Asian, and South Asian) with an equal gender split. These synthetic faces were then paired with real faces from the database used to train the GAN.

The first experiment asked 315 participants to judge 128 faces, deciding whether each was real or fake. Results showed an accuracy rate of 48 percent, or in other words, slightly below the odds of calling a coin flip.
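To make the coin-flip comparison concrete, here is a minimal Python sketch of how one might score a single participant and compare the result with 50 percent chance. The function names and the sample responses are hypothetical, invented for illustration; this is not the study's data or its statistical analysis, and the z-score uses a simple normal approximation.

```python
import math

def accuracy(labels, guesses):
    """Fraction of guesses that match the true labels."""
    correct = sum(1 for truth, guess in zip(labels, guesses) if truth == guess)
    return correct / len(labels)

def z_vs_chance(n_correct, n_trials, chance=0.5):
    """Normal-approximation z-score of a hit count against chance guessing."""
    expected = n_trials * chance
    std = math.sqrt(n_trials * chance * (1 - chance))
    return (n_correct - expected) / std

# Hypothetical participant: 128 faces, half real and half synthetic,
# and a participant who simply answers "real" every time.
labels = ["real", "fake"] * 64
guesses = ["real"] * 128

print(accuracy(labels, guesses))   # half the answers match
print(z_vs_chance(61, 128))        # 61 of 128 is roughly 48 percent
```

For one hypothetical participant, 61 correct answers out of 128 sits well within the range expected from guessing. A pooled result across hundreds of participants would need its own analysis.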

The second experiment involved a new group of 219 participants, who this time were given some help before making their judgements. Results improved, but only marginally, to 59 percent.

Finally, the third experiment involved snap judgements: 223 participants were asked to rate the trustworthiness of a set of 128 faces on a scale of 1 (very untrustworthy) to 7 (very trustworthy). In this scenario, fake faces were deemed 8 percent more trustworthy than real ones.

Deepfake technology is most likely here to stay, so it has become more important for companies that seek to incorporate this kind of tech into their products to develop safeguards that reduce the potential negative effects of misuse.

The study ends with a cautionary note imploring institutions and companies to be wary of deepfake use:

“Because it is the democratization of access to this powerful technology that poses the most significant threat, we also encourage reconsideration of the often laissez-faire approach to the public and unrestricted releasing of code for anyone to incorporate into any application.”