A hacker breached OpenAI’s internal messaging systems early last year and stole details about how OpenAI's technologies work from discussions among employees, the New York Times reports. Although the hacker did not access the systems that house the company's key AI technologies, the incident raised significant security concerns within OpenAI. It also raised concerns about U.S. national security.

The breach occurred in an online forum where employees discussed OpenAI's latest technologies. OpenAI's core systems, where the company keeps its training data, algorithms, results, and customer data, were not compromised, but some sensitive information was exposed. In April 2023, OpenAI executives disclosed the incident to employees and the board but chose not to make it public. They reasoned that no customer or partner data had been stolen and that the hacker was likely a private individual with no government ties. Not everyone was happy with that decision.

Leopold Aschenbrenner, a technical program manager at OpenAI, criticized the company's security measures, arguing that they were not strong enough to keep foreign adversaries from accessing sensitive information. He was later dismissed for leaking information, a move he claims was politically motivated. OpenAI maintains that his dismissal was unrelated to the security concerns he raised. The company acknowledged his contributions but disagreed with his assessment of its security practices.

The incident heightened fears that foreign adversaries, particularly China, could gain access to American AI technology. OpenAI, however, believes its current AI technologies do not pose a significant national security threat. Still, it is plausible that leaked technology would help Chinese specialists advance their own AI faster.

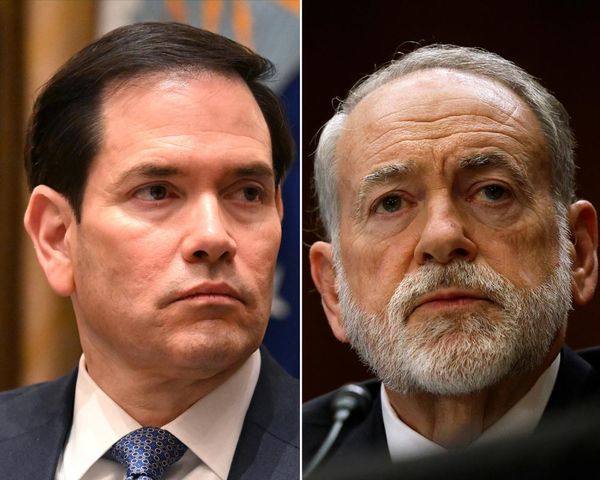

Since the breach, OpenAI, like other AI companies, has been strengthening its security. For example, OpenAI and others have added guardrails to prevent misuse of their AI applications. OpenAI has also established a Safety and Security Committee, which includes former NSA head Paul Nakasone, to address future risks.

Other companies, including Meta, are making their AI designs open source to foster industry-wide improvements. However, this also makes the technologies available to U.S. adversaries such as China. Even so, studies conducted by OpenAI, Anthropic, and others indicate that current AI systems are not much more dangerous than search engines.

Federal and state regulations are being considered to control the release of AI technologies and impose penalties for harmful outcomes. These measures appear largely precautionary, however, since experts believe the most serious risks from AI are still years away.

Meanwhile, Chinese AI researchers are advancing quickly and may eventually surpass their U.S. counterparts. This rapid progress has prompted calls for tighter controls on AI development to mitigate future risks.