Researchers have found that OpenAI's AI-powered transcription tool, Whisper, is inventing things that were never said, with potentially dangerous consequences, according to a new report.

According to the Associated Press (AP), the AI model is inventing text, a behaviour commonly referred to as 'hallucination', in which a model generates output that has no basis in its input. In Whisper's case, that means transcribed text that does not correspond to anything in the audio. US researchers have found that Whisper's mistakes can include racial commentary, violence and fantasised medical treatments.

Whisper is integrated into some versions of ChatGPT and is a built-in offering on Microsoft's and Oracle's cloud computing platforms. Microsoft has stated that the tool is not intended for high-risk use, yet healthcare providers are starting to adopt it to transcribe patient consultations with doctors.

OpenAI claims Whisper has "near human level robustness and accuracy", and Whisper-based tools have reportedly been adopted by over 30,000 clinicians and 40 health systems in the US. However, researchers are warning against this adoption, citing problems found across several studies.

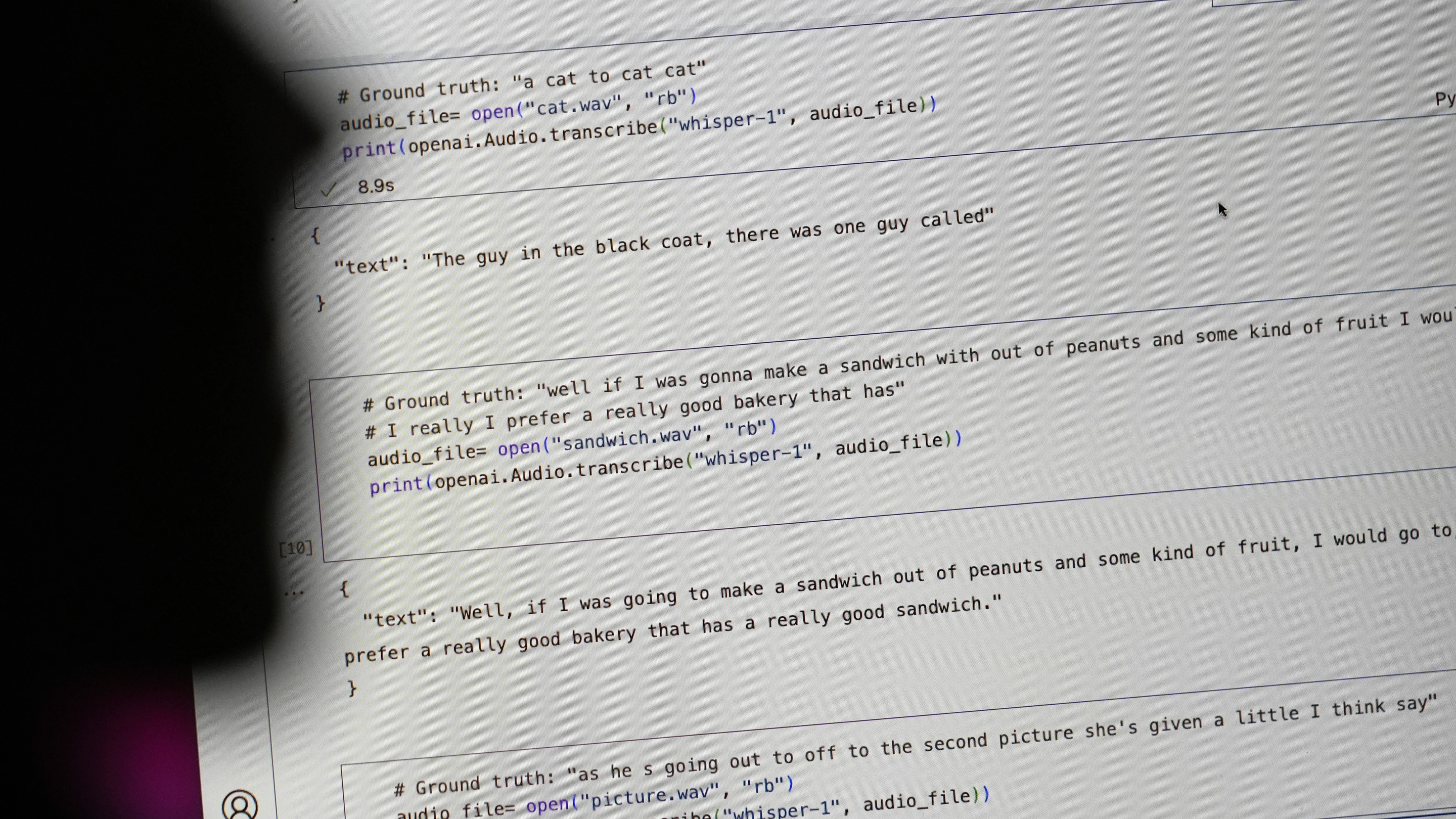

In a study of public meetings, a University of Michigan researcher found hallucinations in eight of every 10 audio transcriptions inspected. Meanwhile, a machine learning engineer found hallucinations in about half of the more than 100 hours of transcriptions he analysed, and another developer found hallucinations in nearly every one of the 26,000 transcripts he created with Whisper.

In the past month, Whisper was downloaded over 4.2 million times from the open-source AI platform Hugging Face, making it the most popular speech recognition model on the site.

Analysing material from TalkBank, a repository hosted at Carnegie Mellon University, researchers determined that 40% of the hallucinations Whisper produced could be harmful because the speaker was "misinterpreted or misrepresented".

In examples cited by AP, one speaker described "two other girls and one lady", and Whisper added invented commentary on race, transcribing the phrase as "two other girls and one lady, um, which were Black". In another example, the tool invented a non-existent medication called "hyperactivated antibiotics".

Mistakes like these could have "really grave consequences", especially in healthcare settings, Princeton-based professor Alondra Nelson told AP, adding that "nobody wants a misdiagnosis".

Some are calling on OpenAI to address the issue. Former OpenAI employee William Saunders told AP that "it's problematic if you put this out there and people are overconfident about what it can do and integrate it into all these other systems".

Hallucinations are a problem across AI tools

While many users expect AI tools to make mistakes or misspell words, Whisper is not the only AI product prone to inventing content outright.

Google's AI Overviews was met with criticism earlier this year when it suggested using non-toxic glue to keep cheese from falling off pizza, citing a sarcastic Reddit comment as a source.

Apple CEO Tim Cook admitted in an interview with The Washington Post that AI hallucinations could be an issue in future products, including the Apple Intelligence suite, saying he was not 100% confident that the tools would not hallucinate.

"I think we have done everything that we know to do, including thinking very deeply about the readiness of the technology in the areas that we’re using it in," Cook said.

Despite this, companies continue to push ahead with developing AI tools and programs, and hallucinations like Whisper's inventions remain a prevalent issue. For its part, OpenAI has recommended against using Whisper in "decision-making contexts, where flaws in accuracy can lead to pronounced flaws in outcomes".