Backprop, a GPU cloud provider for AI processes, recently showcased AI-generated video using an environment based on Open-Sora V1.2. The company showed four examples using different prompts, and the results were generally of average quality. But the hardware requirements for even these relatively tame samples are quite high.

According to the company blog post, “On a 3090, you can generate videos up to 240p and 4s. Higher values than that use more memory than the card has. Generation takes around 30s for a 2s video and around 60s for a 4s video.” That's a decent amount of computational power for only 424x240 output — a four-second video has just under ten million pixels in total.
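
The "just under ten million pixels" figure can be reproduced with a quick back-of-the-envelope calculation. The sketch below assumes a frame rate of 24 frames per second, which the quoted blog post does not state; the function name `total_pixels` is only illustrative.

```python
# Rough pixel count for a 240p, four-second clip.
# Assumption: 24 frames per second (the quoted post does not give a frame rate).
WIDTH, HEIGHT = 424, 240
FPS = 24
DURATION_S = 4


def total_pixels(width, height, fps, duration_s):
    """Return the number of pixels across every frame of the clip."""
    frames = fps * duration_s
    return width * height * frames


pixels = total_pixels(WIDTH, HEIGHT, FPS, DURATION_S)
print(f"{pixels:,} pixels in total")
```

At a different frame rate the total scales linearly, so a 30 fps clip of the same length would land above ten million pixels.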

The Nvidia RTX 3090 was once the most powerful GPU available, and it's still pretty potent today. It comes with 24GB of GDDR6X memory, a critical element for many complex AI workloads. While that's plenty of VRAM for any modern game, matching the newer RTX 4090 on capacity, it's still a limiting factor for Open-Sora video generation. The only way to get more VRAM in a graphics card at present would be to move to professional or data center hardware.

A single Nvidia H100 PCIe GPU can have up to 94GB of HBM2e memory, and the newer SXM-only Nvidia H200 offers up to 141GB of HBM3e. Aside from their massive capacity, which is more than triple that of top consumer GPUs, these data center GPUs also have much higher memory bandwidth, with next-gen HBM3 memory from Micron planned to reach 2 TB/s. The H100 PCIe adapters currently cost in the ballpark of $30,000 retail, though licensed distributors might have them for slightly less money.

You could pick up an Nvidia RTX 6000 Ada Generation with 48GB of memory for a far more reasonable $6,800 direct from Nvidia. That's double the VRAM of any consumer GPU and would likely suffice for up to 512x512 video generation, though still in relatively short clips.

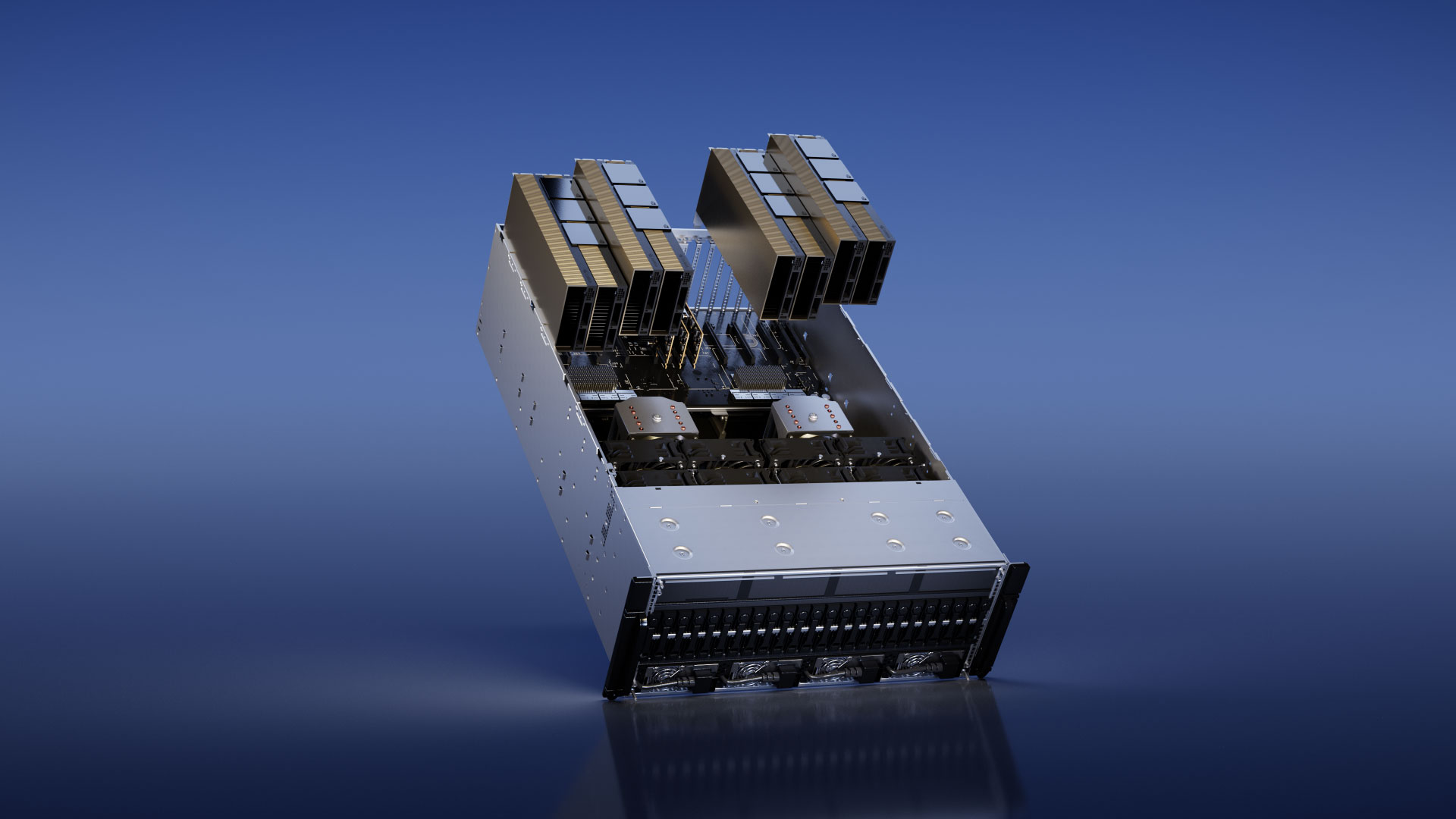

Alternatively, there's the H100 NVL, but the dual-GPU variant is a bit hard to find on its own. Newegg has a Supermicro dual Grace Hopper server for $75,000, though, which would give you 186GB of shared VRAM. Then you could perhaps start making 720p video content.

Obviously, the biggest downside to acquiring any of the above GPUs is price. A single RTX 4090 starts at $1,599, which is a lot of money for most consumers. Professional GPUs cost four times as much, and data center AI GPUs are potentially 20 times as expensive. The H100 does have competition from Intel and AMD, but Intel’s Gaudi is still expected to cost over $15,000, while AMD’s MI300X is priced between $10,000 and $15,000. And then there's the Sohu AI chip that's supposed to be up to 20X faster than an H100, but which isn't actually available yet.

Even if you have the kind of cash needed, you can't just walk into your nearest PC shop to grab most of these GPUs. Larger orders of the H100 have a lead time of two to three months between paying for your order and it arriving on your doorstep. Don't forget about power requirements either. The PCIe variant of the H100 can still draw 350W of power, so if it's generating video 24/7 that adds up to about 3 MWh per year, or roughly $300 per year, which isn't much considering the price of the hardware.
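
For anyone who wants to plug in their own numbers, the power estimate works out as follows. This is a minimal sketch that assumes a constant 350W draw around the clock and an electricity rate of $0.10 per kWh; that rate is our assumption, chosen because it roughly matches the $300 figure, and the helper names are hypothetical.

```python
# Back-of-the-envelope yearly energy use and cost for a GPU running 24/7.
# Assumptions: constant power draw, and a flat electricity rate of $0.10/kWh
# (not stated in the article; chosen because it roughly matches ~$300/year).
HOURS_PER_YEAR = 24 * 365


def annual_energy_kwh(power_watts, hours=HOURS_PER_YEAR):
    """Energy in kWh for a device drawing power_watts for the given hours."""
    return power_watts / 1000 * hours


def annual_cost_usd(power_watts, usd_per_kwh):
    """Yearly electricity cost in dollars at a flat per-kWh rate."""
    return annual_energy_kwh(power_watts) * usd_per_kwh


energy_kwh = annual_energy_kwh(350)
cost = annual_cost_usd(350, 0.10)
print(f"{energy_kwh / 1000:.2f} MWh per year, about ${cost:.0f}")
```

With those inputs the result is about 3.07 MWh and roughly $307 per year. Rates vary widely by region, so doubling the per-kWh price doubles the bill, though it still remains small next to the hardware cost.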

Getting Open-Sora up and running may not be a trivial endeavor either, particularly if you want to run it on non-Nvidia solutions. And, like so many other AI generators, there are loads of copyright and fair use questions left unanswered. But even with the best hardware available, we suspect it will take a fair bit more than AI generators to create any epic movies.