Big tech companies are planning to spend hundreds of billions of dollars over the next two years in their race to lead the next generation of artificial intelligence technologies, in what could be the biggest gamble of shareholder capital since the dawn of the internet three decades ago.

AI-related technologies are expected to drive revenue gains for nearly all the world's biggest tech giants over the coming years as companies look to leverage their massive datasets in order to enhance sales of everything from drive-through dining to the most complicated pharmaceutical testing.

Tapping into those data requires big investments in computing infrastructure, often based in virtual cloud computing environments, which are built and managed by so-called hyperscalers like Alphabet's Google Cloud, Microsoft's (MSFT) Azure and Amazon Web Services (AMZN).

And with AI potential now unlocked by the advances in chip design, speed, energy consumption and costs, these hyperscalers are seeing perhaps the biggest demand surge on record for their essential cloud services.

Building and maintaining those networks, however, comes at a steep cost and requires investors to take a leap of faith, as companies such as Google and Microsoft commit to spending billions while generating only a relatively small amount of revenue from their AI-focused products.

Capital expenditure is the new Big Tech focus

That puts the topic of capital expenditures, or capex, at the heart of the AI investment story and cements the market leadership of chipmaker Nvidia (NVDA) regardless of which tech giant emerges as the hyperscaler of choice.

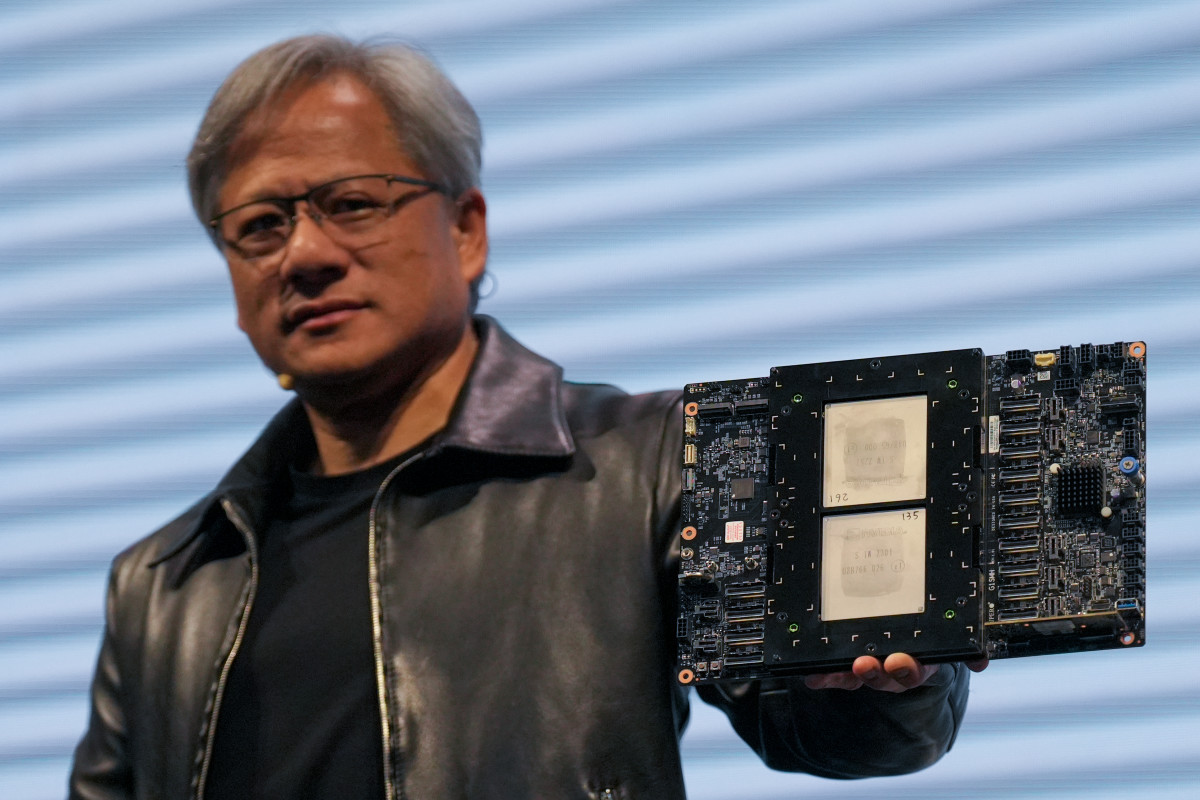

Nvidia CEO Jensen Huang, in fact, sees the data center market growing at $250 billion a year – on top of an installed base he estimates is already valued at $1 trillion – as companies like IBM and Alibaba Cloud mount nascent challenges to their larger rivals.

And much like the men and women who sold pickaxes and sifting pans during the California gold rush, he seems thoroughly agnostic about who will win.

"We sell an entire data center in parts, and so our percentage of that $250 billion per year is likely a lot, lot, lot higher than somebody who sells a chip," Huang told investors during the group's GTC developers' conference in San Jose, Calif., last month.

Nvidia, which currently boasts an estimated 80% share of the market for AI-powering processors, used the GTC event to unveil the latest iteration of its lineup, the Blackwell GPU architecture. The new chips could command a 40% premium to the current range of H100 chips, which go for between $30,000 and $40,000 each.
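As a rough illustration of what that premium would mean per chip, the short Python sketch below applies the 40% figure to the quoted H100 price range. It is back-of-the-envelope arithmetic on the article's numbers, not Nvidia pricing, and the function and variable names are hypothetical.

```python
# Illustrative arithmetic only: inputs are the figures quoted in the article.
H100_PRICE_RANGE_USD = (30_000, 40_000)  # quoted per-chip range for the H100
BLACKWELL_PREMIUM_PCT = 40               # "could command a 40% premium"


def apply_premium(price_usd: int, premium_pct: int) -> int:
    """Return the price after adding a percentage premium, in whole dollars."""
    return price_usd * (100 + premium_pct) // 100


# Apply the premium to both ends of the quoted range.
blackwell_low, blackwell_high = (
    apply_premium(price, BLACKWELL_PREMIUM_PCT) for price in H100_PRICE_RANGE_USD
)

print(f"Implied Blackwell range: ${blackwell_low:,} to ${blackwell_high:,}")
```

On those assumptions, a Blackwell chip would land somewhere between $42,000 and $56,000.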

That level of cost goes a long way toward explaining the massive amounts of capital spending unveiled by Microsoft and Alphabet, two of Nvidia's biggest customers, earlier this week as well as the spending plans outlined by Meta Platforms' (META) Mark Zuckerberg and Tesla's (TSLA) Elon Musk.

Alphabet (GOOG) CEO Sundar Pichai hinted at capex levels of around $50 billion this year and $57 billion in 2025.

Winning the AI race will cost billions

"We are committed to making the investments required to keep us at the leading edge in technical infrastructure," Pichai told investors on April 25. "You can see that from the increases in our capital expenditures. This will fuel growth in Cloud and help us push the frontiers of AI models."

Google's first-quarter capex was pegged at $12 billion, nearly double the tally from a year earlier, driven "overwhelmingly by investment in our technical infrastructure with the largest component for servers followed by data centers."

Microsoft, meanwhile, is planning a capex tally of around $50 billion for its coming financial year, which begins in July, after fiscal third-quarter spending surged nearly 80% to $14 billion.

"We have been doing what is essentially capital allocation to be a leader in AI for multiple years now, and we plan to keep taking that forward," CEO Satya Nadella told investors on April 25.

Amazon CFO Brian Olsavsky told investors on the group's most recent earnings call that while it's still working through spending plans for the year, "we do expect capex to rise as we add capacity in AWS for region expansions, but primarily the work we're doing with generative-AI projects."

Nvidia GPUs are central to AI ambitions

Meta Platforms, which has committed to buying around 350,000 of Nvidia's H100 graphics chips, forecast its 2024 capex bill at between $35 billion and $40 billion, while Tesla CEO Elon Musk told investors he's likely to deploy around 85,000 of them this year as part of his new pivot toward AI and autonomous driving.
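To put those chip counts in dollar terms, the sketch below multiplies each company's planned H100 volume by the $30,000 to $40,000 per-chip range cited earlier in the article. It assumes, purely for illustration, that every chip is bought at the quoted price with no volume discount; actual contract terms are not disclosed in the article, and the names used here are hypothetical.

```python
# Illustrative arithmetic only: unit counts and prices are the article's figures.
H100_PRICE_RANGE_USD = (30_000, 40_000)                   # quoted per-chip range
PLANNED_H100_UNITS = {"Meta": 350_000, "Tesla": 85_000}   # approximate unit counts


def chip_outlay_range(units: int, price_range: tuple[int, int]) -> tuple[int, int]:
    """Return the (low, high) spend in dollars for a given number of chips."""
    low_price, high_price = price_range
    return units * low_price, units * high_price


# Assumes list-price purchases with no discounts, which is a simplification.
outlays = {
    company: chip_outlay_range(units, H100_PRICE_RANGE_USD)
    for company, units in PLANNED_H100_UNITS.items()
}

for company, (low, high) in outlays.items():
    print(f"{company}: ${low / 1e9:.2f}B to ${high / 1e9:.2f}B")
```

Under that simplification, Meta's H100 commitment alone would come to roughly $10.5 billion to $14 billion, a sizeable slice of its forecast capex bill.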

"For Tech, AI capex is as important as the AI contribution in our view, and 2024 marks the first of a multi-year investment cycle," a BofA analyst said in a recent client note. "Hyperscalers' capex outlook confirmed this thesis (and) GDP data corroborates that we are now into the second quarter of the Tech spend cycle."

Nvidia is slated to report its first-quarter earnings on May 22, and early indications suggest an overall revenue tally of $24.5 billion, the vast majority powered by data center sales.

While that's a staggering 245% gain from the year-earlier period, it could be tweaked even higher now that its biggest customers have outlined some of their spending plans.
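The year-earlier revenue figure is not stated here, but it can be backed out from the two numbers that are. The sketch below does that arithmetic; the result is an implied estimate derived from the article's rounded figures, not a reported number, and the function name is hypothetical.

```python
# Illustrative arithmetic only: both inputs are the article's rounded figures.
EXPECTED_REVENUE_BN = 24.5       # forecast first-quarter revenue, in $ billions
YEAR_OVER_YEAR_GAIN_PCT = 245    # quoted gain from the year-earlier period


def implied_prior_revenue(current_bn: float, gain_pct: float) -> float:
    """Back out the earlier figure: current = prior * (1 + gain / 100)."""
    return current_bn / (1 + gain_pct / 100)


prior_bn = implied_prior_revenue(EXPECTED_REVENUE_BN, YEAR_OVER_YEAR_GAIN_PCT)
print(f"Implied year-earlier revenue: about ${prior_bn:.1f} billion")
```

A 245% gain means revenue is 3.45 times the earlier level, which implies a year-earlier quarter of a little over $7 billion.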

"Many of our customers that we have already spoken with talked about the designs, talked about the specs, have provided us their demand desires," Nvidia CFO Colette Kress told investors at the group's GTC conference last month. "And that has been very helpful for us to begin our supply chain work, to begin our volumes and what we're going to do."

However, in much the same way as Microsoft and Alphabet have told investors that data center demand has far outpaced their ability to supply it, Nvidia also faces capacity constraints.

Chip-on-wafer-on-substrate packaging, often called CoWoS, is a crucial component of AI chipmaking, and limited capacity for it has been weighing on Nvidia deliveries for much of the past year.

Taiwan Semiconductor (TSM), the world's biggest contract chipmaker, has struggled to meet the surge in demand for its high-end stacking and packaging technology and has warned investors "that condition will continue probably to next year."

AMD has a firm grip on second place

"It's very true though that on the onset of the very first one coming to market, there might be constraints until we can meet some of the demand that's put in front of us," Kress said during her call with analysts last month.

That could provide a boost for Advanced Micro Devices (AMD), the only real rival to Nvidia's global market dominance, and its newly minted MI300X chip.

Earlier this year, AMD said the new chip could generate around $3.5 billion in sales over the coming year as the group leverages its new launch against Nvidia's ability to meet the global surge in demand.

AMD will post its own first-quarter earnings after the close of trading on April 30, but analysts see sales rising only around 4%, to $5.5 billion, as demand in the global PC market, a key revenue driver, remains muted.

Nvidia shares closed at $877.35 each on April 26, after rising 6.18% on the session and extending their year-to-date gain to around 225%. That puts the chipmaker's market value at $2.19 trillion, making it the third-largest company in the world by that measure.
