Last year Nvidia CEO Jensen Huang said AI was about to have its "iPhone moment", and this year that prediction is coming true. Things stepped up a gear at the recent Nvidia GTC conference in San Jose, where the tech giant revealed its new Blackwell series of AI 'superchips', which can be used to train even more powerful versions of AI models such as GPT, Claude and Gemini.

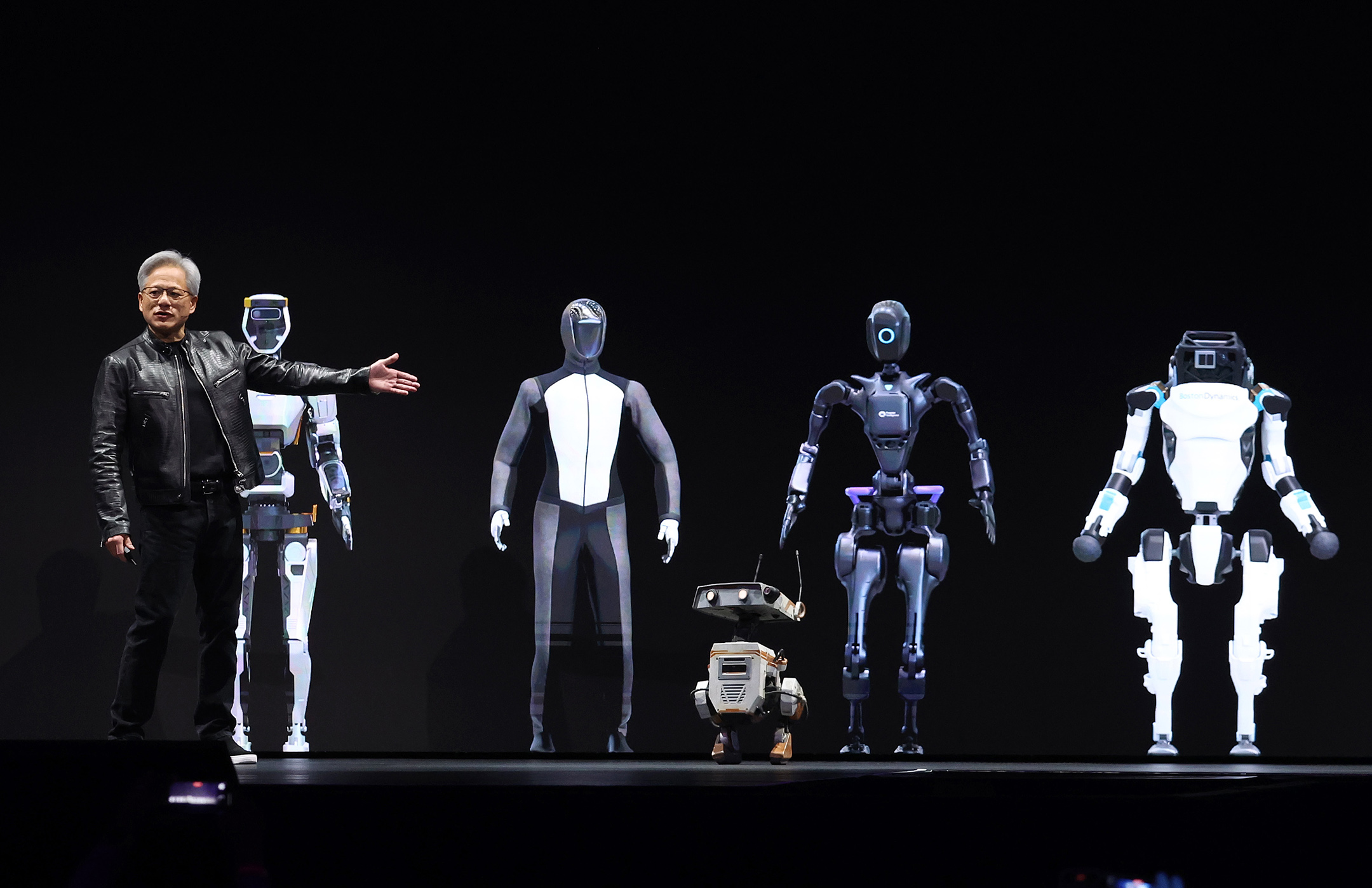

Oh, and these superchips can be used to run humanoid robots - has Nvidia founder and CEO Jensen Huang not seen The Terminator? But if robots dream of electric sheep, Jensen dreams of dollars - and wild, powerful new tech, of course. It's been revealed the Blackwell chips have cost Nvidia over $10 billion to research and develop, and each of its higher-spec GB200 GPUs - the superchips - will cost between $40,000 and $50,000.

Aside from running an advanced robotics AI - codenamed Project GR00T (yes, after that Marvel character) - who is this new Nvidia GPU really for? Ultimately it's aimed at industry and at powering large-scale AI models. One demo showed how Nvidia has remade the entire planet Earth as a digital double, with Blackwell GPUs used to predict weather in real time. But versions of the Blackwell chips will likely appear in all our tech as well, giving another boost to the development of generative AI.

Take game development as one example. We will see a greater proliferation of AI tech to improve graphics, create more realistic simulations, improve animation and change the way gamers interact with NPCs. On that last point, we already saw Nvidia's NPC AI tech last year, and now Ubisoft has shared its own NEO NPCs for more realistic in-game conversations.

Despite the many problems with generative AI, such as copyright theft, many artists and devs are embracing aspects of the technology. For example, we spoke to a number of leading indie game devs who revealed they are all using aspects of AI to improve their work and enable greater creativity - but, unsurprisingly, they all draw the line at using AI image generators like Midjourney.

Behind the noise surrounding the reveal of the Blackwell chips is one clear aim - to reduce energy costs. As explained at Nvidia GTC, the current H100 AI chip, which is used to train AI models, needs to be used in bulk: training GPT-4 takes around 8,000 chips, which draw around 15 megawatts of power - the equivalent of running 30,000 homes.

Now that same processing can be done with 2,000 Blackwell 'B200' GPUs, meaning either energy use can be reduced or the same budget can be put into running even more powerful AI models.

The real coup at GTC came when Nvidia revealed the peak of its Blackwell chip roster, the GB200 superchip. This features two Blackwell B200 GPUs on one board with its own Nvidia Grace CPU, which promises not only to offer more power but also to use less energy.

So while the Blackwell superchip isn't really for all of us right now, it will affect every one of us in some way in the future. Right now the list of partners lining up to make use of the new Blackwell chips includes Pixar, Samsung and Microsoft alongside the obvious one, OpenAI.

Whether that's in variants coming to the best gaming PCs and the best games consoles, or in new ways to create 3D art and animation, Nvidia's GPUs and its improvements to AI models will be felt very soon. If in doubt, just take a look at the best Nvidia graphics cards available now.