Nvidia is deploying its GH200 chips in European supercomputers, and, as HPCwire reports, researchers are already publishing the first performance benchmarks from these systems, with strong early results.

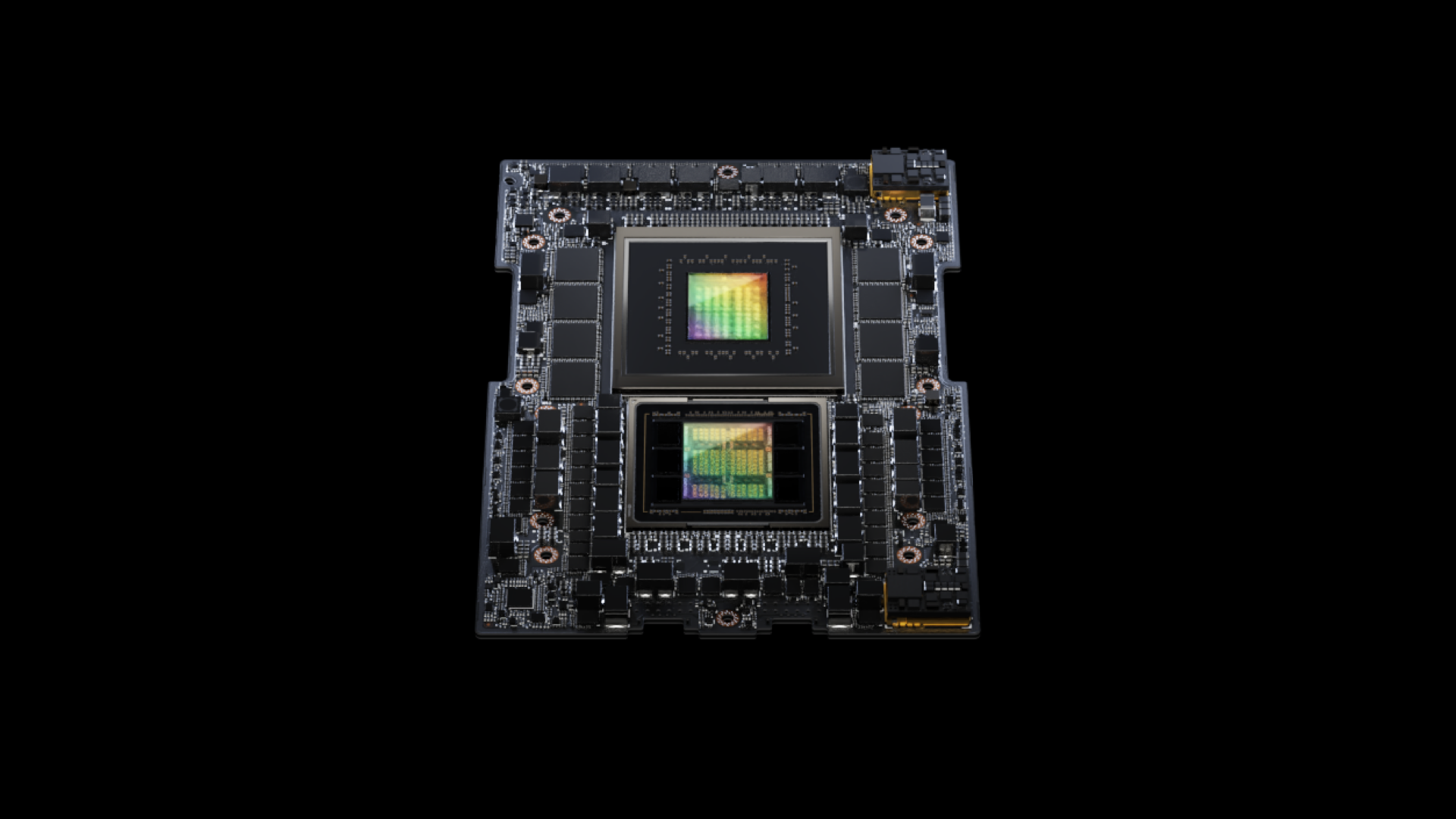

In a paper titled "Understanding Data Movement in Tightly Coupled Heterogeneous Systems: A Case Study with the Grace Hopper Superchip," published on the arXiv preprint server, researchers tested the GH200, which integrates both a CPU and a GPU, to measure its speed and its benefits for AI and scientific applications.

The Alps system at the Swiss National Supercomputing Centre, which is still being upgraded, was one of the testbeds for these benchmarks. Researchers found the GH200 performed strongly in AI tasks, especially when using its unified memory pool for applications with a large memory footprint. Each quad-GH200 node in the test setup had 288 CPU cores, four Hopper GPUs, and a total of 896GB of memory, made up of 96GB of HBM3 and 128GB of LPDDR5 per superchip.
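As a quick sanity check on the node figures quoted above, the per-node totals can be rebuilt from the per-superchip numbers. This is a minimal sketch in Python; the constant and function names are illustrative and do not come from the paper.

```python
# Figures as quoted in the article; all names here are illustrative assumptions.
SUPERCHIPS_PER_NODE = 4      # "quad GH200 node"
HBM3_GB_PER_SUPERCHIP = 96
LPDDR5_GB_PER_SUPERCHIP = 128
CPU_CORES_PER_NODE = 288


def node_memory_gb(superchips=SUPERCHIPS_PER_NODE,
                   hbm_gb=HBM3_GB_PER_SUPERCHIP,
                   lpddr_gb=LPDDR5_GB_PER_SUPERCHIP):
    """Total memory per node: each superchip contributes HBM3 plus LPDDR5."""
    return superchips * (hbm_gb + lpddr_gb)


def cores_per_superchip(cores=CPU_CORES_PER_NODE,
                        superchips=SUPERCHIPS_PER_NODE):
    """CPU cores per superchip, assuming cores are split evenly across the node."""
    return cores // superchips


if __name__ == "__main__":
    print(node_memory_gb())       # 4 * (96 + 128) = 896
    print(cores_per_superchip())  # 288 // 4 = 72
```

The totals match the article: 896GB per node, which works out to 72 CPU cores and 224GB of combined memory per superchip.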

Climate change modeling

In terms of raw power, the GH200 achieved 612 teraflops in certain AI tasks when data was held in HBM3 memory, far ahead of the 59.2 teraflops it managed with the same data in DDR memory. In inference tests on Llama-2, the GH200 was up to four times faster with HBM3 memory than with DDR.
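To put the two throughput figures side by side, the gap can be expressed as a simple ratio. The sketch below uses only the numbers quoted above; the function name is a hypothetical helper, not something from the paper.

```python
# Throughput figures as quoted in the article, in teraflops.
HBM3_TFLOPS = 612.0
DDR_TFLOPS = 59.2


def speedup(fast, slow):
    """Return how many times larger `fast` is than `slow`."""
    if slow <= 0:
        raise ValueError("baseline throughput must be positive")
    return fast / slow


if __name__ == "__main__":
    ratio = speedup(HBM3_TFLOPS, DDR_TFLOPS)
    print(f"HBM3 vs DDR: {ratio:.1f}x")
```

On these numbers the HBM3 result is roughly ten times the DDR result, a wider gap than the up-to-fourfold difference reported for Llama-2 inference.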

Another paper spotted by HPCwire on the arXiv server, Boosting Earth System Model Outputs and Saving PetaBytes in their Storage Using Exascale Climate Emulators, compared GH200 clusters with AMD’s MI250X and Nvidia’s A100 and V100 chips in supercomputers like Frontier, Leonardo, and Summit. The GH200 showed impressive results for climate simulations, a notoriously data-heavy workload, with the Alps system topping out at 384.2 million petaflops.

The research highlights the GH200’s ability to handle mixed workloads that combine AI and scientific simulations, while outperforming older chips. These first studies point to Nvidia's GH200 as a significant step forward for high-performance computing. It will be interesting to see how the technology evolves as more systems integrate Nvidia's superchips and additional benchmarks emerge.