Nvidia CEO Jensen Huang said in an interview with CNBC that Nvidia plans to sell its Blackwell GPU for AI and HPC workloads for $30,000 to $40,000. That figure is approximate, however, since Nvidia prefers to sell a whole stack of datacenter building blocks rather than the accelerator alone. Meanwhile, a Raymond James analyst believes it costs around $6,000 to build one B200 accelerator (via @firstadopter).

The performance of Nvidia's Blackwell-based B200 accelerator with 192 GB of HBM3E memory certainly impresses, but those numbers are enabled by a dual-chiplet design that packs as many as 208 billion transistors in total (104 billion per die). Nvidia's dual-die GB200 solution with 192 GB of HBM3E will cost significantly more than a single-die GH100 processor with 80 GB of memory. The Raymond James analyst estimates that each H100 costs around $3,100, whereas each B200 should cost around $6,000.
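To put the estimates quoted above side by side, here is a minimal sketch of the arithmetic. It uses only the figures cited in this article (the analyst's cost estimates and Huang's approximate price range); the variable and function names are ours, and the results say nothing about Nvidia's actual margins, since the cost figures are estimates.

```python
# Figures quoted in the article, in USD. All are estimates or approximate
# prices, not confirmed numbers.
B200_EST_COST = 6_000              # analyst's estimated cost of one B200
H100_EST_COST = 3_100              # analyst's estimated cost of one H100
B200_PRICE_RANGE = (30_000, 40_000)  # approximate price range cited by Huang


def price_to_cost_multiple(price: float, cost: float) -> float:
    """Return how many times the estimated cost fits into the price."""
    return price / cost


# How much more a B200 is estimated to cost than an H100.
cost_ratio = B200_EST_COST / H100_EST_COST

# Price-to-cost multiple at the low and high end of the quoted range.
low_multiple, high_multiple = (
    price_to_cost_multiple(price, B200_EST_COST) for price in B200_PRICE_RANGE
)

print(f"B200 vs. H100 estimated cost: {cost_ratio:.2f}x")
print(f"B200 price vs. estimated cost: {low_multiple:.1f}x to {high_multiple:.1f}x")
```

On these numbers, the B200 is estimated to cost roughly twice as much as an H100, while the quoted price range is about five to nearly seven times the estimated cost.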

Development of GB200 was also quite an endeavor, and Nvidia's spending on its modern GPU architectures and designs tops $10 billion, according to the company's chief executive.

Nvidia's partners sold the H100 for $30,000 to $40,000 last year, when demand for these accelerators was at its peak and supply was constrained by TSMC's advanced packaging capacity.

It should also be noted that Nvidia's B200 (assuming Huang was referring to the B200) is a dual-die solution, whereas Nvidia's H100 is a single-die solution. To that end, it makes sense to compare the price of the B200 to that of the H100 NVL, a dual-card product aimed at training large language models. However, the H100 NVL is not exactly sold at retail, which makes analyzing Nvidia's costs much more complicated.

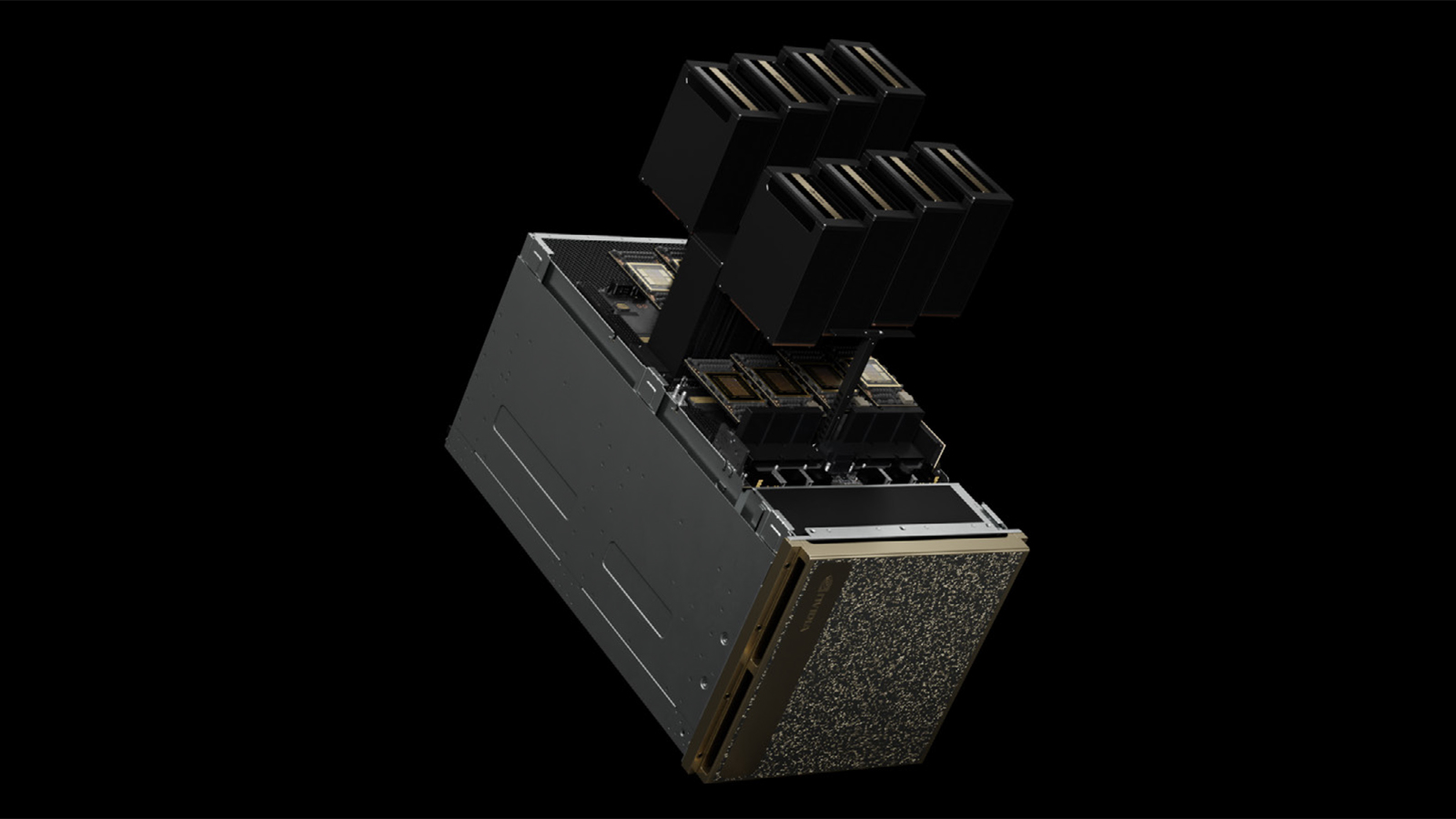

Another thing to consider about Nvidia's B200 is that the company may not really be inclined to sell B200 modules or cards. It may be much more inclined to sell DGX B200 servers with eight Blackwell GPUs or even DGX B200 SuperPODs with 576 B200 GPUs inside for millions of dollars each.

Indeed, Huang stressed that the company would rather sell supercomputers or DGX B200 SuperPODs with plenty of hardware and software that command premium prices. Accordingly, the company does not list B200 cards or modules on its website, only DGX B200 systems and DGX B200 SuperPODs. That said, take the information about the pricing of Nvidia's B200 GPU with a grain of salt despite the incredibly well-connected source.