Nvidia said that its Grace Hopper GH200 superchip, which pairs the company's own Arm-based CPU with a Hopper-based GPU for artificial intelligence (AI) and high-performance computing (HPC), powers Jupiter, Europe's first ExaFLOPS supercomputer, hosted at the Forschungszentrum Jülich facility in Germany. The machine can be used for both simulations and AI workloads, which sets it apart from the vast majority of supercomputers installed today. In addition, Nvidia said that its GH200 powers 40 supercomputers worldwide and that their combined performance is around 200 'AI' ExaFLOPS.

The Jupiter supercomputer is powered by nearly 24,000 GH200 chips with 2.3 TB of HBM3E memory in total, interconnected using Quantum-2 InfiniBand networking and cooled using Eviden's BullSequana XH3000 liquid-cooling technology. The machine offers 1 ExaFLOPS of FP64 performance for simulations, including climate and weather modeling, material science, drug discovery, industrial engineering, and quantum computing. In addition, the machine can provide a whopping 90 ExaFLOPS of AI performance for training large language models and similar workloads. All of this will be supported by Nvidia's software solutions like Earth-2, BioNeMo, Clara, cuQuantum, Modulus, and Omniverse.

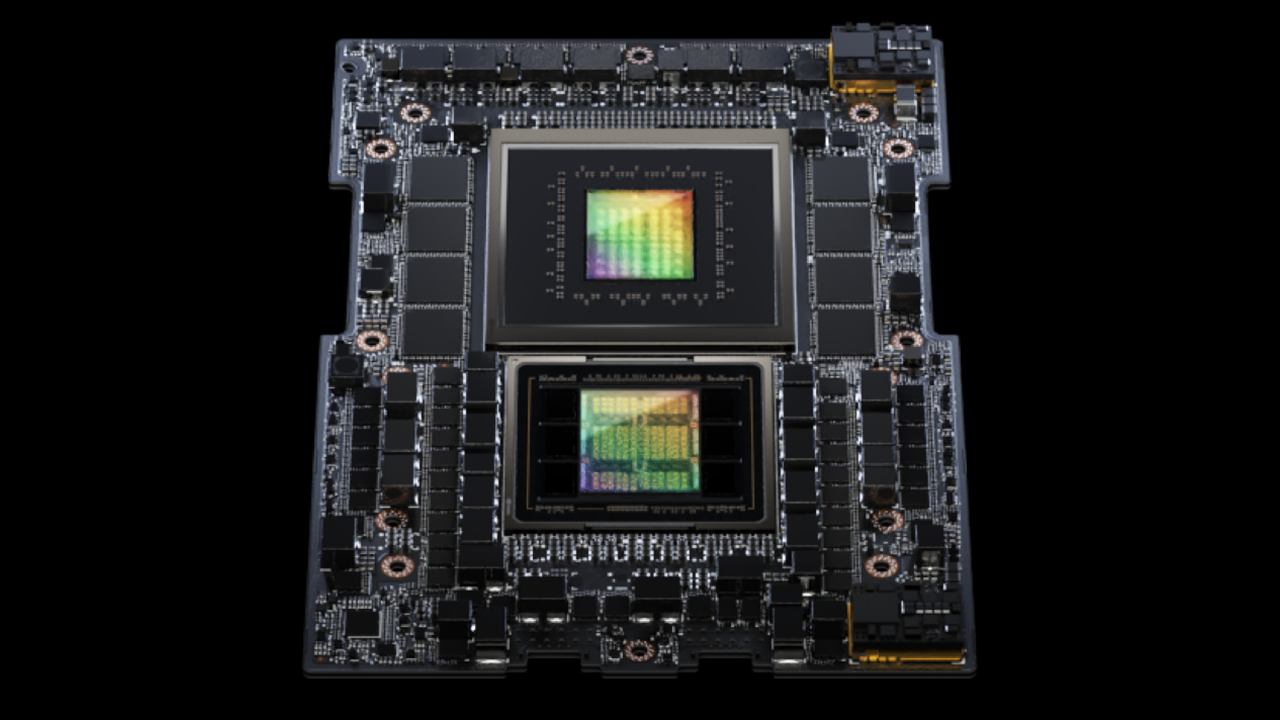

Each node within the Jupiter supercomputer is a powerhouse by itself, featuring 288 Arm Neoverse cores and four H200 GPUs for AI and HPC workloads, and is capable of achieving a remarkable 16 PetaFLOPS of AI performance. The GH200 superchips are interconnected using Nvidia's NVLink connection, though Nvidia has not disclosed the total bandwidth of this interconnection.
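As a rough sanity check, the per-node figures can be compared with the system-wide ones. The Python sketch below is only back-of-the-envelope arithmetic on the numbers quoted in this article. It assumes that each node holds four GH200 superchips (one GPU per superchip) and it rounds "nearly 24,000" up to 24,000, so the result is an upper bound rather than an official figure. The function and constant names are made up for illustration.

```python
# Figures quoted in the article.
TOTAL_SUPERCHIPS = 24_000   # "nearly 24,000", so treat this as an upper bound
NODE_AI_PFLOPS = 16         # AI performance per node, in PetaFLOPS
NODE_ARM_CORES = 288        # Arm Neoverse cores per node

# Assumption (not stated outright in the article): four GH200 superchips
# per node, matching the four GPUs per node mentioned above.
SUPERCHIPS_PER_NODE = 4


def estimate(total_superchips=TOTAL_SUPERCHIPS):
    """Return (node count, aggregate AI ExaFLOPS, Arm cores per superchip)."""
    nodes = total_superchips // SUPERCHIPS_PER_NODE
    ai_exaflops = nodes * NODE_AI_PFLOPS / 1000  # 1 ExaFLOPS = 1,000 PetaFLOPS
    cores_per_superchip = NODE_ARM_CORES // SUPERCHIPS_PER_NODE
    return nodes, ai_exaflops, cores_per_superchip


if __name__ == "__main__":
    nodes, ai_exaflops, cores = estimate()
    print(f"nodes: {nodes}")
    print(f"aggregate AI performance: {ai_exaflops} ExaFLOPS")
    print(f"Arm cores per superchip: {cores}")
```

Under these assumptions, the estimate comes to about 6,000 nodes and 96 AI ExaFLOPS, which is in the same range as the 90 ExaFLOPS quoted for the whole machine given that the real chip count is somewhat below 24,000.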

"At the heart of Jupiter is NVIDIA’s accelerated computing platform, making it a groundbreaking system that will revolutionize scientific research," said Thomas Lippert, director of the Jülich Supercomputing Centre. "Jupiter combines exascale AI and exascale HPC with the world’s best AI software ecosystem to boost the training of foundational models to new heights."

While Jupiter is a remarkable system (it is, after all, Europe's first ExaFLOPS machine), it is not the only GH200-based supercomputer. The University of Bristol, in particular, is building a supercomputer with more than 5,000 GH200 superchips with 141GB of HBM3E, aiming to be the most powerful in the UK. Nvidia's Grace Hopper GH200 is going to be used in more than 40 supercomputers around the world built by companies like Dell, HPE, and Lenovo. The combined performance of these systems will be about 200 AI ExaFLOPS.

HPE, for example, will use Nvidia's Grace Hopper GH200 superchip in its HPE Cray EX2500 supercomputers. These machines can scale to thousands of GH200 chips, which means faster and more efficient AI model training.

Nvidia's GH200 will also be available from ASRock Rack, Asus, Gigabyte, and Ingrasys by the end of the year. Nvidia also plans to offer free access to the GH200 through its LaunchPad program, making this powerful tech available to more people.