Nvidia on Tuesday unleashed a revamped version of its next-generation Grace Hopper Superchip platform with HBM3e memory for artificial intelligence and high-performance computing. The new version of the GH200 Grace Hopper features the same Grace CPU and GH100 Hopper compute GPU but comes with HBM3e memory boasting higher capacity and bandwidth.

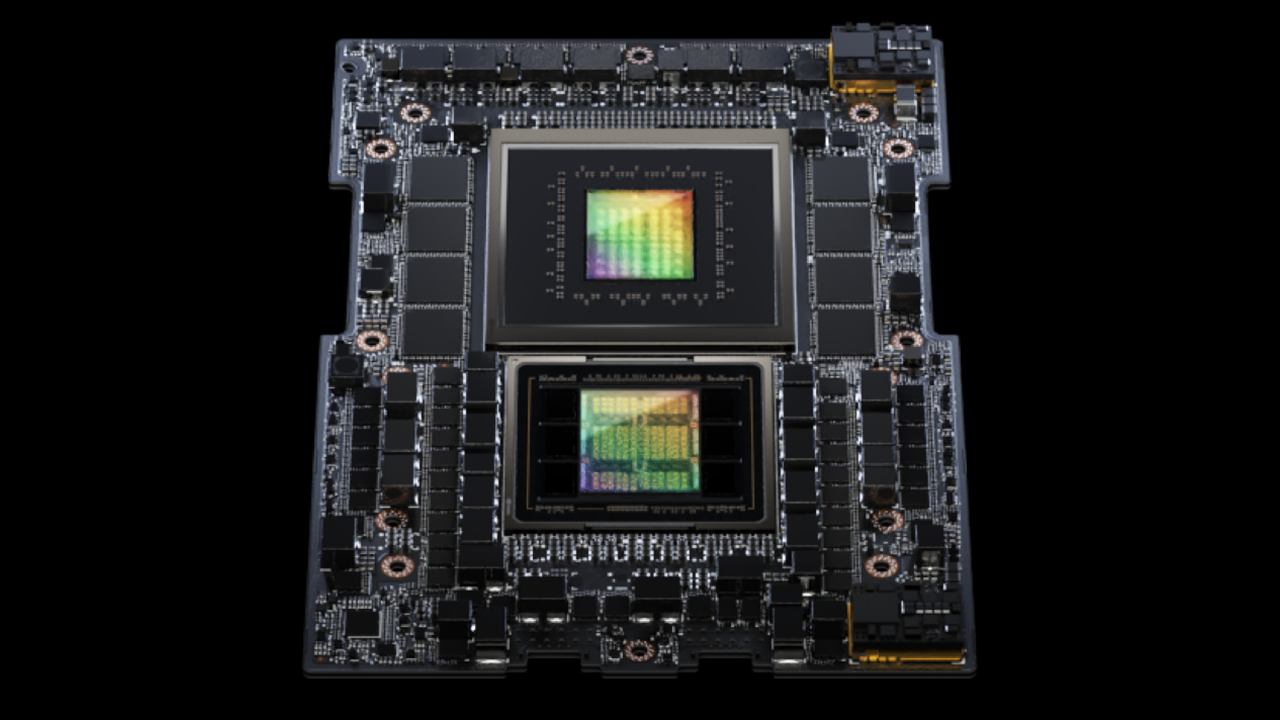

The new GH200 Grace Hopper Superchip pairs the 72-core Grace CPU, outfitted with 480 GB of ECC LPDDR5X memory, with the GH100 compute GPU, which carries 141 GB of HBM3e memory in six 24 GB stacks on a 6,144-bit memory interface. Although Nvidia physically installs 144 GB of memory, only 141 GB is accessible, a measure intended to improve yields.
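The memory figures above follow from simple arithmetic. The sketch below checks them, assuming that each HBM3e stack uses the standard 1,024-bit HBM interface (the per-stack width is an assumption, not a figure Nvidia quoted here):

```python
# Sanity check of the HBM3e configuration described for the new GH200.
# BITS_PER_STACK is an assumption (standard HBM stack width), not an Nvidia figure.
STACKS = 6
GB_PER_STACK = 24
BITS_PER_STACK = 1024  # assumed per-stack interface width
ACCESSIBLE_GB = 141

installed_gb = STACKS * GB_PER_STACK        # memory physically installed
bus_width_bits = STACKS * BITS_PER_STACK    # total memory interface width
disabled_gb = installed_gb - ACCESSIBLE_GB  # capacity left inaccessible

print(f"installed: {installed_gb} GB")
print(f"interface: {bus_width_bits:,}-bit")
print(f"disabled:  {disabled_gb} GB ({disabled_gb / installed_gb:.1%} of installed)")
```

Six stacks at 1,024 bits each account for the 6,144-bit interface, and holding back 3 GB leaves roughly 2% of the installed memory unused.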

Nvidia's current GH200 Grace Hopper Superchip comes with 96 GB of HBM3 memory and provides bandwidth of less than 4 TB/s. By contrast, the new model increases memory capacity by around 50% and bandwidth by over 25%. These improvements enable the new platform to run larger AI models than the original version and should deliver tangible performance gains, which will be particularly important for training.
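The "around 50%" capacity figure can be checked directly from the two quoted capacities. The bandwidth gain is not computed here because the article gives the baseline only as an upper bound ("less than 4 TB/s"):

```python
# Compare the HBM capacity of the original GH200 (HBM3) with the HBM3e model,
# using only the capacities quoted in the article.
HBM3_GB = 96
HBM3E_GB = 141

capacity_gain = HBM3E_GB / HBM3_GB - 1  # fractional increase over the original
print(f"capacity increase: {capacity_gain:.1%}")
```

The result, just under 47%, is consistent with the rounded "around 50%" claim.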

According to Nvidia, the GH200 Grace Hopper platform with HBM3 is currently in production and will be commercially available starting next month. The GH200 Grace Hopper platform with HBM3e, by contrast, is now sampling and is expected to become available in the second quarter of 2024. Nvidia stressed that the new GH200 Grace Hopper uses the same Grace CPU and GH100 GPU silicon as the original version, so the company will not need to ramp up any new revisions or steppings.

Nvidia says that the original GH200 with HBM3 and the improved GH200 with HBM3e will co-exist on the market, which suggests that the latter will be sold at a premium given the higher performance enabled by its more advanced memory.

"To meet surging demand for generative AI, data centers require accelerated computing platforms with specialized needs," said Jensen Huang, chief executive of Nvidia. "The new GH200 Grace Hopper Superchip platform delivers this with exceptional memory technology and bandwidth to improve throughput, the ability to connect GPUs to aggregate performance without compromise, and a server design that can be easily deployed across the entire data center."

Nvidia's next-generation Grace Hopper Superchip platform with HBM3e is fully compatible with Nvidia's MGX server specification and is therefore drop-in compatible with existing server designs.