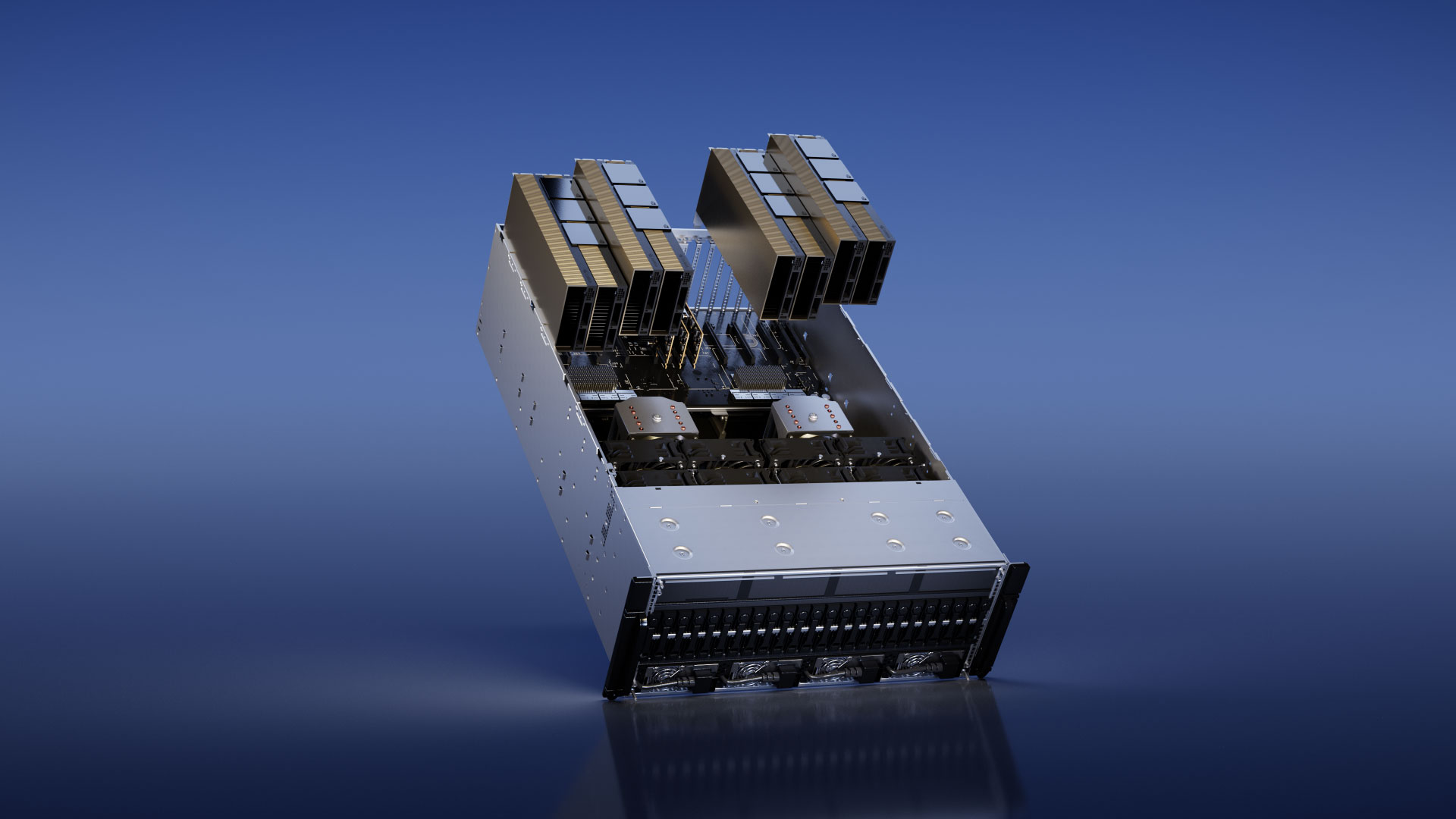

Nvidia announced a new dual-GPU product, the H100 NVL, during its GTC Spring 2023 keynote. It won't bring back SLI or multi-GPU gaming, and it won't be one of the best graphics cards for gaming. Instead, it targets the growing AI market. From the information and images Nvidia has released, the H100 NVL (H100 NVLink) will sport three NVLink connectors on the top, with the two adjacent cards slotting into separate PCIe slots.

Note that the existing H100 PCIe already had the three NVLink connectors, but the H100 NVL makes some other changes and will only be sold as a paired-card solution. It's an interesting change of pace, apparently meant to accommodate servers that don't support Nvidia's SXM option, with a focus on inference performance rather than training. The NVLink connections should help make up for the bandwidth that NVSwitch provides on the SXM solutions, and there are some other notable differences as well.

Take the specifications. Previous H100 solutions, both SXM and PCIe, have come with 80GB of memory (HBM3 for the SXM, HBM2e for PCIe), but the actual package contains six stacks, each with 16GB of memory, for 96GB of physical capacity. It's not clear if one stack is completely disabled on those parts, or if it's reserved for ECC or some other purpose. What we do know is that the H100 NVL will come with 94GB per GPU, and 188GB of HBM3 in total. We assume the "missing" 2GB per GPU is either for ECC or somehow related to yields, though the latter seems a bit odd.
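The capacity arithmetic above is easy to check with a short sketch. The figures come straight from the paragraph; the variable and function names are purely illustrative.

```python
# Figures quoted in the article; names are illustrative only.
STACKS_PER_GPU = 6    # HBM stacks on the H100 package
GB_PER_STACK = 16     # capacity of each stack

physical_gb = STACKS_PER_GPU * GB_PER_STACK  # 6 * 16 = 96


def unused_gb(enabled_gb: int) -> int:
    """Capacity present on the package but not exposed to the user."""
    return physical_gb - enabled_gb


nvl_per_gpu_gb = 94
nvl_pair_gb = 2 * nvl_per_gpu_gb  # 188

print("Physical capacity per GPU:", physical_gb, "GB")
print("Unused on an 80GB H100:", unused_gb(80), "GB")
print("Unused on an H100 NVL GPU:", unused_gb(nvl_per_gpu_gb), "GB")
print("H100 NVL pair total:", nvl_pair_gb, "GB")
```

On the 80GB parts the gap is exactly one stack's worth of memory, while on the H100 NVL it is only 2GB per GPU.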

Power is slightly higher than the H100 PCIe, at 350–400 watts per GPU (configurable), an increase of 50W. Total performance for the pair, meanwhile, ends up being effectively double that of a single H100 SXM: 134 teraflops of FP64, 1,979 teraflops of TF32, and 7,916 teraflops of FP8 (as well as 7,916 teraops of INT8).
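As a rough illustration, the pair totals can be split back into per-GPU numbers. This is a sketch using only the figures quoted above. The implied H100 PCIe power range assumes the 50W increase applies to both ends of the configurable range, which the text does not state explicitly.

```python
# Pair-level throughput quoted in the article (teraflops; teraops for INT8).
pair_totals = {"FP64": 134, "TF32": 1979, "FP8": 7916, "INT8": 7916}

# "Effectively double" a single GPU, so halve the totals for a per-GPU view.
per_gpu = {fmt: total / 2 for fmt, total in pair_totals.items()}

# Configurable power range per H100 NVL GPU, in watts.
nvl_watts = (350, 400)
increase_w = 50

# Assumption: the 50W increase applies to both the low and high end.
implied_pcie_watts = tuple(w - increase_w for w in nvl_watts)

# Power budget for a full two-card pair.
pair_watts = tuple(2 * w for w in nvl_watts)

for fmt, value in per_gpu.items():
    print(f"{fmt}: {value:g} per GPU")
print("Implied H100 PCIe range (W):", implied_pcie_watts)
print("H100 NVL pair range (W):", pair_watts)
```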

Basically, this looks like the same core design as the H100 PCIe, which also supports NVLink, but potentially now with more of the GPU cores enabled, and with 17.5% more memory (94GB versus 80GB). The memory bandwidth is also quite a bit higher than the H100 PCIe, thanks to the switch to HBM3. The H100 NVL checks in at 3.9 TB/s per GPU and a combined 7.8 TB/s (versus 2 TB/s for the H100 PCIe, and 3.35 TB/s on the H100 SXM).
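For readers who want to verify the comparisons, here is a minimal sketch of the memory and bandwidth math. It uses only the figures quoted in this article, and the helper name `percent_increase` is made up for illustration.

```python
def percent_increase(new: float, old: float) -> float:
    """Relative increase of `new` over `old`, in percent."""
    return (new - old) / old * 100


# Memory per GPU, in GB.
memory_gain_pct = percent_increase(94, 80)

# Memory bandwidth per GPU, in TB/s.
NVL_BW, PCIE_BW, SXM_BW = 3.9, 2.0, 3.35
combined_bw = 2 * NVL_BW

bw_ratio_vs_pcie = NVL_BW / PCIE_BW
bw_gain_vs_sxm_pct = percent_increase(NVL_BW, SXM_BW)

print(f"Memory gain over 80GB parts: {memory_gain_pct:.1f}%")
print(f"Combined bandwidth for the pair: {combined_bw:.1f} TB/s")
print(f"Per-GPU bandwidth vs H100 PCIe: {bw_ratio_vs_pcie:.2f}x")
print(f"Per-GPU bandwidth gain over H100 SXM: {bw_gain_vs_sxm_pct:.1f}%")
```

By these numbers, each H100 NVL GPU has nearly twice the memory bandwidth of the H100 PCIe and roughly 16% more than the H100 SXM.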

As this is a dual-card solution, with each card occupying a 2-slot space, Nvidia only supports 2 to 4 pairs of H100 NVL cards for partner and certified systems. How much would a single pair cost, and will they be available to purchase separately? That remains to be seen, though a single H100 PCIe can sometimes be found for around $28,000. So $80,000 for a pair of H100 NVL doesn't seem out of the question.