Nvidia has announced two products: the GB200 NVL4, a monster quad-B200 GPU module featuring two Grace CPUs, and the H200 NVL PCIe GPU, aimed at air-cooled data centers.

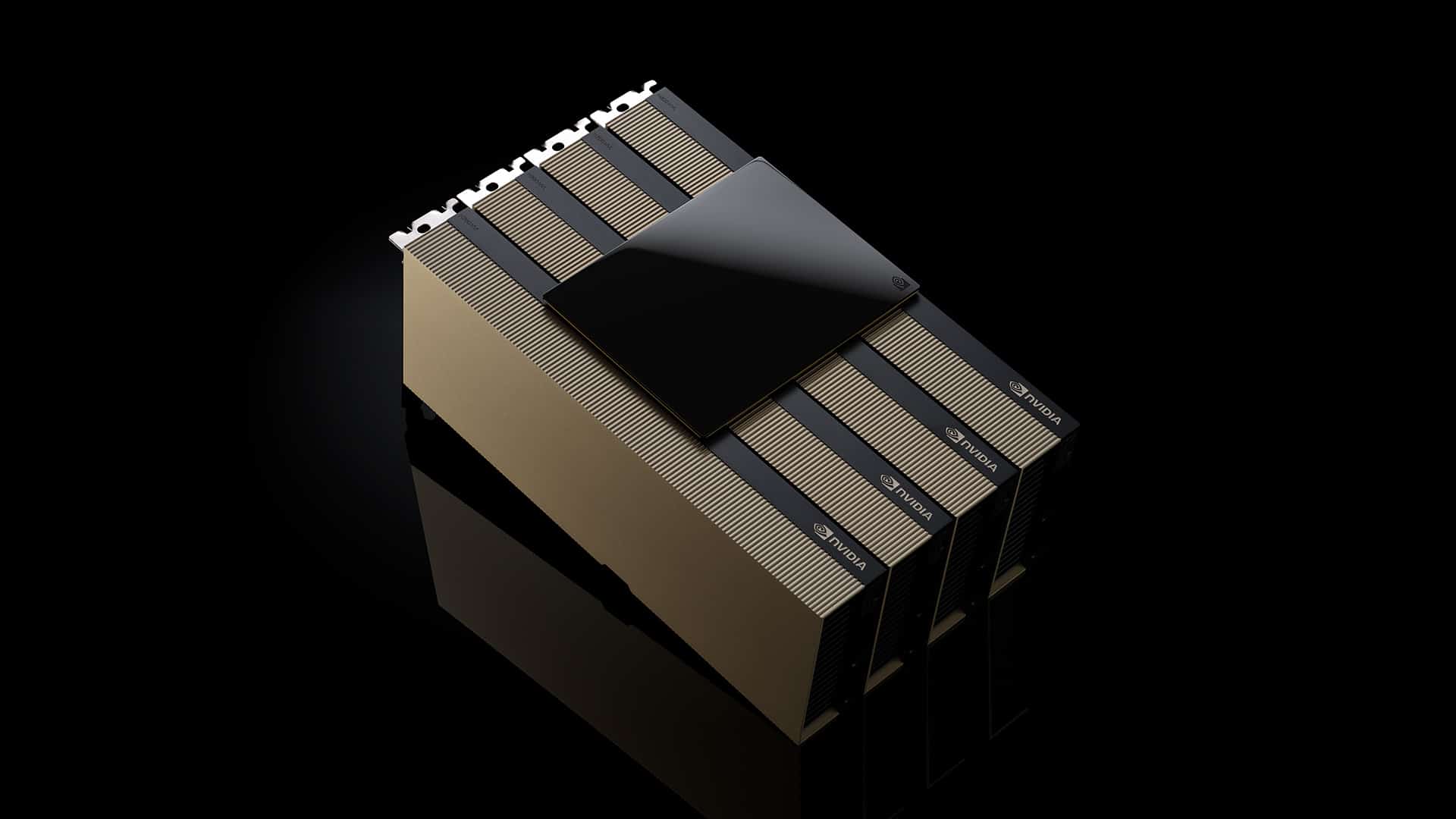

The GB200 Grace Blackwell NVL4 Superchip is an even more potent version of the standard (non-NVL4) dual-GPU GB200 Superchip. It packs a whopping four B200 Blackwell GPUs, linked to one another via NVLink, and two Arm-based Grace CPUs onto a single board. The solution targets hybrid HPC and AI workloads and offers 1.3TB of coherent memory. Nvidia claims the GB200 NVL4 delivers 2.2X the simulation performance and 1.8X the training and inference performance of its direct predecessor, the GH200 NVL4 Grace Hopper Superchip.

Nvidia says the GB200 NVL4 Superchip will be available in the second half of 2025 from partners such as MSI, Asus, Gigabyte, Wistron, Pegatron, ASRock Rack, Lenovo, HP Enterprise, and more.

Swinging around to the opposite end of the spectrum, Nvidia's H200 NVL is a dual-slot, air-cooled GPU with PCIe 5.0 x16 connectivity (128 GB/s bidirectional). Its cooler is optimized for rack-mount servers and uses a flow-through design in which intake air runs from right to left; there is no blower-style fan, so the card relies on the server's own fans for airflow.
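Where does the 128 GB/s figure come from? The quick sketch below assumes standard PCIe 5.0 parameters (32 GT/s per lane, 128b/130b encoding, a full x16 link) and ignores packet and protocol overhead. On those assumptions, the headline number matches the raw bidirectional rate, which works out to 64 GB/s in each direction; encoding overhead trims that to roughly 63 GB/s each way.

```python
# Back-of-the-envelope PCIe 5.0 x16 bandwidth estimate.
# Assumptions: 32 GT/s per lane (the PCIe 5.0 signaling rate), 128b/130b
# line encoding, and a full x16 link. Packet/protocol overhead is ignored,
# so real-world throughput will be somewhat lower still.

TRANSFERS_PER_LANE = 32e9        # PCIe 5.0: 32 GT/s per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b encoding overhead
LANES = 16


def pcie5_bandwidth_gb_s(lanes: int = LANES, encoded: bool = True) -> tuple[float, float]:
    """Return (per-direction, bidirectional) bandwidth in GB/s (10^9 bytes/s)."""
    bits_per_s = TRANSFERS_PER_LANE * lanes
    if encoded:
        bits_per_s *= ENCODING_EFFICIENCY
    per_direction = bits_per_s / 8 / 1e9  # bits -> bytes -> GB
    return per_direction, 2 * per_direction


raw = pcie5_bandwidth_gb_s(encoded=False)
usable = pcie5_bandwidth_gb_s()
print(f"raw:    {raw[0]:.1f} GB/s per direction, {raw[1]:.1f} GB/s bidirectional")
print(f"usable: {usable[0]:.1f} GB/s per direction, {usable[1]:.1f} GB/s bidirectional")
```

The first line prints 64.0 and 128.0 GB/s; the second prints roughly 63.0 and 126.0 GB/s.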

Performance is slightly lower than that of the SXM version of the H200. The H200 NVL is rated at 30 TFLOPS of FP64 and 60 TFLOPS of FP32. Tensor core performance is rated at 60 TFLOPS of FP64 and, with sparsity, 835 TFLOPS of TF32, 1,671 TFLOPS of BFLOAT16, 1,671 TFLOPS of FP16, 3,341 TFLOPS of FP8, and 3,341 TOPS of INT8.
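Because the lower-precision Tensor core figures include sparsity, they overstate throughput on ordinary dense math. The sketch below assumes these are 2:4 structured-sparsity numbers, as on Nvidia's Hopper spec sheets, and simply halves them to approximate dense throughput; the FP64 Tensor figure is treated as dense and left out. It also shows that each step down in precision roughly doubles the rating.

```python
# H200 NVL Tensor core ratings as quoted in the article.
# ASSUMPTION: these TF32/BF16/FP16/FP8/INT8 figures include 2:4 structured
# sparsity, so dense throughput is roughly half. FP64 Tensor (60 TFLOPS) is
# treated as a dense figure and omitted here.
SPARSE_RATED = {
    "TF32": 835,
    "BF16": 1671,
    "FP16": 1671,
    "FP8": 3341,
    "INT8": 3341,  # TOPS (integer ops), not TFLOPS
}


def dense_estimate(rated: dict[str, float]) -> dict[str, float]:
    """Halve each sparsity-inclusive rating to approximate dense throughput."""
    return {fmt: value / 2 for fmt, value in rated.items()}


dense = dense_estimate(SPARSE_RATED)
for fmt, value in dense.items():
    print(f"{fmt:>5}: ~{value:,.0f} dense vs {SPARSE_RATED[fmt]:,} with sparsity")

# Each halving of precision roughly doubles the rating (TF32 -> FP16 -> FP8).
print(f"FP16/TF32: {SPARSE_RATED['FP16'] / SPARSE_RATED['TF32']:.2f}x")
print(f"FP8/FP16:  {SPARSE_RATED['FP8'] / SPARSE_RATED['FP16']:.2f}x")
```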

However, Nvidia says the H200 NVL is much faster than the H100 NVL it replaces. It offers 1.5X the memory capacity and 1.2X the memory bandwidth, delivering up to 1.7X faster inference and 1.3X faster HPC performance. Nvidia also offered a brief comparison with Ampere, claiming the H200 NVL is 2.5X faster than equivalent Ampere GPUs.
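Nvidia's memory claims are easy to sanity-check. The figures below are not from this article; they are assumed from Nvidia's public spec sheets (H100 NVL: 94 GB of HBM3 at 3.9 TB/s; H200 NVL: 141 GB of HBM3e at 4.8 TB/s), and the `GpuMemory` type and `memory_uplift` helper are purely illustrative.

```python
# Sanity-check the "1.5X capacity, 1.2X bandwidth" claim, H200 NVL vs H100 NVL.
# ASSUMPTION: capacities and bandwidths are taken from Nvidia's public spec
# sheets, not from this article. GpuMemory/memory_uplift are hypothetical helpers.
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuMemory:
    name: str
    capacity_gb: float
    bandwidth_tb_s: float  # terabytes per second


H100_NVL = GpuMemory("H100 NVL", 94, 3.9)
H200_NVL = GpuMemory("H200 NVL", 141, 4.8)


def memory_uplift(new: GpuMemory, old: GpuMemory) -> tuple[float, float]:
    """Return (capacity ratio, bandwidth ratio) of new over old."""
    return new.capacity_gb / old.capacity_gb, new.bandwidth_tb_s / old.bandwidth_tb_s


cap, bw = memory_uplift(H200_NVL, H100_NVL)
print(f"{H200_NVL.name} vs {H100_NVL.name}: {cap:.2f}x capacity, {bw:.2f}x bandwidth")
```

On these numbers, bandwidth alone accounts for only about 1.23X, so the up-to-1.7X inference figure presumably also reflects other factors, such as the extra memory allowing larger batches or models; Nvidia's claim as reported here doesn't break that down.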

The H200 NVL PCIe GPU is designed to fit the vast majority of data center configurations, including air-cooled server racks. Citing a survey, Nvidia states that roughly 70% of enterprise racks are air-cooled and draw 20kW or less. Because the H200 NVL is a PCIe card, data center operators can reuse their existing racks and swap out only the GPUs, reducing waste and significantly lowering the cost of hardware upgrades. The H200 NVL also supports NVLink bridges offering up to 900 GB/s of bandwidth per GPU, letting system builders link up to four GPUs in a single system for higher performance.
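To see what a 20kW air-cooled rack can hold, here is a rough power-budget sketch. It assumes 600W per GPU (the H200 NVL's maximum configurable TDP on Nvidia's spec sheet) and four GPUs per server, matching the four-way NVLink limit. The 1,500W of non-GPU load per server (CPUs, fans, NICs, storage) is a purely hypothetical placeholder; real chassis vary widely.

```python
# Rough power budgeting for an air-cooled rack of 4-GPU H200 NVL servers.
# ASSUMPTIONS: 20 kW rack budget (the survey threshold Nvidia cites);
# 600 W per GPU (max configurable TDP); a HYPOTHETICAL 1,500 W of non-GPU
# load per server, which in practice varies widely by chassis.
RACK_BUDGET_W = 20_000
GPU_TDP_W = 600
GPUS_PER_SERVER = 4
SERVER_OVERHEAD_W = 1_500  # hypothetical placeholder


def servers_per_rack(budget_w: int, gpu_w: int, gpus: int, overhead_w: int) -> tuple[int, int]:
    """Return (whole servers that fit in the budget, watts drawn per server)."""
    per_server_w = gpus * gpu_w + overhead_w
    return budget_w // per_server_w, per_server_w


servers, per_server_w = servers_per_rack(
    RACK_BUDGET_W, GPU_TDP_W, GPUS_PER_SERVER, SERVER_OVERHEAD_W
)
print(
    f"{servers} servers x {per_server_w} W = {servers * per_server_w} W "
    f"({servers * GPUS_PER_SERVER} GPUs) within a {RACK_BUDGET_W} W rack"
)
```

Under these assumptions, five 3,900W servers (20 GPUs) fit within 20kW. Lowering the configurable GPU TDP is the obvious lever for packing in more.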

Nvidia's new air-cooled GPU arrives amid reports that its Blackwell GPUs are suffering from severe overheating problems. Despite full liquid cooling, system integrators have had to redesign Blackwell server racks because of the enormous heat the GPUs dissipate in racks that can draw up to 120kW on their own. The H200 NVL is nowhere near the B200 in performance, but Nvidia's air-cooled data center GPU highlights the significant advantages of lower-power, air-cooled GPUs.

The H200 NVL will be available from providers such as Dell, HP Enterprise, Lenovo, and Supermicro, as well as in platforms from Aivres, ASRock Rack, Asus, Gigabyte, Ingrasys, Inventec, MSI, Pegatron, QCT, Wistron, and Wiwynn.