Nvidia recently showcased the capabilities of its RTX consumer GPUs at a press event, according to Benchlife.Info. The company argued that its GPUs handle AI tasks better than the NPUs found in typical AI PCs. It also presented several benchmarks, which showed its GPUs outperforming competing notebooks with AI hardware acceleration, including the MacBook Pro featuring Apple's top-of-the-line M3 Max chip.

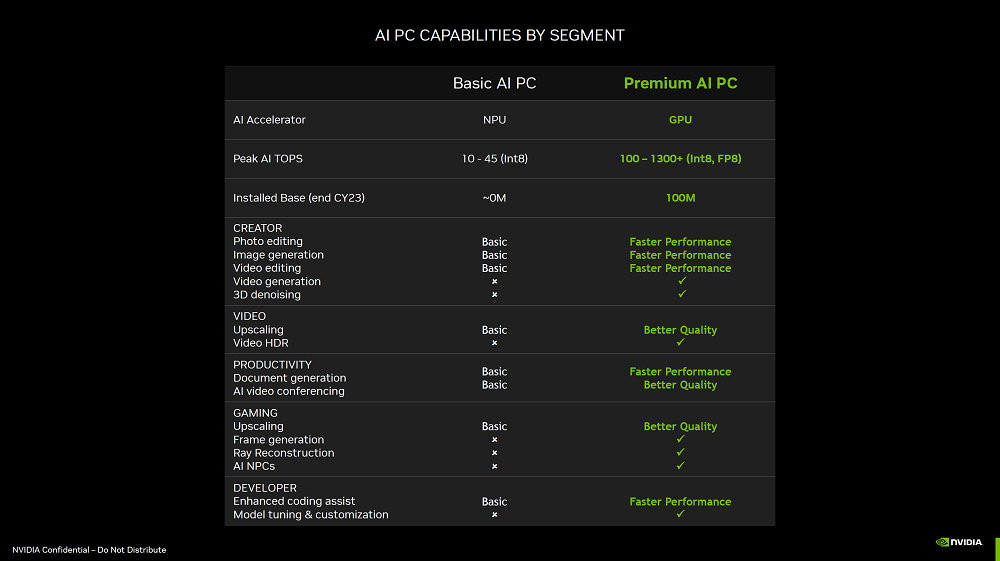

The entire event was centered around how Nvidia's RTX GPUs outperform modern-day "AI PCs" equipped with NPUs. According to Nvidia, the 10–45 TOPS performance rating found in modern Intel, AMD, Apple, and Qualcomm processors is only enough for "basic" AI workloads. The company gave several examples, including photo editing, image generation, image upscaling, and enhanced coding assistance through AI, which it claimed NPU-equipped AI PCs are either incapable of handling or are only capable of executing at a very basic level. Nvidia's GPUs, on the other hand, can handle all AI tasks and execute them with better performance and/or quality, naturally.

Nvidia stated that its RTX GPUs are much more performant than NPUs and can achieve anywhere from 100 to 1,300+ TOPS, depending on the GPU. The Nvidia event went so far as to categorize its RTX GPUs as "Premium AI" equipment, while relegating NPUs to a lower "Basic AI" equipment category. (Nvidia also added another category, cloud computing, which it categorized as "Heavy AI" equipment boasting thousands of TOPS of performance, no doubt a nod to its H100 and B200 Blackwell enterprise GPUs.)

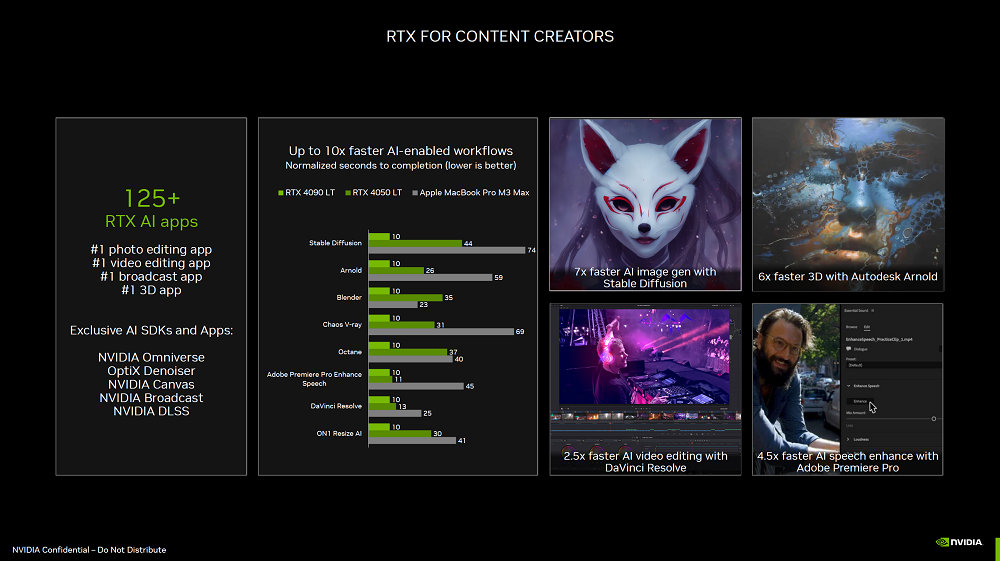

Nvidia shared several AI-focused benchmarks, which compared the latest RTX 40-series GPUs against some of its competitors. For content creation, Nvidia showed a benchmark comparing the RTX 4090 Laptop GPU, the RTX 4050 Laptop GPU, and the Apple MacBook Pro M3 Max in several content creation applications that use AI: Stable Diffusion, Arnold, Blender, Chaos V-Ray, Octane, Adobe Premiere Pro Enhance Speech, DaVinci Resolve, and ON1 Resize AI. The benchmark showed the RTX 4090 Laptop GPU outperforming the M3 Max-equipped MacBook Pro by over 7x in the most extreme cases, and the RTX 4050 Laptop GPU outperforming the same MacBook Pro by over 2x. On average, the mobile RTX 4090 outperformed the M3 Max by 5x while the RTX 4050 Laptop GPU outperformed it by 50–100 percent.

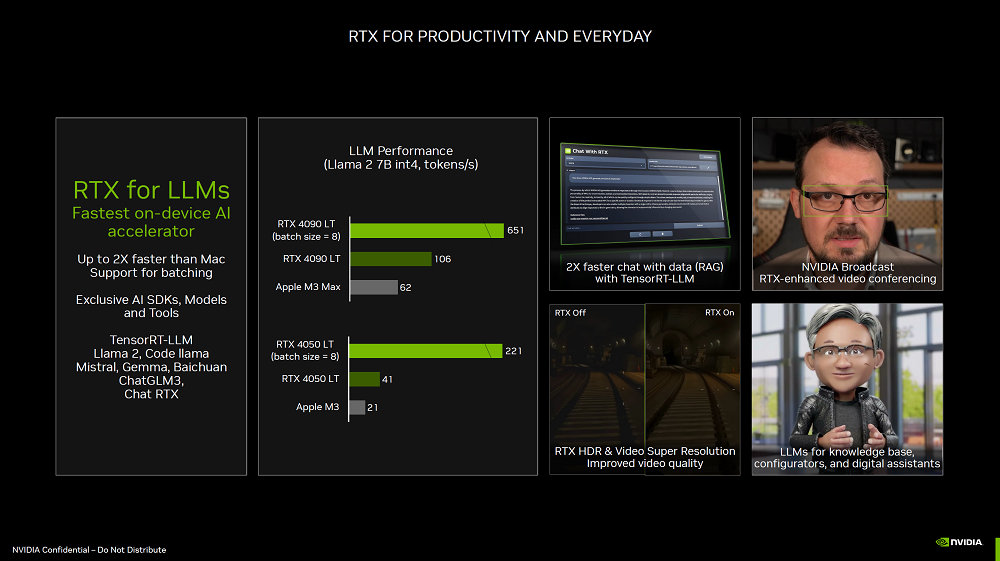

Another benchmark Nvidia showed covered large language models (LLMs), using a Llama 2 7B int4 workload. Nvidia pitted the mobile RTX 4090 against the M3 Max and the mobile RTX 4050 against Apple's baseline M3 chip. The RTX 4090 was 42% faster than the M3 Max, and with a batch size of eight its lead grew to 90%. Similarly, the mobile RTX 4050 was 48% faster than the Apple M3, and with a batch size of eight it was 90% faster. Changing the batch size is an optimization that can improve AI performance, depending on the architecture.
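The benchmark figures above mix two notations: multipliers ("5x") and percentages ("42% faster"). As a rough aid for comparing them, here is a minimal Python sketch that converts between the two. It assumes that "Nx" means N times the baseline's performance (so 2x equals 100% faster), which is one common reading of such charts but is not confirmed by Nvidia's slides. The function names are hypothetical, and the numbers are simply the ones quoted in this article.

```python
def percent_faster_to_multiplier(percent_faster: float) -> float:
    """'N% faster' is read as (1 + N/100) times the baseline performance."""
    return 1.0 + percent_faster / 100.0


def multiplier_to_percent_faster(multiplier: float) -> float:
    """'Kx' is read as K times the baseline, i.e. (K - 1) * 100 percent faster.

    Assumption: 'Kx' is a ratio to the baseline, not 'K times faster'.
    """
    return (multiplier - 1.0) * 100.0


# Figures as quoted in the article (Nvidia's own benchmark claims).
quoted_percent = {
    "RTX 4090 Laptop vs M3 Max, Llama 2 7B int4": 42,
    "RTX 4090 Laptop vs M3 Max, batch size 8": 90,
    "RTX 4050 Laptop vs M3, Llama 2 7B int4": 48,
}
quoted_multiplier = {
    "RTX 4090 Laptop vs M3 Max, content creation (average)": 5.0,
}

for label, pct in quoted_percent.items():
    print(f"{label}: {pct}% faster = {percent_faster_to_multiplier(pct):.2f}x")

for label, mult in quoted_multiplier.items():
    print(f"{label}: {mult:.1f}x = {multiplier_to_percent_faster(mult):.0f}% faster")
```

Under that reading, the RTX 4050 Laptop GPU's "50–100 percent" average lead in content creation corresponds to roughly 1.5x to 2x, which puts it on the same scale as the multipliers quoted for the RTX 4090.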

Nvidia showed a third benchmark using UL Procyon Stable Diffusion 1.5 against AMD. For this test, Nvidia pitted its entire RTX 40-series desktop GPU lineup against AMD's Radeon RX 7900 XTX. Every Nvidia GPU, starting from the RTX 4070 Super and higher, outperformed AMD's flagship — and the RTX 4090 outperformed it by 2.8x. The RTX 4060 Ti and RTX 4060 were noticeably slower, however. The takeaway is that Nvidia's flagship GPU is substantially quicker than the equivalent AMD GPU, at least in this specific workload. (Our own Stable Diffusion benchmarks tell a similar story, with the 4090 beating the 7900 XTX by 2.75X at 768x768 generation and 2.86X with 512x512 image generation.)

With the spotlight on NPU-equipped AI laptops and AI PCs this year, Nvidia is reminding everyone that its RTX GPUs are already far more potent alternatives. Not only are they very powerful, there are substantially more RTX GPUs out in the wild today than there are NPU-equipped PCs — meaning many existing laptops and PCs with RTX cards are already AI-ready.

Nvidia does make a good point: its RTX GPUs do provide more performance than the latest NPUs, such as those in Qualcomm's Snapdragon X Elite and AMD's Ryzen 8040 series. However, we have yet to see Nvidia RTX GPUs become a crucial part of AI PCs. Microsoft's official definition for AI PCs requires the addition of an NPU alongside the CPU/GPU, meaning most RTX-equipped systems are still "unqualified" as most do not have built-in NPUs.

Microsoft is also clearly looking not just at raw computational power, but also at the efficiency of doing the work. That an RTX 4090 Laptop GPU can pummel integrated NPUs shouldn't surprise anyone. But the mobile 4090 is also rated to draw 80W to 150W of power, roughly 2X to 5X more than what many of these mobile processors require.

It will be interesting to see if Microsoft changes its mind on the definition of an AI PC in the future — or more specifically, when it will change the definition and in what ways. 45 TOPS as a baseline level of performance, via an energy efficient NPU, will bring AI acceleration to mainstream consumers. In fact, Microsoft even insists that its Copilot software run on the NPU instead of the GPU, to improve battery life. But it's inevitable that at some point we'll have AI tools that need far more than this initial 45 TOPS of compute, and dedicated GPUs are already far down that path.