TechRadar is on the ground in Taipei, Taiwan, for the biggest computing event of the year, Computex 2023, and we'll be in the audience for the opening keynote address by Nvidia CEO Jensen Huang, which is scheduled for May 29, 2023, at 11:00 AM Taipei time (4AM BST), or May 28, 2023, at 11PM EDT.

The keynote comes on the heels of the massive Q1 profit Nvidia reported, driven by new AI hardware behind the latest generative AI models, like ChatGPT and Stable Diffusion, and used by companies such as Microsoft, Google, and OpenAI.

We don't have anything official about what Huang will say during the address to the throngs gathered in Taipei for the first real in-person Computex event since 2019, but you can be sure that there will be a lot of AI talk, as well as Nvidia Omniverse discussion.

Hot off the Nvidia RTX 4060 Ti 8GB launch, though, we wouldn't be surprised at all to find that Nvidia announces a release date and pricing for the Nvidia RTX 4060 Ti 16GB and Nvidia RTX 4060, both of which Nvidia has said will launch in July.

Could we get other reveals at the event? Almost certainly, but we'll have to see what transpires as Nvidia opens up Computex 2023.

Howdy folks, this is John Loeffler, TechRadar's Components Editor, and I am in Taipei after a grueling 15-hour flight from New York City, but I'm pumped to be here and bring you all the latest from Nvidia's keynote, as well as all the rest of Computex 2023.

I can't say for certain what we'll see in a couple of hours, but I expect that it will be exciting, at least as far as AI is concerned, considering how much Nvidia's GPU hardware is integral to these latest AI advances.

Will we get graphics card talk? I'm almost certain that it will come up, but will we get prices and release dates for the RTX 4060 Ti 16GB and RTX 4060? I sure hope so, but we'll know in a couple of hours from now once the event kicks off.

I'm waiting to get into the Nvidia keynote event as we speak, which should be kicking off in the next 20 minutes. I'll keep you posted on all the latest as everyone gets in and settled.

We're all filing into our seats and waiting for the keynote to start, and the energy is great. It's good to be back at Computex! We missed you, Taipei!

It looks like the event is about to start!

Oh yeah, this event is going to be very AI heavy from this intro.

And here's Jensen!

Jensen is showing off the RTX 4060 Ti GPU and a 14-inch laptop running Cyberpunk 2077 with real-time ray tracing.

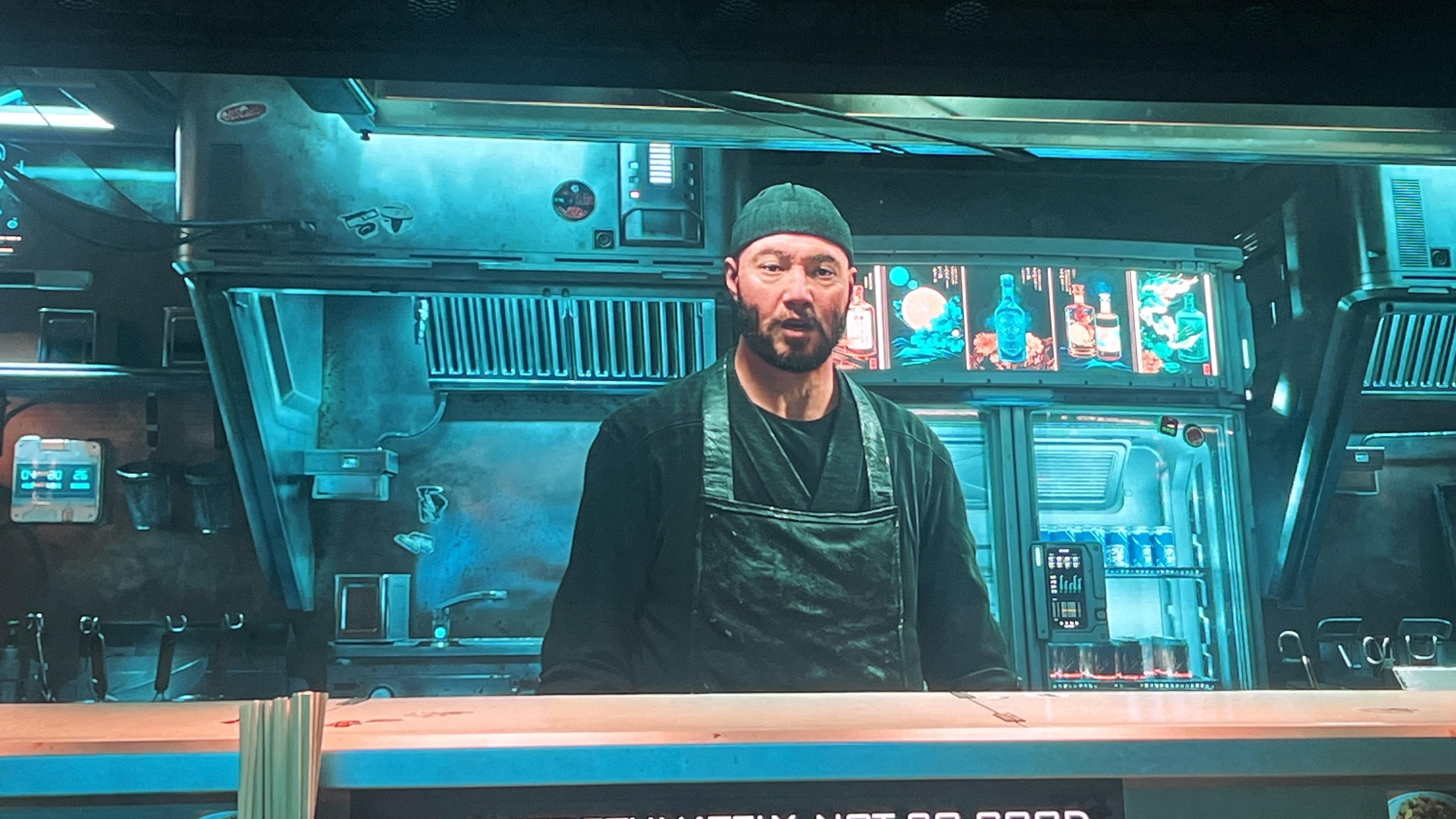

Nvidia ACE, a real-time AI model rendering tool. This looks like something for procedurally generated missions and content.

Jensen says that the characters in this game aren't pre-scripted, and they have a typical quest-giver NPC type. The conversation was a bit stilted, but not too bad. Maybe Oblivion-level dialogue. Not bad for an AI.

Jensen is talking about the IBM System/360 from 1964, specifically the importance of the CPU.

Jensen is talking about the end of Moore's Law, and how generative AI is an answer to the end of Moore's Law.

Jensen says that it's taken Nvidia years to develop its full hardware stack. He says Nvidia is introducing a new computing model built on its accelerated computing paradigm.

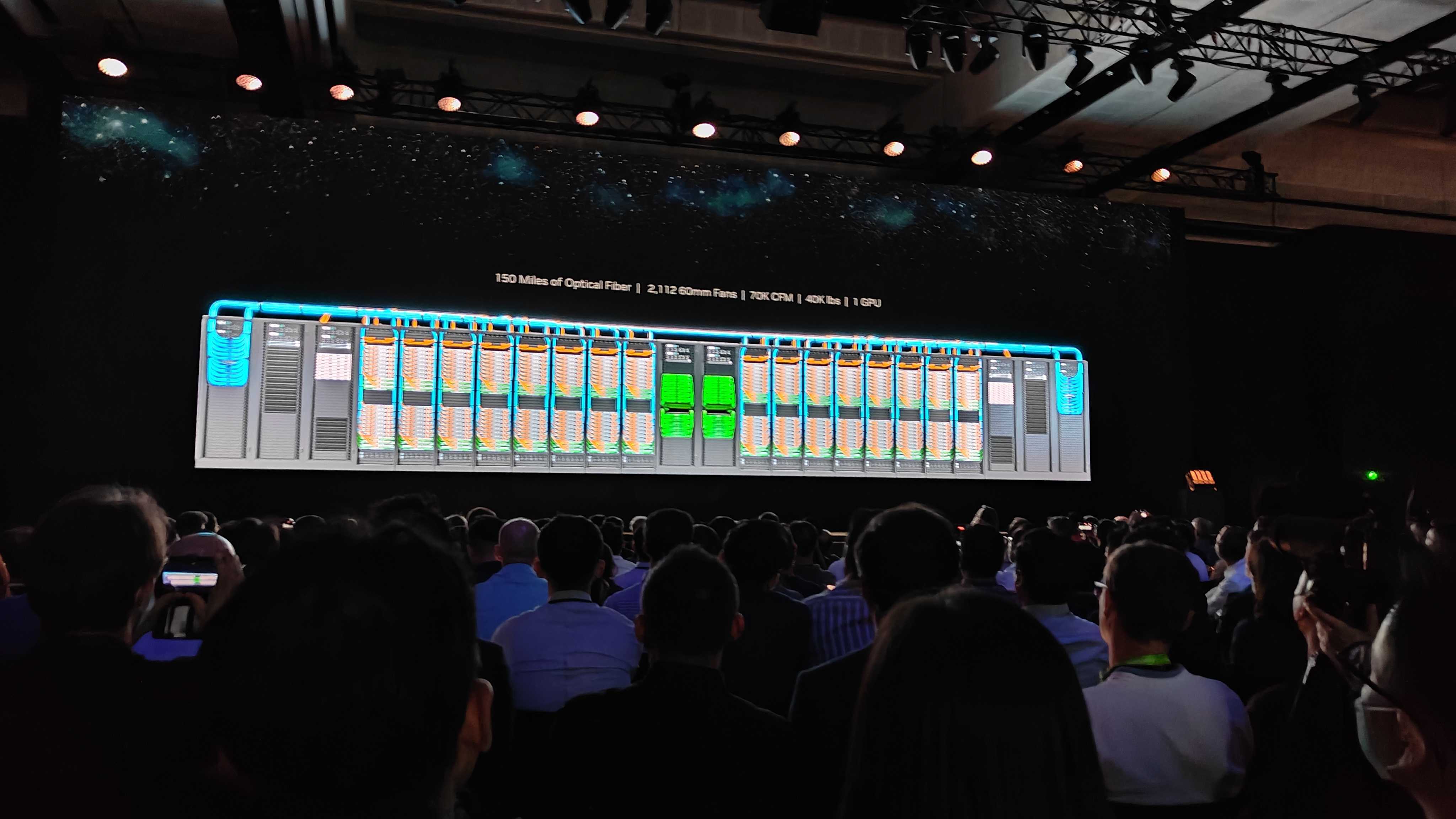

After the brief talk about ray tracing and whatnot, we're getting to the meat of Nvidia's new business model: data center GPU setups for large language models. This was the major driver of Nvidia's profits last quarter.

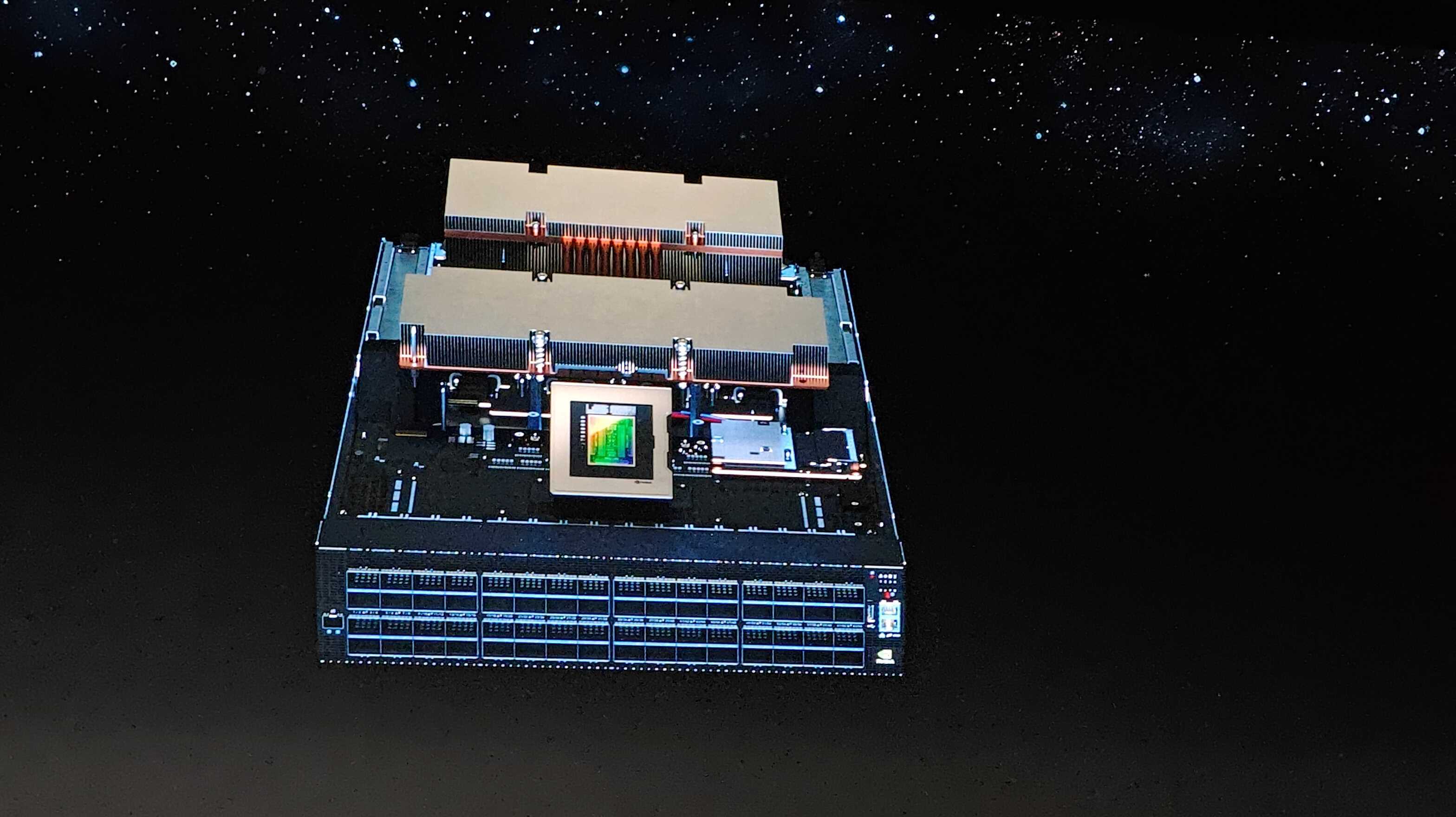

Jensen is showing off a data center GPU server that can run a large language model for less than 0.4 GWh for $400K.

Nvidia is going to transition into a data center AI company as a primary function of its business, and it looks increasingly like graphics cards are going to be more of an afterthought.

It makes sense. Given how much money Nvidia is making off the AI side of its business, the market incentives to go all-in on AI will be irresistible.

Every single data center is overextended, Jensen says. I don't doubt that one bit.

The Nvidia HGX H100 is in full production, Jensen says. To be clear, this is a data center GPU, so don't expect it to run any of the best PC games on your rig unless you're playing via the cloud.

LOL, the H100 costs $200,000. Talk about GPU price inflation.

Nvidia has been investing heavily in its AI infrastructure, and it's making major advances every two years on AI supercomputers. These supercomputers will need dedicated programmers, comparable to computer "factories". To be honest, this keynote is going over the head of probably 60% of the audience at the very least.

Jensen says that Nvidia improved graphics processing 1000x in 5 years using AI. I am really not sure where that number is coming from.

ChatGPT has entered the chat.

"Anything that has structure, we can learn that language," Jensen says. "Once you can learn the language of certain information...we can now guide the AI to produce new information."

"We can now apply computer science...to so many different fields that wasn't possible before," he says.

Jensen is showing off the power of prompts to generate new content, including a text-to-video demo with a very realistic woman speaking the words. He showed off a generative AI demo of a traditional Taiwanese song, and then gave the AI a prompt to create a sing-along song. He just made the audience sing along.

1,600 generative AI startups are partnering with Nvidia. Yeah...Nvidia's 5000-series graphics cards will probably be Nvidia's last. Maybe the 6000-series, but shareholders will force Nvidia to go all in on generative AI and AI data centers.

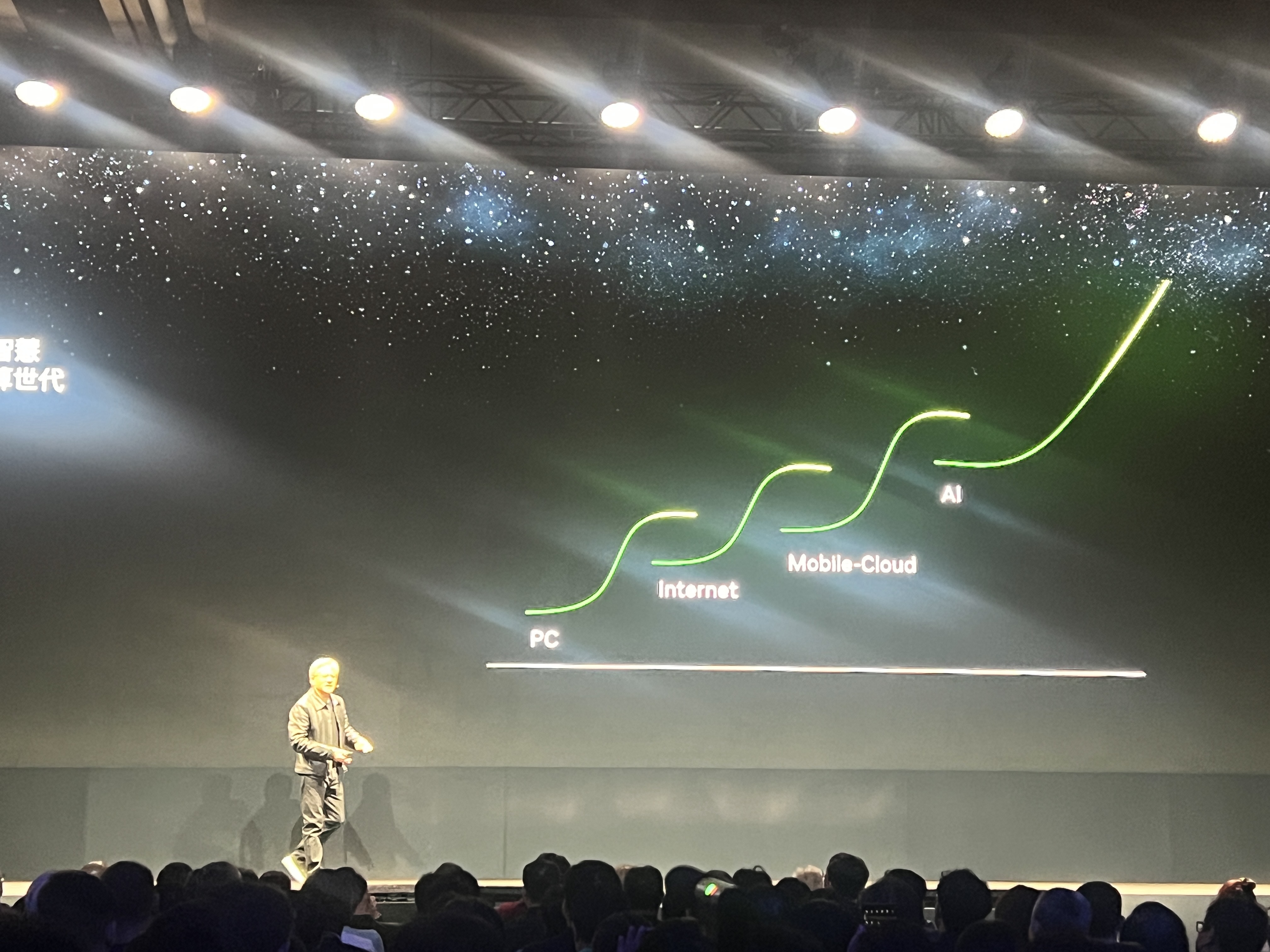

I agree with Jensen, we're in a new computing era for sure.

Jensen busted out the S curve and hockey stick that anyone who has looked at AI should be very familiar with.

Grace Hopper is in full production. GH200 is capable of 65 billion parameters, which is absolutely obscene. It has interlinked shared memory, so you don't have to break data into pieces, which will help scale out AI.

Grace Hopper is going to revolutionize drug discovery and medicine. I don't know about all the other industries, but medicine is going to see a massive benefit from this.

You can use Grace Hopper as pods, which can get you 256 GH superchips, with 144TB of shared, connected memory for 1 exaFLOPS of performance. It's really hard to quantify that for anybody who isn't really deep into data centers.

"I wonder if it can play Crysis," Jensen says.

Google Cloud, Meta, and Microsoft are the first customers in line for the GH200 supercomputer.

We've moved on to video transfer, the compression-stream-decompression paradigm that's been in use since the 1960s. Using Grace Hopper, you can now have digitized avatars that can translate your voice, render your image in 3D, and apply generative filters.

The entire 5G stack can run in Grace Hopper as a software codec-like platform.

We've crossed over the first hour, and I think there may have been about 10 minutes dedicated to consumer GPUs. That's not entirely surprising; this is Taiwan, after all, and there are a LOT of industry players here, so this keynote is more of a sales pitch than it is anything else.

Nvidia has a whole new way to network IT infrastructure, called Spectrum-4. I'll leave this talk to our friends at TechRadar Pro, because once you start talking Ethernet networking, all I know is how to unplug my router and plug it back in again to fix a dropped internet connection.

Well, we're into the Omniverse now.

I really like what Nvidia does as a company, with some major caveats (especially around consumer pricing), but my god, this is a direct sales pitch to the executives in this room, and not much else.

Hello! Do you have a few minutes to watch a presentation on heavy industry's lord and savior, Nvidia Omniverse?

We're now getting a live demo of Nvidia Omniverse, and if you've ever used Unreal Engine, it will look pretty familiar.

OK, in the future, a generative AI model will interact with Nvidia Omniverse for you. Once they build a prompt generator, we can just hang up the shingle as humans; we won't be necessary for pretty much anything. Given how much energy this is all going to require, maybe they can use us as batteries.

Nvidia and WPP are partnering to create AI-generated marketing for brands, so no one needs to hire artists anymore or pay royalties; they just need to pay Nvidia for all this AI tech. For Nvidia, this is a great business move, but it's hard to look at all this and not wonder what the hell happens to the billion humans that will be made redundant in the global workforce as a result.

Maybe they can AI generate customers and just cut us out of the loop entirely and we can all become Trad Hipsters or something.

Nvidia Metropolis. Irony is dead.

Jensen is wrapping up. If you're here for data center content, you'll be very happy. If you were hoping for anything about graphics cards or mobile GPUs, you're out of luck. I'll be talking more with Nvidia over the course of Computex 2023 to dig into the future of its consumer hardware lineup, so stay tuned for that this week.

In summary though, there's nothing else to say: Nvidia is transitioning into an AI hardware company, and I don't think it has a choice. Given its huge profit haul in Q1 2023 from AI, the market pressures will be impossible to resist. There's more to come for sure, but that's it for now. Stay tuned for more from Computex 2023.