I don't know how many times I have to say it, but Teslas aren't autonomous.

Yes, the company markets its adaptive cruise control software as "Autopilot" and "Full Self-Driving," but those technologies require the driver to remain in control of the 5,000-10,000-pound electric car at all times. You can't just sleep in the back seat, scroll your phone, or do...other things. You have to stay alert and keep driving the car.

But folks have taken Tesla CEO Elon Musk's thoughts, opinions, and off-the-cuff remarks to mean that Autopilot is just as good as a human driver. It isn't. And the whole demonstration Tesla showed off a few years ago, supposedly proving that Autopilot could, in fact, drive itself, was fabricated.

So it's no wonder that Tesla owners keep killing innocent motorcyclists: they buy into the hype and the glorification of the system by our generation's P.T. Barnum. But the family of one motorcyclist is now taking Tesla to task and suing the company over the death of their son.

Good. I'm tired of writing stories about Teslas and Tesla drivers killing motorcyclists.

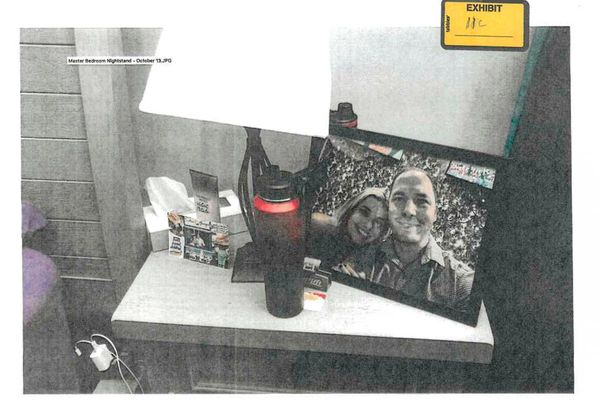

According to Reuters, Landon Embry was riding his Harley-Davidson motorcycle in 2022 when a Tesla Model 3 traveling at 70-80 mph struck him, throwing him from his bike. The Tesla driver was using Autopilot at the time, and the lawsuit claims the driver was "tired" and "not in a condition to drive as an ordinarily prudent driver."

The Embry family's lawsuit against Tesla claims that the company's Autopilot software is inadequate at identifying motorcycles and motorcyclists, and that a "reasonably prudent **driver**, or adequate auto braking system, would have, and could have slowed or stopped without colliding with the motorcycle."

Emphasis mine.

I wanted to emphasize the driver bit because we have reams of data on Tesla's Autopilot software, as well as its Full Self-Driving schtick, showing that it isn't as safe as a human. Nor is it clear who's at fault when the system fails to recognize something in its path, in this case Landon Embry. Is the driver liable? Is Tesla? The driver engaged the system, but it's ultimately Tesla's safety system.

This is no different from Toyota's phantom acceleration problem.

I hope the Embrys' lawsuit moves forward and imposes some form of penalty on Tesla, as the company has played fast and loose with its system's safety for far too long. I remember a Model 3 on Autopilot nearly colliding with our family's Volkswagen a few years back, with my infant daughter in the car.

There are numerous other investigations, lawsuits, and more targeting Tesla's Autopilot system and FSD, as well as the statements and marketing from both Elon Musk and Tesla corporate. Both the feds and the state of California are investigating allegedly false advertising promises, while NHTSA has opened its own probe into crashes, injuries, and fatalities linked to Teslas.

And that's all well and good, but it's time to do something. Because, I'm sorry, the world isn't your beta-test playground, Elon. I didn't sign your terms and conditions, and neither did Landon Embry, my daughter, or anyone else around your 5,000-pound rolling software.