As the inquest into the death of Molly Russell ground to its conclusion on Friday, what kept flashing like a faulty neon sign in one’s mind was a rhetorical question asked by Alexander Pope in 1735: “Who breaks a butterfly upon a wheel?” For Pope it was a reference to “breaking on the wheel”, a medieval form of torture in which victims had their long bones broken by an iron bar while tied to a Catherine wheel, named after St Catherine of Alexandria, who according to legend was condemned to die in this way.

For those at the inquest, the metaphor’s significance must have been unmistakable, for they were listening to an account of how an innocent and depressed 14-year-old girl was broken by a remorseless, contemporary Catherine wheel – the AI-powered recommendation engines of two social media companies, Instagram and Pinterest.

These sucked her into a vortex of, as the coroner put it, “images, video clips and text concerning or concerned with self-harm, suicide or that were otherwise negative or depressing in nature... some of which were selected and provided without Molly requesting them”. Some of this content romanticised acts of self-harm by young people on themselves, while “other content sought to isolate and discourage discussion with those who may have been able to help”. His verdict was not suicide but that “Molly Rose Russell died from an act of self-harm whilst suffering from depression and the negative effects of online content”.

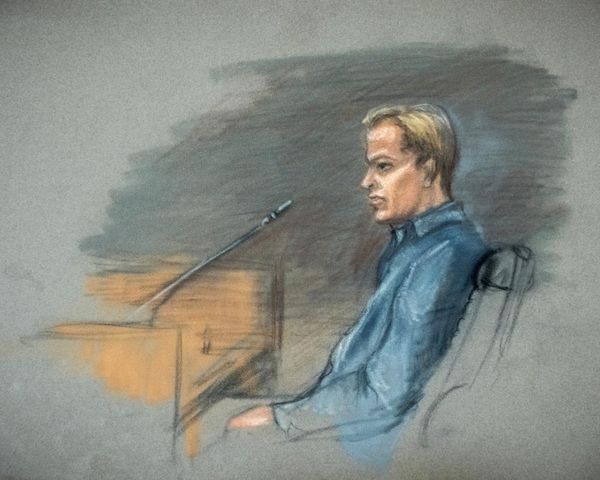

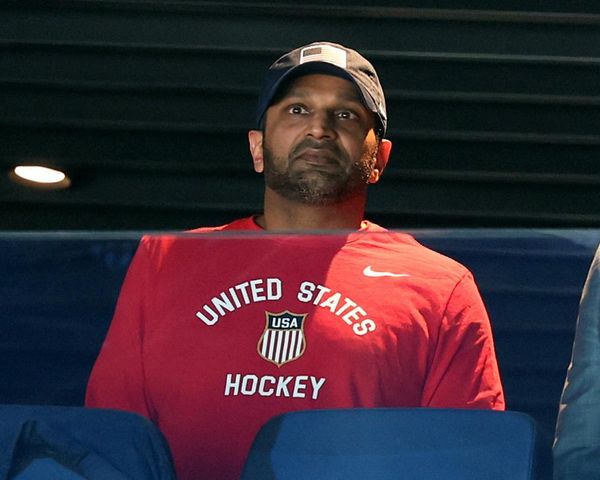

This inquest had a significance beyond the belated recognition (Molly died in November 2017) of a harrowing injustice. In the first place, the coroner’s verdict was a world first, in that it explicitly named social-media recommendation engines as a causal factor in a death. Second, his inquiry broke new procedural ground by requiring representatives of the two US companies involved (Meta, owner of Instagram, and Pinterest) to testify in person under oath. Of course, the two who made the transatlantic trip (Elizabeth Lagone from Meta and Jud Hoffman from Pinterest) were essentially just monkeys representing their respective organ-grinders back in Silicon Valley; but still, there they were in London, batting on a very sticky wicket.

Third, the horrific evidence of the stuff to which Molly had been exposed was presented in an open legal proceeding. And it was horrific – so much so that a child psychiatrist who testified said that even he had found it disturbing and distressing. Afterwards, he said: “There were periods where I was not able to sleep well for a few weeks, so bearing in mind that the child saw this over a period of months I can only say that she was [affected] – especially bearing in mind that she was a depressed 14-year-old.”

Cross-examination of the two company representatives was likewise revealing. The Pinterest guy caved early. The Russell family’s lawyer, Oliver Sanders KC, took him through the last 100 posts Molly had seen before she died. Hoffman expressed his “deep regret she was able to access some of the content that was shown”. He admitted that recommendation emails sent by Pinterest to the teenager, such as “10 depression pins you might like”, contained “the type of content that we wouldn’t like anyone spending a lot of time with”, and that some of the images he had been shown were ones he would not show to his own children. Predictably, the Meta representative was a tougher nut to crack. After evidence was given that, of the 16,300 posts Molly saved, shared or liked on Instagram in the six months before her death, 2,100 were related to depression, self-harm or suicide, Sanders asked her: “Do you agree with us that this type of material is not safe for children?”

Lagone replied that policies were in place for all users and described the posts viewed by the court as a “cry for help”.

“Do you think this type of material is safe for children?” Sanders continued.

“I think it is safe for people to be able to express themselves,” she replied. After Sanders asked the same question again, Lagone said: “Respectfully, I don’t find it a binary question.”

Here the coroner interjected and asked: “So you are saying yes, it is safe or no, it isn’t safe?”

“Yes, it is safe,” Lagone replied.

In a way, this was a “gotcha” moment, laying bare the reality that no Meta executive can admit in public what the company knows in private (as the whistleblower Frances Haugen revealed), namely that its recommendation engine can have toxic and, in Molly Russell’s case, life-threatening effects.

It all comes back, in the end, to the business model of companies like Instagram. They thrive on, and monetise, user engagement – how much attention is garnered by each piece of content, how widely it is shared, how long it is viewed for and so on. The recommendation engine is programmed to monitor what each user likes and to suggest other content that they might like. So if you’re depressed and have suicidal thoughts, then the machine will give you increasing amounts of the same.

After Molly’s death, her family discovered that the machine was still sending this stuff to her account. The wheel continued to turn, even after it had broken her.

• John Naughton chairs the Advisory Board of the Minderoo Centre for Technology and Democracy at Cambridge University

Do you have an opinion on the issues raised in this article? If you would like to submit a letter of up to 250 words to be considered for publication, email it to us at observer.letters@observer.co.uk

• In the UK, the youth suicide charity Papyrus can be contacted on 0800 068 4141 or email pat@papyrus-uk.org, and in the UK and Ireland Samaritans can be contacted on freephone 116 123, or email jo@samaritans.org or jo@samaritans.ie. In the US, the 988 Suicide & Crisis Lifeline can be reached by calling or texting 988, by calling 800-273-8255, or via online chat. You can also text HOME to 741741 to connect with a crisis text line counsellor. In Australia, the crisis support service Lifeline is 13 11 14. Other international helplines can be found at befrienders.org