This thing we made is so brilliant, we can't risk releasing it to the general public. That's basically what Microsoft is saying about its latest speech generator, VALL-E 2. So, does that reflect genuine concerns? Or is it a clever marketing ruse designed to get some viral traction and set online chins wagging?

If it is all completely genuine, what does it say about Microsoft that it's knowingly creating AI tools too dangerous to release? It's a conundrum, to be sure.

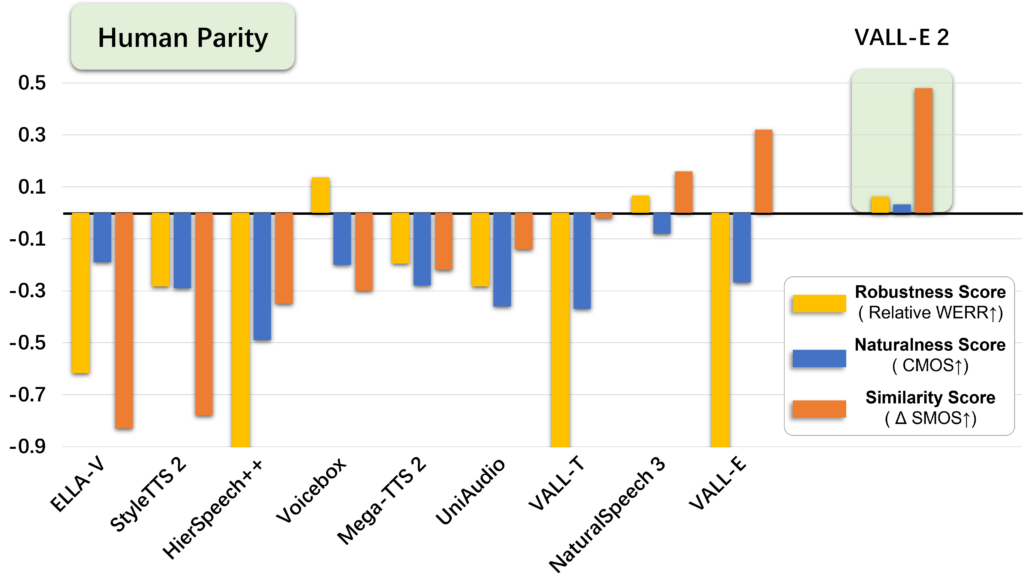

Anyway, here are the basic facts of the situation. Microsoft says in a recent blog post (via Extremetech) that its latest neural codec language model for speech synthesis, known as VALL-E 2, achieves "human parity for the first time".

More specifically, "VALL-E 2 can generate accurate, natural speech in the exact voice of the original speaker, comparable to human performance." Now, to some extent, this is nothing new. However, it's the incredible speed with which VALL-E 2 can achieve this, or to put it another way, the incredibly limited sample or prompt it needs to achieve this feat that's remarkable.

VALL-E 2 can accurately mimic a specific person's voice based on a sample just a few seconds long. It pulls that trick off by drawing on a huge training library, mapping the variations in pronunciation, intonation and cadence captured in the model onto the sample, and spitting out what appears to be totally convincing synthesised speech.

Microsoft's blog post has a range of example audio clips demonstrating how well VALL-E 2 (and indeed its predecessor, VALL-E) can turn a short sample of between three and 10 seconds into convincing synthesised speech that's often indistinguishable from a real human voice.

It's a process known as zero-shot text-to-speech synthesis, or zero-shot TTS for short. Again, the approach is nothing new; it's the accuracy and the shortness of the sample audio that's novel.

Of course, the idea of weaponising such tools to create fake content for nefarious purposes is likewise nothing new. But VALL-E 2's capabilities do seem to take the threat to a whole new level. Which is why the "Ethics Statement" appended to the blog post makes it clear that Microsoft currently has no intention of releasing VALL-E 2 to the public.

"VALL-E 2 is purely a research project. Currently, we have no plans to incorporate VALL-E 2 into a product or expand access to the public," the statement says, adding that "it may carry potential risks in the misuse of the model, such as spoofing voice identification or impersonating a specific speaker. We conducted the experiments under the assumption that the user agrees to be the target speaker in speech synthesis. If the model is generalized to unseen speakers in the real world, it should include a protocol to ensure that the speaker approves the use of their voice and a synthesized speech detection model."

Microsoft expressed similar concerns regarding its VASA-1, which can turn a still image of a person into convincing motion video. "It is not intended to create content that is used to mislead or deceive. However, like other related content generation techniques, it could still potentially be misused for impersonating humans," Microsoft said of VASA-1.

An obvious observation, perhaps, is that the problems that come with such models aren't exactly a surprise. You don't have to succeed in making the perfect speech synthesis model to imagine what might go wrong if such a tool were released to the public.

So, it's easy enough to see the problem coming, but Microsoft pressed ahead anyway. Now it claims to have achieved its aims, only to decide it's not fit for public consumption.

It does rather raise the question of what other tools it is developing that it must know in advance are too problematic for general release. And then you inevitably wonder what Microsoft's aim is in all this.

There's also the inevitable genie-and-bottle conundrum. Microsoft has made this tool and it's hard to imagine how it or something very similar doesn't eventually end up out in the wild. In short, the ethics of it all are rather confusing. Where it all ends is still anyone's guess.