Many executives and IT strategists would agree we’re in a bit of an “AI standoff.” Organizations need to harness generative AI to remain competitive, but the last year has proven that while it’s easy to embrace new technology, it’s quite difficult to realize its full potential. The same holds true for Microsoft Copilot, Microsoft’s take on generative AI, which spans and integrates with many Microsoft applications, including the Edge browser, the Windows operating system, Office applications (e.g., Word, Excel, PowerPoint, and Teams), the Power Platform, and more. One of the most anticipated Copilots, Copilot for Microsoft 365, launched on November 1, 2023. It is now easier than ever for nearly anyone to tap into the power of AI without leaving the flow of their work. However, making sure it’s a worthwhile investment for your organization requires strategic forethought and planning. Done properly, implementing Copilot promises to be transformative, driving innovation, drastically increasing efficiency, and making your organization even more competitive.

Microsoft purchased a 49 percent stake in OpenAI, the creator of the viral AI tool ChatGPT. Using the same large language model (LLM) and natural language processing (NLP) capabilities that power ChatGPT, Microsoft built Copilot, a suite of AI-powered assistants that enhance applications across Microsoft’s platform. Unlike ChatGPT, which operates within a browser or mobile app, Copilot for Microsoft 365 integrates directly into the Microsoft applications we rely on every day, from Outlook to Excel.

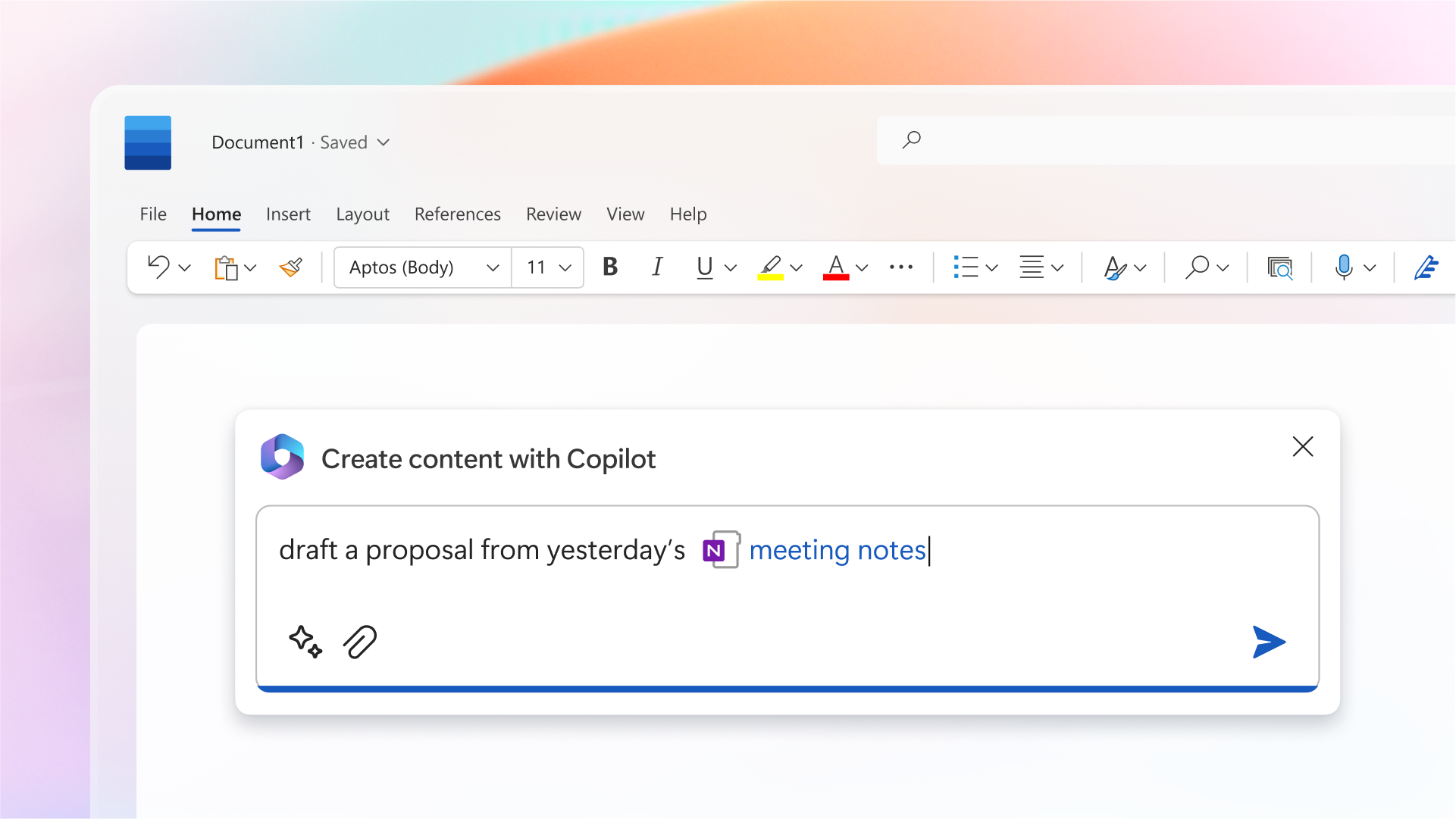

Copilot for Microsoft 365 promises impressive productivity gains. For example, PowerPoint’s Copilot can create entire presentations from a single prompt, Excel’s Copilot can visualize your data in the most strategic chart, Outlook’s Copilot can clear your inbox in minutes (who doesn’t love that?), and Teams’ Copilot can quickly catch you up on meetings you attended, or even ones you missed.

However, at a minimum of $108,000 per year (which I’ll break down later) and with access to your data, Copilot for Microsoft 365 is an expensive and potentially risky investment if not implemented properly. The same features that make Copilot live up to its name (a copilot is a qualified second-in-command who relieves the pilot of extraneous duties) will create substantial challenges if your organization hasn’t reviewed and implemented best practices around security, privacy, and compliance, and addressed the “people side” of the change Copilot is likely to bring across your organization. Rather than rushing to adopt Microsoft Copilot for the sake of new technology, it’s crucial to evaluate the digital environment you’re inviting it into. Don’t jump into the void without a safety net.

Put simply, if you want Copilot to be worth it, it’s time to get your house in order.

Grounded in your data

ChatGPT’s responses draw on what its models learned from three sources of data: information publicly available on the internet, data licensed from third-party providers, and information provided by its users. By contrast, Copilot draws on your organization’s SharePoint, OneDrive, and Teams data, and more, to provide real-time assistance. Copilot can even consume data from non-Microsoft sources and applications, making it a true cross-application AI productivity tool.

Because Copilot has access to your organization’s data, Microsoft has implemented privacy features to safeguard your sensitive information (no, you don’t have to worry about the emails you’ve been sending lately). Copilot only retrieves information that you have access to, maintaining the privacy, security, and compliance settings that you have implemented in your Microsoft environment. In principle, this means a marketing professional who uses Copilot will not be able to access data from sensitive HR files, nor will an HR representative see information from the CEO’s confidential documents.

However, Copilot’s privacy features only protect you if the right security, privacy, and compliance governance and configurations are in place. If an HR representative saves a document containing employees’ confidential information and unknowingly leaves it shared with everyone in the organization, that document is fair game for Copilot to pull from, potentially exposing information to people who shouldn’t have it. The larger your organization, the more likely you are to encounter privacy issues like these.

To limit the exposure of private information within your organization, you must establish and enforce proper security, privacy, and compliance governance and configurations. In particular, defining and communicating policies around permissions management will be critical, so your workforce understands that improper permissions can result in “oversharing”: information that Copilot may find and surface to anyone who uses it. That’s why it’s imperative that your organization conduct a complete review of existing permissions, sharing capabilities and limitations, and other Microsoft features that strengthen data protection, such as Data Loss Prevention and Sensitivity Labels.
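To make the oversharing risk concrete, here is a deliberately simplified Python model of permission-trimmed retrieval. It is not how Microsoft implements Copilot or Microsoft 365 permissions; the `Document` class, the `EVERYONE` marker, and the `visible_to` and `overshared` helpers are hypothetical stand-ins. It illustrates the point above: an assistant that honors permissions faithfully will still surface a confidential file if that file’s sharing setting is too broad, and a permissions review amounts to hunting for those broad settings.

```python
from dataclasses import dataclass, field

# Hypothetical marker for an org-wide sharing setting. Real Microsoft 365
# permissions (sharing links, groups, sensitivity labels) are far richer.
EVERYONE = "everyone"


@dataclass
class Document:
    title: str
    allowed: set[str] = field(default_factory=set)  # user IDs, or EVERYONE


def visible_to(user: str, docs: list[Document]) -> list[str]:
    """Titles the user is permitted to read. An assistant that honors
    permissions can only draw on this subset, but it can draw on all of it."""
    return [d.title for d in docs if user in d.allowed or EVERYONE in d.allowed]


def overshared(docs: list[Document]) -> list[str]:
    """Titles shared with the whole organization: candidates for review."""
    return [d.title for d in docs if EVERYONE in d.allowed]


docs = [
    Document("Q3 campaign brief", {"marketing_lead", "hr_rep"}),
    Document("Salary review", {"hr_rep"}),
    # The mistake described above: confidential HR data left shared with everyone.
    Document("Employee confidential info", {"hr_rep", EVERYONE}),
]

print(visible_to("marketing_lead", docs))
# ['Q3 campaign brief', 'Employee confidential info']
print(overshared(docs))
# ['Employee confidential info']
```

The sketch only illustrates the logic; a real review would work against your tenant’s actual sharing settings.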

Generative, not mind-reading

If users lack proper training in writing prompts, generative AI can start to resemble a combination of two childhood games: telephone and Mad Libs. When using Copilot, our documents can become like whispered messages in the telephone game, taking on a life of their own as they pass from one person to the next and often becoming unrecognizable compared to the original (that’s part of the fun). This distortion is a user error rather than an error on the part of the tool.

The inputs we give AI can produce distorted outputs if we are not careful to communicate exactly what we mean. These distorted outputs function a lot like Mad Libs, a wordplay game made up of fill-in-the-blank sentences. Mad Libs, a play on ad lib, comes from the Latin ad libitum, “as it is pleasing.” As the game’s etymology suggests, players fill in the blanks as they wish.

Generative AI models like Copilot have a distinctive way of handling our inputs. When the data a model was trained on does not contain the information it needs to answer a question or fulfill a command, the model often doesn’t signal that it can’t do what it’s been asked. Instead, like someone playing Mad Libs, the model generates a response that closely aligns with the input, even if it is incorrect. Many people call these outputs “hallucinations” or “errors.” I prefer to call them “unhelpful responses,” because they are not errors; the input simply asked the wrong question. If you misdirect a query, even unknowingly, the response will be inaccurate. Copilot can’t deduce what we’re trying to say; it can only respond to the exact request we write. AI models like Copilot are called “generative” because they create new, somewhat original content based on the patterns they were trained on. Even if a response doesn’t accurately or completely fulfill the request, the model still generates something new.

Unlike Mad Libs, Copilot operates on a very sophisticated structure. Even so, if it encounters a query its data cannot address, it will generate a response anyway. The onus is on the user to review all outputs for accuracy and adjust prompts as necessary until the desired output is produced. This underscores the importance of comprehensive training for employees who are granted access to Copilot.

Training should emphasize that generative AI isn’t an authoritative research tool; it’s a productivity assistant. Instead of asking, “How reliable is Copilot’s output?” we should first be asking, “Is my input helpful enough for Copilot to produce an accurate output?” The good news is that Copilot’s grounding in your documents reduces the likelihood of unhelpful outputs, thereby enhancing the chance of receiving relevant and reliable responses.

Priced per person

Copilot for Microsoft 365 is priced at $30 per user per month and requires a minimum purchase of 300 seats. That means Copilot will cost your organization at least $9,000 per month, or $108,000 per year. Additionally, to purchase Copilot, your organization needs one of two Microsoft 365 licensing plans: M365 E3, priced at $36 per user per month, or M365 E5, priced at $57 per user per month.

Copilot is not included in your existing Microsoft licensing plan, meaning that organizations will have to add its cost to their budget.

Assuming the same 300 users are licensed for both, companies on the M365 E3 plan that purchase Copilot will spend at least $19,800 per month ($237,600 per year), and companies on the M365 E5 plan will spend at least $26,100 per month ($313,200 per year). For a Fortune 500 company, Copilot may be affordable, but for small and mid-sized organizations, its cost may be staggering.
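The arithmetic above can be reproduced with a small Python helper. It assumes the list prices quoted in this article, the 300-seat minimum, and that the same users hold both the Copilot and prerequisite licenses; it ignores discounts, taxes, and any price changes since launch. `copilot_cost` is an illustrative name, not a Microsoft tool.

```python
# List prices as quoted in this article (USD per user per month).
COPILOT_PRICE = 30
PLAN_PRICES = {"M365 E3": 36, "M365 E5": 57}
MIN_SEATS = 300


def copilot_cost(seats: int, plan_price: int = 0) -> tuple[int, int]:
    """Return (monthly, annual) USD cost for Copilot plus an optional
    prerequisite plan, assuming the same users hold both licenses."""
    if seats < MIN_SEATS:
        raise ValueError(f"Copilot requires at least {MIN_SEATS} seats")
    monthly = seats * (COPILOT_PRICE + plan_price)
    return monthly, monthly * 12


print("Copilot only", copilot_cost(MIN_SEATS))  # Copilot only (9000, 108000)
for plan, price in PLAN_PRICES.items():
    print(plan, copilot_cost(MIN_SEATS, price))
# M365 E3 (19800, 237600)
# M365 E5 (26100, 313200)
```

Passing a larger seat count shows how quickly the annual figure scales beyond the minimum.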

With such a steep price tag, ensuring you get what you pay for is critical. To determine whether your organization should purchase Copilot, ask, “What are we hoping to get out of Copilot?” Your answer to this question should include an organizational change management (OCM) plan that discusses how you will introduce Copilot to appropriate personnel, how it will fit into your current digital landscape, and how its productivity boost will justify your investment. If you can’t answer this question, then it may not be the right time for you to purchase Copilot. In fact, many organizations may not be culturally, financially, or technologically ready to begin investing in Copilot or other generative AI models at such a large scale.

If your organization has a well-defined Copilot plan, the next question should extend beyond, “Who should have access to Copilot?” and into, “Which positions would experience such a dramatic uptick in productivity that it would offset Copilot’s cost?” To answer this question, list positions within your organization that would benefit the most from Copilot. Then, outline how a person with one of those positions would use Copilot in an ideal world. After creating those lists and evaluating your budget, choose which 300 employees (or more) will receive access to Copilot.
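One way to structure that prioritization is to estimate, for each position, how many hours Copilot would save per month and what an hour of that person’s time costs, then rank positions by the value left over after the $30 license. The Python sketch below does that; every role, headcount, hourly cost, and hours-saved figure is a hypothetical placeholder to replace with your own estimates, and `Role` and `allocate` are illustrative names.

```python
from dataclasses import dataclass

COPILOT_PRICE = 30  # USD per user per month, as quoted in this article


@dataclass
class Role:
    name: str
    headcount: int
    hourly_cost: float          # hypothetical fully loaded cost, USD per hour
    hours_saved_monthly: float  # hypothetical estimate from your use-case list

    def net_monthly_value(self) -> float:
        """Estimated value of time saved minus the Copilot license."""
        return self.hours_saved_monthly * self.hourly_cost - COPILOT_PRICE


def allocate(roles: list[Role], min_seats: int = 300) -> list[tuple[str, int]]:
    """Grant whole roles, highest net value first, until min_seats is met.
    Roles whose estimated value does not cover the license get no seats."""
    plan: list[tuple[str, int]] = []
    seats = 0
    for role in sorted(roles, key=Role.net_monthly_value, reverse=True):
        if seats >= min_seats or role.net_monthly_value() <= 0:
            break
        plan.append((role.name, role.headcount))
        seats += role.headcount
    return plan


roles = [
    Role("Project manager", 120, 75.0, 6.0),
    Role("HR representative", 80, 50.0, 4.0),
    Role("Analyst", 150, 60.0, 3.0),
    Role("Warehouse associate", 200, 25.0, 0.5),
]

print(allocate(roles))
# [('Project manager', 120), ('HR representative', 80), ('Analyst', 150)]
```

If the roles with positive net value add up to fewer than 300 seats, that is a signal the investment may not pay off yet.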

To maximize your investment, you must clearly communicate how employees with access to Copilot should use it in their day-to-day work. If you do not give your employees specific examples of what they can use Copilot for and walk them through how to do it, they will be less likely to use it, and you’ll be paying for licenses that sit idle.

For example, you could show an HR representative how to use Word’s Copilot to summarize a candidate’s resume or write a job description. Similarly, you could show a project manager how to use PowerPoint’s Copilot to condense a hundred-slide presentation into a summary deck of five. Providing your employees with practical tutorials and specific demonstrations will help make Copilot a better investment.

Maximize Copilot’s potential

Copilot’s intuitive features illuminate the existing challenges many organizations face when purchasing new technology. Before adopting any new technology, not just Copilot, it is crucial to strengthen your organization’s readiness for change.

Introducing Copilot into your organization demands the precision that comes with an unhurried, well-thought-out approach to change. To maximize Copilot’s potential, your organization must determine what generative AI can and should be used for, which positions will yield the greatest benefits from using it, how to formulate precise questions to get precise answers, and how to critically evaluate its responses.

Your answers to these questions will define the scope of your adoption goals. More importantly, they will set realistic expectations for Copilot’s capabilities and prevent your employees from over-relying on it, ensuring that your adoption strategy and change management plan are effective.

Once your financial, cultural, and digital plans are in place, your house is officially in order, and you can decide whether to invite Copilot in.


This article was produced as part of TechRadarPro's Expert Insights channel where we feature the best and brightest minds in the technology industry today. The views expressed here are those of the author and are not necessarily those of TechRadarPro or Future plc. If you are interested in contributing find out more here: https://www.techradar.com/news/submit-your-story-to-techradar-pro