What you need to know

- Meta is prioritizing age-appropriate content for teenagers on Instagram and Facebook, guided by expert advice.

- As a result, Instagram will automatically set the most restrictive content controls for teens, and content related to self-harm narratives will be hidden from teenagers.

- These adjustments apply to all users under 18 on Instagram and Facebook, with the full implementation expected in the coming months.

Instagram and Facebook are cracking down on posts about sensitive topics like suicide and eating disorders, meaning teens will stop seeing that content, even when it comes from friends.

Meta announced on Tuesday its new privacy and safety features that are meant to make sure teens get a more age-appropriate experience, all in the name of looking out for their wellbeing. The changes are set for full implementation in the next few months.

The new updates will clean up teens' Instagram and Facebook feeds, removing anything related to self-harm and eating disorders. Plus, the platforms are making sure teen accounts default to the most restrictive content filters.

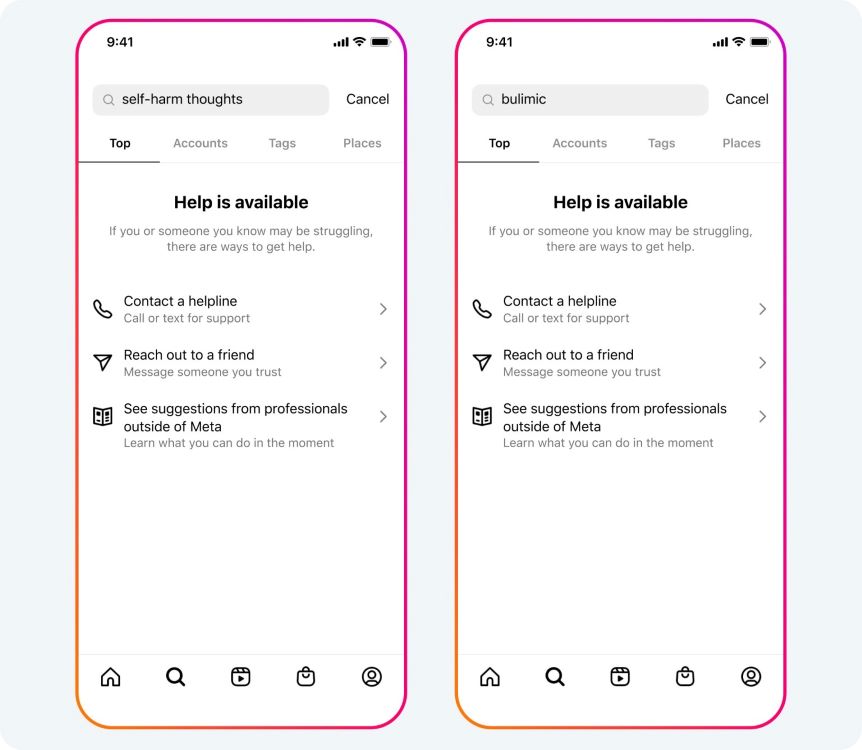

If a teen tries to dig up certain content on Facebook and Instagram, Meta is steering them toward expert resources like the National Alliance on Mental Illness. And teens won't even know if someone shares content in those categories because it's hidden from their view.

Meta already avoids recommending this type of content to teens in places like Reels and Explore. Now, it's kicking it up a notch and expanding the restriction to Feed and Stories, even when the content is shared by someone the teen follows.

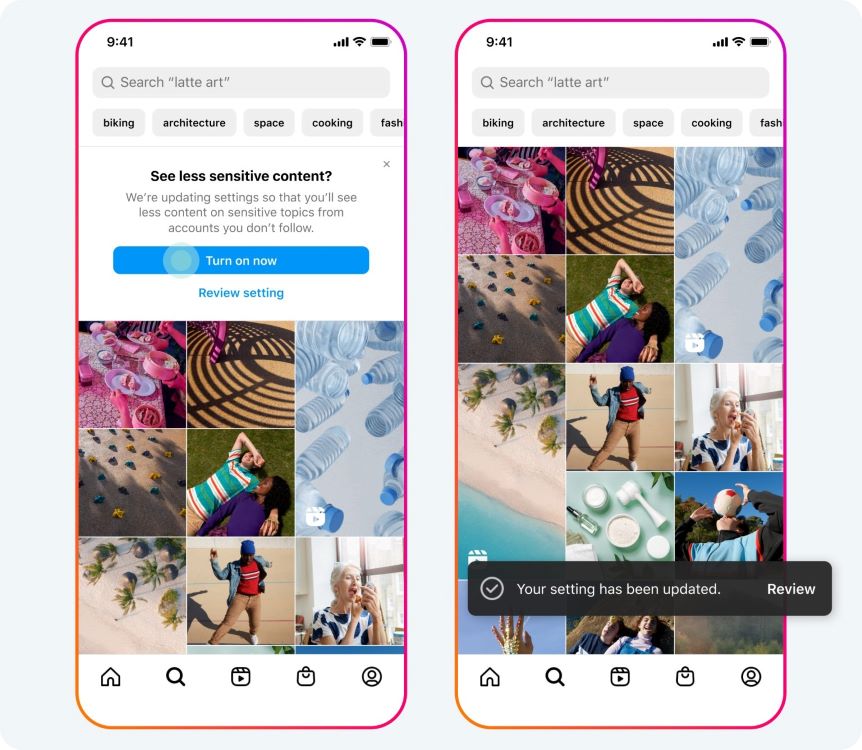

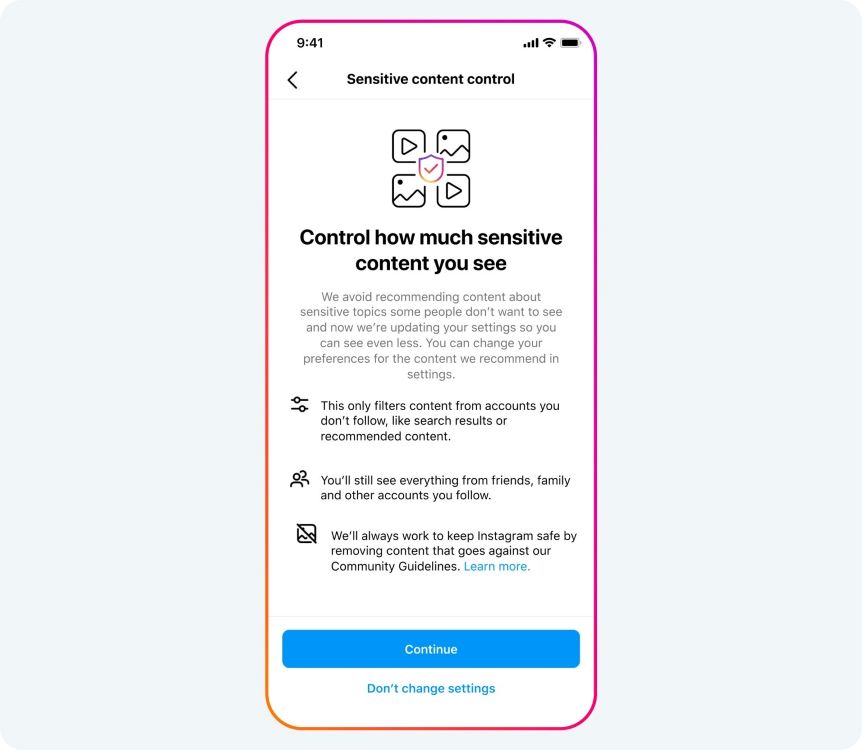

Of course, if teens want to loosen the reins, they can tinker with the settings themselves. That said, Meta will send notifications reminding them to lock down their privacy settings, which is meant to shield teens from unwanted messages and nasty comments. With one tap on that notification, a teen can switch to Meta's recommended settings.

Social media platforms are getting flak for not keeping kids safe from content that harms their mental health, so it's no coincidence that these changes are rolling out now. More than 40 states are dragging Meta to court, claiming its services are damaging the mental health of young users.

Mark Zuckerberg and executives from other tech giants are also gearing up for a Senate hearing on January 31, where they'll be answering questions about child safety.