What you need to know

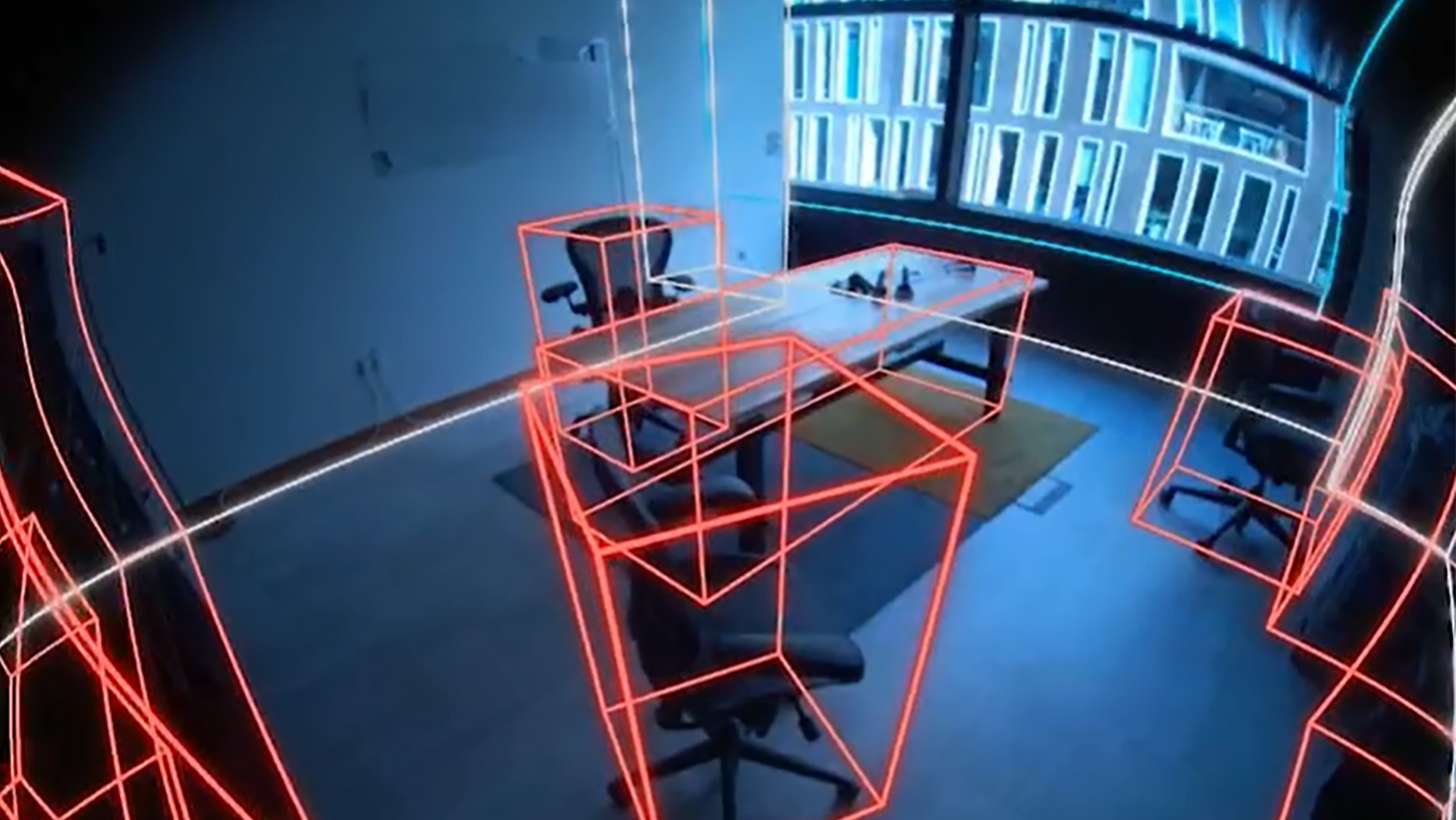

- A new Meta AI feature, SceneScript, can automatically map rooms and identify features such as walls, doors, windows, tables, and more.

- The AI was trained on 100,000 procedurally generated rooms and could end manual room mapping on XR headsets.

- Room data can also be fed to LLMs to answer complex questions about living spaces for consumers and businesses.

Meta researchers have debuted a new room scanning feature called SceneScript, which uses the power of AI to automatically identify a room's objects and architecture. While that sounds pretty scary, Meta is using it to improve AR and VR devices and make them more consumer-friendly.

A detailed post on Threads juxtaposes current room mapping techniques with SceneScript, showcasing how much easier VR and AR setup could be with the new model. Meta debuted manual room mapping on the Quest Pro, while the depth sensor on the Meta Quest 3 can automatically map floors, walls, and ceilings. The problem is that you still have to manually map couches, tables, windows, and other room features.

This new technique would use the depth sensor, along with cameras, to make a complete map of the room, including automatically mapped furniture and other architectural features.

The automatic room mapping technique on Apple Vision Pro means users don't have to manually map anything at all. Still, Apple's headset doesn't automatically identify objects with names and features; it treats them only as blank surfaces with which virtual objects can interact.

Meta's more advanced mapping feature means that this data can be fed into an LLM like Meta LLaMA, which can then be asked questions about the room. One research example, "How many pots of paint would it take to paint this room?", helps illustrate the value of such data, particularly for future AR glasses.
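To make the paint question concrete, here is a minimal Python sketch of the arithmetic that mapped room data makes possible. Everything in it is an assumption for illustration: the `Wall` and `Opening` types, the room dimensions, the coverage rate of 10 square meters per liter, the 2.5-liter pot size, and the two coats. None of it reflects SceneScript's actual output format or how Meta's model answers the question.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Opening:
    """A door or window cut out of a wall (hypothetical structure)."""
    width_m: float
    height_m: float


@dataclass
class Wall:
    """A flat rectangular wall (hypothetical structure)."""
    width_m: float
    height_m: float
    openings: list[Opening] = field(default_factory=list)

    def paintable_area(self) -> float:
        # Wall area minus the doors and windows that are not painted.
        cut_out = sum(o.width_m * o.height_m for o in self.openings)
        return max(self.width_m * self.height_m - cut_out, 0.0)


def pots_needed(walls, coverage_m2_per_litre=10.0, pot_litres=2.5, coats=2):
    """Round up to whole pots; the default values are assumed, not measured."""
    total_area = sum(w.paintable_area() for w in walls) * coats
    litres = total_area / coverage_m2_per_litre
    return math.ceil(litres / pot_litres)


# An assumed 4 m x 3 m room with 2.5 m ceilings, one door, and one window.
room = [
    Wall(4.0, 2.5, [Opening(0.9, 2.0)]),  # wall with a door
    Wall(4.0, 2.5),
    Wall(3.0, 2.5, [Opening(1.2, 1.0)]),  # wall with a window
    Wall(3.0, 2.5),
]

print(pots_needed(room))  # 32 m² of wall, two coats, so 3 pots
```

The point is that once walls, doors, and windows arrive as labeled objects with dimensions, a question like this reduces to simple geometry rather than guesswork.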

Meta used the Aria Synthetic Environments Dataset, a collection of 100,000 procedurally generated virtual rooms, to train the AI so it can understand how rooms are laid out and how to properly identify objects. This helps it handle all sorts of different layouts, decor types, and furniture variations, and still map a room even if things are moved around.

Meta hasn't revealed whether or not this will be coming to the Meta Quest 3, but it's entirely possible that such an upgrade could be included in one of the regular monthly software updates. We could also see such a feature in the upcoming Meta Quest UI overhaul.