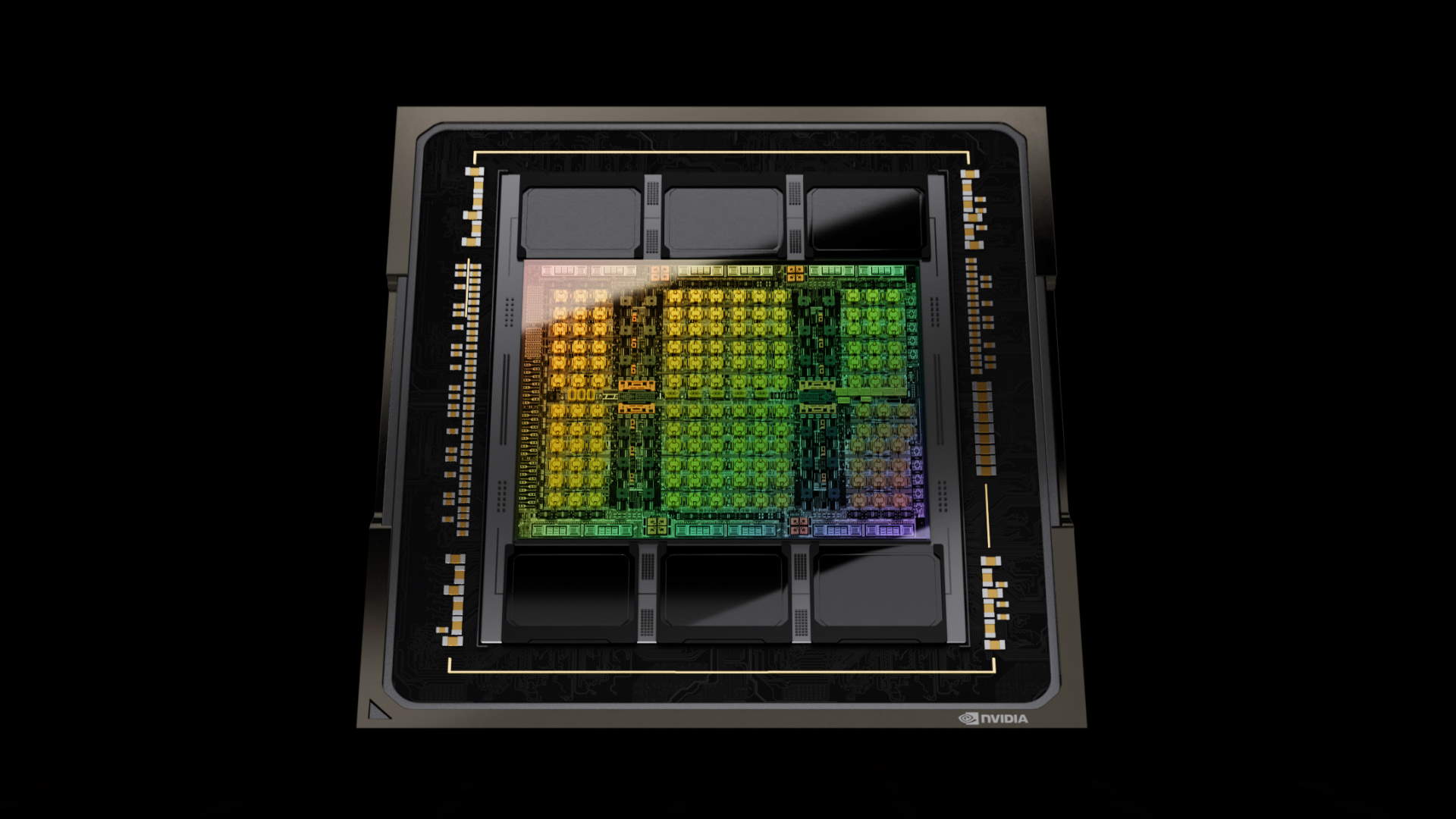

Remember when we reported some market data indicating Meta had snapped up 150,000 of Nvidia's beastly H100 AI chips? Now it turns out Meta wants to have more than double that at 350,000 of the big silicon things by the end of the year.

Most estimates put the price of an Nvidia H100 GPU at between $20,000 and $40,000. Meta and Microsoft are Nvidia's two biggest H100 customers, so it's safe to assume Meta pays less than most.

However, some basic arithmetic around Nvidia's reported AI revenues and its H100 unit shipments suggests that Meta can't be paying much, if anything, below the lower end of that price range. At $20,000 apiece, 350,000 H100s works out to roughly $7 billion worth of Nvidia AI chips over two years. So at the very least, we're talking about many billions of dollars being spent by Meta on Nvidia silicon.
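For anyone who wants to check the sums, here's a minimal back-of-envelope sketch. It assumes every unit is bought at a flat price at either end of the quoted $20,000 to $40,000 range; real contract pricing isn't public, and the constant names are ours, purely for illustration.

```python
# Back-of-envelope estimate of Meta's H100 spend, using the article's figures.
# Assumption: a flat per-unit price at the low or high end of the quoted range.
# Actual negotiated pricing is not public; this is illustrative only.

H100_PRICE_LOW_USD = 20_000   # lower end of the quoted per-unit estimate
H100_PRICE_HIGH_USD = 40_000  # upper end of the quoted per-unit estimate

UNITS_2023 = 150_000          # estimated H100s bought by Meta in 2023
TARGET_YEAR_END = 350_000     # Meta's stated target by the end of this year


def fleet_cost(units: int, unit_price: int) -> int:
    """Total cost in USD of `units` GPUs at a flat `unit_price`."""
    return units * unit_price


units_this_year = TARGET_YEAR_END - UNITS_2023  # purchases still needed
low = fleet_cost(TARGET_YEAR_END, H100_PRICE_LOW_USD)
high = fleet_cost(TARGET_YEAR_END, H100_PRICE_HIGH_USD)

print(f"Units still to buy this year: {units_this_year:,}")  # 200,000
print(f"Total at low-end price:  ${low / 1e9:.1f} billion")   # $7.0 billion
print(f"Total at high-end price: ${high / 1e9:.1f} billion")  # $14.0 billion
```

Even at the bottom of the range, the total lands at $7 billion; at the top, it doubles.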

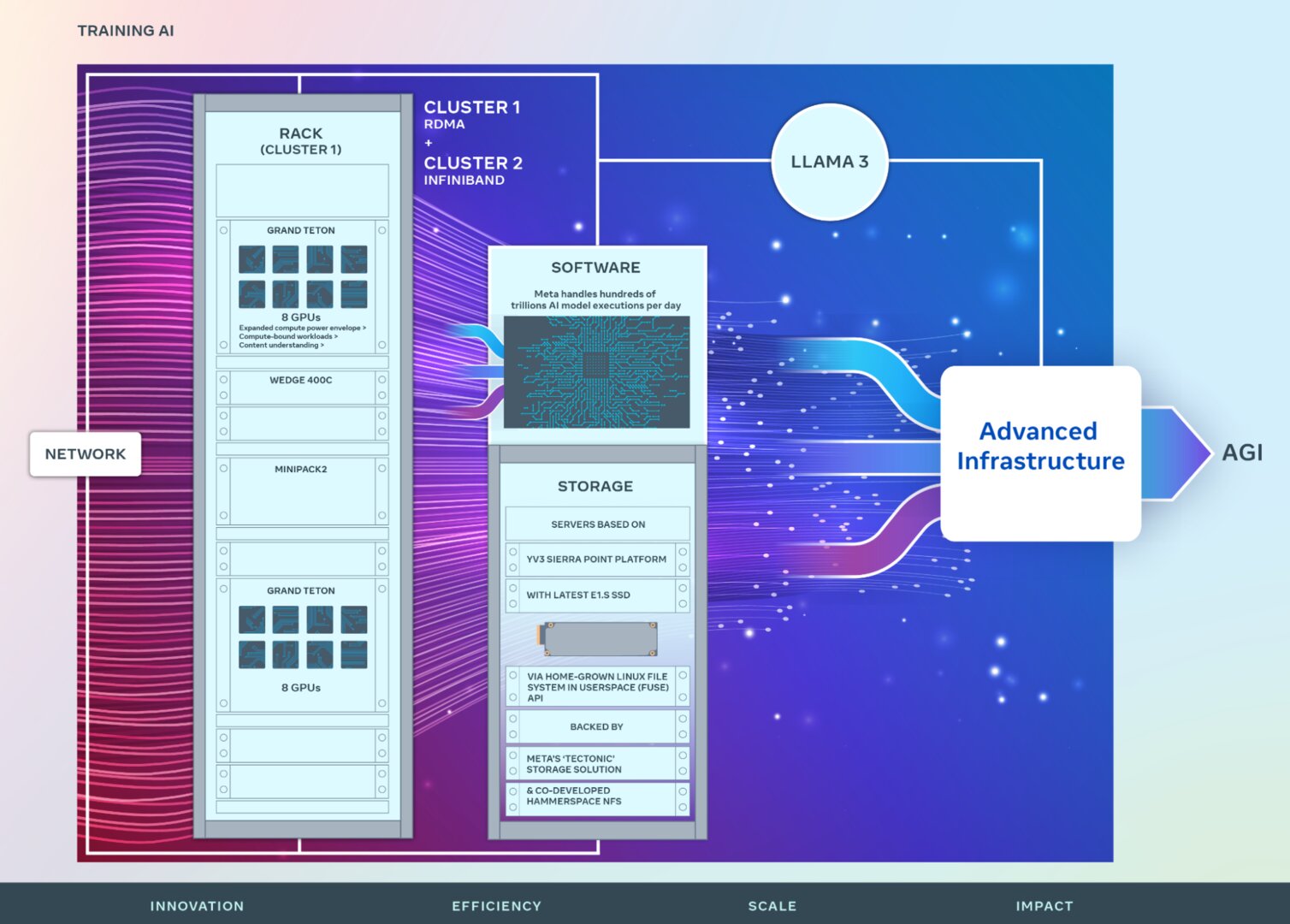

Meta has also now revealed some details (via ComputerBase) about how the H100s are implemented. Apparently, they're built into 24,576-strong clusters used for training language models. At the lower-end per-unit H100 price estimate, that works out at roughly $490 million worth of H100s per cluster. Meta has provided insight into two slightly different versions of these clusters.
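The per-cluster figure is a single multiplication. This quick sketch assumes every GPU in the cluster costs the $20,000 low-end estimate and ignores the servers, networking and power that come with it; the names are ours, for illustration only.

```python
# Per-cluster H100 cost at the low-end price estimate.
GPUS_PER_CLUSTER = 24_576     # cluster size Meta disclosed
H100_PRICE_LOW_USD = 20_000   # assumed flat low-end price per GPU

# GPUs only; excludes servers, switches, cabling and power.
cluster_cost = GPUS_PER_CLUSTER * H100_PRICE_LOW_USD
print(f"${cluster_cost:,}")  # $491,520,000
```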

To quote ComputerBase, one "relies on Remote Direct Memory Access (RDMA) over Converged Ethernet (RoCE) based on the Arista 7800, Wedge400 and Minipack2 OCP components, the other on Nvidia's Quantum-2 InfiniBand solution. Both clusters communicate via 400 Gbps fast interfaces."

In all candour, we couldn't tell our Arista 7800 arse from our Quantum-2 InfiniBand elbow. So all that doesn't mean much to us beyond the realisation that there's a lot more to knocking together an AI training cluster than just buying a bunch of GPUs. Hooking them up would appear to be a major technical challenge, too.

But it's the 350,000 figure that is the real eye-opener. Meta was estimated to have bought 150,000 H100s in 2023, so hitting that 350,000 target means buying fully 200,000 more of them this year.

According to figures provided by research outfit Omdia late last year, Meta's only rival for sheer volume of H100 acquisition was Microsoft. Even Google was only thought to have bought 50,000 units in 2023, so it would have to ramp up its purchases by a truly spectacular amount to even come close to what Meta is buying.

For what it's worth, Omdia predicts that the overall market for these AI GPUs will be double the size in 2027 that it was in 2023. So Meta buying a huge number of H100s in 2023 and even more of them this year fits the available data well enough.

What this all means for the rest of us is anyone's guess. It's hard enough to predict the impact this huge demand for AI GPUs will have on the availability of humble graphics-rendering chips for PC gaming, let alone how AI will advance over the next year or two.

As if these numbers weren't big enough, Omdia reckons Nvidia's revenues from these big data center GPUs will actually be double in 2027 what they are today. They won't all be used for large language models and similar AI applications, but a lot of them will.

It's interesting that Omdia is so bullish about Nvidia's prospects in this market, given that many of Nvidia's biggest customers are planning to build their own AI chips. Indeed, Google and Amazon already do, which is probably why they don't buy as many Nvidia chips as Microsoft and Meta.

Strong competition is also expected from AMD's MI300 GPU and its successors, and newer startups are trying to get in on the action, too, including Tenstorrent, led by one of the most highly regarded chip architects on the planet, Jim Keller.

One thing is for sure, however: all of these numbers do rather make the gaming graphics market look puny. We've created a monster, folks, and there's no going back.