Meta, Facebook’s parent company, has just been hit with an eye-watering €1.2 billion fine (about A$1.9 billion) for breaches of the European Union’s General Data Protection Regulation (GDPR).

Unfortunately for Meta and its shareholders, earlier penalties mean it now faces total fines close to A$4 billion.

Meta is often used as an example of how not to do privacy, but this isn’t a simple case of organisational greed or disregard for legislation. As is the case with most events of this nature, there’s a lot more going on.

Why was Meta fined?

The GDPR legislation, introduced in 2018, governs how data relating to citizens of the European Economic Area (which includes EU countries, as well as Iceland, Liechtenstein and Norway) can be used, stored and processed. In many cases this means undertaking all data-related activities within the European Economic Area.

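Exceptions are allowed, provided the protections for individuals’ privacy in the destination country are aligned with those under the GDPR. A formal finding by the European Commission that a country meets this standard is referred to as an “adequacy decision”.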

This sounds relatively simple: if you’re a German citizen, then your data should not be exported outside the EU. But organisations such as Meta operate on a global scale, and because users’ nationality and residential status often change, managing their data can be challenging.

In 2016, the EU-US Privacy Shield legal framework was introduced, enabling large organisations such as Meta to continue to process data for EU citizens in the US. This framework replaced the previous International Safe Harbor Privacy Principles, which were invalidated in 2015 after a complaint by Austrian privacy campaigner Max Schrems.


However, in 2020 the EU-US Privacy Shield was also invalidated following a determination by the Court of Justice of the European Union. The court essentially ruled the US did not offer personal data protections comparable to those offered under the GDPR.

One significant issue was that US surveillance laws, in particular the Foreign Intelligence Surveillance Act and Executive Order 12333, allowed for the potential interception of, or access to, data relating to European Economic Area citizens.

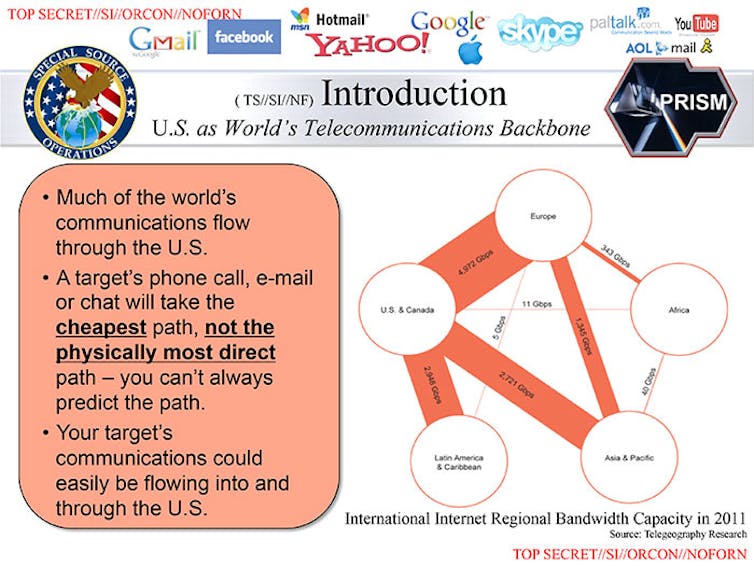

Some concerns related to disclosures made by Edward Snowden in 2013. These leaks identified a secretive US program code-named PRISM, which allowed the US National Security Agency to collect data across a range of popular consumer platforms.

Although the Court of Justice determination was delivered in 2020, it took until 2023 for the outcome to be announced due to legal challenges and conflicting viewpoints on the penalties.

The outcome led the Irish Data Protection Commission, the entity which regulates Meta across the EU, to impose the fine. The commission initially did not intend to impose the penalty, but was overruled by the European Data Protection Board, which acted on objections raised by yet another body – the EU/EEA Concerned Supervisory Authorities.

Apart from the €1.2 billion fine, it was determined Meta should stop transferring the personal data of European Economic Area citizens to the US within five months, and ensure EU/EEA user data stored within the US is compliant with the GDPR within six months.

What happens now?

While the reports may seem dramatic, it’s possible nothing will really happen (at least for a while) as Meta has lodged an appeal against the decision. Meta highlighted that even the Irish Data Protection Commission acknowledged the company was acting in good faith.

Once the appeal is under way, Meta and the EU may face court hearings lasting months. By the time a decision is made, a newly proposed EU-US Data Protection Framework could be in place (although a recent vote by members of the European Parliament may further delay things).

In a worst-case scenario for Meta, the tech giant could be forced not only to pay the fine, but also to address the large volume of European Economic Area user data held on US servers, and to establish a fully EU-based infrastructure to deliver Facebook functionality. This is a mammoth task, even for an organisation of Meta’s size.

It might prove impossible to extract years of data from Meta’s global network of servers and distribute it to appropriate regional locations. Imagine a Spanish citizen who currently lives in the US, for whom ten years of data were collected while in Germany, and who also spent time in the UK before and after Brexit!

If Meta does have to move data to different servers around the world, this may impact its ability to use these data to profile users. This could decrease its advertising effectiveness and the relevance of users’ Facebook feeds.

As for simply pulling Facebook services from the European Economic Area, Meta is unlikely to do so, as it would mean walking away from the billions of dollars of advertising revenue it receives from European users. As Markus Reinisch, Meta’s Vice President for Public Policy in Europe, has stated, “Meta is not wanting or ‘threatening’ to leave Europe”.

Why does it matter where data are kept?

The reality is most of us have neither awareness of, nor interest in, where our personal data are stored for the services we use. Yet where a company chooses to store our data can end up having a major impact on how the data are accessed and used.

Meta has chosen to store large volumes of data in the US (and elsewhere) for commercial reasons. This choice could be based on cost, convenience, technical requirements, or countless other reasons.

Some organisations deliberately distribute data in regional data centres that are geographically closer to their customers. This can help reduce the time it takes for customers to access their services.

For others, hosting data in a specific country can be a good selling point. For example, offering a guarantee of data sovereignty will appeal to those wanting to keep their data out of sight of foreign governments (or perhaps away from their own).

The EU has taken responsibility for ensuring the safe and secure processing of personal data belonging to citizens of the European Economic Area. In Meta’s case we’re yet to see how, or if, this will ultimately be done. While the company’s focus on privacy has improved in recent years, perhaps its next few steps will reveal how far this commitment goes.


Paul Haskell-Dowland does not work for, consult, own shares in or receive funding from any company or organization that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

This article was originally published on The Conversation.