From the Humane AI Pin flop to the Rabbit R1's incredibly dicey start, AI hardware has gone from being heralded as the future at CES 2024 to looking distinctly lackluster. But one gadget has managed to stand head and shoulders above the rest in form factor, style and capability: the Ray-Ban Meta smart glasses.

If Zuck & Co. play their cards right with impactful updates at Meta Connect 2024, there's a very good chance these specs become the de facto must-buy AI device. The wearable design makes sense, and it's stylish enough to blend into the background as a normal pair of glasses, rather than being a weird pin that was supposed to replace your phone or yet another gadget taking up pocket space.

And the current multimodal AI features in the glasses range from genuinely useful (like looking at a landmark and asking questions about it) to gimmicks you try once and forget (asking it to crack jokes about what it can see turns into an amusing roast).

But with all the pressure on Meta, it's time to prove that an AI wearable can be an essential piece of kit rather than a nice-to-have. So with that in mind, here are 5 features I'd want Meta to announce to get there.

1. Meeting recording and transcribing

We're already seeing this in a lot of devices, and I hope it comes to the Meta Ray-Bans. As the stellar audio in their video captures shows, the microphones on these specs are great. So being able to use them to record meetings and get CliffsNotes-style summaries would be huge.

This is one of a few things Meta could do to start removing the barriers to wearing these glasses every day. All you've got to do is look at your common everyday moments and ask how the Ray-Ban Meta specs could enrich them.

2. Integrations with other apps

At the moment, Meta is really stretching its legs in generative AI, providing knowledge and advice to the user. But the next real step forward is actionable AI. Rabbit was the first to flirt with this through its Large Action Model (LAM for short), and while that promise didn't come to fruition at the time, the principle was a sound one.

This could be Meta's moment to pounce, and it could do so by building some partnerships. It should take baby steps at first: for example, looking at an email on screen and asking Meta AI to extract the tasks from it and add them to a to-do list in Evernote.

To keep regulators and companies happy, this should always be done at the API level, using the official doors developers build into their apps rather than sending a bot to crawl a website. And this could be the start of turning the ideas you ask Meta for into action. Maybe you ask for recipe inspiration based on what's in your fridge and follow up by having it order any additional ingredients you need, or have it book everything a travel plan requires.

3. Make interactions more natural and straightforward

Right now, your prompts have to be pretty specific, starting with "Hey Meta, look and…" followed by whatever you want the Ray-Bans to look at. It's a simple structure for sure, but it's not how you'd naturally ask a question.

That's where better natural language recognition could help. Rather than needing to hear something like "look and tell me more about this building," the glasses could understand the way you'd normally ask: "what should I know about this building?"

This would make the AI more approachable and more helpful to the masses.

4. Understand personal context

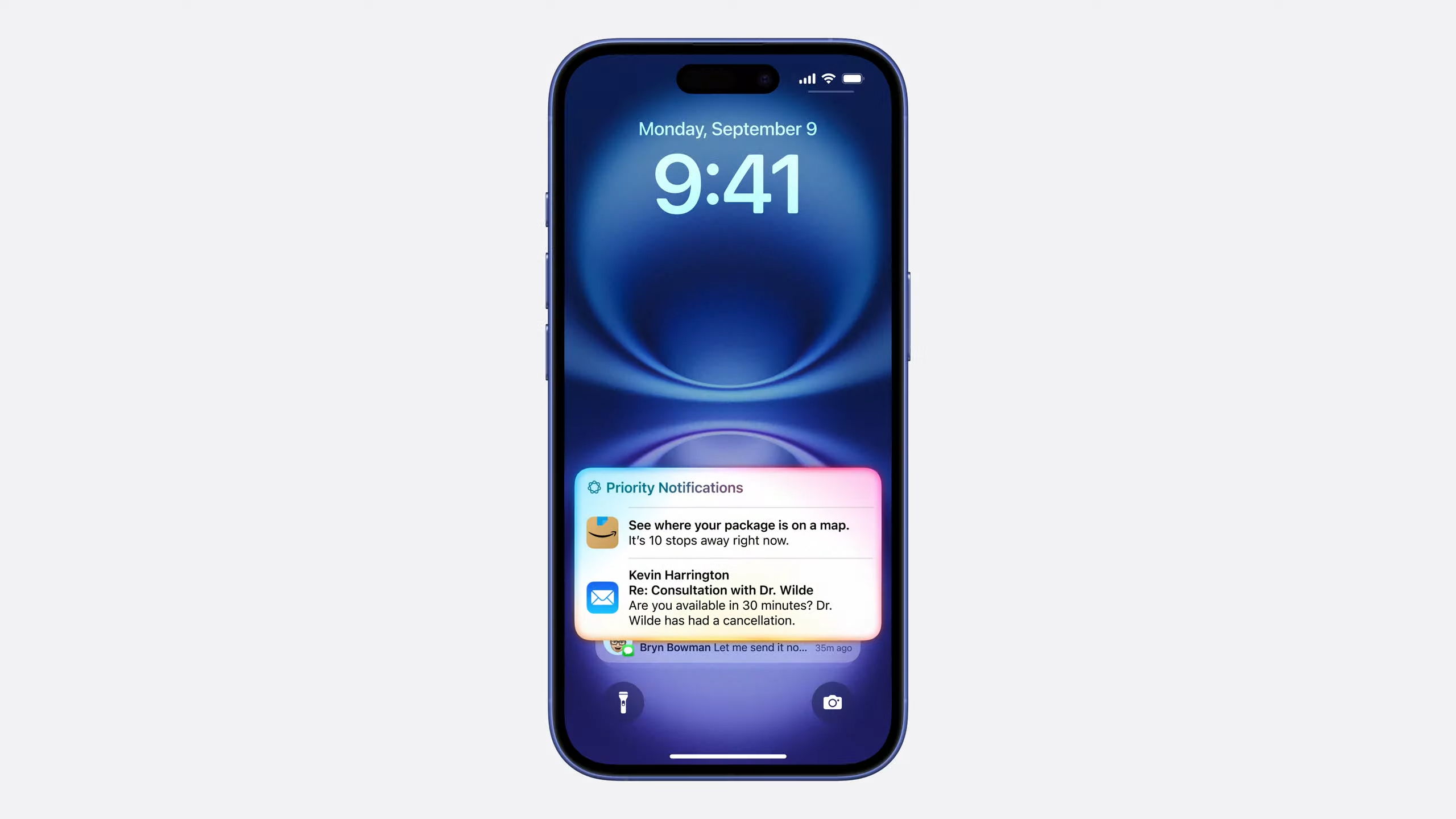

This is one of the real superpowers Apple Intelligence will bring to the table over the next few months. Sure, there are some generative AI elements like Genmoji, but the real power will come from using your personal context: running your data through its models to understand it and pull out the relevant information you're asking about.

Now, Meta's probably sitting on a treasure trove of your data, and Zuck ain't afraid to use it! Think WhatsApp conversations, social posts, IG stories and a wealth of bookmarked posts and links. Asking your glasses to remind you of any of this data would be one thing, but blending it with multimodal AI would be insane (in a good way).

Imagine getting déjà vu looking at something in a store; you ask your glasses whether you've seen it before, and the Ray-Bans tell you that you bookmarked it online at a far cheaper price. That would be genuinely useful, and it could even save you money.

5. Give 1 Second Everyday a run for its money

I know I talked about needing to make these more than a gimmick, and here I am turning to a rather gimmicky kind of feature. But let’s be honest, it would be a lot of fun.

What's the biggest obstacle for 1 Second Everyday? Remembering to take a clip. You get the notification, think "I'll do this later," and end up forgetting. Imagine if you could agree on a time each day for the Ray-Bans to capture a clip automatically, then have them stitch together a compilation reel for you at the end of the year. Pretty cool, right?

Outlook

Yes, I know there are a lot of privacy question marks around this and the other points above. Privacy is something Meta has (let's be honest) tended to ignore until governments step in to regulate it. But that doesn't change the fact that these obstacles will need to be cleared before we see a lot of this.

That being said, I'm definitely looking ahead to Meta Connect with more excitement than usual. In 2023, we got the glasses, and in 2024, Meta may make them the best piece of AI hardware you can buy.