Whether it’s Hulk Hogan as Paddington Bear, Paddington Bear as Hulk Hogan, R2-D2 getting baptized, or courtroom sketches of Snoop Dogg being sued by Snoopy, the images generated by the artificial intelligence model DALL-E mini have captured the imagination of the extremely online and careened around every corner of the internet.

An AI model that generates images based on a user’s text prompts, DALL-E mini was developed by AI artist Boris Dayma as a trimmed-down, open-sourced version of the DALL-E 2 model developed by artificial intelligence research company OpenAI. “To me, it’s a new form of imagination, and in some ways I’m always drawn to new ways of thinking about things,” says Ernie Smith, the proprietor of Dall-E News, a brand-new Twitter account that features the results of newsworthy events run through the app.

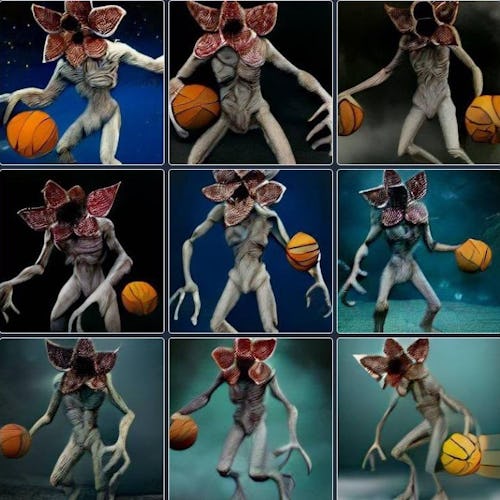

Dall-E News is one of a number of single-service Twitter accounts that have sprung up recently that either collate the best user-generated content put through DALL-E mini or post their own attempts to test the limits of absurdity using the model. The most popular of them all is Weird Dall-E Generations, which has nearly 638,000 followers and whose current pinned tweet illustrates “the Demogorgon from Stranger Things holding a basketball.”

The creator of Weird Dall-E Generations, who was unavailable for an interview, curates submissions from an associated subreddit with more than 50,000 users. The creator, who goes by @huhwtfthe on Twitter, also runs a similar account dedicated to weird Spotify playlists.

On the more niche side of things, there are tiny Twitter accounts devoted to AI takes on Sonic the Hedgehog and Funko Pops, plus one dedicated to depictions of Guy Fieri in the style of famous artists. All these accounts show how we try to make sense of the world when something new and shiny comes along. Of course, they’re all seemingly trying to create viral gold, too.

Smith, who has written for Input and runs Tedium, a newsletter of quirky ephemera and history, has a history of aggregation: He previously ran a Tumblr site, ShortFormBlog, that had 160,000 followers at its peak. Like many people, Smith is trying to push the limits of the DALL-E model to the breaking point, reveling in the unexpected links it makes between what we humans think are either obvious partners or totally incongruous ones.

Smith admits to being more interested in the examples where things go wrong. “As a kid growing up, I was the kind of person that would throw random Game Genie codes on an NES game to see what would happen,” he says. “DALL-E mini feels like a 2022 version of that.”

The gruesomeness of some of DALL-E mini’s results may well explain why we’re so engaged with it, says David White, head of digital education and academic practice at University of the Arts London, who included the model in a recent presentation on digital art. “It’s kind of fun and the images it creates are hilarious,” he says. “The ones I’ve seen that are popular are the ones that ram together two ideas, to combine contexts.”

White enjoyed the output of prompting the model to produce images of Vladimir Putin eating a bagel. “It came out seriously distressing,” he says. “Then I think it’s also popular because it’s created its own aesthetic that is specific, unusual, and uncanny.” (The results the model produces often feature warped, melting faces.) “It’s doing something human-like,” White adds, “but it clearly doesn’t know what it’s doing.”

Tony Henz, a digital marketing company head based in Spain, runs @dallerare. He says the Twitter account, which pumps out incongruous results from the model, is designed to show the strength of DALL-E mini. “I think artificial intelligence is the future of the internet, and DALL-E is capable of creating things that we could only imagine in our dreams,” says Henz.

He’s been following the development of AI-generated content based on user prompts since GPT-2, which generates screeds of text, was first released by OpenAI in 2019. He says he’s been anxiously awaiting the day that OpenAI makes the full-scale DALL-E available to the public. The open-sourced version developed by Dayma was the next best thing.

Henz says he turned a pre-existing Twitter account dedicated to cryptocurrencies called @cryptosake into @dallerare when he began to see people sharing DALL-E images online. The idea was to act as a depository for the best examples produced by users, alongside some of his own.

He regularly asks his employees for inspiration and crazy ideas to test the limits of the AI model. “If it is good enough we publish it on Twitter,” he says. “I guess I’m not an example to follow as a boss, but at least it’s fun.”

Heightened absurdity

Yet the filtering process is one key thing that’s often elided in the focused Twitter accounts, which post a near-endless stream of rib-ticklingly odd images. By sharing only the best (or the worst) output from DALL-E mini, we’re running the risk of overhyping its ability to merge two concepts into a harmonious result — or imbuing it with a sense of humor that it doesn’t necessarily have by highlighting the ways it goes wrong.

Smith admits to tilting the model toward the absurd by playing with the language he feeds it. One of his favorite examples that his account has shared is a depiction of Sarah Palin battling Santa Claus, based on the news that Santa Claus has made it onto the ballot in Alaska.

He also deliberately picks stories he knows the model can parse well in order to increase the likelihood of success. “A lot of what I pick tends to be stuff that I think might make good visuals, which means a lot of science, business, and celebrity news,” he says.

Smith has also held back other things generated by the model that he feels aren’t suitable for public consumption. “There are things I won’t share. There have been some questionable pictures of celebrities where I was just like ‘No way,’ such as when I put in ‘Jennifer Hudson EGOT,’” says Smith. (The exaggerated features the model produced were problematic, to say the least.) “The algorithm’s brokenness in many cases raises some important questions about bias.”

It’s an issue that Dayma himself has acknowledged on the DALL-E mini landing page, pointing out that the model is trained on unfiltered information from the internet, and thus on all the biases of us humans. “It’s not that long ago that you’d type ‘professor’ into Google image search and it’d be ancient white males,” says White. “No matter how smart the technology is, it’s just a mirror to our culture and its own failings.”

That said, most people putting in random strings of words to see what the model spits out aren’t that bothered by the underlying biases inherent in every AI model, or by how those biases mirror the issues in our society. They mostly want to see cool stuff, like the adorably twisted images featured in Henz’s favorite @dallerare tweet:

“We have a Pomeranian dog in the office that we call a ‘company puppy,’ and my employees gave me the idea of imagining him cooking methamphetamine,” explains Henz. “The result was really funny.”