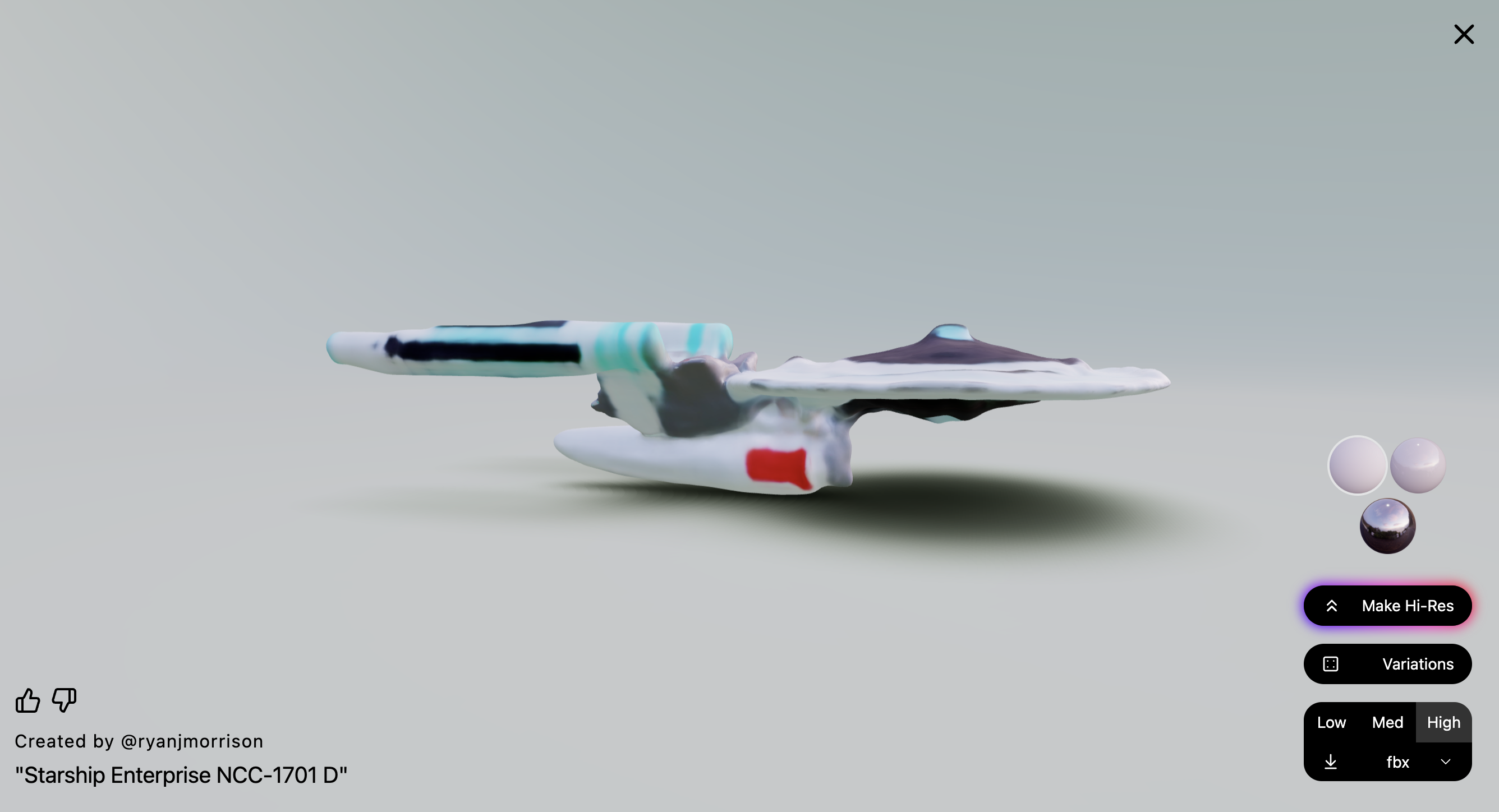

A new text-to-3D artificial intelligence model has been created by startup Luma Labs. The AI tool lets you turn a simple phrase or word into an object you can 3D print or use in a game.

Named Genie, the tool creates a range of outputs including files that can be opened in 3D modeling applications for editing, adding new textures or incorporating into other models.

The tool is available through the Luma website, as an iPhone app where you can also use the camera to capture 3D models of your own real-world objects, or through the Luma community Discord server.

With the website version, you can upscale the model you've just created to HD, download it in a range of formats, or have different versions generated if the first doesn't work. It is currently free to use.

How does it work?

Unlike text-to-image tools such as Midjourney or DALL-E, Luma Genie creates objects that you can interact with and see from all sides.

In under 10 seconds, it generates an item with materials, colors and everything else needed for printing or using in a production environment.

You will wait a bit longer if you first want to upscale the object within Genie, but if you’re happy with low resolution then you can go from entering text to downloading the object in seconds.

You can then export your model to tools like Blender, Unity and Unreal to render it in even higher detail and use it however you please, including for game development.

Why is this such a big deal?

Generative AI seems to be coming for every sector of the creative ecosystem. We have video, photo and even music models — 3D is the next logical evolution.

Platforms like Leonardo.ai already let you create textures for 3D objects, but with Luma Genie you're also able to generate the object itself.

In a statement announcing a $43 million Series B funding round, Luma's founders said this was about creating true multimodality in AI.

"We believe that multimodality is critical for intelligence," they declared, adding that to go beyond language models like GPT-4 from OpenAI and create more aware and capable systems, AI needs to understand the real world.

"We are working on training and scaling up multimodal foundation models for systems that can see and understand, show and explain, and eventually interact with our world to effect change," they said.

The origin of the Holodeck?

Genie gives us a hint of what may come in the future. Today it generates single objects, but we may see a situation where you can say "Genie, take me to Paris in the 1920s" and it will create a fully immersive 3D environment for your VR headset.

Many of the technologies we take for granted today drew inspiration from science fiction, and being able to create an immersive virtual environment from a simple instruction sounds a lot like Star Trek's Holodeck to me.