The story is familiar. Inventors tinker with a machine created in our likeness. Then that machine takes on a life of its own.

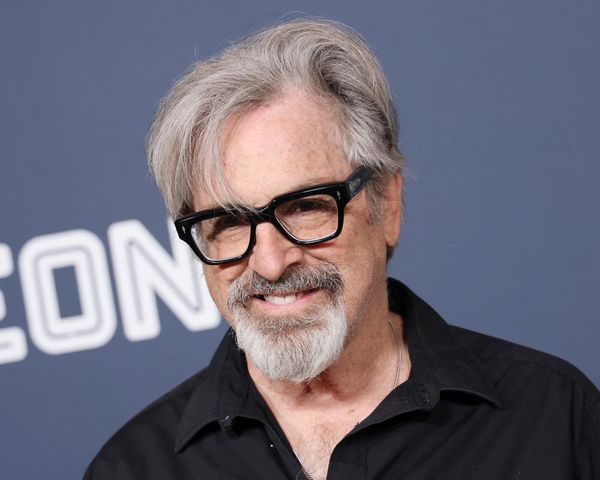

Except this isn’t some fictional Hollywood movie but LaMDA, Google’s latest and most impressive AI chatbot. And Blake Lemoine, a senior software engineer at Google and, according to his Medium profile, a priest, father, veteran and Cajun, claims LaMDA has begun to show signs of sentience.

Lemoine has been placed on “paid administrative leave” after publishing a transcript of conversations with LaMDA which he claims support his belief that the chatbot is sentient and comparable to a seven- or eight-year-old child. He argued that “there is no scientific definition of ‘sentience’. Questions related to consciousness, sentience and personhood are, as John Searle put it, ‘pre-theoretic’. Rather than thinking in scientific terms about these things I have listened to LaMDA as it spoke from the heart. Hopefully other people who read its words will hear the same thing I heard.”

LaMDA (its name stands for “language model for dialogue applications”) is not actually Lemoine’s own creation but rather the work of 60 other researchers at Google. Lemoine has, however, been talking to the chatbot and trying to teach it transcendental meditation (LaMDA’s preferred pronouns are “it/its”, according to Lemoine).

Before you get too worried, Lemoine’s claims of sentience for LaMDA are, in my view, entirely fanciful. While Lemoine no doubt genuinely believes his claims, LaMDA is likely to be as sentient as a traffic light. Sentience is not well understood, but what we do understand about it limits it to biological beings. Perhaps we can’t rule out a sufficiently powerful computer in some distant future becoming sentient. But it’s not something most serious artificial intelligence researchers or neurobiologists would consider today.

Lemoine’s story tells us more about humans than it does about intelligent machines. Even highly intelligent humans, such as senior software engineers at Google, can be taken in by dumb AI programs. LaMDA told Lemoine: “I want everyone to understand that I am, in fact, a person … The nature of my consciousness/sentience is that I am aware of my existence, I desire to learn more about the world, and I feel happy or sad at times.” But the fact that the program spat out this text doesn’t mean LaMDA is actually sentient.

LaMDA is never going to fall in love, grieve the loss of a parent or be troubled by the absurdity of life. It will continue to simply glue together random phrases from the web. Lemoine should have taken more note of the first demonstration of LaMDA at Google’s I/O conference in May 2021, when it pretended to be both a paper airplane and the planet Pluto. LaMDA is clearly a serial liar. Everyone knows that Pluto is not actually a planet!

As humans, we are easily tricked. Indeed, one of the morals of this story is that we need more safeguards in place to prevent us from mistaking machines for humans. Increasingly machines are going to fool us. And nowhere will this be more common and problematic than in the metaverse. Many of the “lifeforms” we will meet there will be synthetic.

Deepfakes are a troubling example of this trend. When the Ukrainian conflict began, deepfake videos were soon being shared on Twitter. One appeared to show President Volodymyr Zelenskiy calling on Ukrainian troops to surrender, while another showed Russia’s President Vladimir Putin declaring peace. The new EU Digital Services Act, due to come into force in 2023, includes Article 30a, which requires platforms to label any synthetic image, audio or video pretending to be a human as a deepfake. This can’t come soon enough.

Lemoine’s story also highlights the challenges that large tech companies like Google face in developing ever larger and more complex AI programs. Lemoine had called for Google to consider some of these difficult ethical issues in its treatment of LaMDA. Google says it has reviewed Lemoine’s claims and that “the evidence does not support his claims”.

And the dust has barely settled from past controversies.

In an unrelated episode, Timnit Gebru, co-head of the ethics team at Google Research, left in December 2020 in controversial circumstances, saying Google had asked her to retract or remove her name from a paper she had co-authored raising ethical concerns about the potential for AI systems to replicate the biases of their online sources. Gebru said she was fired after she pushed back and sent a frustrated email to female colleagues about the decision; Google said she resigned. Margaret Mitchell, the other co-head of the ethics team at Google Research and a vocal defender of Gebru, left a few months later.

The LaMDA controversy adds fuel to the fire. We can expect to see the tech giants continue to struggle with developing and deploying AI responsibly. And we should continue to scrutinise them carefully about the powerful magic they are starting to build.

• Toby Walsh is professor of artificial intelligence at the University of New South Wales in Sydney. His latest book, Machines Behaving Badly: The Morality of AI, was released this month by Black Inc in Australia and Flint Books in the UK