OpenAI may be moving away from numbers in the way it names its next-generation artificial intelligence models, or at least that's what a recent presentation in Paris suggests.

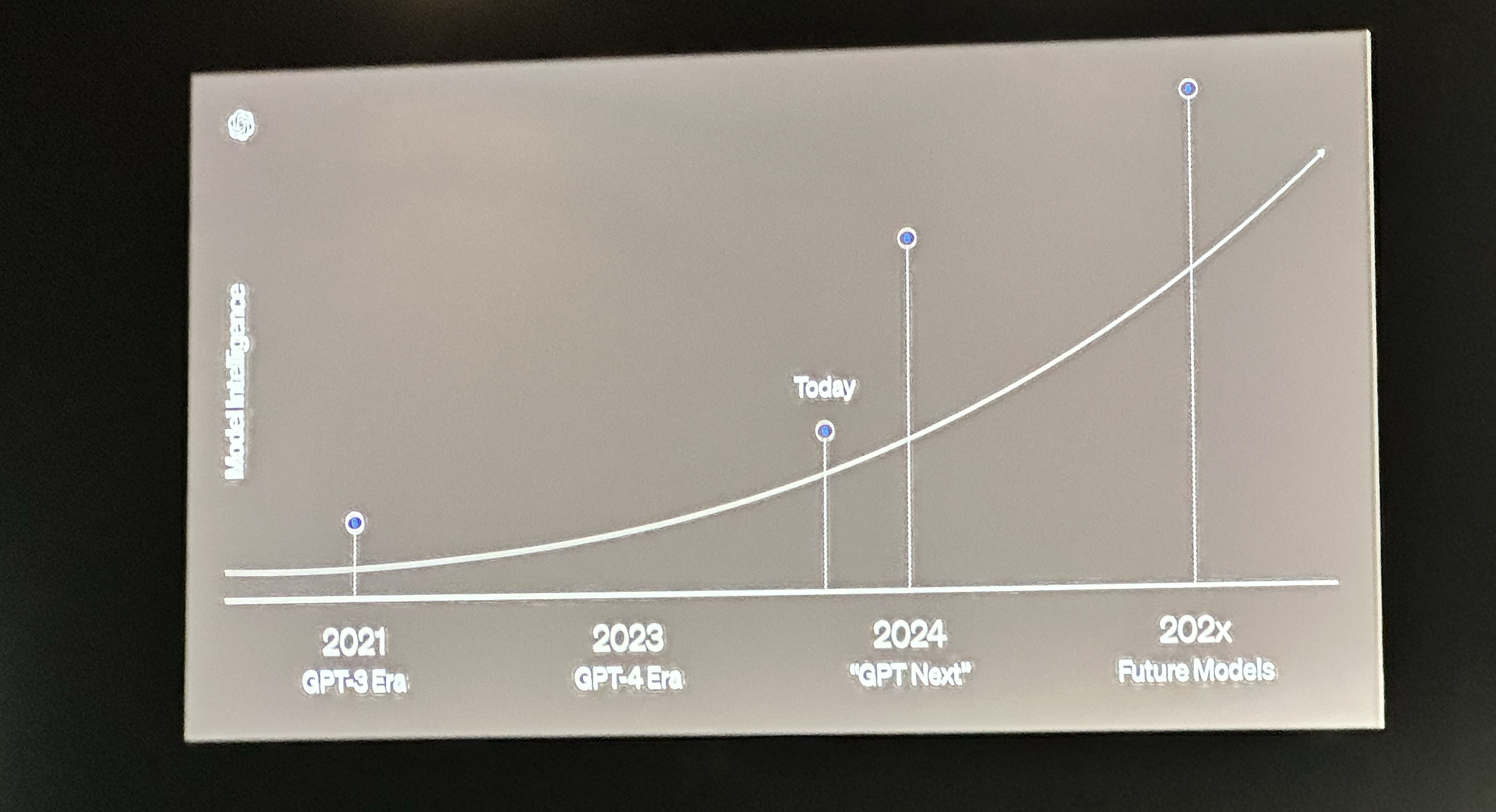

During a demonstration of ChatGPT Voice at the VivaTech conference, OpenAI's Head of Developer Experience Romain Huet showed a slide charting the potential growth of AI models over the next few years, and GPT-5 was not on it.

It showed the GPT-3 era, the GPT-4 era and "today" sitting between the GPT-4 era and GPT-Next. While I doubt the next-generation model will carry that moniker, the slide hints that the company is moving away from GPT-5 as a brand. This also fits with the fact that CEO Sam Altman has been cagey in recent interviews about when the model will come out.

What's in a name?

In the world of artificial intelligence, naming is still a messy business as companies try to stand out from the crowd while also maintaining their hacker credentials.

We have Grok, a chatbot from xAI, and Groq, a new inference engine that is also a chatbot. Then we have OpenAI with ChatGPT, Sora, Voice Engine, DALL-E and more.

OpenAI started to make its mark with the release of GPT-3 and then ChatGPT. ChatGPT was a step change over anything we'd seen before, particularly in conversation, and progress has been near-exponential since then.

With GPT-4, we saw a model with the first hints of multimodality and improved reasoning, and everyone expected GPT-5 to follow the same path. But then a small team at OpenAI trained GPT-4o, and everything changed.

Until last year, Altman would talk about GPT-5 being in training, but in the past few months, when quizzed on its release, he pivots, hedges and talks instead about "many impressive models" coming this year.

During his presentation on Wednesday, Huet even suggested we're going to see multiple sizes of OpenAI models in the coming months and years, rather than one size fitting all for ChatGPT and other products.

So what is coming next?

According to the slide shared by Huet, we'll see something codenamed GPT-Next by the end of this year, which I suspect is effectively Omni-2: a more refined, better-trained and larger version of GPT-4o.

This, the graph suggests, will be a noticeable but not groundbreaking improvement over what we have today, with the good stuff coming in the next few years.

During a recent safety update, released to coincide with the international AI Seoul Summit, OpenAI said it would spend more time assessing the capabilities of any new model before release, which could explain the lack of a date.

GPT-4o is already a step change in AI, going from reasoning across text only to natively understanding text, images and video. Any future models are likely to build on this, and to require more complex safety assessments.