Is consumer-grade AI a solution in search of a problem? Take Google’s Gemini. The latest iteration of its public-facing AI tech, Gemini was known as Bard when it launched back in February 2023. Since then, the chatbot has been constantly upgraded and iterated upon, and is now available as a free app or web interface that you can use to supplement or replace regular Google search.

So what should we do with AI? In a very real sense, this is down to you. We spoke to Google’s Serge Lachapelle, a Director of Product for Google Workspace. The French-Canadian software engineer has been based in Stockholm since the 90s, lured by its pioneering high-speed internet. In 2007, when Google acquired his video conferencing firm Marratech AB, Lachapelle became one of the founding members of the Google Sweden Engineering office.

Integral to the development of products like Google Meet, Lachapelle now works for Google’s Outbound Product team, ‘liaising with Google’s most important customers and partners.’ This makes him well placed to talk about the ways in which Google’s pivot to AI-powered search and data management will evolve. ‘What has happened in the past 18 months to make AI so important?’ Lachapelle asks rhetorically, before answering that ‘computers can now understand us for the first time. We can create truly collaborative software experiences. We’re going from telling systems what to do to using them on a journey, for example.’

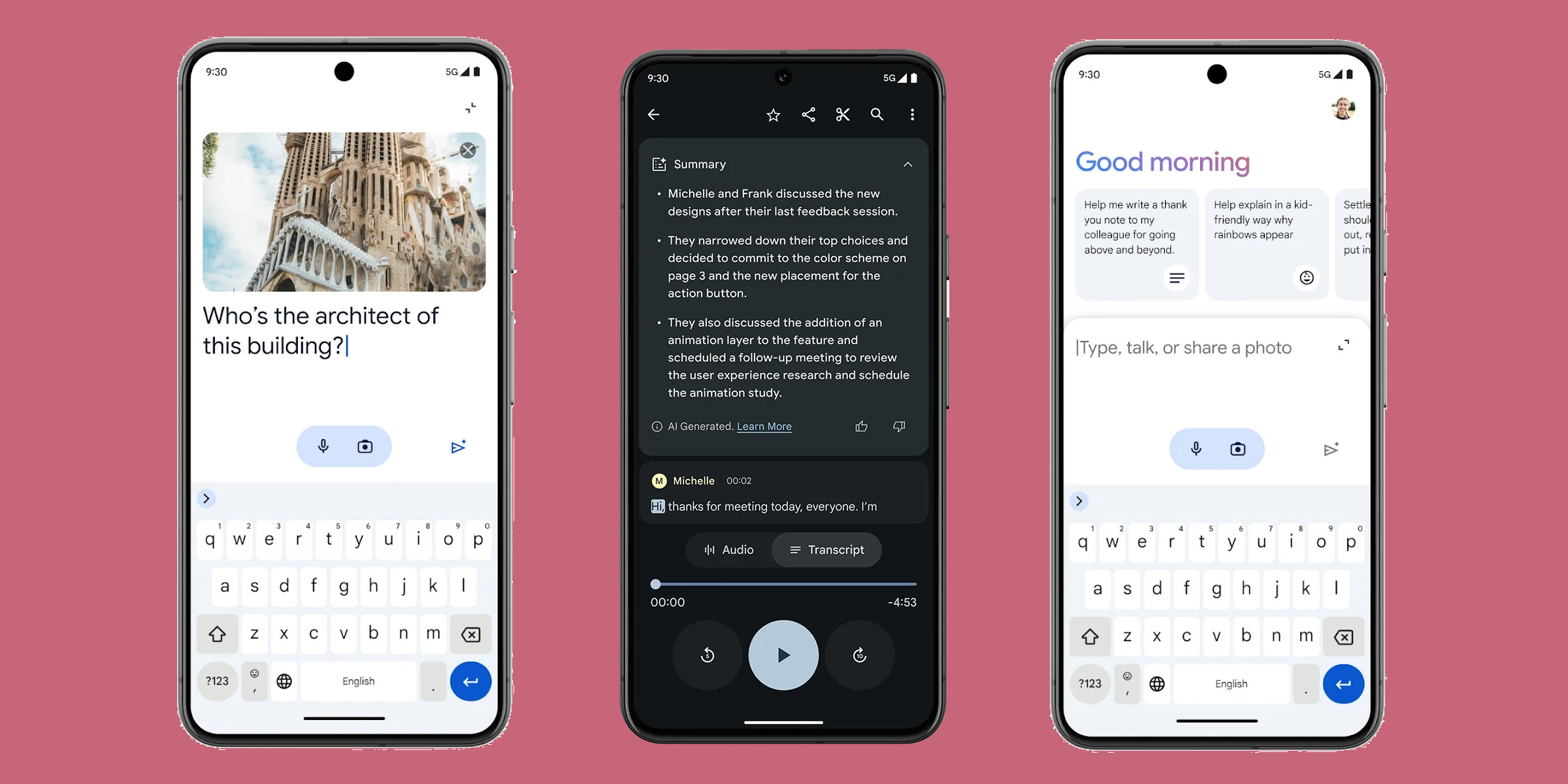

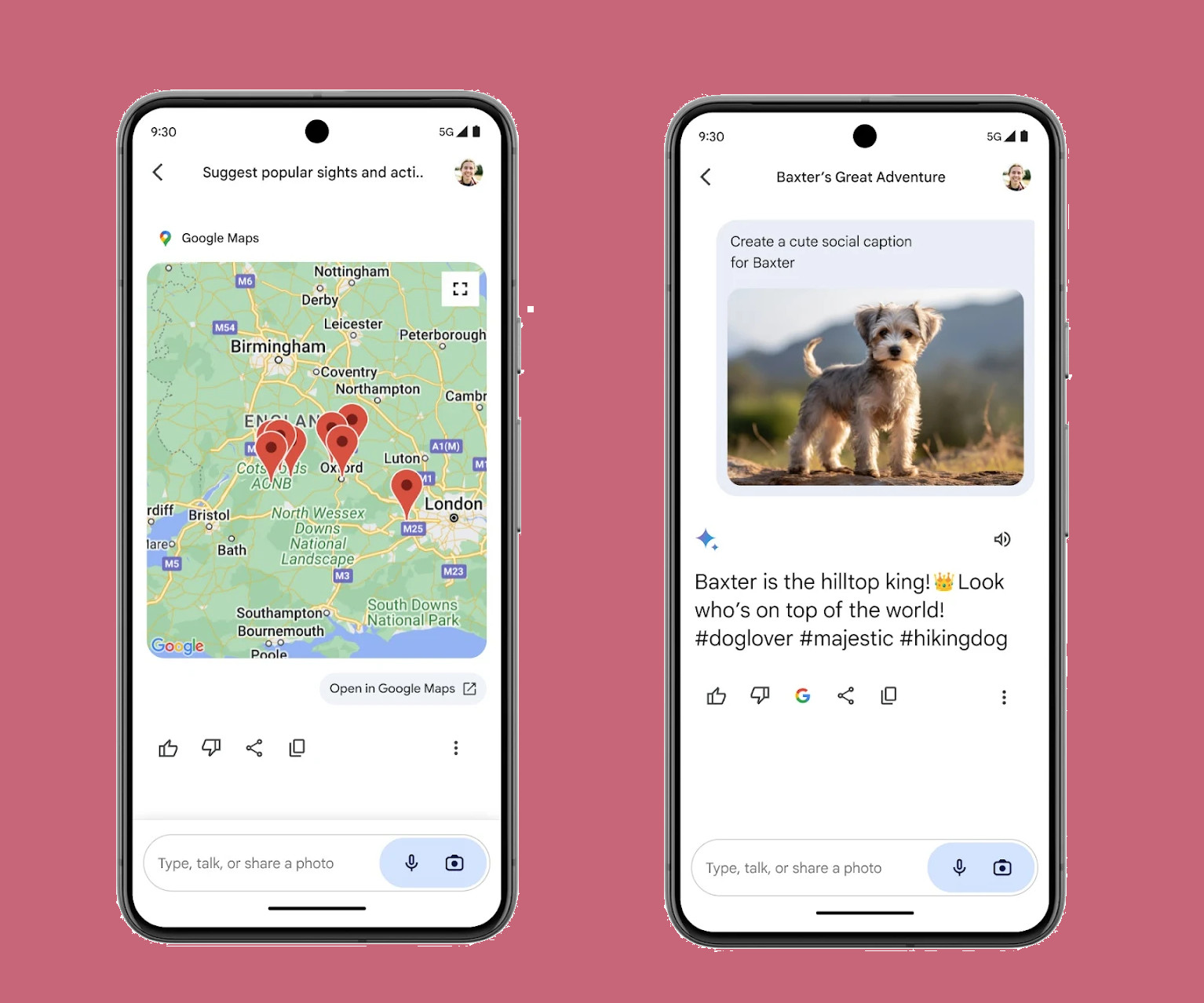

By way of illustration, Lachapelle uses Gemini to augment a hand-written packing list for a hypothetical family holiday. After he photographs the list with his Google Pixel, Gemini decodes his handwriting and generates a neat list of things to bring, supplementing what is already there with suggestions for extra items based on the initial prompt (which specified a young family taking a few days’ holiday). ‘We’re trying to highlight the type of services we can offer,’ Lachapelle says, adding that ‘we’re at the very, very beginning’ of what AI-powered chatbots like Gemini can do.

In some respects, Google is playing catch-up, having been caught on the hop by ChatGPT’s rapid rise to global prominence after its launch in late 2022. Microsoft and Apple have also gone all-in on AI, but each company’s approach seems to reflect its pre-existing values and aspirations. Apple is doubling down on privacy, for example, while Microsoft seems to think that AI equates to a form of creative hand-holding. Google, however, is all about delivering answers.

The packing list is a case in point. The software effectively combines handwriting recognition with pre-existing, context-specific online content, such as other people’s packing lists and travel recommendations, parenting blogs and trending products, and packages the result up in a friendly list format. Interestingly, Lachapelle prefaced his prompt with the words ‘You are an amazing assistant,’ both prepping the AI for the type of response required – a helpful list of suggestions – and perhaps also showing an unconscious deference to the power of this unseen ‘ghost in the machine’.

The ins and outs of AI are largely impenetrable to the layperson and it’s impossible not to be slightly sceptical about the way in which the tech giants are operating. ‘Innovations’ are announced, then lightly walked back in the face of concerns about privacy, security and intellectual property. All the while, our smartphones are getting smarter and AI content is becoming unavoidable.

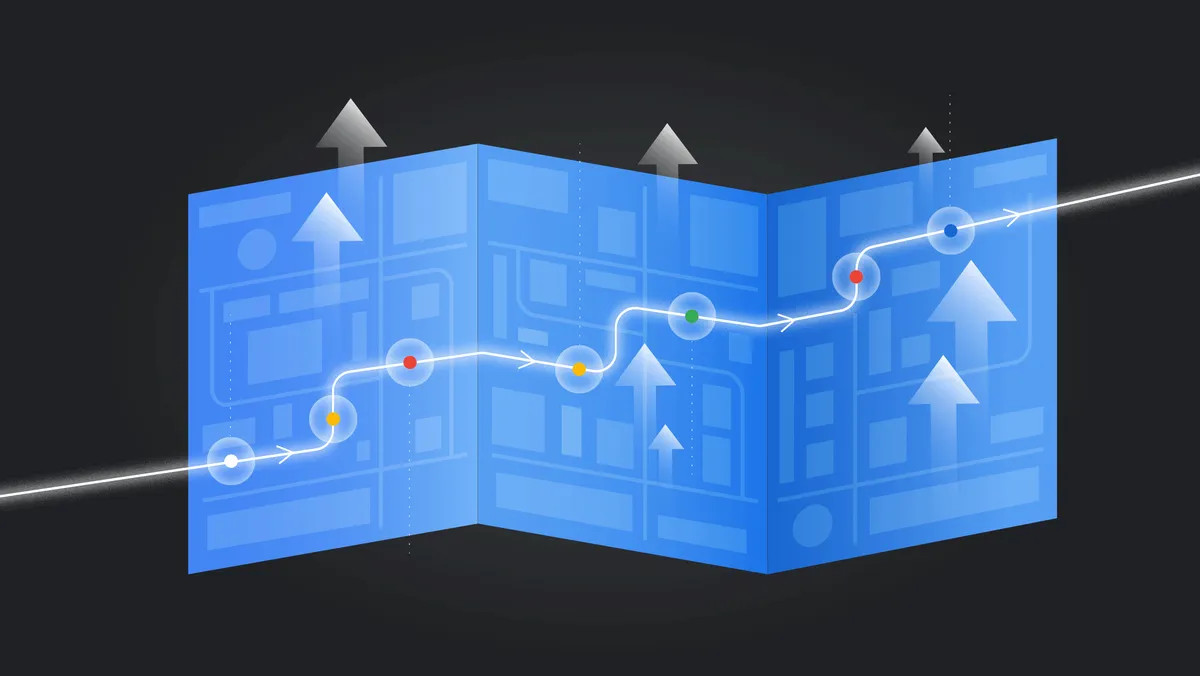

Lachapelle certainly has faith in the machine. ‘We’re asking Gemini to fetch some information from another app,’ he explains, as he experiments with getting the AI to retrieve travel itineraries for this hypothetical trip from Google Maps, parcelling everything up in an easy-to-digest, clickable document. Remarkably, the Google engineer had never explored this functionality before; it is all made possible by the heavy computational lifting being done behind the scenes.

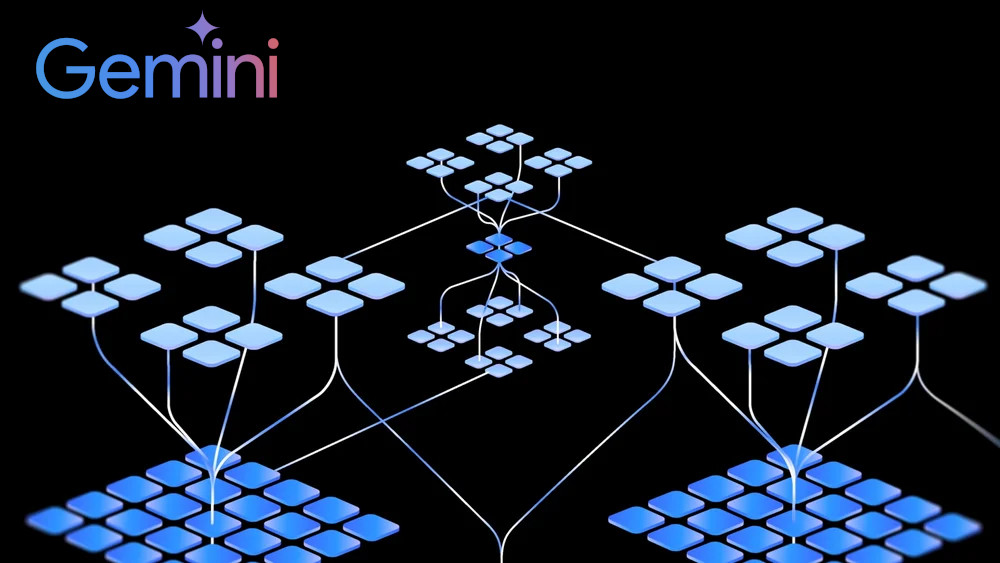

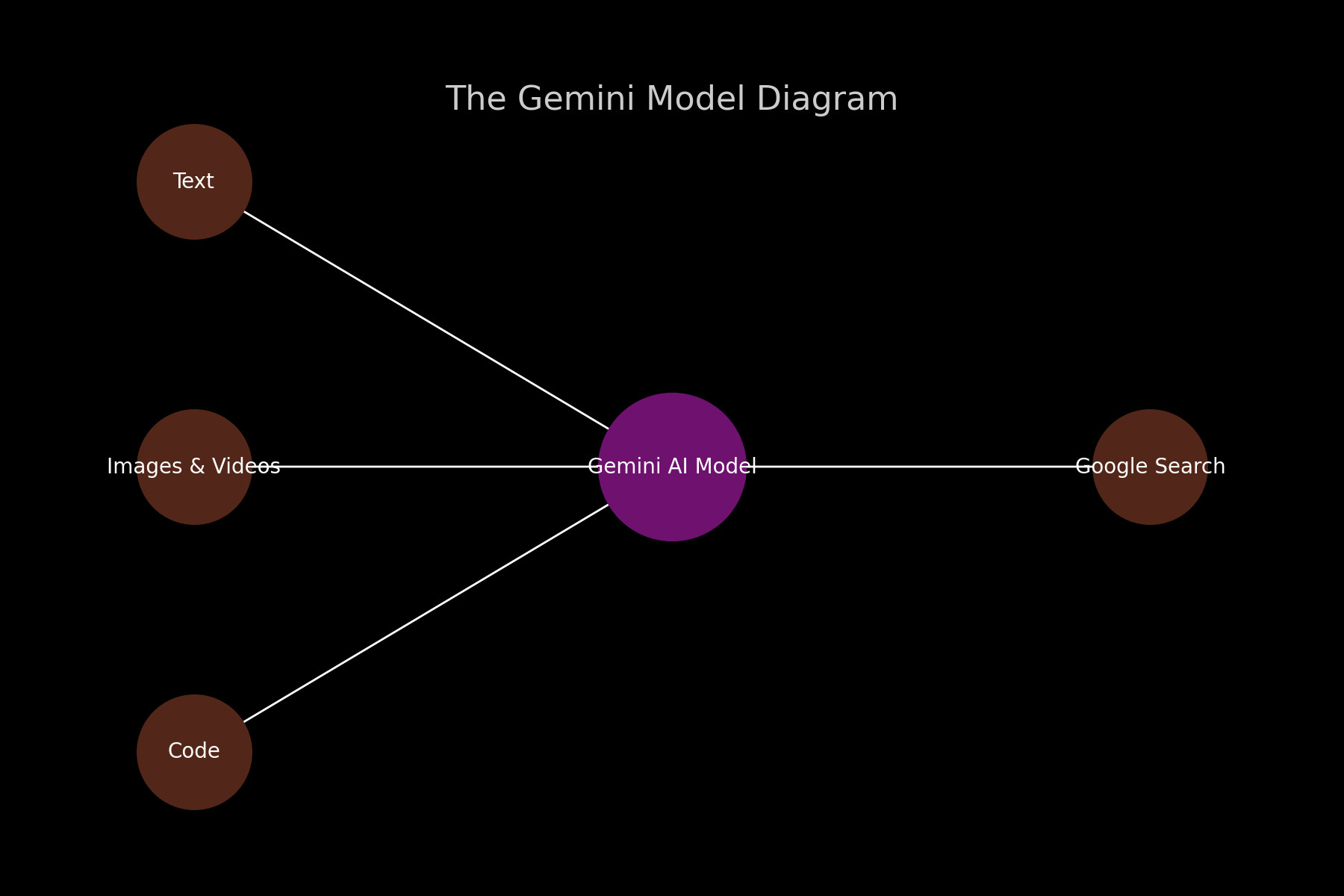

‘Before 2017-18 this wasn’t possible,’ Lachapelle admits. ‘It works because of the “transformers”, code that mediates the infinitely available possibilities and limits the responses you get back.’ Transformers are a type of deep-learning network, which in turn evolved out of the first-generation neural networks that could be trained on relatively simple tasks like image recognition or removing background noise from a video call.

And all this is available from your existing devices, as AI is increasingly baked into our everyday technology. Thus far, attempts to squeeze AI into dedicated hardware have failed – the ill-fated Rabbit R1 and Humane’s AI Pin haven’t (yet) lived up to their billing. Instead, we’re reverting to the devices we already have on hand: our smartphones, tablets and laptops.

Google wants us to think of Gemini as a benign assistant, an unobtrusive but proactive helper that works quiet magic in the background. ‘I used to think that cloud computing and mobiles, etc., would make us more productive, but they’ve just made us busier and busier,’ Lachapelle admits. ‘But I do believe this technology will give us back some space.’

But at what cost? The less than palatable truth is that AI’s rampant energy consumption isn’t yet justified by the amount of time and effort it saves. At consumer level, where AI is being used for auto-generating shopping lists, itineraries and summaries, or even conjuring up novelty images (not in Gemini’s wheelhouse just yet), it’s hard to square the computational crunching required with the results.

Even when AI is scaled up to solve important logistical issues for companies and organisations, the energy demands will follow. A recent report showed that Google’s greenhouse gas emissions have increased by nearly 50% in the last five years, almost all down to the energy consumption of new AI-focused data centres. It’s not alone: Microsoft is seeing a similar spike in energy demand around the world.

Google wants Gemini to ‘help supercharge your creativity and productivity,’ but chances are that within a few years you’ll be using the technology without even realising it, as the code, transformers, networks and language models blur into existing search functionality. We asked Gemini about AI’s potential pitfalls in an attempt to get an answer from a primary source. ‘AI bias, job losses, privacy risks, and lack of human judgment could lead to unfair, unsafe, and unsettling consequences,’ it replied, choosing to skip over the energy question altogether. Perhaps we should just pack our own suitcases for the time being.