To make good on his vow that artificial intelligence issues will top his chamber's to-do list, Senate Majority Leader Chuck Schumer will have some computer science homework to do over the summer. But today, he's starting with the first of three closed-door Senate briefings, as announced in April. The last of these, Schumer said, will mark the first time Congress has ever had a classified briefing on AI.

In a Tuesday letter to colleagues, Schumer called on the chamber to "deepen our expertise in this pressing topic."

"AI is already changing our world, and experts have repeatedly told us that it will have a profound impact on everything from our national security to our classrooms to our workforce, including potentially significant job displacement," he wrote.

Responding in April to widespread public attention on the power of generative AI like OpenAI's ChatGPT program, Schumer said at the time that the briefings would advance a "framework that outlines a new regulatory regime that would prevent potentially catastrophic damage to our country while simultaneously making sure the U.S. advances and leads in this transformative technology."

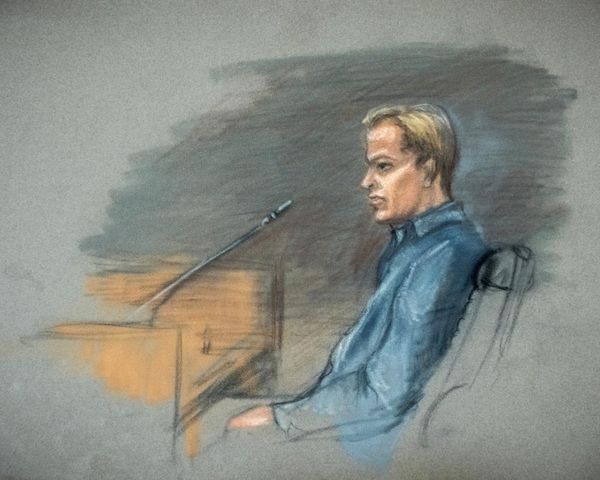

Following a May Senate hearing with testimony from OpenAI CEO Sam Altman and other industry and academic leaders, more US audiences began turning their attention to the sobering likelihood of AI-driven workforce layoffs and economic upheaval, concerns that AI industry CEOs began acknowledging more openly.

"The first briefing in the next few weeks will focus on the state of artificial intelligence today. The second briefing will focus on where this technology is headed in the future and how America can stay at the forefront of innovation," Schumer said in a June 6 update.

The state of AI in Congress right now

Since the start of the current Congress, nearly 70 bills have already been introduced that touch on AI, either by renewing previous laws on it or by offering regulatory firsts.


Building blocks for legislative oversight are coming together across bills like those from Colorado Democratic Sen. Michael Bennet. His ASSESS AI Act is a fledgling effort to map out the full scope of Congress' AI governance to-do list, starting with a task force of executive branch department heads and, more optimistically, those departments' chief privacy officers.

In a similar vein, Rep. Josh Harder, D-Calif., has already introduced the broad-strokes AI Accountability Act, which looks to build some familiar consumer protection mechanisms around end users of AI services.

A big part of the GOP's position (or at least its rhetoric) on tech this year has been rooted in protecting minors' data privacy online. The AI Shield for Kids Act, from Florida Republican Sen. Rick Scott, would throw up a parental consent barrier between AI-driven product features and minors. That would include AI chatbots like those used by mental health startup Koko, which targeted suicidal teens on Facebook and Tumblr.

Although AI is already being quietly used in political campaigning, Congress has only just begun efforts to tamp down on generative AI use in election ads. Another pair of bills, the House and Senate versions of the REAL Political Advertisements Act, are being carried by two Democrats, Rep. Yvette Clarke of New York and Sen. Amy Klobuchar of Minnesota. The legislation would require political advertisements to include a disclosure to the audience if the spot was generated with AI.

More bills are expected to arrive in the House through the summer. Among them is a package of regulations from Democratic Rep. Tony Cárdenas of California.

One lawmaker is @RepCardenas, who is assembling a package of bills to address AI concerns, including the need for AI-generated material to be clearly labeled. Mr. Winters points out that this will be "a difficult thing in regards to trust," especially w/ the upcoming election.

— EPIC (@EPICprivacy) June 12, 2023

And it's not all gloom and doom in AI bills, either. California Democratic Rep. Zoe Lofgren's SUPERSAFE Act would help the Environmental Protection Agency harness AI and supercomputing power to quickly find safe alternatives to toxic chemicals.

Finally, a whole suite of freshly filed AI bills focuses on military and defense regulation.

"The third, our first-ever classified briefing on AI, will focus on how our adversaries will use AI against us, while detailing how defense and intelligence agencies will use this technology to keep Americans safe," Schumer told members.

While Congress has already given wide berth to Defense Department AI development through budget bills, it only now appears to be laying down the fundamental ground rules for legislative regulation of AI-driven weapons use. A pair of House and Senate bills sponsored by Rep. Ted Lieu, D-Calif., and Sen. Ed Markey, D-Mass., would block any use of autonomous AI to launch nuclear weapons.

Rep. Jay Obernolte, R-Calif., is the only Member of Congress with a graduate degree in AI. In a recent Politico column, he soberly tackled a range of AI regulatory concerns, from military uses and data privacy to fake political ads and education system disruptions. So far, however, his AI-related bills have stuck closely to bolstering national cybersecurity.

As one might expect of the longtime veterans' advocate, Illinois Democratic Sen. Tammy Duckworth has already introduced a measure to standardize who gets access to any of the military's new in-demand AI jobs and training opportunities as they appear.

The bill aims to keep currently enlisted service members from being overlooked for what will likely be high-paying posts. But with 24% of active-duty troops going hungry right now, it also offers the public an early glimpse of how AI job displacement could have a sweeping impact on the military's more than 2 million government employees.

As a legislative topic, AI is already subject to the same anti-Chinese sentiment that has pervaded much of the tech legislation offered in the current Congress. Just as similar provisions appeared in the RESTRICT Act (the privacy-averse bill that became known as the TikTok Ban), a bill from Florida Republican Sen. Marco Rubio aims to block AI-related tech trade between the US and the Chinese Communist Party.

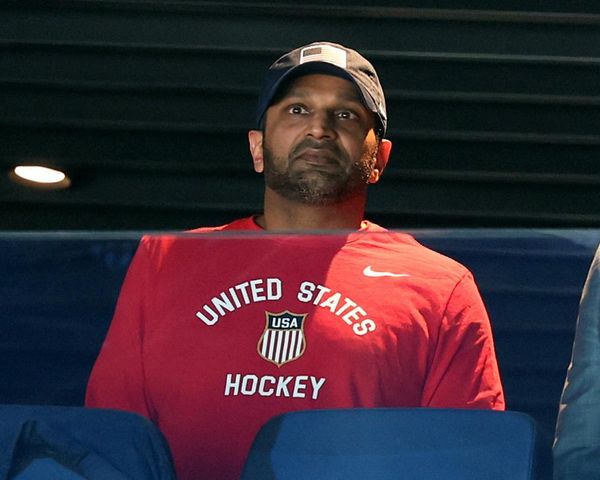

In a similar vein, Indiana Republican Sen. Todd Young is co-sponsoring a bill that would devote government resources to studying how competitive American AI development is in the global market — with an eye toward besting China in AI development. His bill, the Global Technology Leadership Act, is also being carried by Bennet, who previewed the bill in a recent NBC News interview.

"There is nobody — and I say this with complete certainty — no one in the federal government who can tell us how, nor frankly in the United States of America really who can tell us how, the U.S. stacks up in comparison to China in critical technologies like AI," Bennet told NBC News.

The Senate's closed-door information sessions should be wrapped up by the time Congress breaks for August recess, South Dakota Republican Sen. Mike Rounds recently told CNN.

Meanwhile, lawmakers are shuffling through private stakeholder events like the one held by Scale AI and the Ronald Reagan Institute on June 6. At the event, Democratic Senate Intelligence Chair Mark Warner, of Virginia, seemed to caution against merging top AI-industry players for the sake of national security.

.@scale_AI hosted our Gov AI Summit today in DC.

Senator Warner (Chairman of Senate Intel Committee):

“One of the questions I’d have is… [would] it be in the national security interest of our country to [merge] OpenAI/Microsoft, Anthropic/Google, maybe throw in Amazon.”

“We… pic.twitter.com/XdWEvxgtce

— Alexandr Wang (@alexandr_wang) June 6, 2023


It would be dangerous, though, to mistake the slow trickle of bills and the general lack of specific tech education among Congress' 535 voting members for a broader ignorance of AI in U.S. government.

AI research and development has become pervasive in recent U.S. military applications, and it's now written into the budgets for the country's much-needed government cybersecurity hiring push and IT modernization overhaul. The Federal Trade Commission, meanwhile, has been keeping tabs on the rise of AI in over-the-phone scams. And as recently as last year, the CHIPS and Science Act set aside substantial funding for AI research.

Perhaps no other government outfit rose to the nation's moment of tech need quite so adroitly this year as the National Institute of Standards and Technology. In late January, at the peak of the AI panic, the institute mic-dropped its AI Risk Management Framework, a playbook it had been honing in drafts since December 2021.

Though overlooked by most of the news world's politerati at the time, the agency's deft handiwork was the first to quell a Congressional AI fear-frenzy and restore the US' poise on the global tech stage. It also served as a flawlessly timed reminder to Uncle Sam: When you need engineering genius with a heart of public service, it's well-funded public science — not privatization — that delivers unsurpassed American nerd-craft.