We've just got an early look at some iOS 17 features, thanks to a surprisingly comprehensive post on Apple's official Newsroom.

The new features focus on accessibility, and Apple says they will arrive "later this year." That strongly suggests they'll ship with the new iPhone software, as well as iPadOS 17 and macOS 14, all of which we expect to see revealed at WWDC 2023 in June.

Live Speech

The first of the big new features we'll look at is Live Speech. This text-to-speech feature works during calls or in face-to-face conversations: you type a message into your iPhone, iPad or Mac, and the device reads it aloud. You can save messages for repeated use in a Phrases bookmarks section. It closely resembles Bixby Text Call on recent Samsung Galaxy phones, but with wider potential applications.

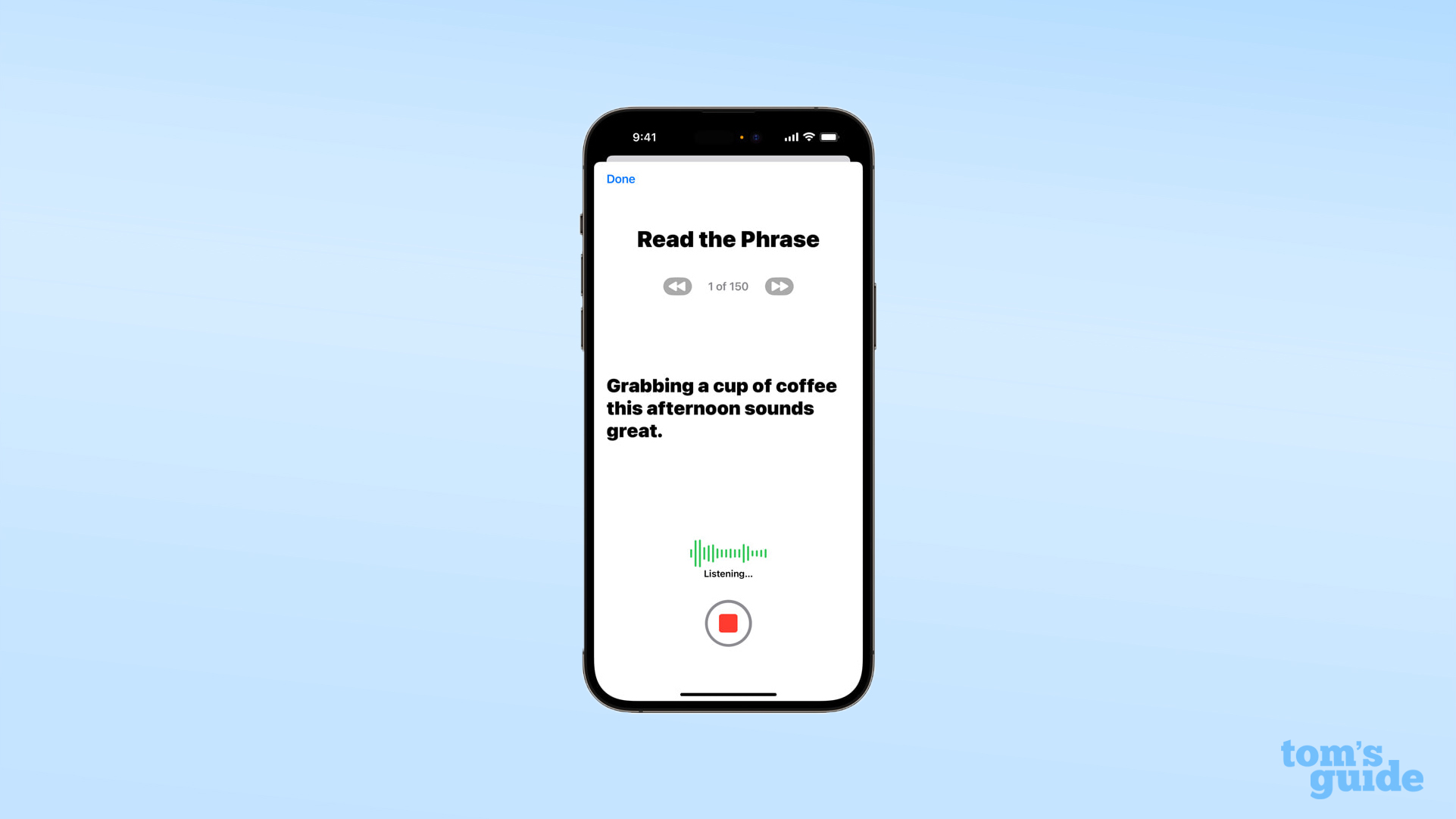

Personal Voice

You'll also be able to use Live Speech with another headline feature: Personal Voice. Apple pitches it as a way for people to preserve a copy of their voice even as their actual speech declines due to certain medical conditions.

Apple says that after you spend 15 minutes reading text prompts aloud to an iPhone, iPad or M-series Mac, the device can produce a synthesized copy of your voice, saved and processed on-device for privacy. You can then have that voice speak your typed messages, which sounds like it will feel much more natural than having a default Siri voice do the job.

Point and Speak in Magnifier

The iPhone's built-in Magnifier app is gaining "point and speak" support in its Detection Mode, aimed at users who rely on the app to navigate the world around them.

Your device still processes dense areas of text as usual, but it reads aloud only the area the user points to in the frame. That's useful for reading things like labelled buttons, as Apple's demo video shows with a user operating a microwave.

However, this feature requires "iPhone and iPad devices with the LiDAR Scanner," a sensor found only on the iPhone 12 Pro, later Pro iPhones and recent iPad Pro models. It sounds like owners of older or non-Pro iPhones won't be able to use this feature unless they upgrade.

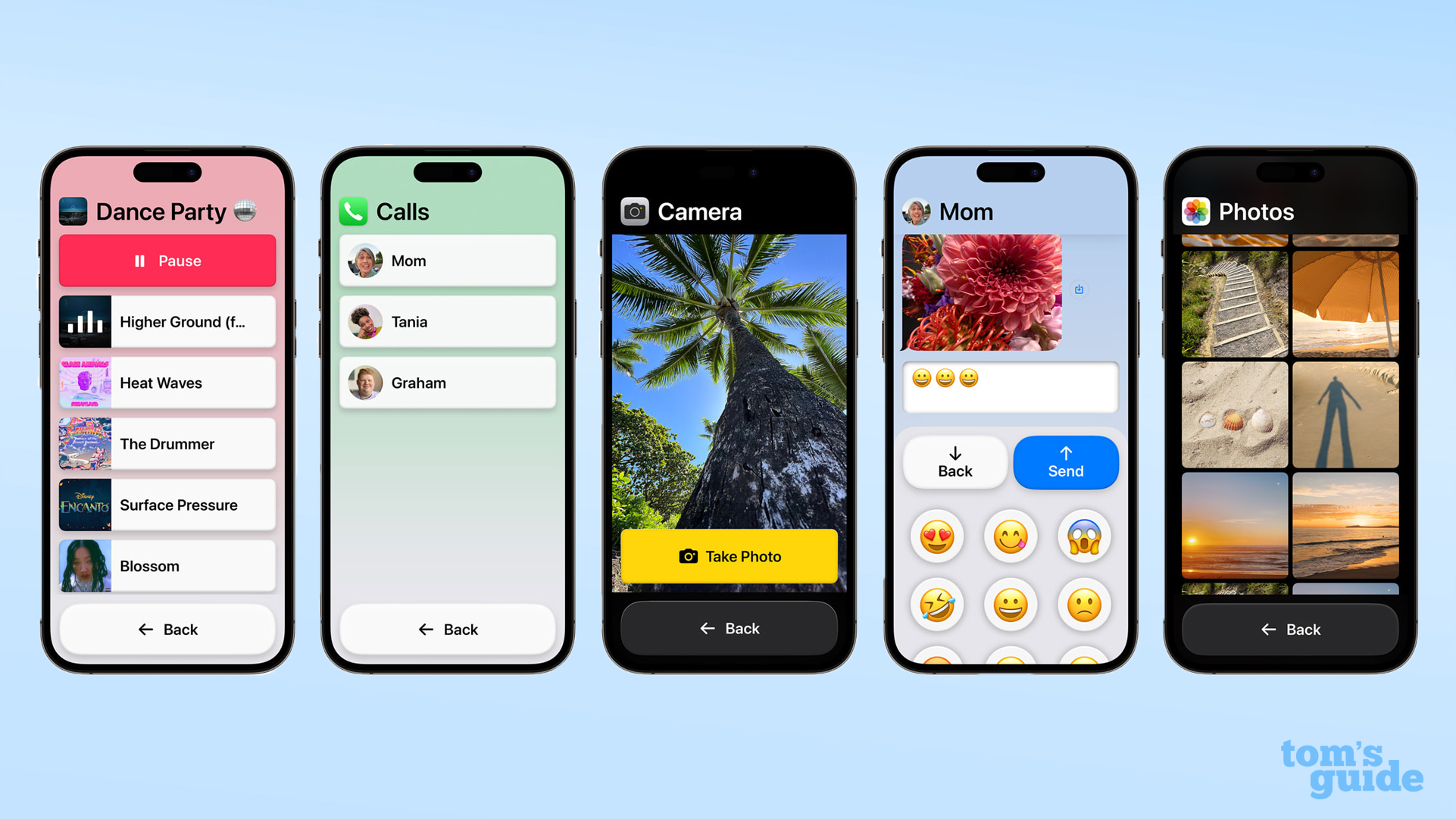

Assistive Access

The last of the big new additions to iOS is Assistive Access, a super-simplified interface with large, easy-to-read text and buttons that makes essential Apple apps like Camera, Photos, Music and Messages much easier to understand and navigate.

It also merges Phone and FaceTime into a new hybrid Calls app, so a less confident user won't have to work out which app to use to contact someone.

In this mode, the home screen can also use one of two new layouts: a simplified grid with double-sized icons, or a list of apps.

Other new iOS 17 features

Apple's updates include plenty of smaller accessibility improvements as well: hearing aid pairing for Apple silicon Macs, easier text size adjustment, adjustable Siri speaking speed, and phonetic suggestions in Voice Control that help you pick the right word among several that sound alike when dictating text.

These changes are welcome additions for users with disabilities who want to use their devices as comfortably and easily as anyone else. But even the average iPhone owner could find these new settings useful. Just think of the iPhone's Back Tap gesture, a popular way of adding an extra shortcut to your phone that's enabled through Accessibility settings, and you can start imagining creative ways to use these new features beyond their stated purpose.

As major as these updates are, we still suspect Apple is holding back some announcements for WWDC 2023. As well as more iOS 17, iPadOS 17 and macOS 14 features, we're hoping to see new hardware too, with a 15-inch MacBook Air and an all-new Apple AR/VR headset both rumored.