Just when I thought Intel Core Ultra Series 3 couldn’t get any better, Team Blue has dropped XeSS 3 multi-frame generation in a new graphics driver. And after testing it on my Asus Zenbook Duo with Intel Core Ultra X9 388H, my mind is blown.

While Nvidia maintains a comfortable lead in performance and fidelity with DLSS 4.5, Intel has adopted the same AI trickery to upscale the resolution and fill the space between rendered frames with AI-generated frames. And that leads to seriously impressive gaming on ultraportable notebooks.

Of course, in terms of raw horsepower, Team Green keeps a commanding lead; it has a dedicated GPU, after all. But for most players, what you’re getting here is more than enough, and you get the added benefit of vastly improved battery life, too!

So rather than rant on, I’ll answer the questions you have about it: how good are the frame rates now? Any latency worries? Any telltale signs of AI at work (like jagged edges and ghosting around objects)?

I decided to find out by putting it in one helluva mismatch of a competition: pitting it against the Acer Predator Triton 14 AI with RTX 5070. And the results? Well, there’s a lot here for Nvidia to get nervous about. Let’s get into it.

What is XeSS 3?

Think of it as Intel’s version of DLSS. XeSS (Xe Super Sampling) 3 combines frame generation and resolution scaling to make games run a lot smoother than the GPU could manage on its own.

Nvidia, AMD and Intel all learned a while ago that, rather than stuffing graphics cards with more and more transistors to brute-force rendering directly on the card, they could work smarter and bring AI into the mix to deliver more efficient generational performance gains.

In Intel’s case, two things are at play here:

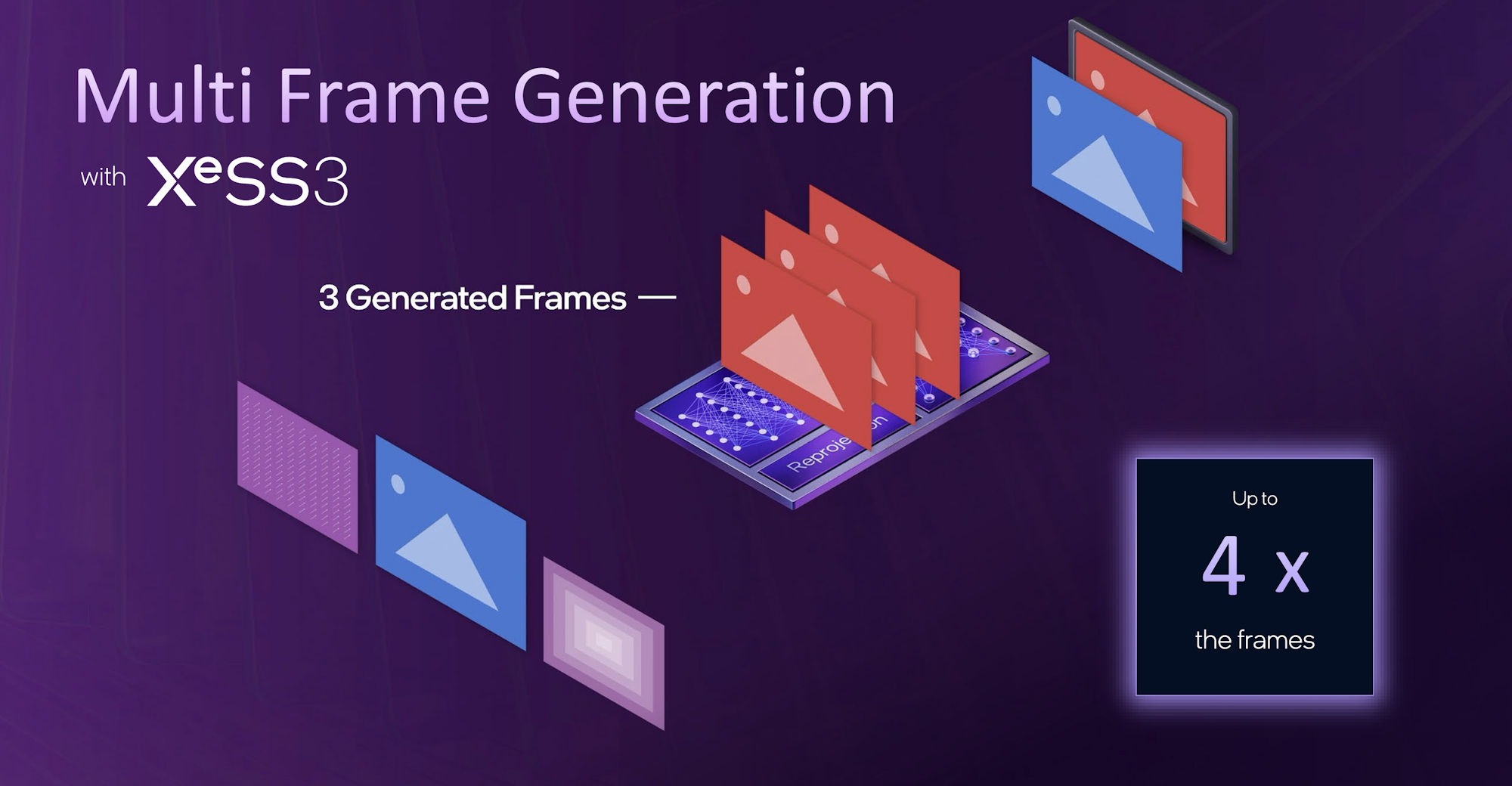

- Multi-frame generation: For every frame of gameplay rendered by the GPU, AI can generate up to three extra frames.

- Super Resolution: Your GPU will render the game at a lower resolution to save power, and then use AI to upscale that image to the resolution you want to play at.
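To put rough numbers on the two ideas above, here is a minimal back-of-the-envelope sketch in Python. It assumes an idealized model where generating frames costs nothing (real hardware spends GPU time on it, so actual gains come in lower), and the 0.5 per-axis scale factor is an illustrative assumption, not a published XeSS 3 preset. The function names are hypothetical.

```python
def output_fps(rendered_fps: float, generated_per_rendered: int) -> float:
    """Ideal displayed frame rate: each rendered frame plus its AI-generated extras.

    Assumes frame generation is free, which it is not on real hardware.
    """
    if not 0 <= generated_per_rendered <= 3:
        raise ValueError("multi-frame generation adds up to three frames per rendered frame")
    return rendered_fps * (1 + generated_per_rendered)


def render_resolution(target_w: int, target_h: int, axis_scale: float) -> tuple[int, int]:
    """Internal resolution the GPU renders before AI upscaling to the target.

    axis_scale is a hypothetical per-axis factor (0.5 = half width, half height).
    """
    return round(target_w * axis_scale), round(target_h * axis_scale)


if __name__ == "__main__":
    # 4X mode: one rendered frame plus three generated frames.
    print(output_fps(50.0, 3))
    w, h = render_resolution(1920, 1080, 0.5)
    print(w, h)
    # Share of native 1080p pixels the GPU actually has to render.
    print((w * h) / (1920 * 1080))
```

Under these assumptions, a 50 FPS base becomes 200 FPS in 4X mode, and halving each axis means the GPU renders only a quarter of the pixels it would at native 1080p.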

XeSS 3 vs DLSS 4: Features

Intel has worked hard to make XeSS 3 backward compatible with games that already support XeSS 2. But its list of supported games is completely dwarfed by Nvidia’s DLSS library.

On top of that, Team Green has been busy building out the capabilities of DLSS 4/4.5 around its new Transformer-based AI tech, with more features such as dynamic frame generation on the way.

| Technology | Intel XeSS 3 | Nvidia DLSS 4/4.5 |
|---|---|---|
| Primary focus | Accessible performance for iGPUs and Arc | Maximum fidelity and extreme frame rates |
| AI upscaling tech | AI-based super resolution upscaler | AI Transformer-based super resolution upscaler with ray reconstruction |
| Game compatibility | 200+ games (upscaling) / 50+ games (multi-frame generation) | 800+ games (upscaling) / 250+ games (multi-frame generation) |
| Frame generation | Up to 4X multi-frame generation | Up to 4X multi-frame generation* (*6X and dynamic frame generation coming soon) |

By the numbers

Running around Night City in Cyberpunk 2077 was already decent enough in our initial testing, at 67.1 FPS with XeSS 2. Watching that number jump to 217 FPS with one flick of a switch in Intel’s Graphics Software is frankly mind-bending.

And don’t get it twisted: this is an Asus Zenbook Duo running at a total power of 45 watts. There is a “burst” option that can reach 80 watts for short durations, but during gaming, it stays largely at 45W.

For context, the Nvidia gaming laptop I’m running these comparisons against (the Acer Predator Triton 14 AI with Intel Core Ultra 9 288V and RTX 5070) goes up to a combined power of 140W. So with that in mind, here’s what I was getting out of the box, with no tweaks to power settings.

Testing conditions, and one thing to note: all games were run at the same in-game settings (1080p, High), with DLSS and XeSS settings matched for parity. In some situations, this could cause CPU bottlenecks (the Intel Core Ultra 9 288V is more limited in performance than the Core Ultra X9 388H). Once Intel Core Ultra Series 3 gaming laptops with RTX 50-series GPUs arrive, the testing will be on a more even footing. Consider these results a frame of reference.

As you can see, the Predator Triton 14 AI takes a clear lead here, but turning on multi-frame generation brings the integrated GPU a lot closer to the RTX 50-series than I expected. And at frame rates this high, only the most dedicated esports competitors really need to worry about the higher number.

Of course, I expect this gap between an RTX 50-series gaming laptop and an Intel Core Ultra X9 388H system to widen once you start raising the resolution (think 1440p). Every gaming test Team Blue showed me at CES 2026 ran at 1080p. That is for another test in the future, though!

Ultimately, this is Intel’s key to jumping ahead of AMD, though putting it up against Ryzen AI Max would be an unfair comparison, given that silicon is definitely stronger than Intel’s. That being said, this does put AMD firmly on the back foot, and given the rumors that Team Red could be stuck on its RDNA 3.5 iGPU architecture until 2029, it could stay that way for years… Yikes.

Visual impressions

OK, so the frames are good. Let’s pixel peep it (of course, these images are compressed a bit — you've gotta love the internet for that). Let me put some comparisons up with no names:

Now, a question for my fellow gamers: which system produced which image?

Got your answer locked in? You best not have been peeping at the answer!

…answered? OK, here’s the truth.


That’s right: in each comparison, the first image is the Predator Triton 14 AI with RTX 5070, and the second is the Zenbook Duo. Could you tell the difference?

So to my eyes, the answer is “yes.” XeSS 3 is still a step behind DLSS 4. There’s noticeable ghosting around fast-moving objects, small details like chain-link fences can slightly warp the image behind them, and there are some jagged edges.

But in the heat of gameplay, none of that diminishes what Intel has done here. For the ultimate fidelity, a gaming laptop packing an Nvidia GPU is still the way to go. But with what Intel has managed to do, it’s clear that integrated graphics have taken a monster leap forward.

What about latency?

Multi-frame generation may be a bit of a breakthrough to many reading this, but it’s not all rosy. Fitting additional AI-generated frames between rendered frames can introduce latency. And if your GPU is already chugging along at a slow frame rate, you’ll feel that latency more, even though the gameplay looks smoother.

That’s why I’d always recommend tweaking your game’s graphics settings to make sure you’re getting 45 to 60 FPS before fiddling with multi-frame generation. From that base level, your latency should be low enough that you won’t feel it affect your gameplay, particularly in single-player titles. In competitive multiplayer, where every frame counts, I’d keep it turned off.

That being said, Nvidia has improved DLSS quite a lot in this area, and Intel has just rocked up with something mighty impressive. On average, you’re getting about 2ms more latency than DLSS 4 MFG’s 42ms (in 4X mode).

So 44ms on average, and at these levels of latency, the vast majority of you are going to have a perfectly fine gaming experience on this system — which I should remind you is INTEGRATED GRAPHICS.
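Why does the base frame rate matter so much? A common simplified way to think about it (an assumption here, not Intel’s or Nvidia’s published model) is that interpolation-style frame generation has to wait for the next rendered frame before it can build the in-between frames, so it adds roughly one base frame interval of delay. This quick Python sketch, with hypothetical helper names, runs that arithmetic and compares the averages measured above.

```python
def frame_time_ms(fps: float) -> float:
    """Time between rendered frames, in milliseconds."""
    return 1000.0 / fps


def approx_added_latency_ms(base_fps: float) -> float:
    """Rough extra delay from interpolation-style frame generation.

    Simplified assumption: the newest rendered frame is held back about one
    base frame interval so in-between frames can be generated. Real figures
    also depend on generation cost, the game and any latency-reduction tech.
    """
    return frame_time_ms(base_fps)


for fps in (30, 45, 60):
    print(f"{fps} FPS base -> ~{approx_added_latency_ms(fps):.1f} ms extra delay (rough model)")

# Average latencies from this test: DLSS 4 MFG (4X) vs XeSS 3 MFG.
dlss_ms, xess_ms = 42.0, 44.0
diff = xess_ms - dlss_ms
print(f"XeSS 3 adds {diff:.0f} ms over DLSS 4 ({diff / dlss_ms:.1%})")
```

Under this rough model, going from a 30 FPS base to 60 FPS roughly halves the extra delay (about 33 ms down to about 17 ms), which is why getting to 45 to 60 FPS first makes sense. The measured 2ms gap works out to under 5% more latency than DLSS 4.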

The future

So the present is looking incredibly bright for Intel’s XeSS 3. Even without this tech, the Zenbook Duo I’ve been testing was already a marvel for iGPU gaming, while delivering a huge battery life increase over your standard gaming laptop.

And now, this mini breakthrough has made things a whole lot more intriguing. While gaming laptops cater to those chasing the absolute best performance above all else, Intel Core Ultra X series chips now strike a better balance for most players.

But where does it go from here? Well, as Tony Polanco confirmed, Intel has told Tom’s Guide that multi-frame generation is coming to the Intel Arc B580 desktop GPU. And with that, my favorite budget GPU just became the one I’d recommend to everyone, given that the RAM price crisis has sent the cost of all Nvidia GPUs skyrocketing.

Next, if I could make a quick wish, it would be for Intel to take a couple of lessons from Nvidia. Dynamic frame generation would be a significant upgrade, not just for on-laptop performance but also when plugging into monitors and TVs.

And finally, there’s one area where Team Green has led for years and Intel needs to catch up: game compatibility. Don’t get me wrong, Intel is working on it, and all 50+ XeSS 2 titles support XeSS 3. But we need more titles on this list!
