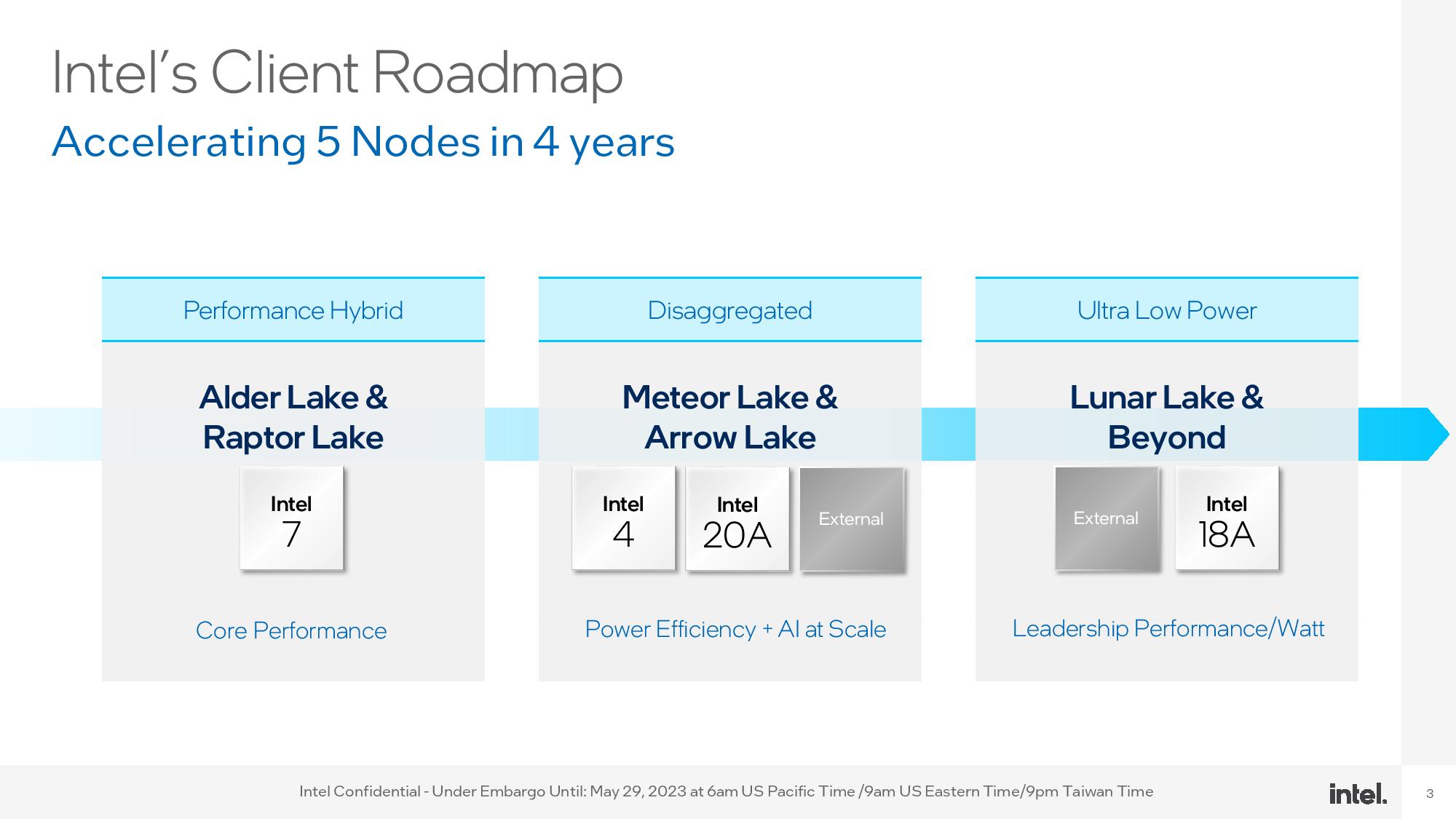

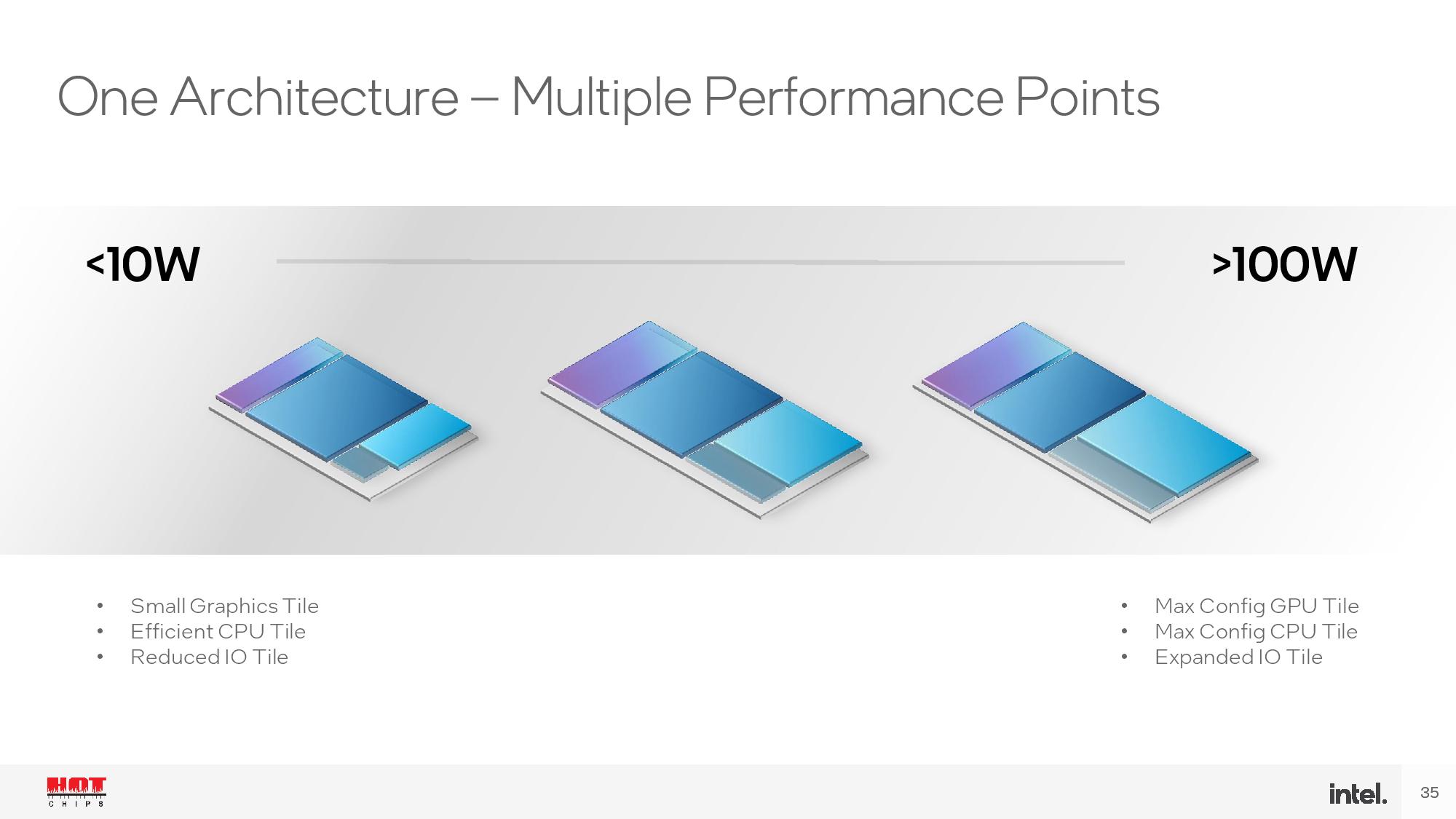

At Computex 2023, Intel announced new details about the AI-focused VPU silicon that will debut in the company's Meteor Lake chips and outlined its efforts to enable the AI ecosystem for those chips. Intel plans to release the Meteor Lake processors, its first to use a blended chiplet-based design that leverages both Intel and TSMC tech in one package, by the end of the year. The chips will land in laptops first, focusing on power efficiency and performance in local AI workloads, but different versions of the design will also come to desktop PCs.

Both Apple and AMD have already forged ahead with powerful AI acceleration engines built right into their silicon, and Microsoft has also been busy plumbing Windows with new capabilities to leverage custom AI acceleration engines. Following announcements from Intel, AMD, and Microsoft last week about the coming age of AI on PCs, Intel dove deeper into how it will address the emerging class of AI workloads with its own custom acceleration blocks on its consumer PC chips.

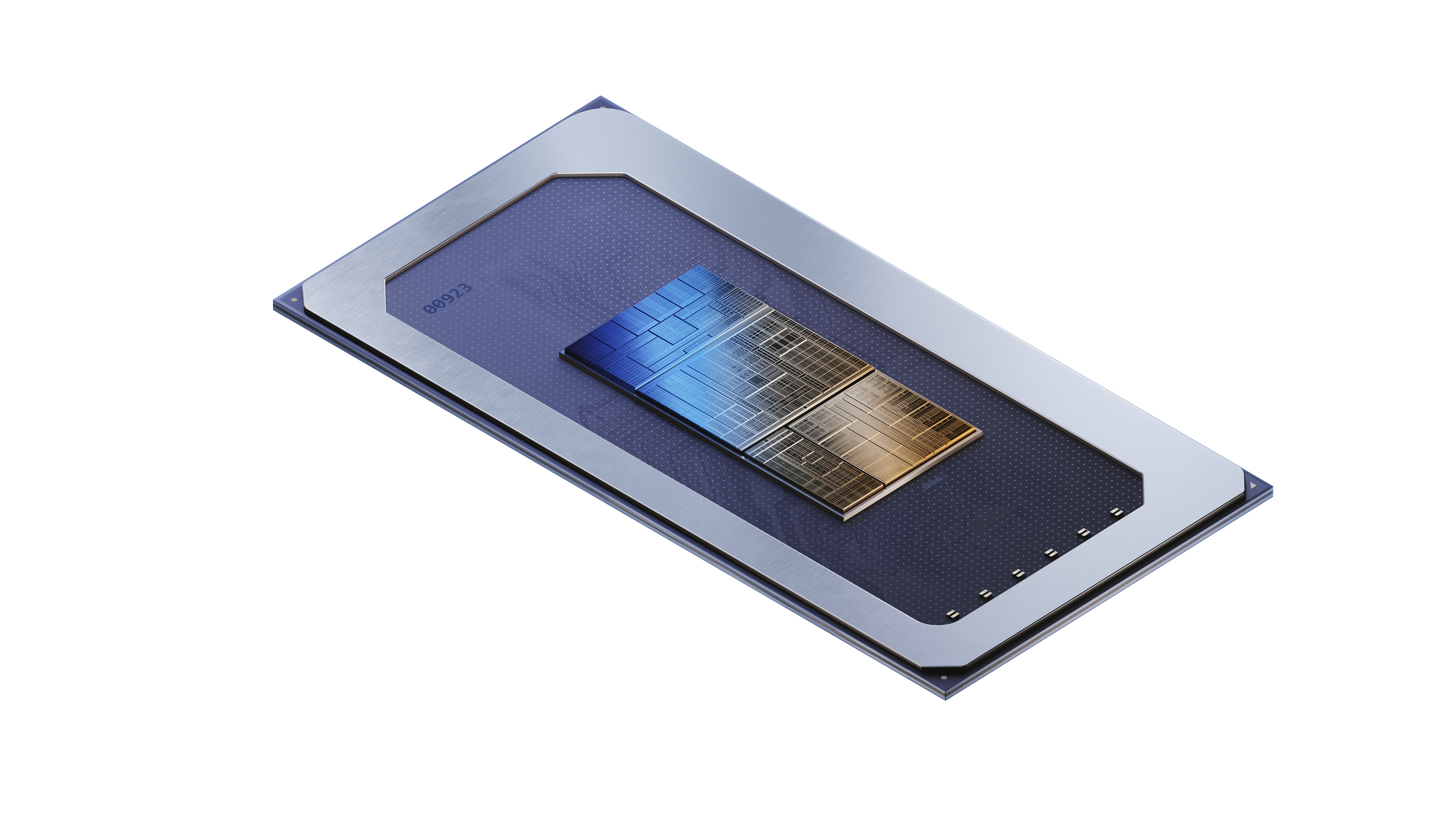

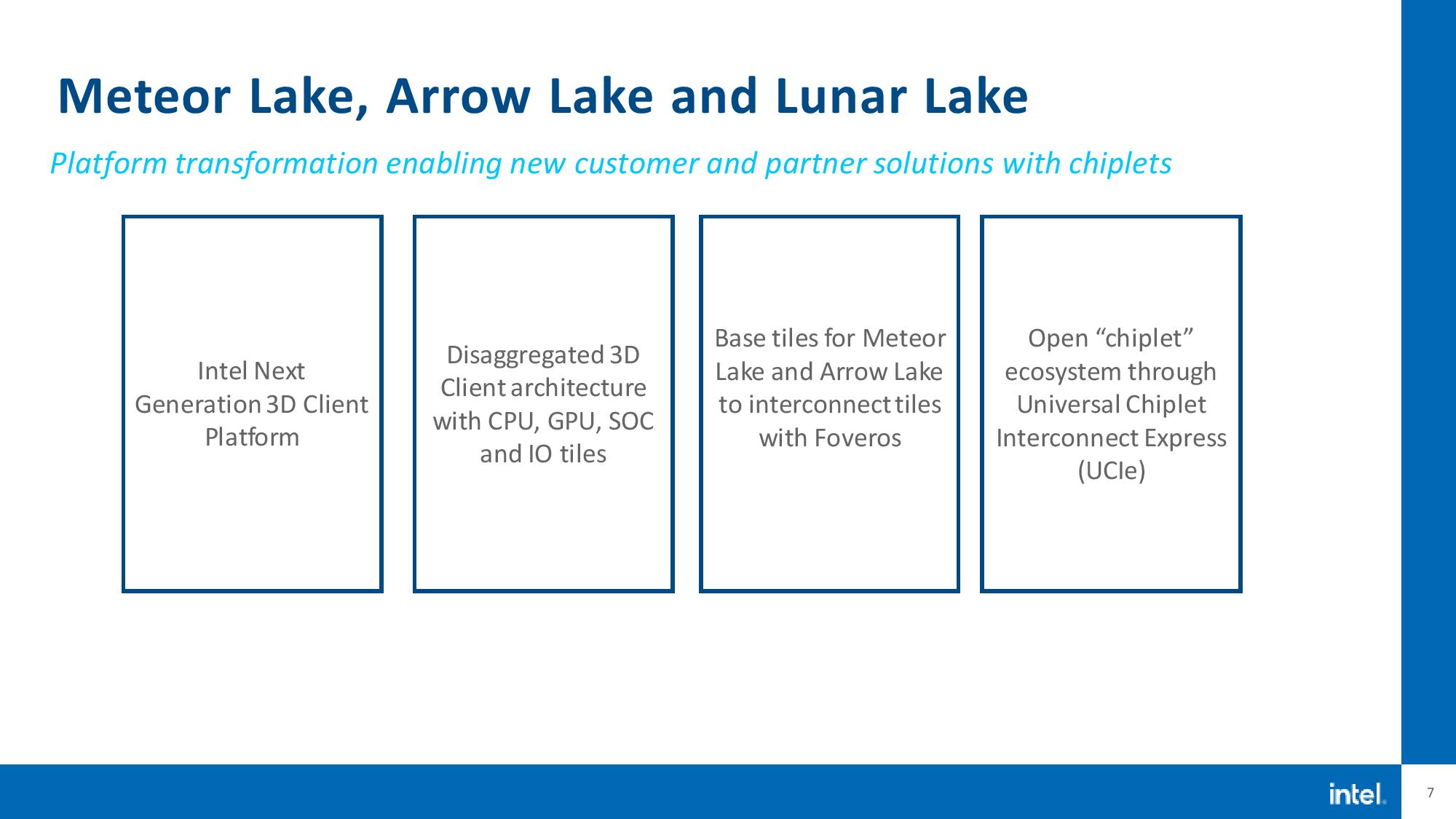

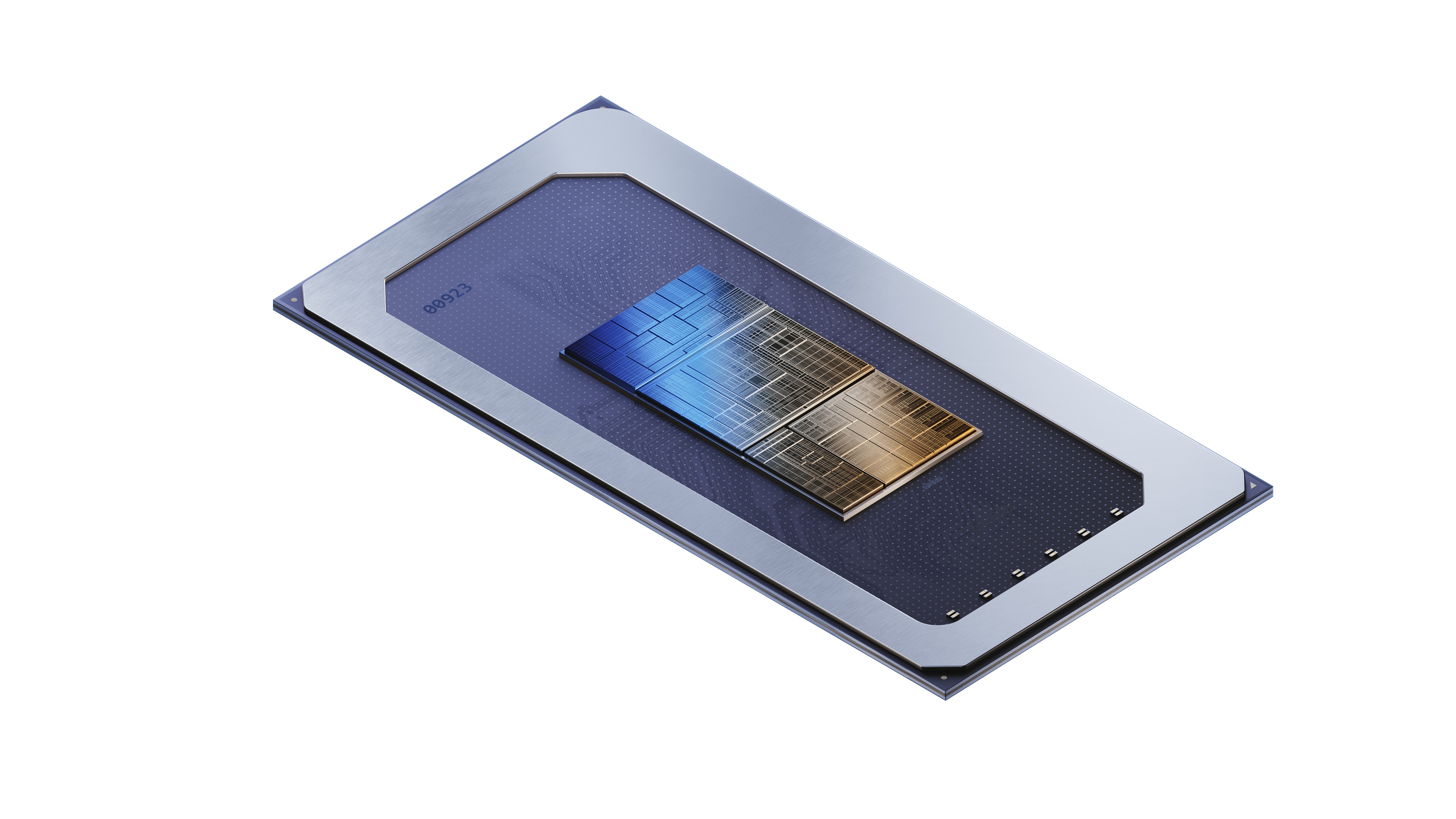

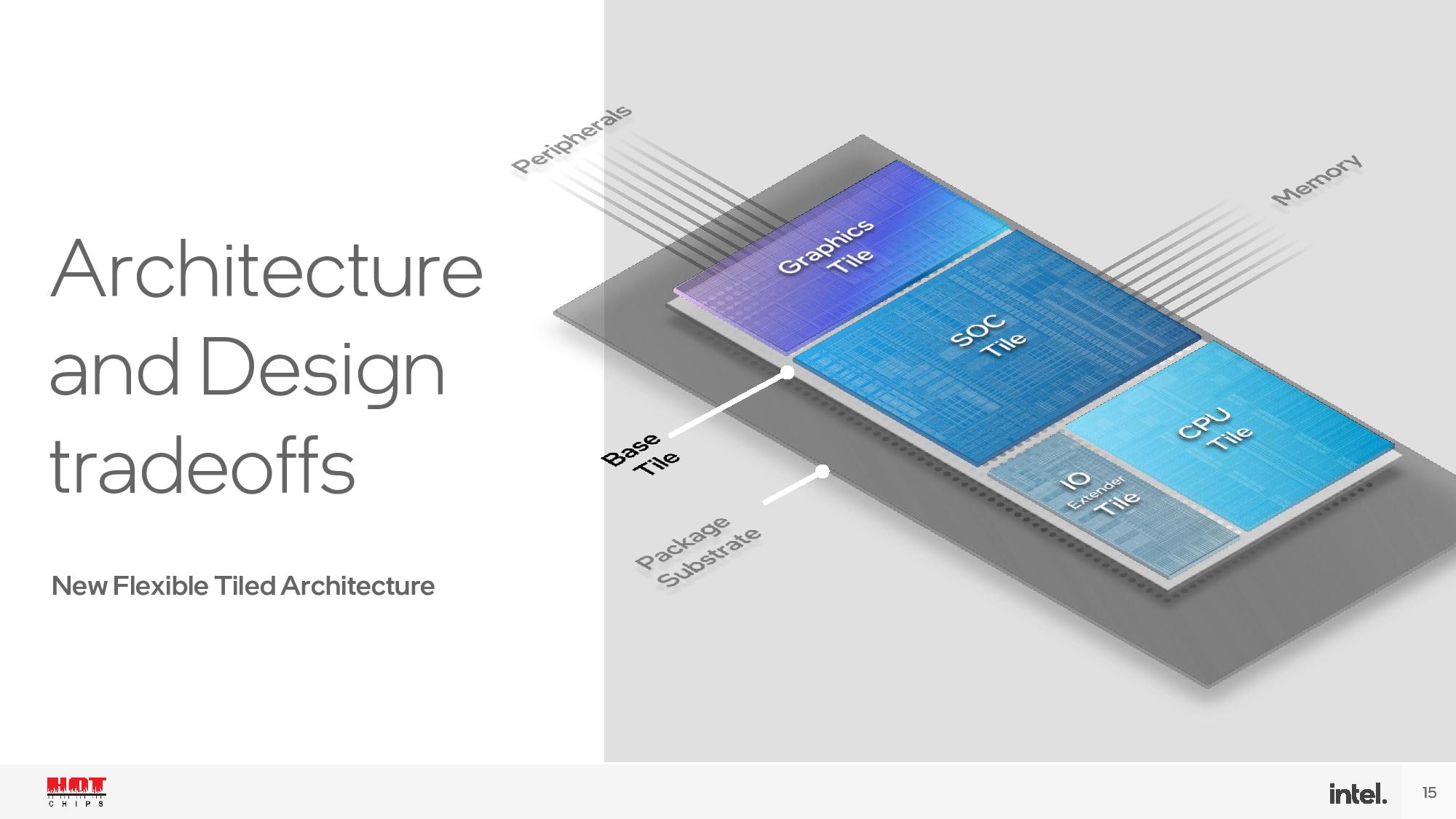

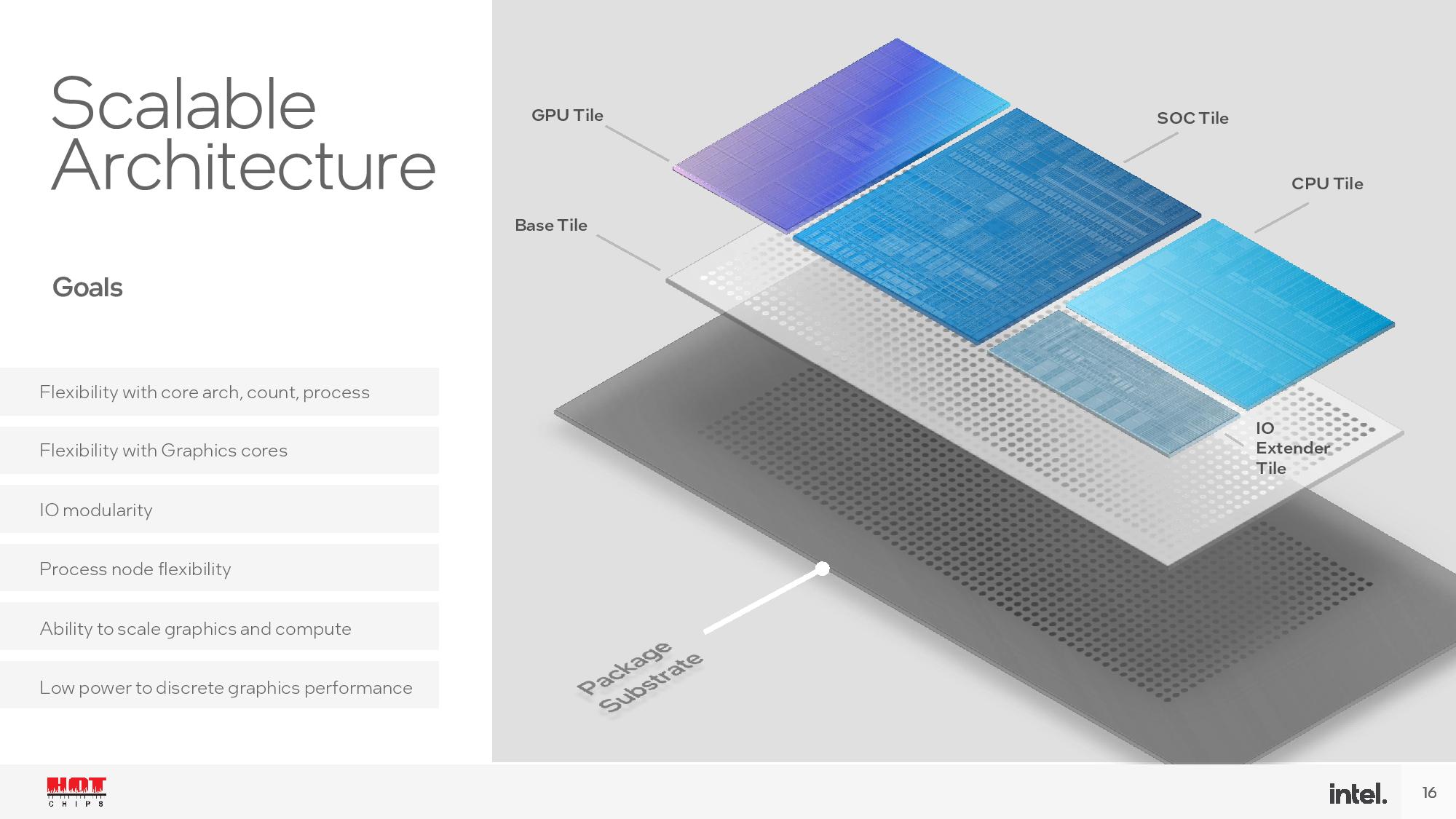

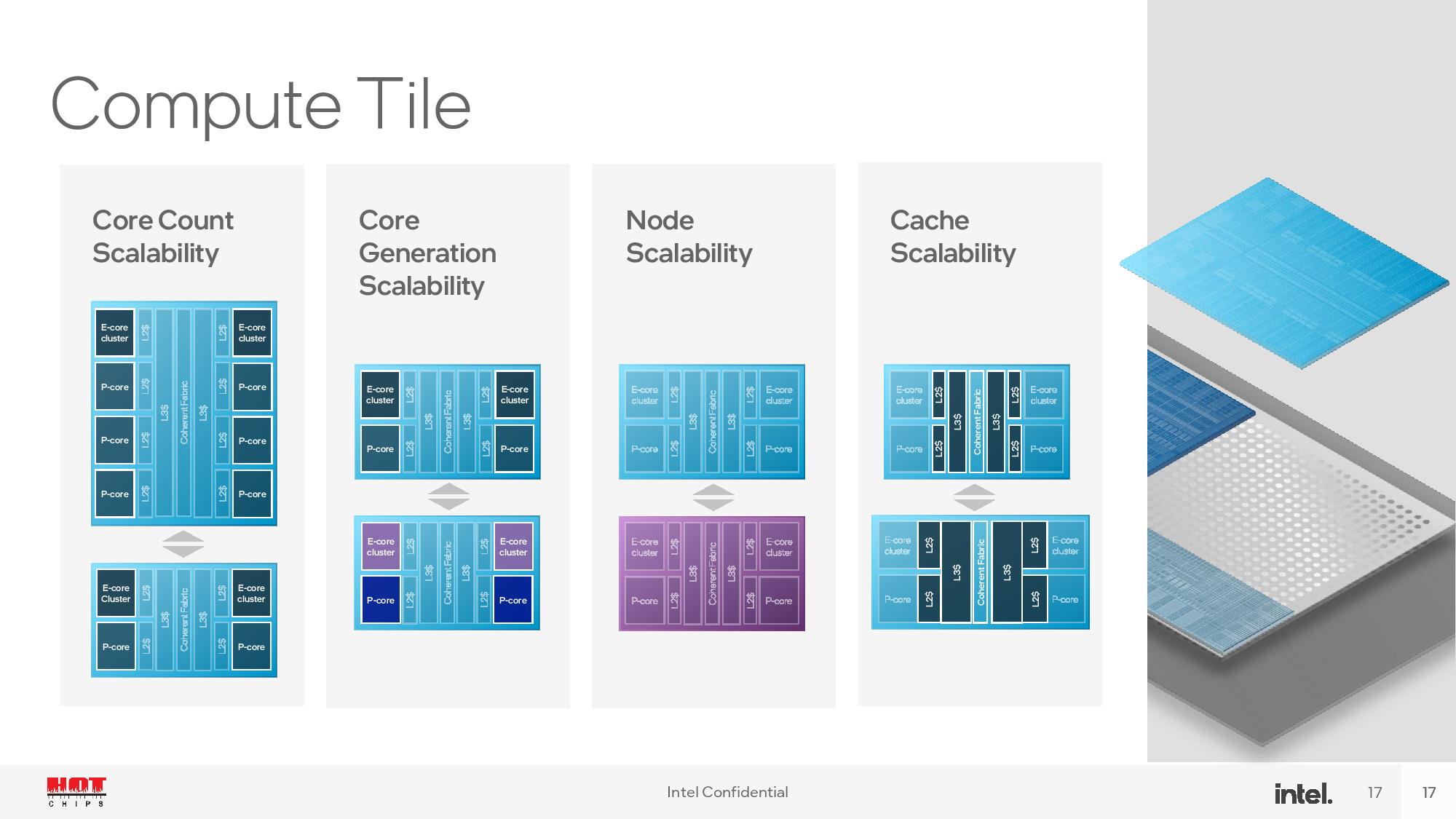

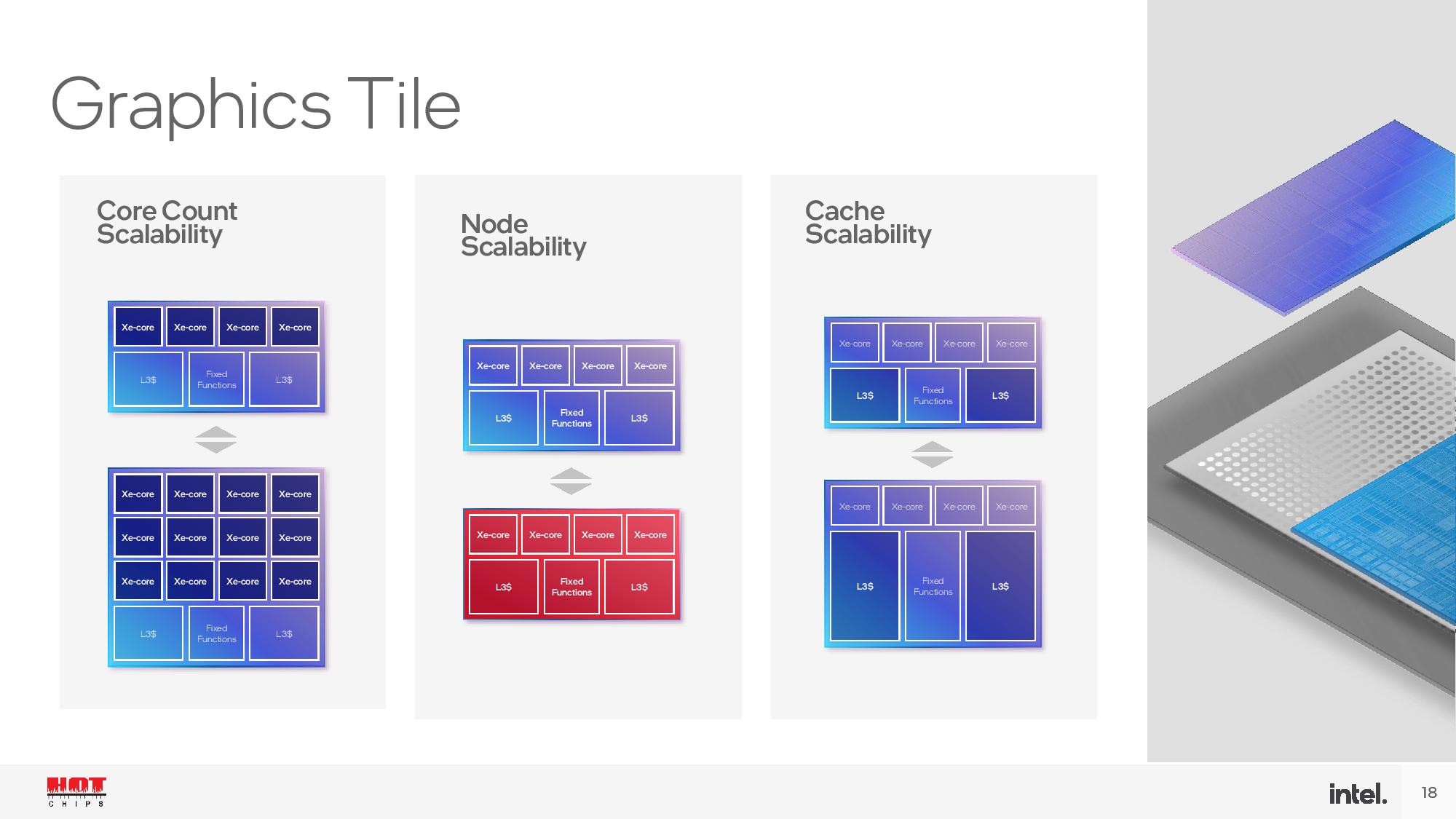

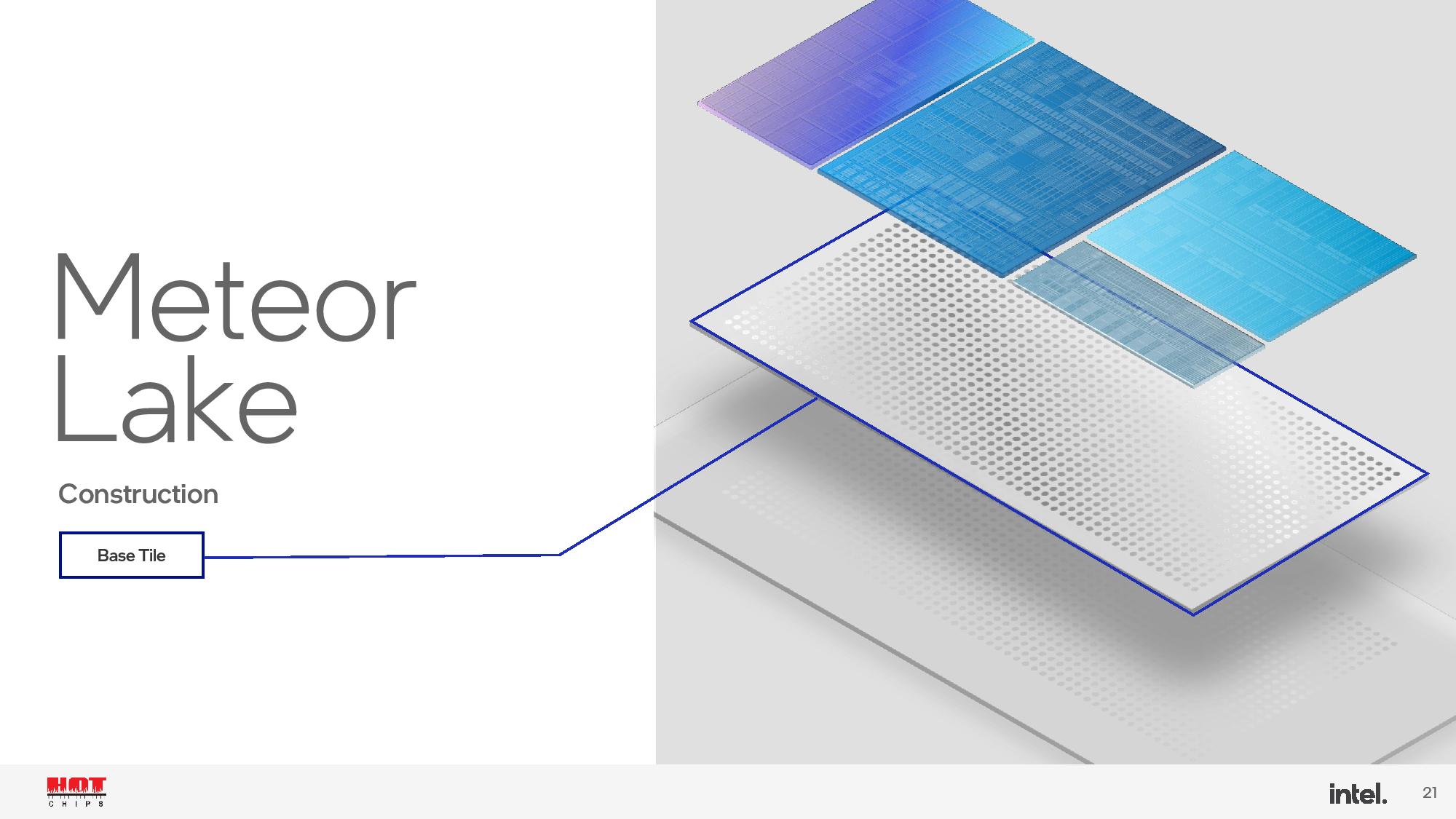

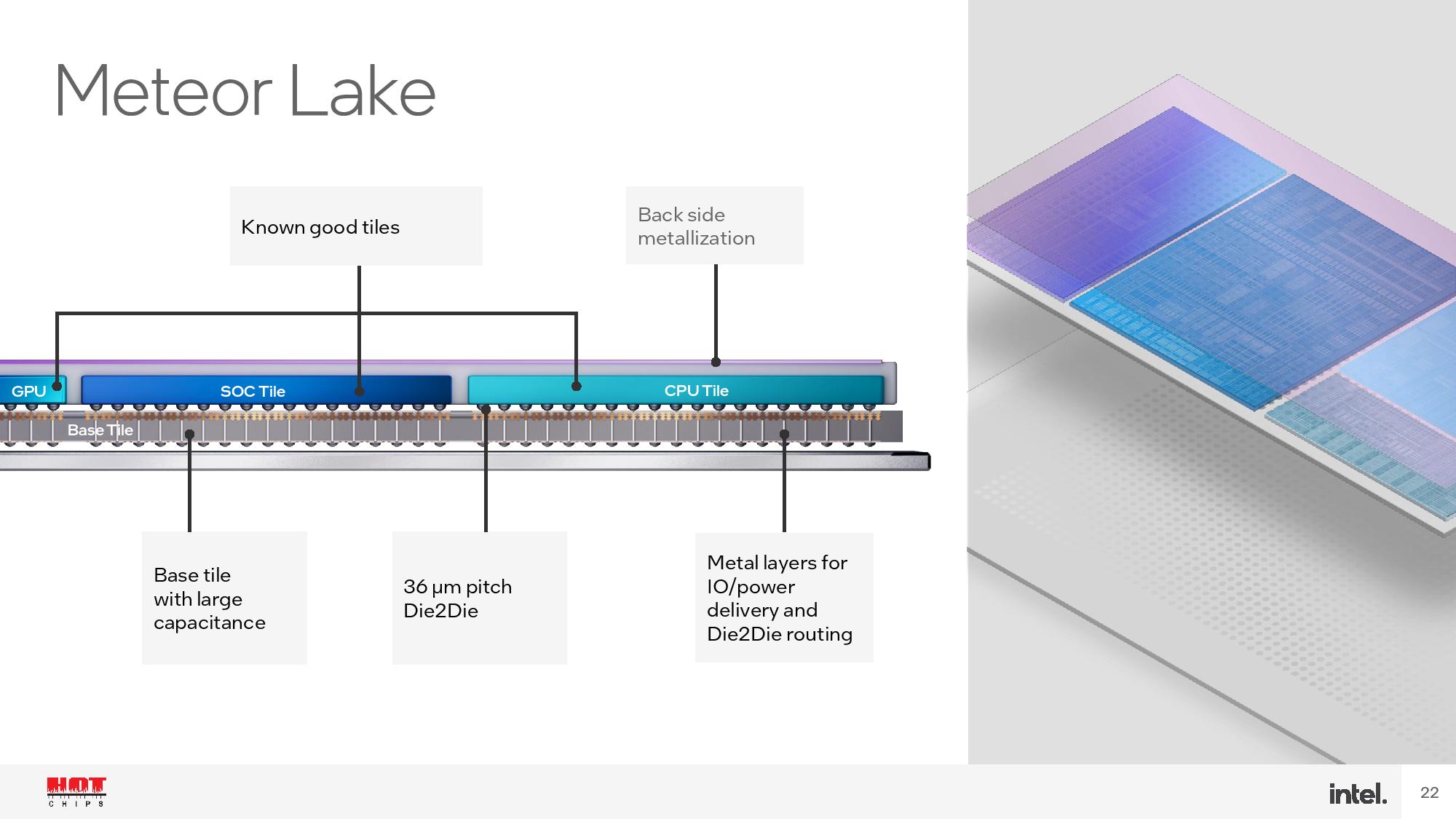

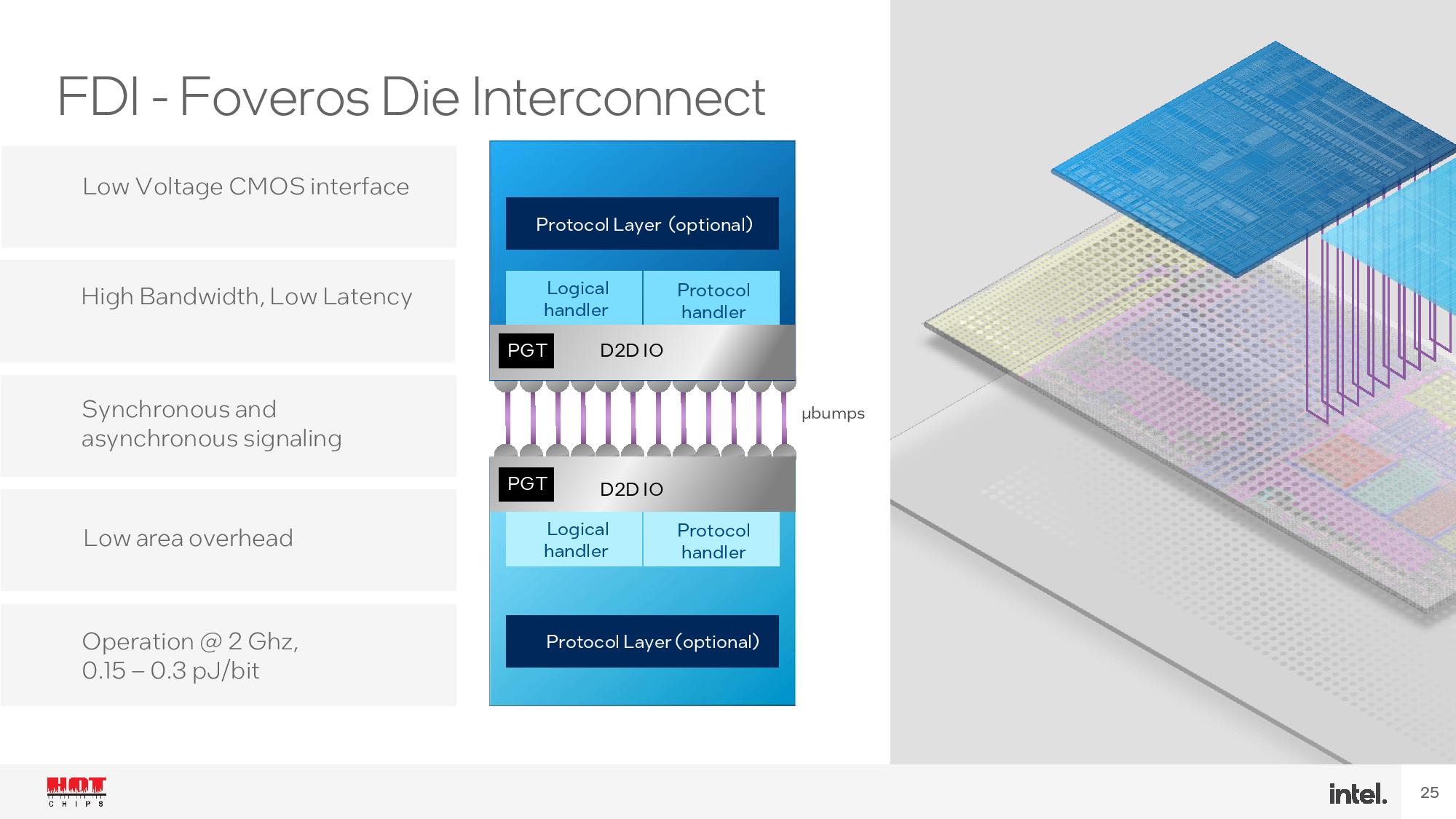

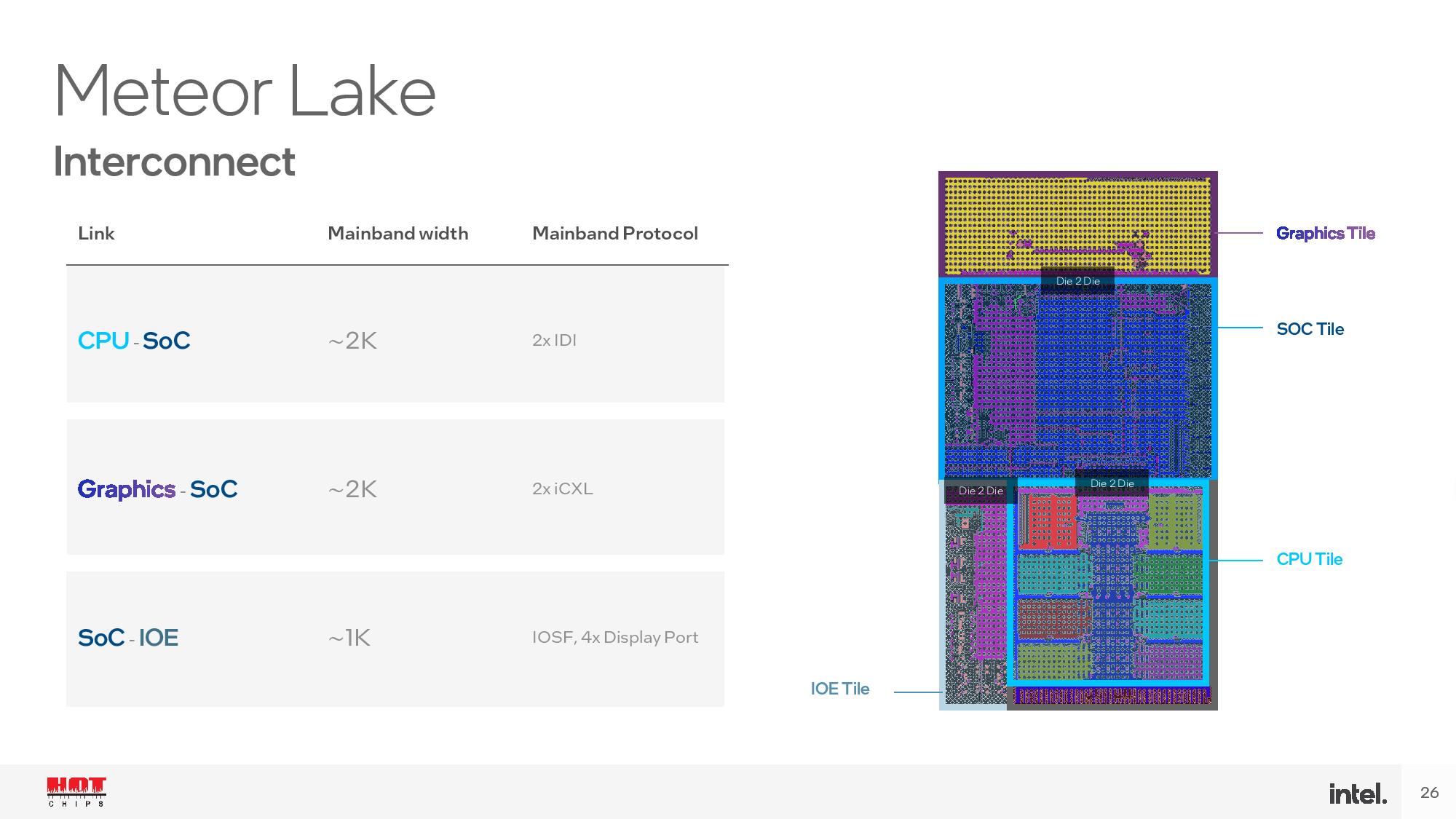

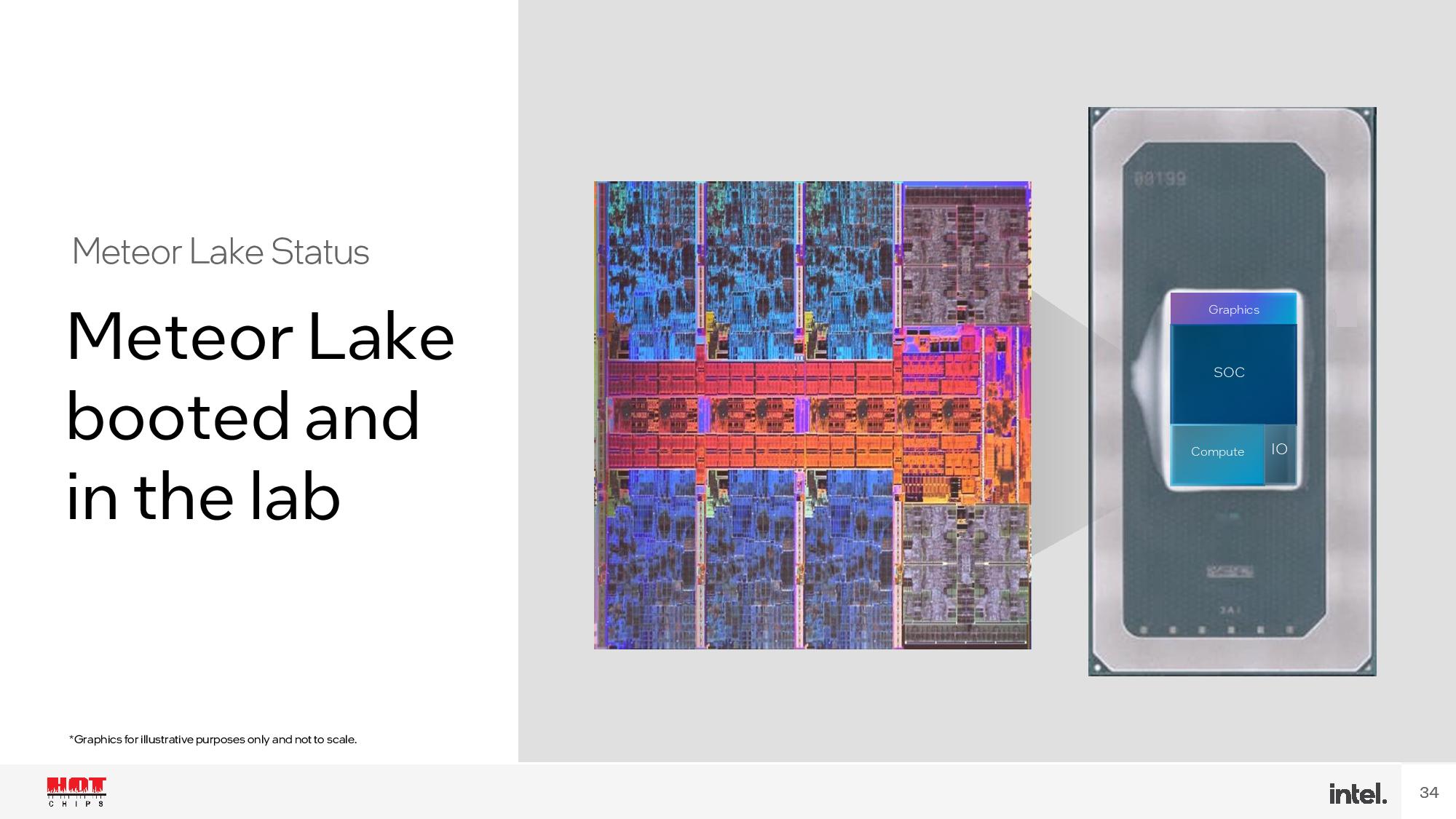

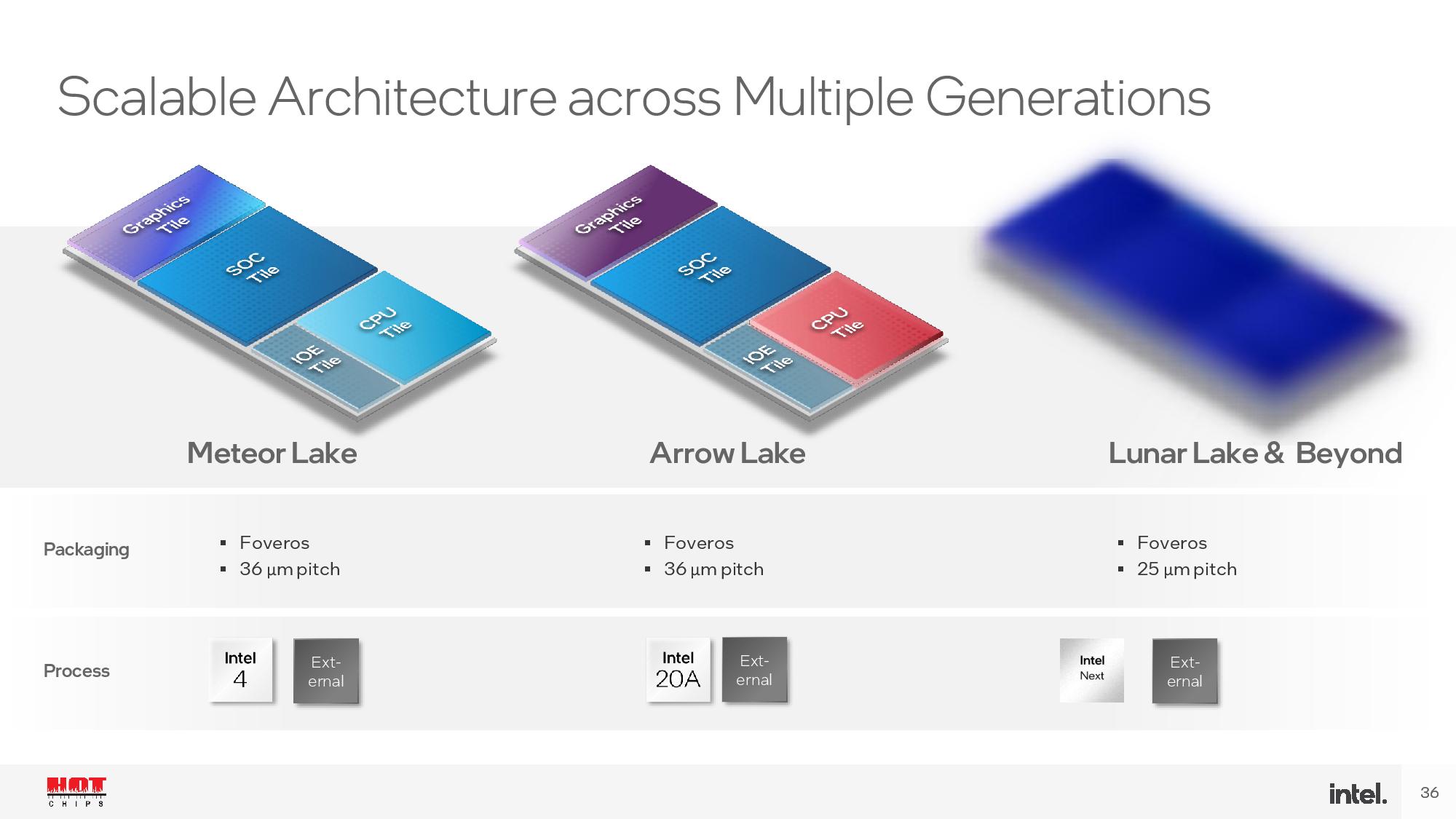

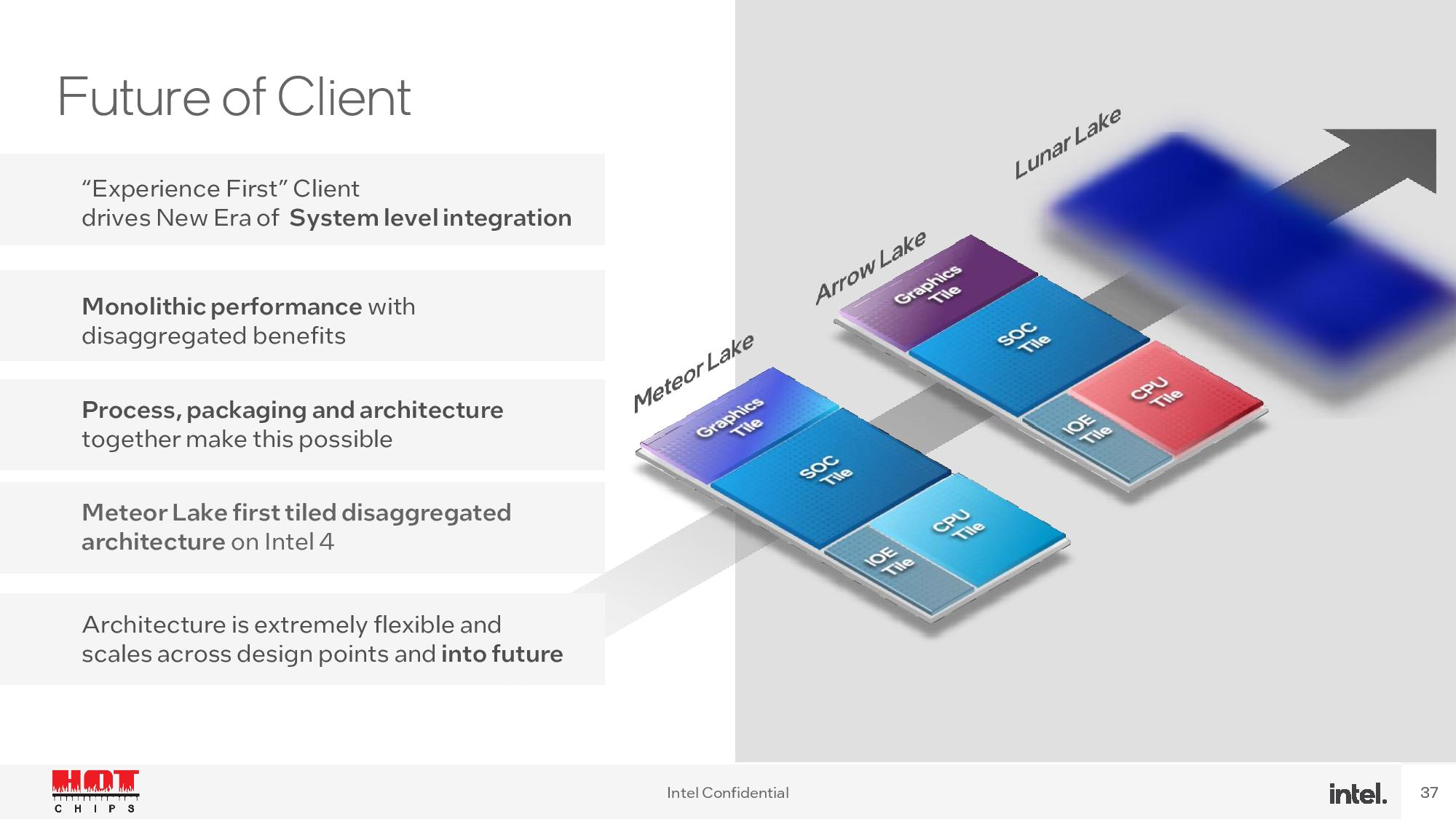

Intel shared a few new renders of the Meteor Lake chips, and we already covered the overall hardware design during Hot Chips 2022. These chips will be the first to leverage the Intel 4 process node and a slew of TSMC-fabbed chiplets on the N5 and N6 processes for other functions, like the GPU and SoC tiles. Here we can see the chip is broken up into four units, with a CPU, GPU, SoC/VPU, and I/O tile vertically stacked on top of an interposer using Intel's 3D Foveros packaging technique. We've also included another slide deck at the end of the article with more granular architectural details from the Hot Chips conference.

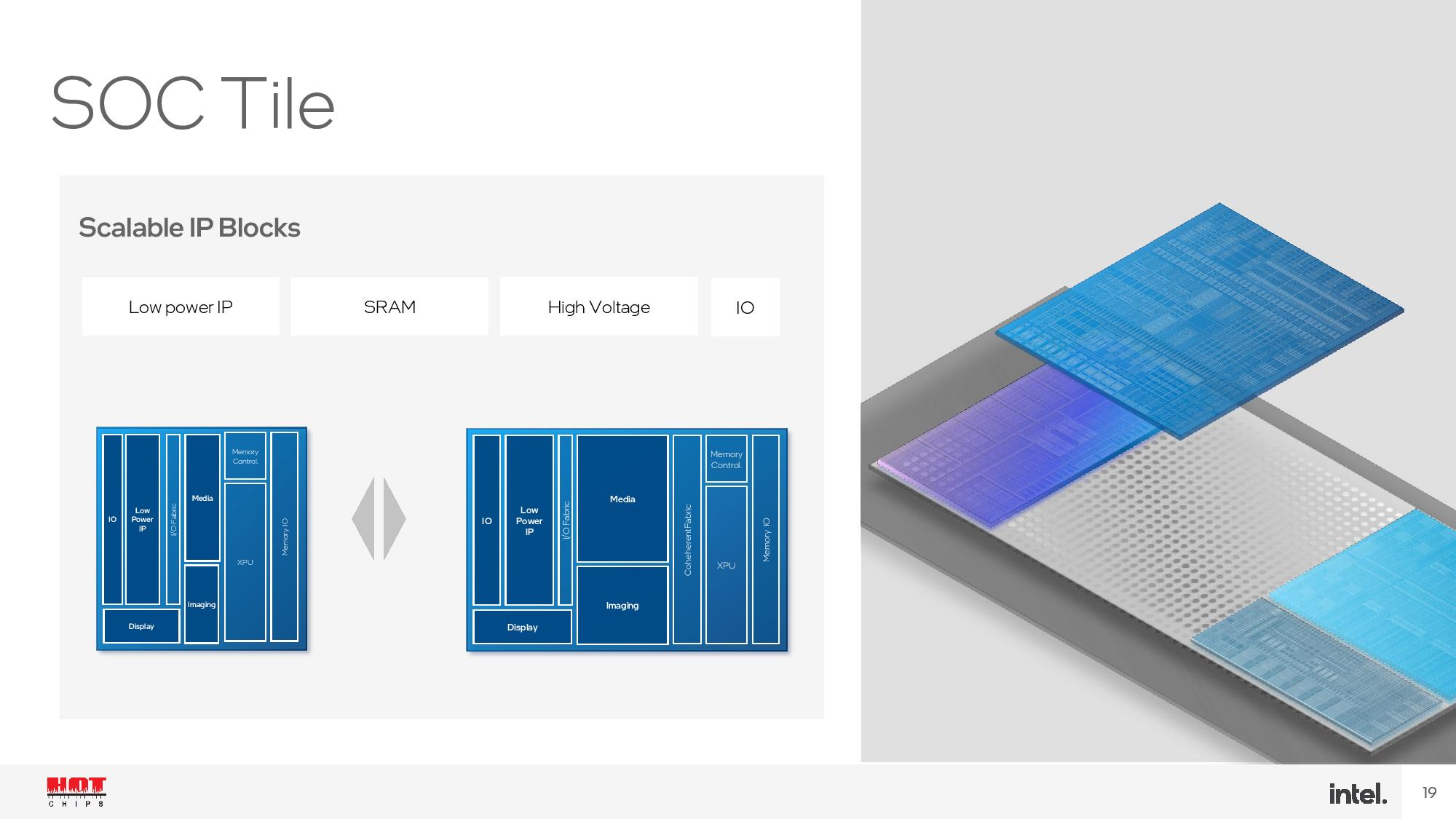

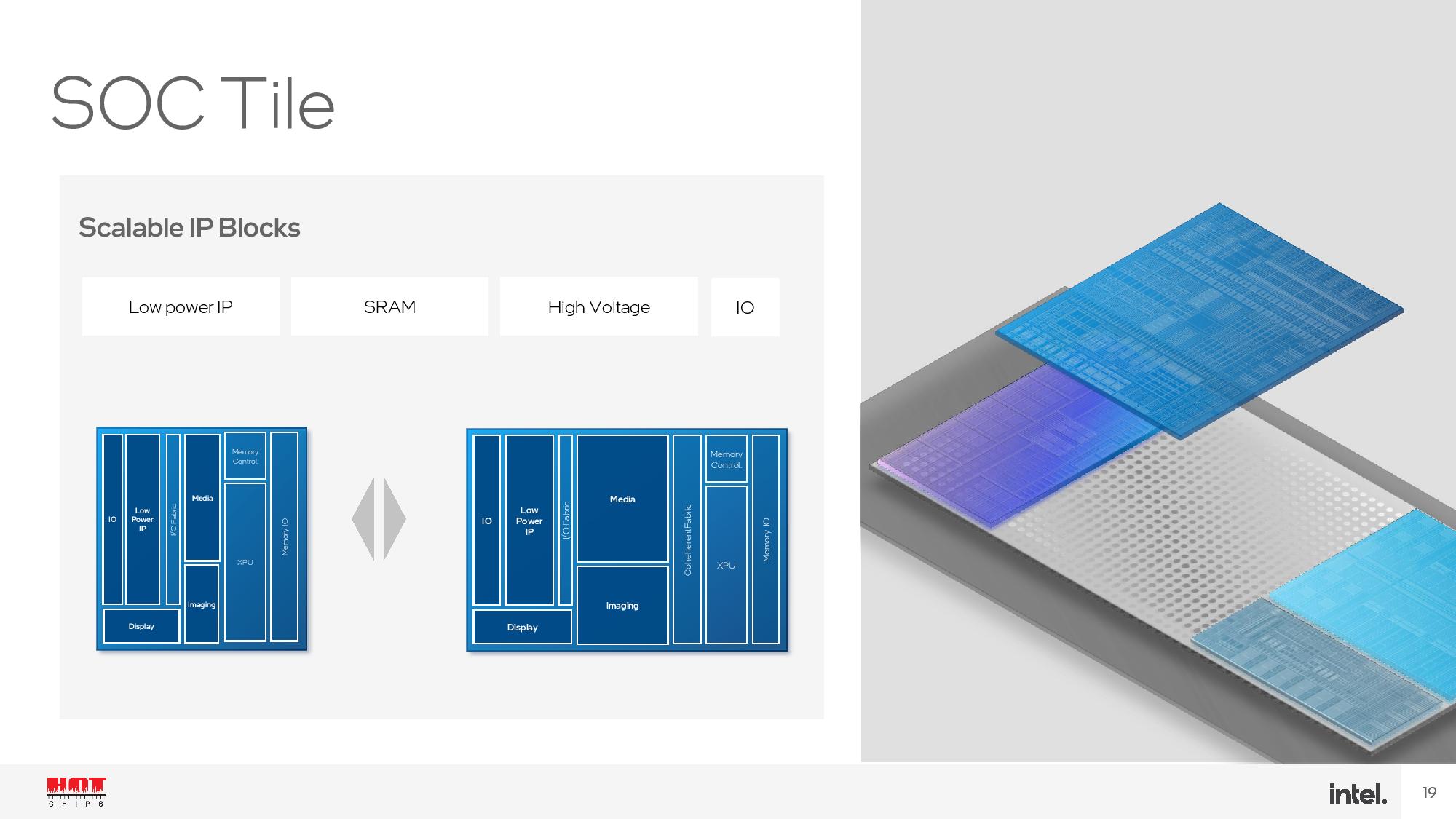

The focus here is the VPU, but don't let the first image, which is Intel's simplified illustration it shared for today's announcement, mislead you -- the entire tile is not dedicated to the VPU. Instead, it is an SoC tile that houses the VPU alongside I/O, GNA cores, memory controllers, and other functions. This tile is fabbed on TSMC's N6 process but bears the Intel SoC architecture and VPU cores. The VPU doesn't consume this entire die area, which is good -- otherwise Intel would be devoting nearly 30% of its die area to a unit that will see infrequent use, at least at first. As we'll cover below, it will take some time before developers enable the application ecosystem needed to make full use of the VPU cores.

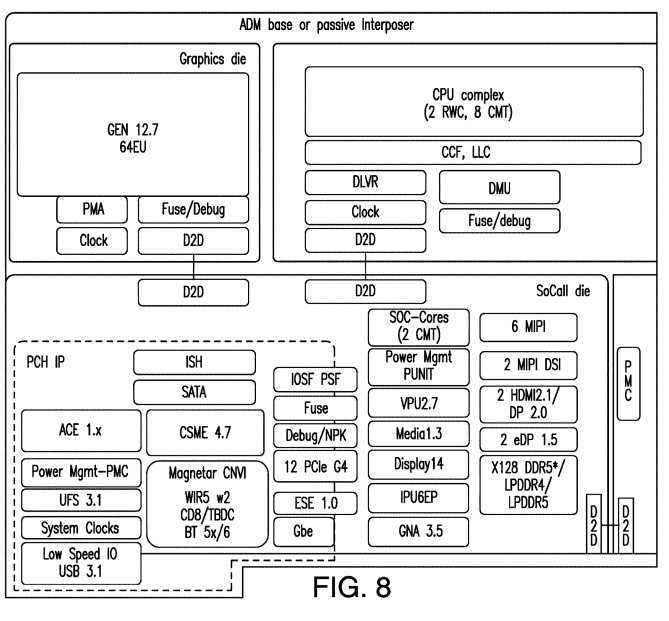

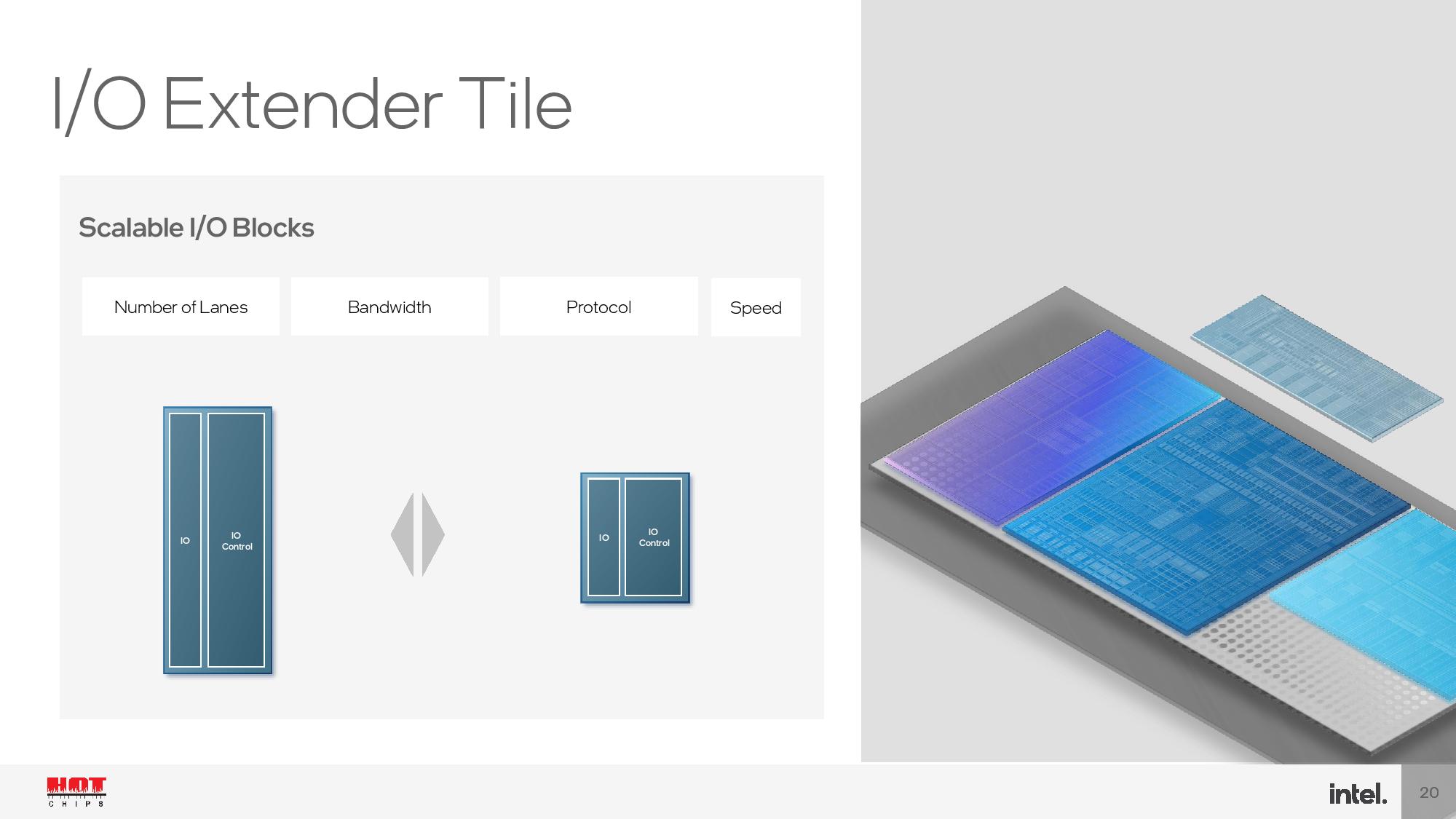

In the above album, I included an image from Intel's Hot Chips presentation that provides the company's official graphical representation of the functions on the I/O die. I also included a slide labeled 'fig. 8.' This block diagram comes from an Intel patent that is widely thought to outline the Meteor Lake design, and it generally matches what we've already learned about the chip.

Intel will still include the Gaussian & Neural Accelerator (GNA) low-power AI acceleration block that already exists on its chips, marked as 'GNA 3.5' on the SoC tile in the diagram (more on this below). You can also spot the 'VPU 2.7' block, which is the new Movidius-based VPU.

Like Intel's stylized render, the patent image is also just a graphical rendering with no real correlation to the actual physical size of the dies. With so many external interfaces, like the memory controllers, PCIe, USB, and SATA, not to mention the media and display engines and power management, it's easy to see that the VPU cores simply can't consume much of the die area on the SoC tile. For now, the amount of die area that Intel has dedicated to this engine is unknown.

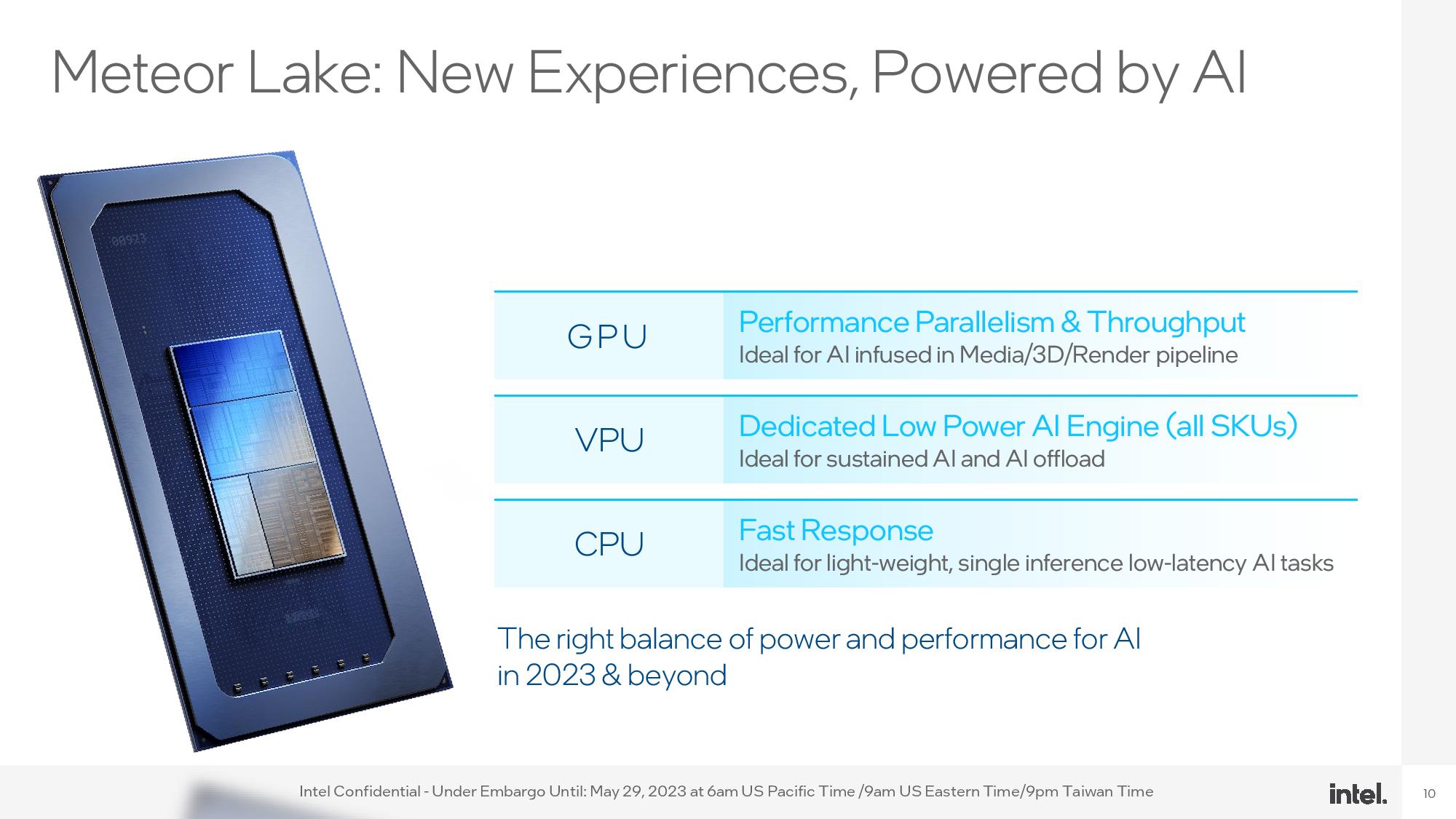

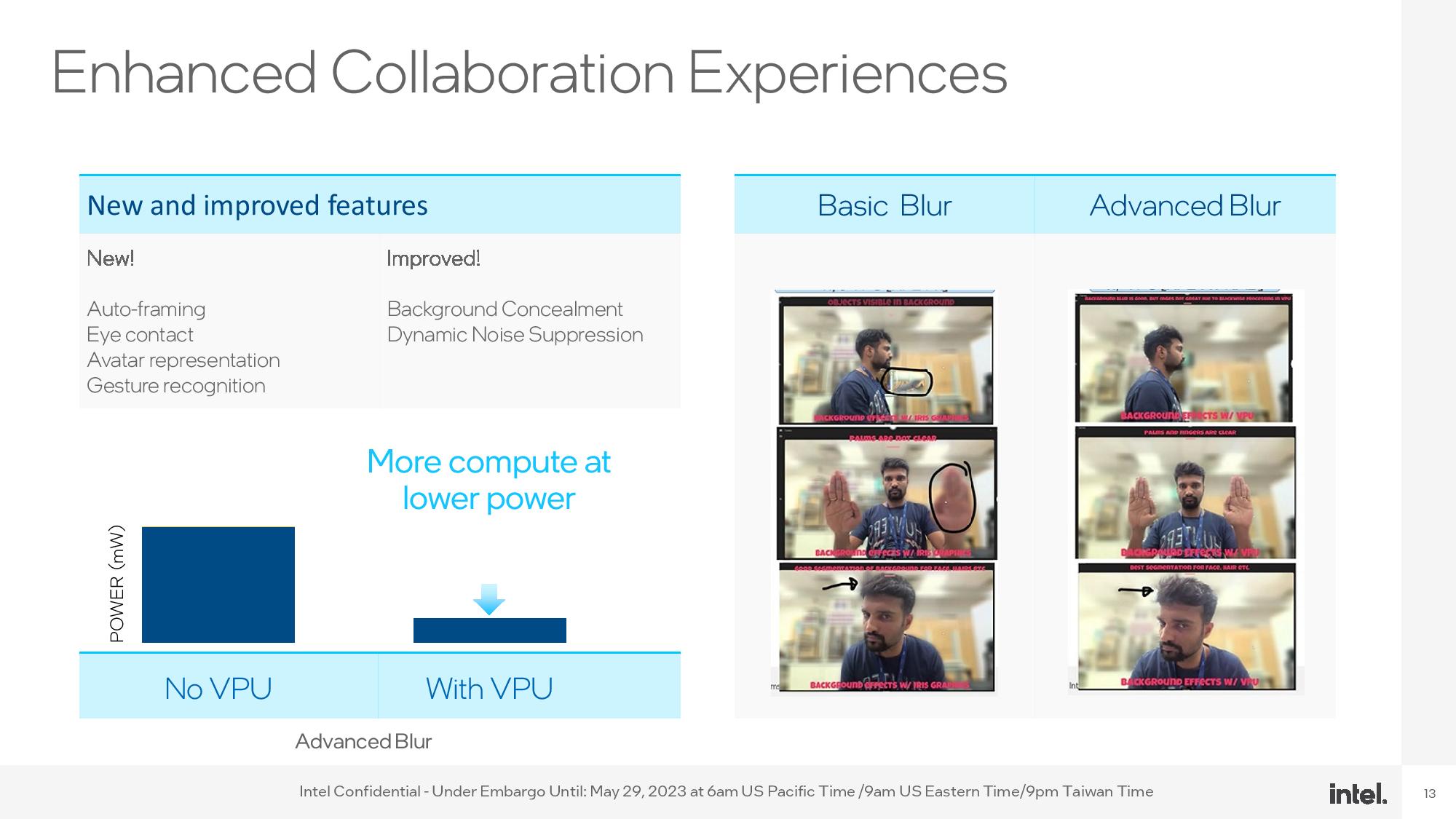

The VPU is designed for sustained AI workloads, but Meteor Lake also includes a CPU, GPU, and GNA engine that can run various AI workloads. Intel says the VPU is primarily for background tasks, while the GPU steps in for heavier parallelized work. Meanwhile, the CPU addresses light low-latency inference work. Some AI workloads can also run on both the VPU and GPU simultaneously, and Intel has enabled mechanisms that allow developers to target the different compute layers based on the needs of the application at hand. This will ultimately result in higher performance at lower power -- a key goal of using the AI acceleration VPU.
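To make that division of labor concrete, here is a minimal Python sketch of the routing idea as Intel describes it. It is purely illustrative: the `Workload` class, its fields, and the `pick_engine` function are hypothetical names invented for this example, not part of any Intel API, and the real targeting mechanisms have not been detailed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of an AI task (not an Intel data structure)."""
    name: str
    sustained: bool       # runs continuously in the background
    heavy_parallel: bool  # large, highly parallel compute


def pick_engine(workload: Workload) -> str:
    """Map a workload to a compute engine using the article's rough guidance."""
    if workload.heavy_parallel:
        return "GPU"  # heavier parallelized work
    if workload.sustained:
        return "VPU"  # sustained background tasks at low power
    return "CPU"      # light, low-latency inference


background_blur = Workload("background blur", sustained=True, heavy_parallel=False)
image_generation = Workload("image generation", sustained=False, heavy_parallel=True)
quick_lookup = Workload("quick lookup", sustained=False, heavy_parallel=False)

for task in (background_blur, image_generation, quick_lookup):
    print(f"{task.name}: {pick_engine(task)}")
```

A real application would likely weigh more factors, such as current power state or whether a workload can be split across the VPU and GPU at once, but the sketch captures the basic split Intel outlined.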

Intel's chips currently use the GNA block for low-power AI inference for audio and video processing functions, and the GNA unit will remain on Meteor Lake. However, Intel says it is already running some of the GNA-focused code on the VPU and achieving better results, with a heavy implication that Intel will transition to the VPU entirely with future chips and remove the GNA engine.

Intel also disclosed that Meteor Lake has a coherent fabric that enables a unified memory subsystem, meaning it can easily share data among the compute elements. This key capability is similar in concept to what other contenders in the CPU AI space offer, like Apple with its M-series and AMD with its Ryzen 7040 chips.

Here we can see Intel's slideware covering its efforts in enabling the vast software and operating system ecosystem that will help propel AI-accelerated applications onto the PC. Intel's pitch is that it has the market presence and scale to bring AI to the mainstream and points to its collaborative efforts that brought support for its x86 hybrid Alder and Raptor Lake processors to Windows, Linux, and the broader ISV ecosystem.

The industry will face similar challenges in bringing AI acceleration to modern operating systems and applications. Having the capability to run AI workloads locally isn't worth much if developers won't support the features due to difficult proprietary implementations. The key to making it easy to support local AI workloads is the DirectML DirectX 12 acceleration libraries for machine learning, an approach championed by Microsoft and AMD. Intel's VPU supports DirectML, but also ONNX and OpenVINO, which Intel says offer better performance on its silicon. However, ONNX and OpenVINO will require more targeted development work from software devs to extract the utmost performance.
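The tradeoff between a tuned path and a broadly supported one can be sketched as a simple preference list with a fallback. The Python below is a hedged illustration only: `order_backends` and the backend labels are hypothetical placeholders chosen for this example, not identifiers from DirectML, ONNX, or OpenVINO.

```python
from typing import Iterable, List, Sequence

# Placeholder labels for illustration; these are not real API identifiers.
DEFAULT_PREFERENCE: Sequence[str] = ("OpenVINO", "DirectML", "CPU")


def order_backends(
    available: Iterable[str],
    preferred: Sequence[str] = DEFAULT_PREFERENCE,
) -> List[str]:
    """Return the preferred backends that are actually available, in order.

    Falls back to a plain CPU path if nothing on the preference list is present.
    """
    present = set(available)
    usable = [backend for backend in preferred if backend in present]
    return usable or ["CPU"]


# A machine without the tuned path still gets the broadly supported one.
print(order_backends({"DirectML", "CPU"}))
# A machine with everything installed tries the tuned path first.
print(order_backends({"CPU", "OpenVINO", "DirectML"}))
```

The ordering here assumes a developer who is willing to do the extra targeted work for the tuned path; an application that values reach over peak performance might simply put the most widely supported option first.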

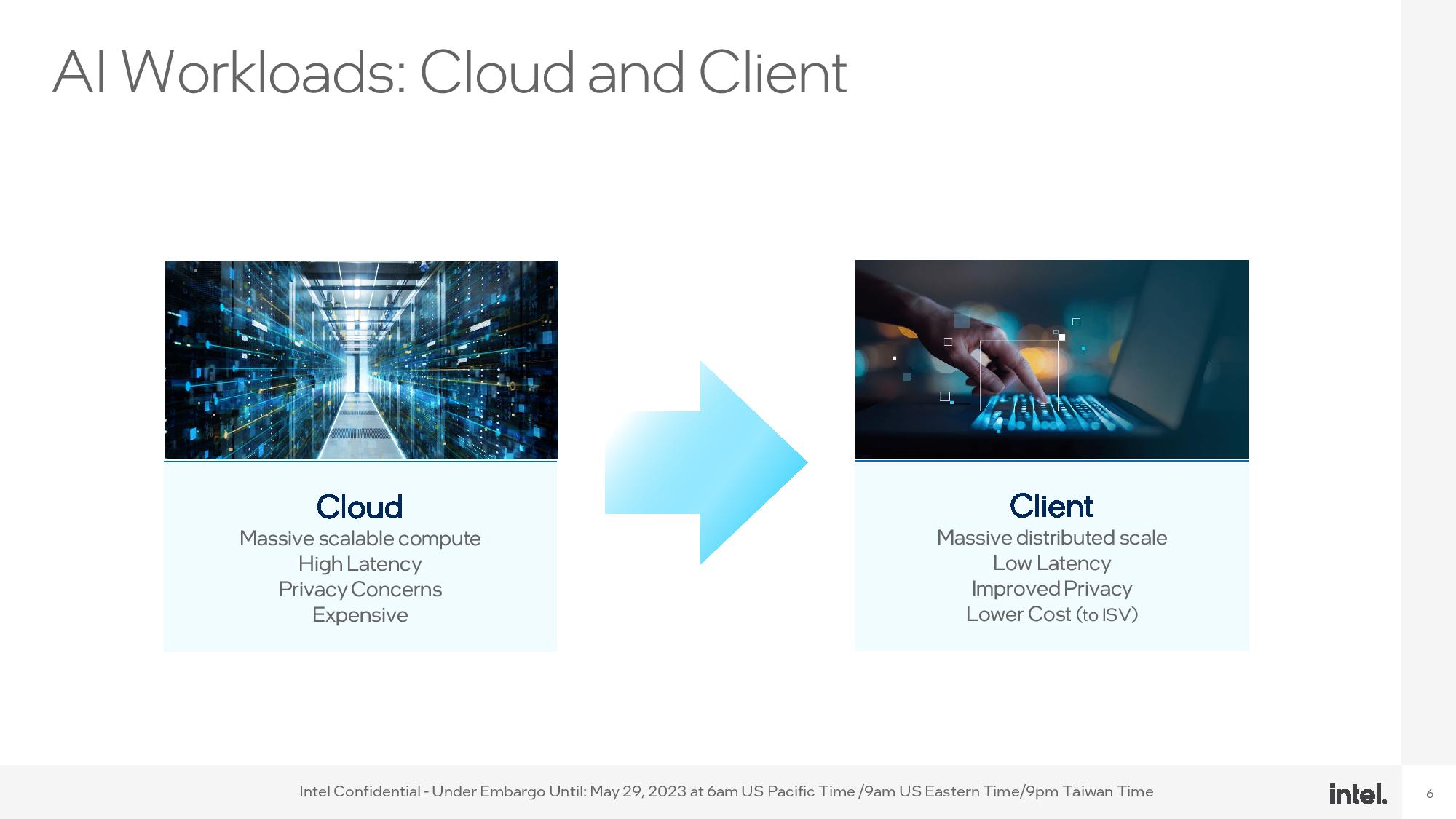

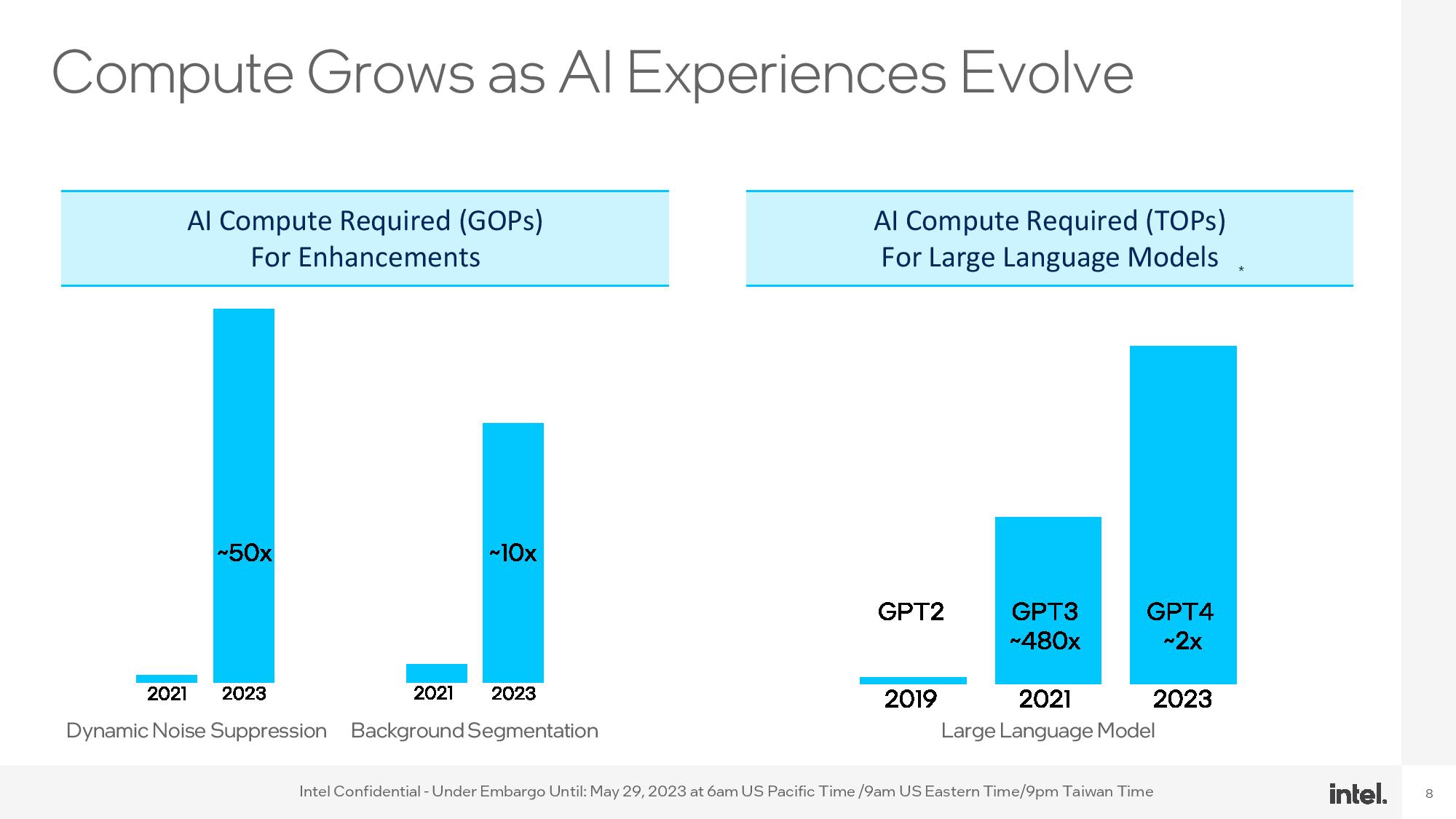

Many of today's more intense AI workloads, such as large language models like ChatGPT, require intense computational horsepower and will continue to run in data centers. However, Intel contends that running AI in the data center presents latency and privacy concerns, not to mention adding cost to the equation. Some AI applications, like audio, video, and image processing, can be handled locally on the PC, which Intel says will improve latency, privacy, and cost.

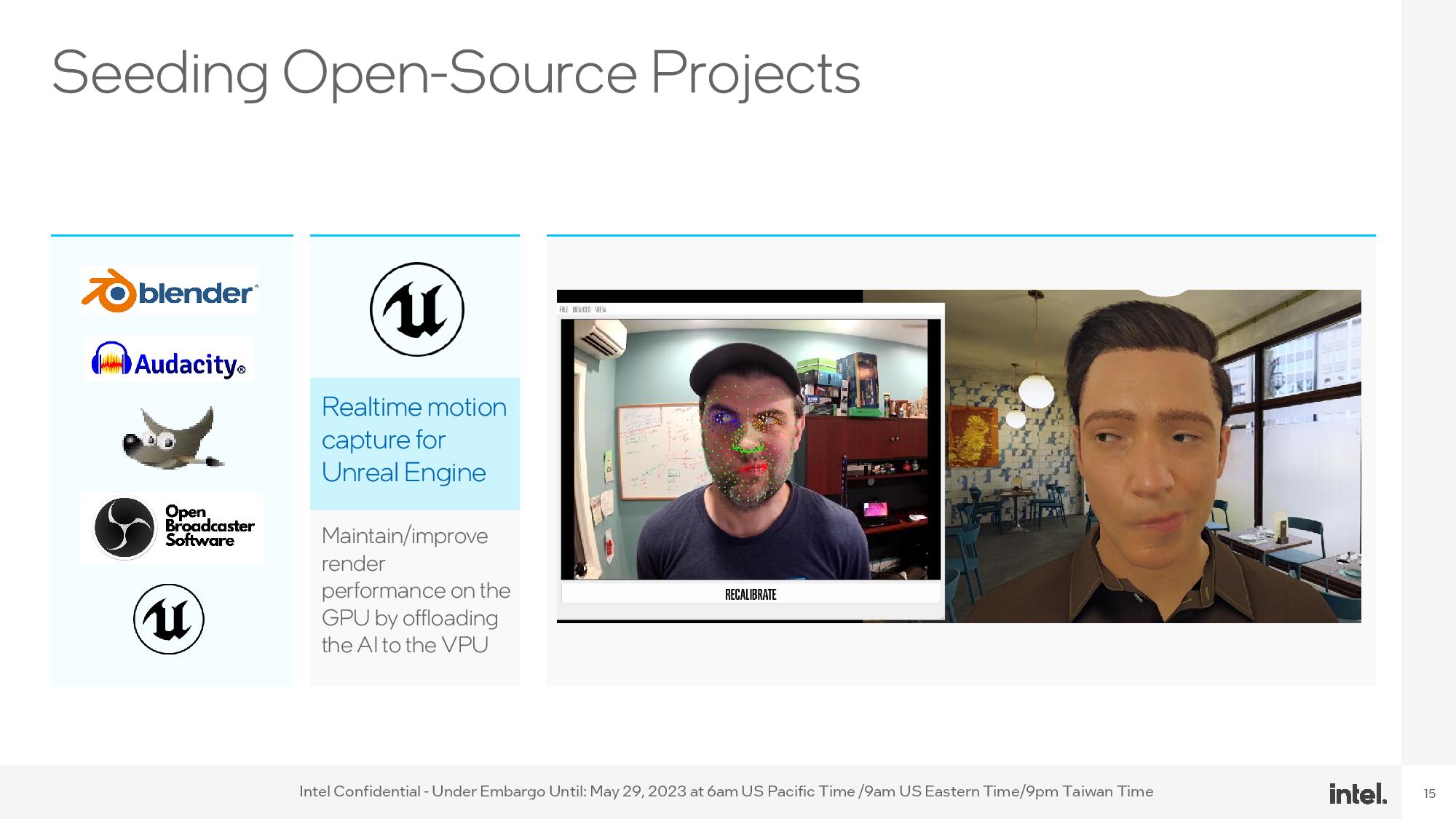

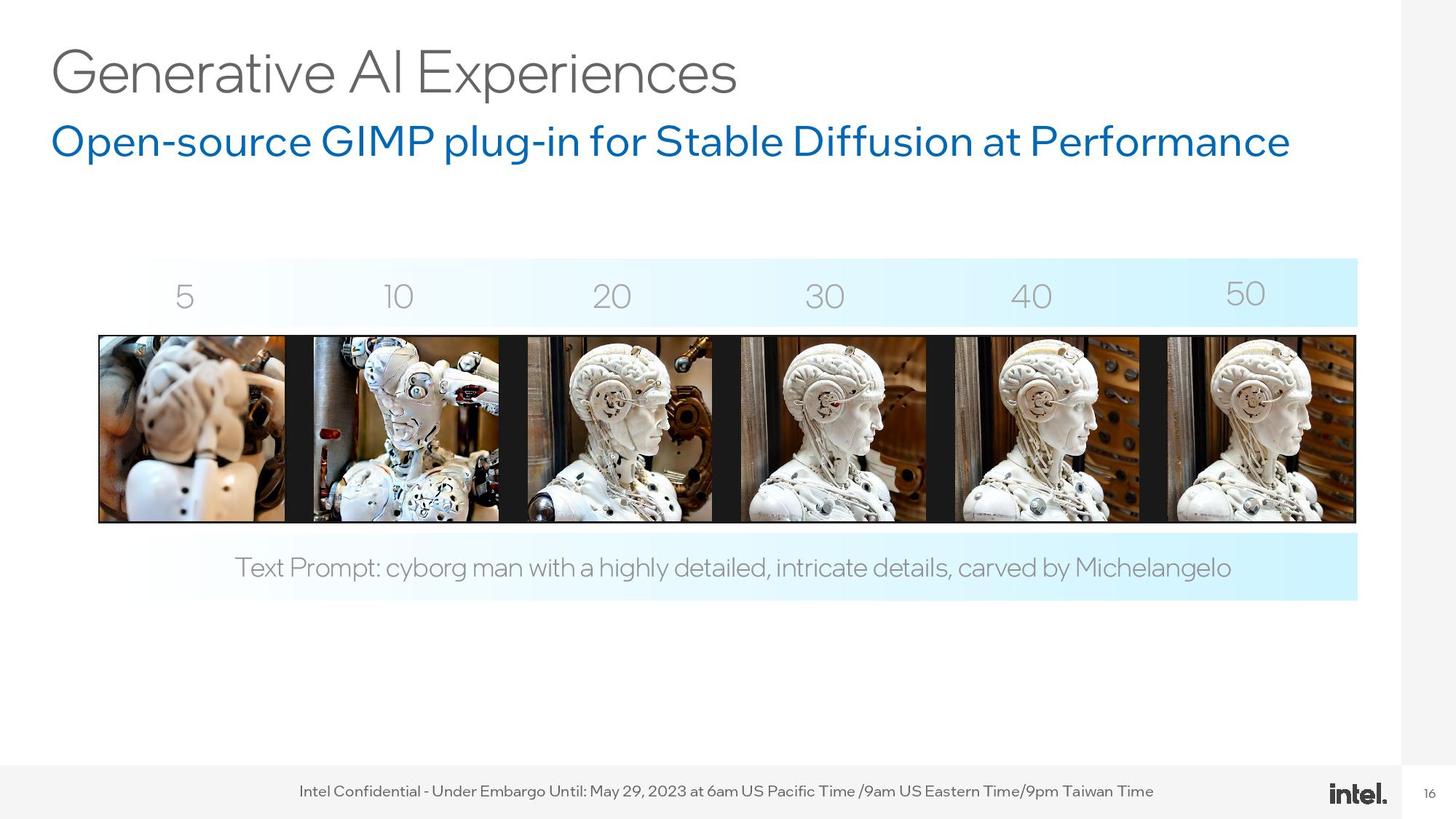

Intel points to a range of different workloads that can benefit from local AI acceleration, including real-time video and audio processing and real-time motion capture for Unreal Engine. Intel also demoed Stable Diffusion running on Meteor Lake's GPU and VPU simultaneously and super-resolution running on only the VPU. However, the demo doesn't give us a frame of reference from a performance perspective, so we can't attest to the relative performance compared to other solutions. Additionally, not all Stable Diffusion models can run locally on the processor -- they'll need discrete GPU acceleration.

A spate of common applications currently support some form of local AI acceleration, but the selection remains quite limited. However, continued development work from Intel and the industry at large will make AI acceleration more common over time.

Here are some slides with more architectural details from the Hot Chips presentation. Intel says that Meteor Lake is on track for release this year, but it will come to laptops first.

All signs currently point to the Meteor Lake desktop PC chips being limited to comparatively lower-end Core i3 and Core i5 models rated for conservative 35W and 65W power envelopes, but Intel has yet to make a formal announcement. We expect to learn more as we near the launch later this year.