Intel will hold a webinar today, March 28, at 8:30 am PDT to provide an update on the company's Data Center and AI roadmaps and businesses. Given Intel's recent teasers on Twitter, we expect new product announcements and perhaps demos with head-to-head benchmarks against AMD's silicon.

UPDATE: Our complete written coverage is now available here: Intel Roadmap Update Includes 144-Core Sierra Forest, Clearwater Forest in 2025. You can also see the full live blog below.

We already know the broad strokes of Intel's existing plan. The company is attempting to execute a daunting roadmap that includes five new process nodes in a mere four years — an unprecedented and audacious goal designed to bring it back to the leadership position in the data center.

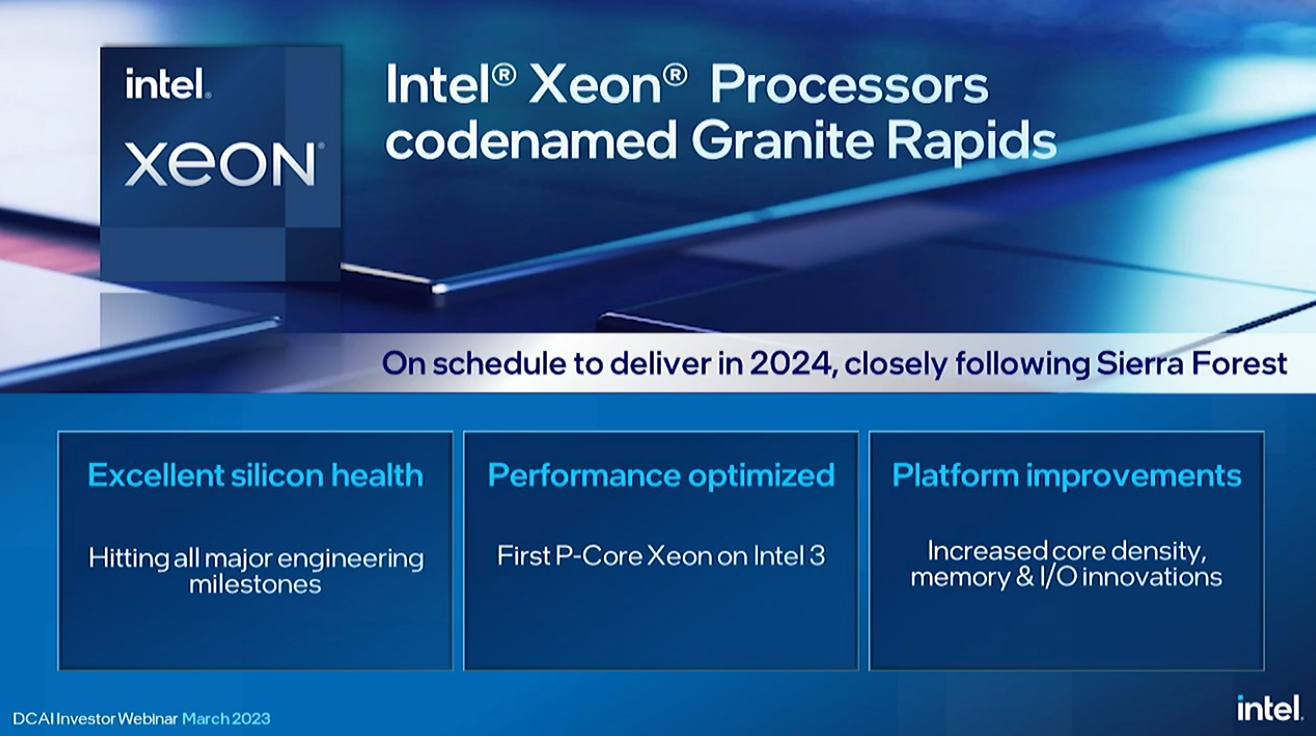

Intel will pair its new process tech with a re-thinking of its Xeon roadmap that includes the new efficiency-focused Sierra Forest chips and high-performance Granite Rapids models. The Sierra Forest chips debut the company's Efficiency cores (E-cores) in its Xeon data center lineup. These are designed to address Arm contenders, not to mention AMD's coming 128-core 5nm EPYC Bergamo processors that take a similar approach. Intel also has its Xeon Emerald Rapids and Granite Rapids chips with standard Performance cores (P-cores), and we expect to learn more details about these chips, too.

Intel's goals are far-reaching, but the company has definitely had plenty of challenges as the Sapphire Rapids Fourth-Gen Xeon CPUs and Ponte Vecchio Max GPUs worked their way to market, with several missteps leading to extended delays. However, the company says it has solved the underlying issues in its process node tech and revamped its chip design methodology to prevent further delays to its next-gen products.

Today the company will reveal if the Xeon roadmap remains intact, and perhaps outline some new goals, too. Intel's Sandra Rivera, the executive vice president and general manager of the Data Center and AI group (DCAI), will host the event. We also expect to see Lisa Spelman, the corporate vice president and general manager of Xeon products.

Pull up a seat; updates will appear below as the show begins.

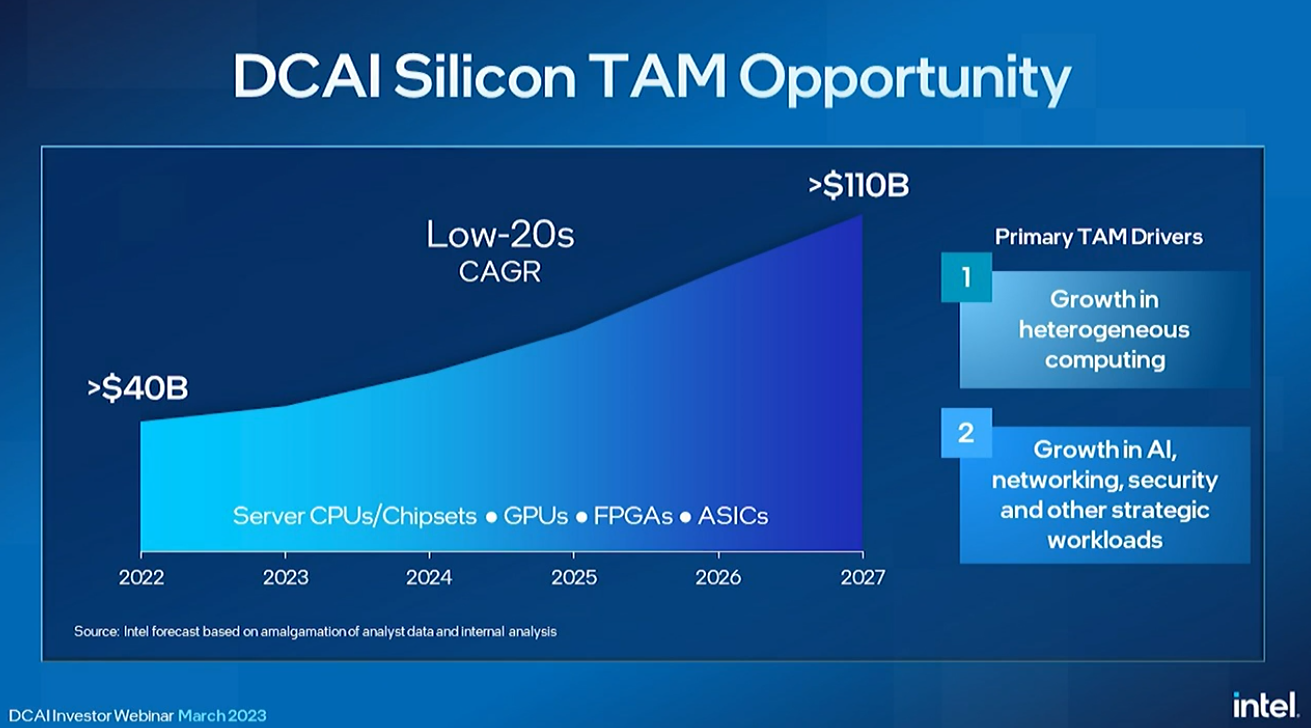

Sandra Rivera has taken the stage and outlined what she will cover: the new data center roadmap, the total addressable market (TAM) for Intel's data center business, which she values at $110 billion, and Intel's efforts in the AI realm.

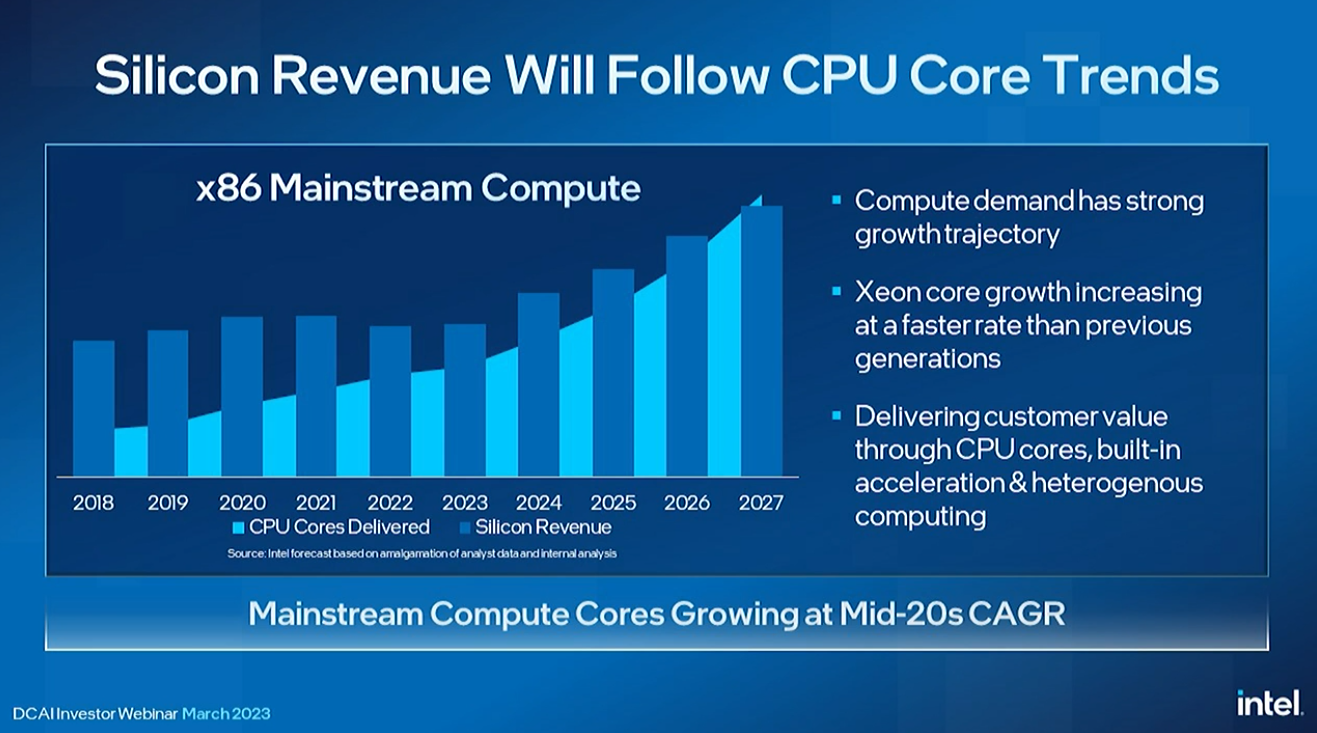

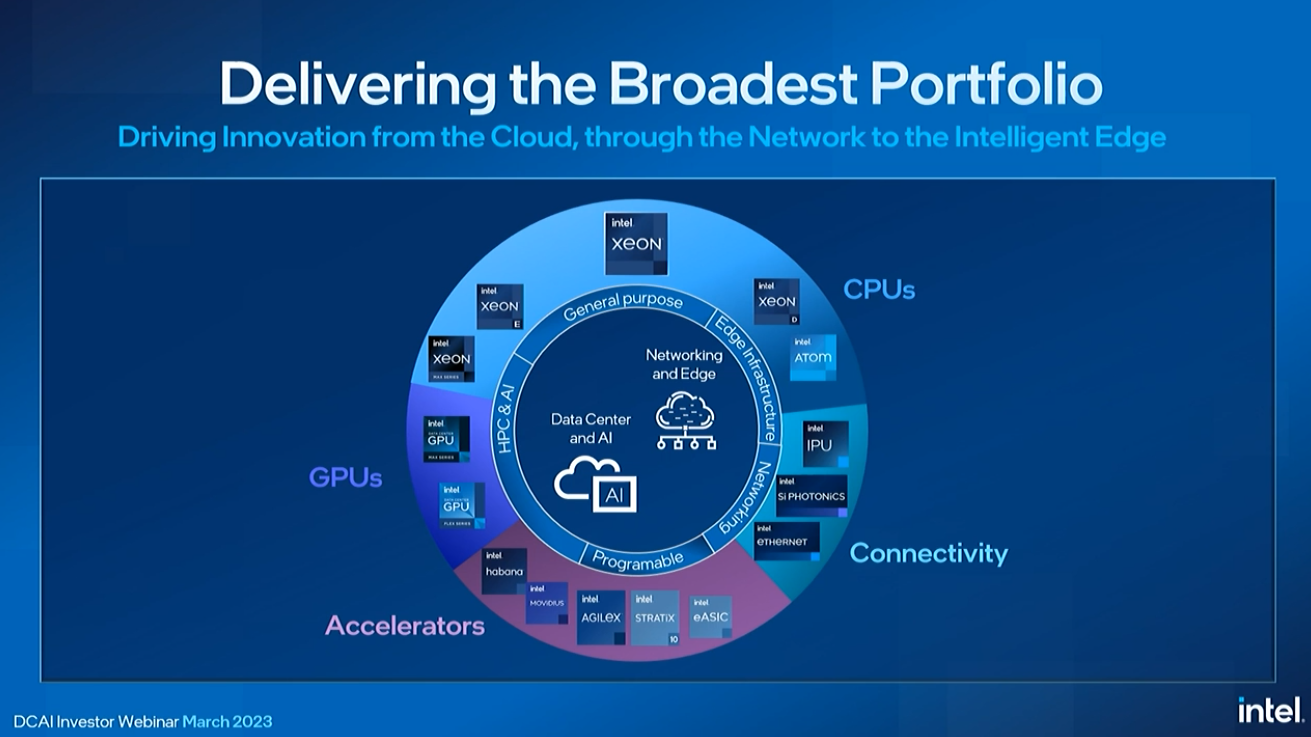

Rivera explained that Intel often looks through the lens of CPUs to measure its total data center revenue, but is now broadening its scope to include different types of compute, like GPUs and custom accelerators.

Intel is working to develop a broad portfolio of software solutions to complement its portfolio of chips.

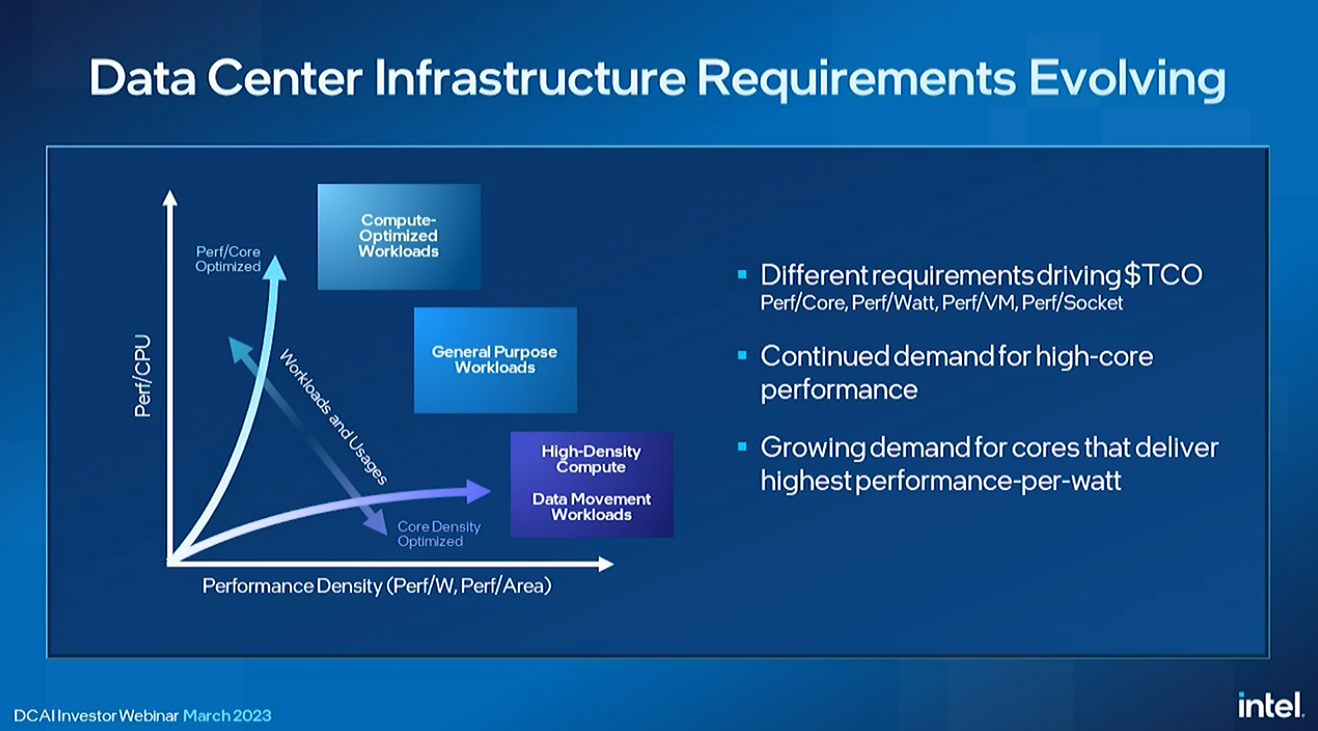

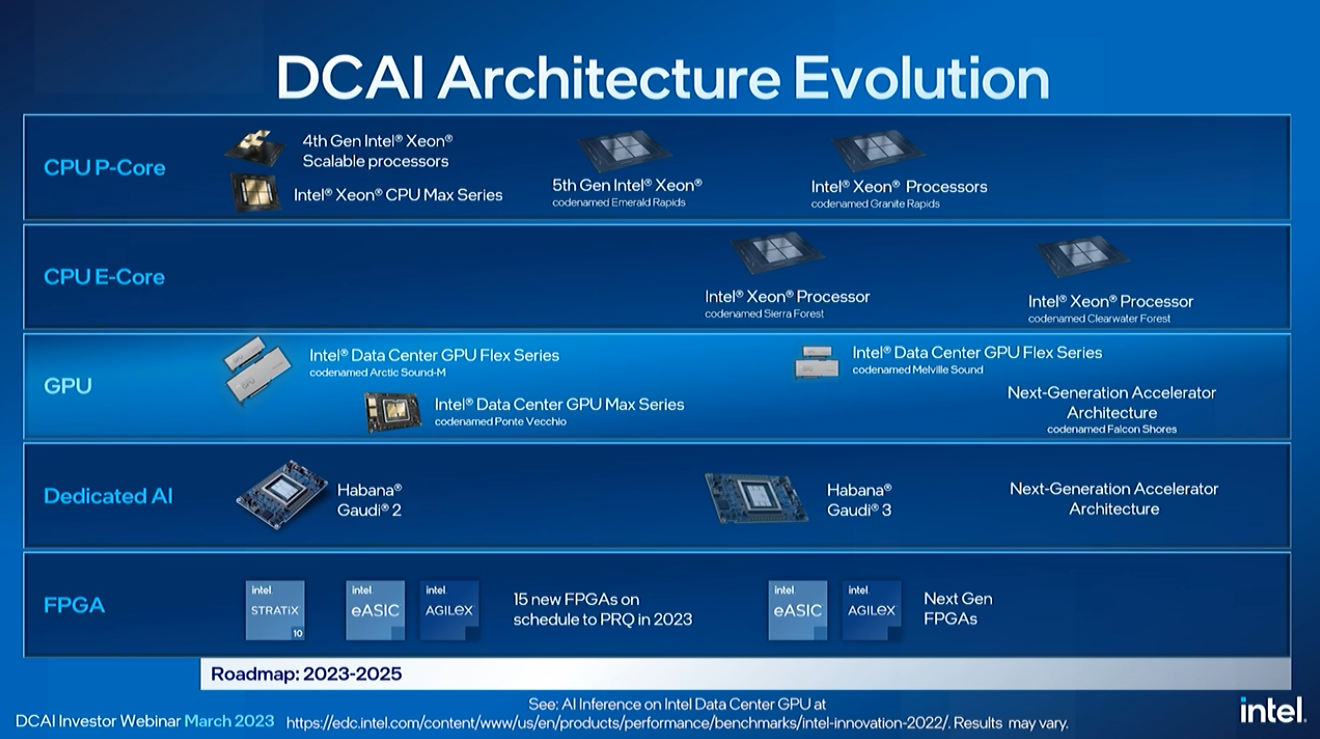

Intel has split its Xeon roadmap into two lines, one with P-cores and one with E-cores, with each having its own advantages. The P-core (Performance core) models are the traditional Xeon data center processors, with only cores that deliver the full performance of Intel's fastest architectures. These chips are designed for top per-core and AI workload performance. They also come paired with accelerators, as we see with Sapphire Rapids.

The E-Core (Efficiency Core) lineup consists of chips with only smaller efficiency cores, much like we see present on Intel's consumer chips, that eschew some features, like AMX and AVX-512, to offer increased density. These chips are designed for high energy efficiency, core density, and total throughput that is attractive to hyperscalers. Intel’s Xeon processors will not have any models with both P-cores and E-cores on the same silicon, so these are distinct families with distinct use-cases.

The E-cores are designed to combat Arm competitors.

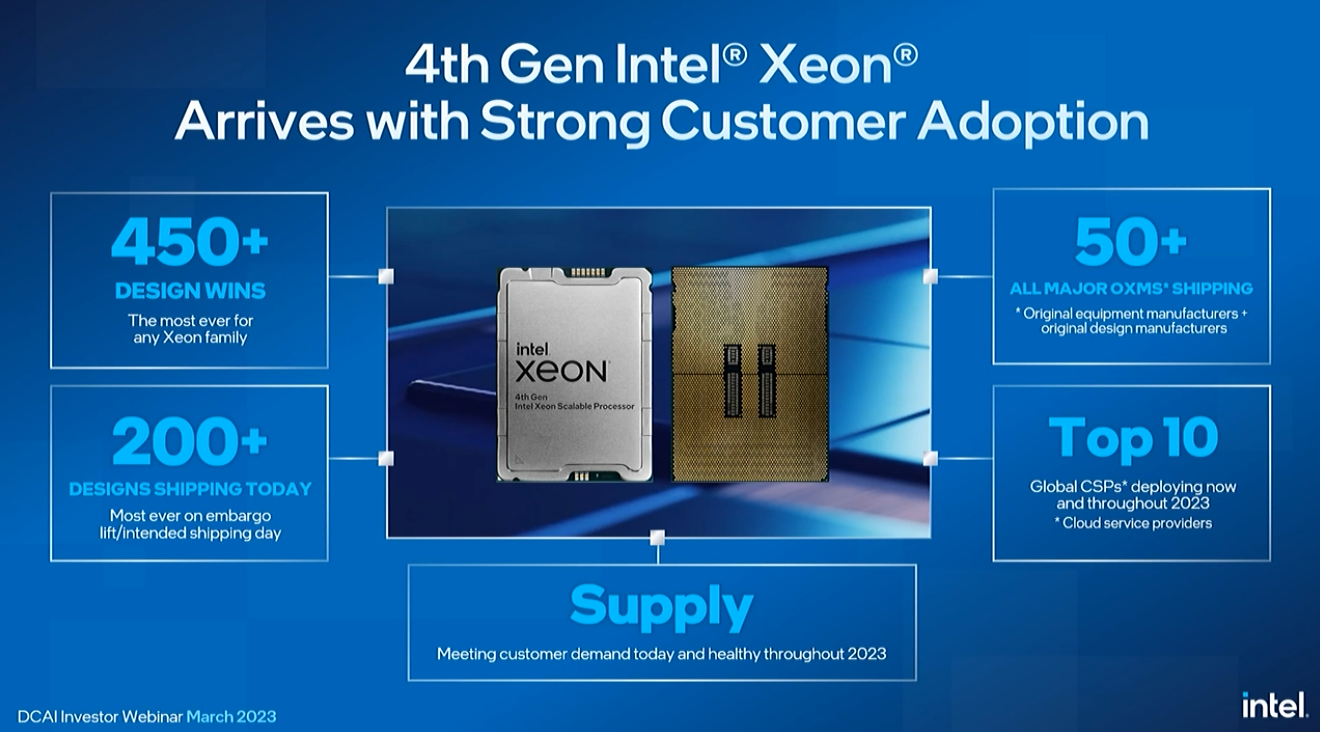

Intel has launched its Sapphire Rapids chips, which have over 450 design wins and 200+ designs shipping from top OEMs. Intel claims a 2.9X gen-on-gen efficiency improvement.

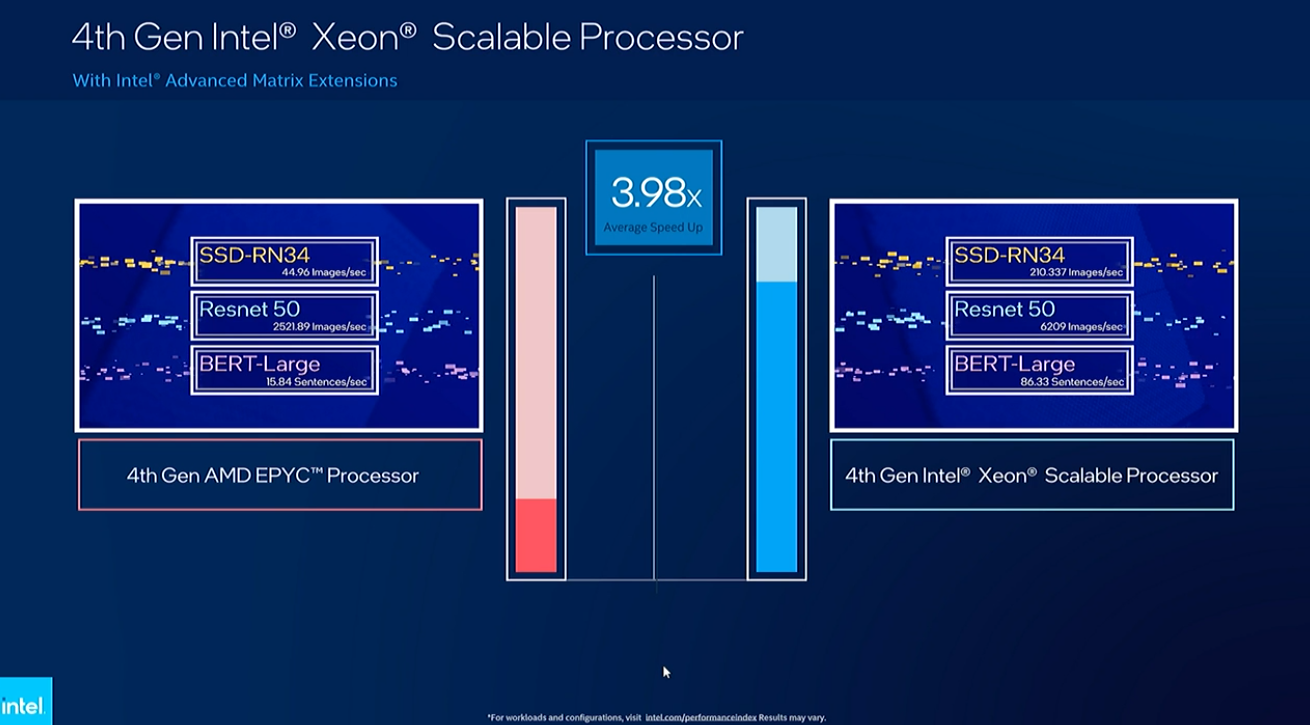

Intel's Sapphire Rapids supports its AI-boosting AMX (Advanced Matrix Extensions) technology, which uses different data types and matrix processing to boost performance. Lisa Spelman conducted a demo showing that a 48-core Sapphire Rapids beats a 48-core EPYC Genoa by 3.9X in a wide range of AI workloads.

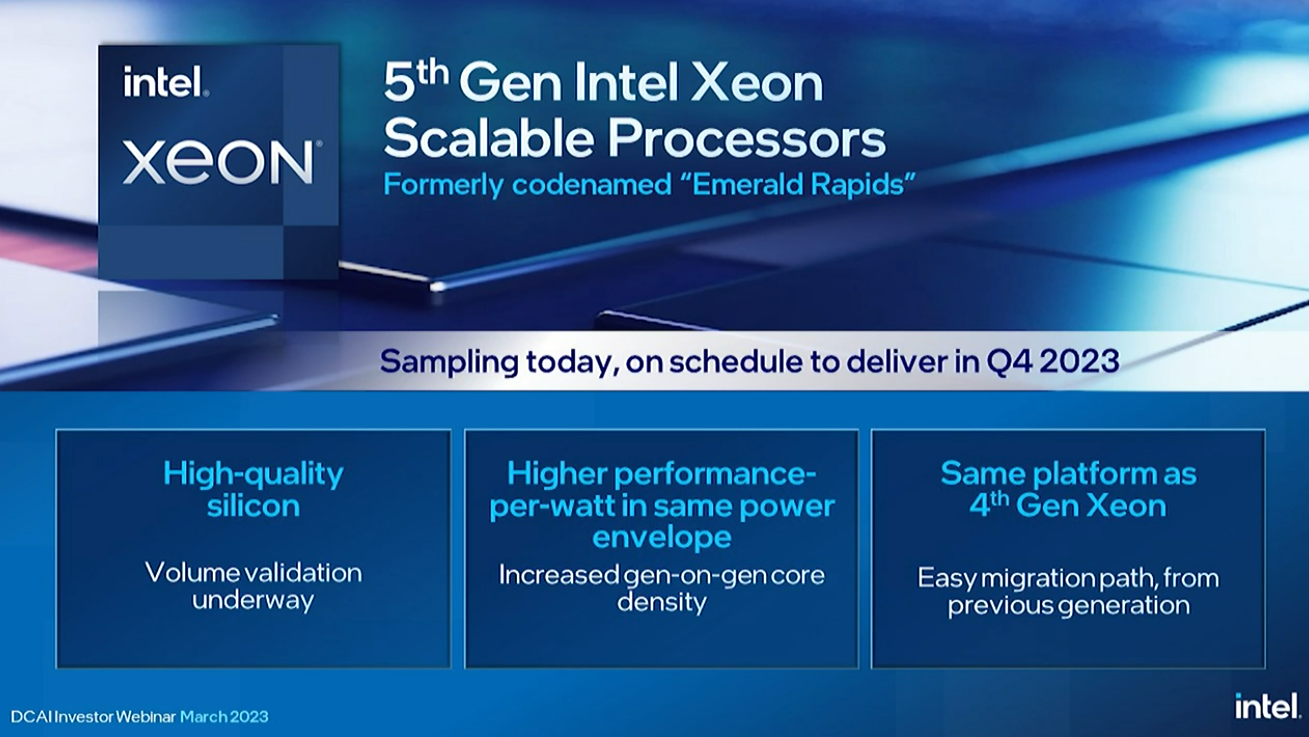

Rivera showed us the company's forthcoming Emerald Rapids chip. Intel’s next-gen Emerald Rapids is scheduled for release in Q4 of this year, which is a compressed timeframe given that Sapphire Rapids just launched a few months ago.

Intel says Emerald Rapids will provide faster performance, better power efficiency, and more importantly, more cores than its predecessor. Intel says it has the Emerald Rapids silicon in-house and that validation is progressing as expected, with the silicon either meeting or exceeding its performance and power targets.

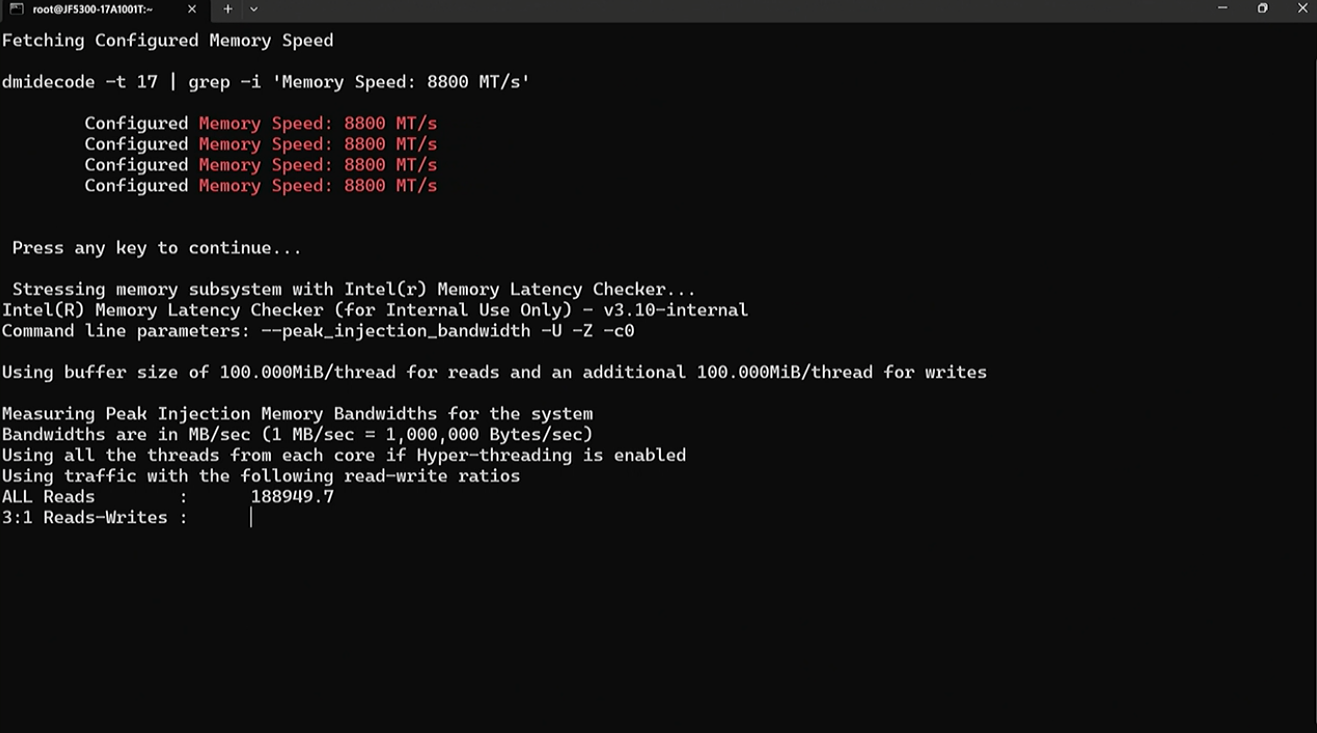

Granite Rapids will arrive in 2024, closely following Sierra Forest. Intel will fab this chip on the ‘Intel 3’ process, which is a vastly improved version of the ‘Intel 4’ process that lacked the high-density libraries needed for Xeon. This is the first P-core Xeon on ‘Intel 3,’ and it will feature more cores than Emerald Rapids, higher memory bandwidth from DDR5-8800 memory, and other unspecified I/O innovations. This chip is sampling to customers now.

Intel demoed a dual-socket Granite Rapids providing a beastly 1.5 TB/s of DDR5 memory bandwidth during its webinar, a claimed 80% peak bandwidth improvement over existing server memory. For perspective, Granite Rapids provides more throughput than Nvidia’s 960 GB/s Grace CPU superchip that is designed specifically for memory bandwidth, and more than AMD’s dual-socket Genoa, which has a theoretical peak of 920 GB/s.

Intel accomplished this feat using DDR5-8800 Multiplexer Combined Rank (MCR) DRAM, a new type of bandwidth-optimized memory that it invented. Intel has already introduced this memory with SK hynix.
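To put those bandwidth figures in context, here is a minimal back-of-the-envelope sketch of the theoretical peak calculation (transfer rate times bytes per transfer times channels times sockets). The 12-channel DDR5-4800 configuration for Genoa is AMD's published spec, but the 12 channels per socket used for Granite Rapids is an assumption on our part, as Intel did not state a channel count in the webinar. The function name and parameters are purely illustrative.

```python
def peak_bandwidth_gbs(mega_transfers_per_s, channels_per_socket, sockets,
                       bytes_per_transfer=8):
    """Theoretical peak DRAM bandwidth in GB/s (decimal units).

    Each DDR5 channel moves 64 bits (8 bytes) of data per transfer.
    """
    mb_per_s = mega_transfers_per_s * bytes_per_transfer * channels_per_socket * sockets
    return mb_per_s / 1000


# Dual-socket AMD Genoa: 12 channels of DDR5-4800 per socket.
genoa_peak = peak_bandwidth_gbs(4800, channels_per_socket=12, sockets=2)

# Dual-socket Granite Rapids with DDR5-8800 MCR DIMMs.
# ASSUMPTION: 12 channels per socket (not confirmed in the webinar).
granite_peak = peak_bandwidth_gbs(8800, channels_per_socket=12, sockets=2)

# Intel's demoed figure for the dual-socket system, in GB/s.
demo_measured = 1500
efficiency = demo_measured / granite_peak

print(f"Genoa theoretical peak:          {genoa_peak:.1f} GB/s")
print(f"Granite Rapids theoretical peak: {granite_peak:.1f} GB/s (assumed 12 channels)")
print(f"Demoed 1.5 TB/s as share of that peak: {efficiency:.0%}")
```

The Genoa result lines up with the roughly 920 GB/s theoretical peak cited above. Under the 12-channel assumption, the demoed 1.5 TB/s would be a measured figure sitting somewhat below the Granite Rapids theoretical ceiling, which is what you would expect from a live benchmark.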

Here we can see the demo.

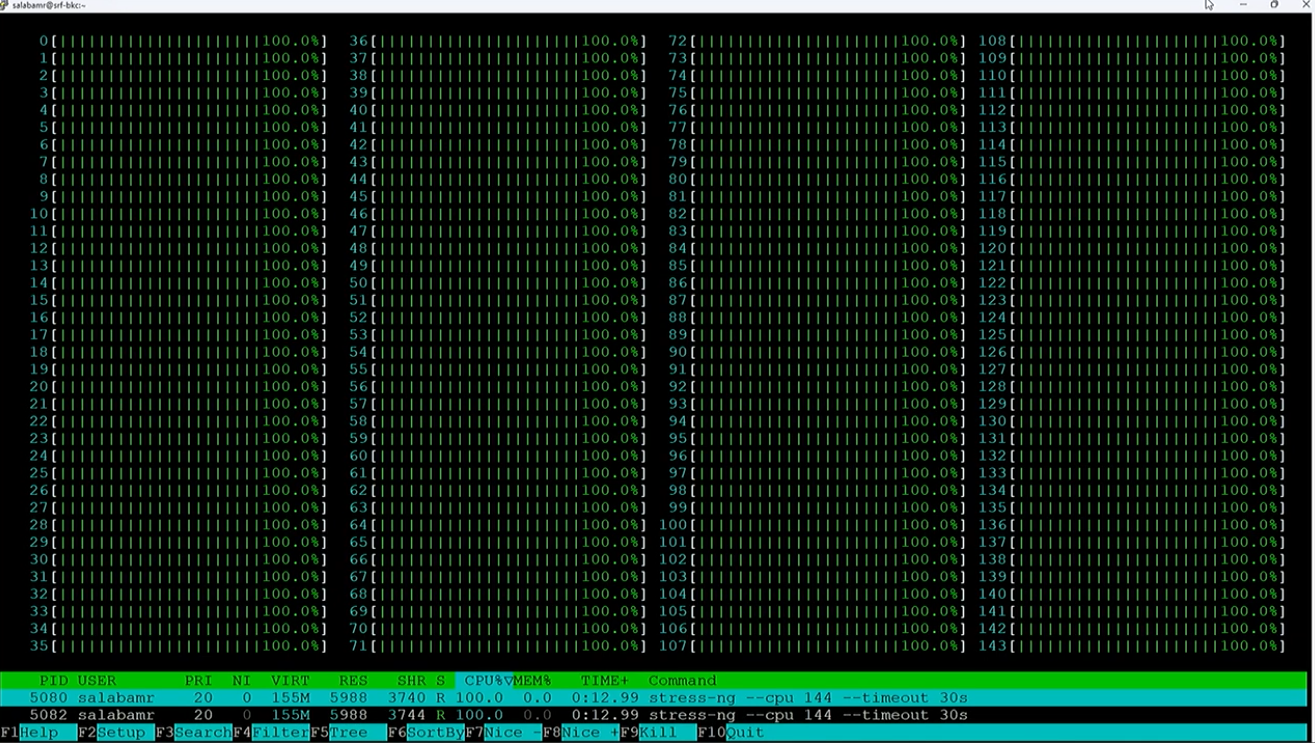

Intel’s e-core roadmap begins with the 144-core Sierra Forest, which will provide 288 cores in a single dual-socket server. The fifth-generation Xeon Sierra Forest’s 144 cores also outweigh AMD’s 128-core EPYC Bergamo in terms of core counts, but the chip likely doesn’t take the lead in thread count: Intel’s e-cores for the consumer market are single-threaded, and the company hasn’t divulged whether the e-cores for the data center will support hyperthreading. AMD has shared that the 128-core Bergamo supports simultaneous multithreading (SMT), thus providing a total of 256 threads per socket.
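The core and thread math is easy to check with a short sketch. The `ServerCpu` class below is a hypothetical helper for illustration only, and the single thread per core for Sierra Forest is an assumption based on Intel's consumer e-cores, since Intel has not confirmed the threading configuration for the data center parts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerCpu:
    """Hypothetical helper for comparing core and thread counts."""
    name: str
    cores_per_socket: int
    threads_per_core: int  # 1 = single-threaded cores, 2 = two threads per core

    def cores(self, sockets: int = 1) -> int:
        return self.cores_per_socket * sockets

    def threads(self, sockets: int = 1) -> int:
        return self.cores(sockets) * self.threads_per_core


# ASSUMPTION: one thread per core, as on Intel's consumer e-cores.
sierra_forest = ServerCpu("Sierra Forest", cores_per_socket=144, threads_per_core=1)

# AMD has said Bergamo runs two threads per core.
bergamo = ServerCpu("EPYC Bergamo", cores_per_socket=128, threads_per_core=2)

for cpu in (sierra_forest, bergamo):
    print(f"{cpu.name}: {cpu.cores(1)} cores / {cpu.threads(1)} threads per socket, "
          f"{cpu.cores(2)} cores / {cpu.threads(2)} threads in a dual-socket server")
```

If Intel does enable two threads per core on its data center e-cores, changing `threads_per_core` to 2 for Sierra Forest would flip the thread-count comparison in Intel's favor.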

Rivera says Intel has powered on the silicon and had an OS booting in less than 18 hours (a company record). This chip is the lead vehicle for the ‘Intel 3’ process node, so success is paramount. Intel is confident enough that it has already sampled the chips to its customers and demoed all 144 cores in action at the event. Intel aims the e-core Xeon models at specific types of cloud-optimized workloads at first but expects them to be adopted for a far broader range of use-cases once they are in market.

Spelman returned to show us all 144 cores in the Sierra Forest chip working in a demo.

Rivera has now announced the follow-on to Sierra Forest: Clearwater Forest. Intel didn’t share many details beyond a release in the 2025 timeframe, but did say it will use the 18A process for the chip, not the 20A process node that arrives half a year earlier. This will be the first Xeon chip with the 18A process.

Intel also has a full roster of other chips for AI workloads. Intel pointed out that it will launch 15 new FPGAs this year, a record for its FPGA group. We have yet to hear of any major wins with the Gaudi chips, but Intel does continue to develop its lineup and has a next-gen accelerator on the roadmap. The Gaudi 2 AI accelerator is shipping, and Gaudi 3 has been taped in.

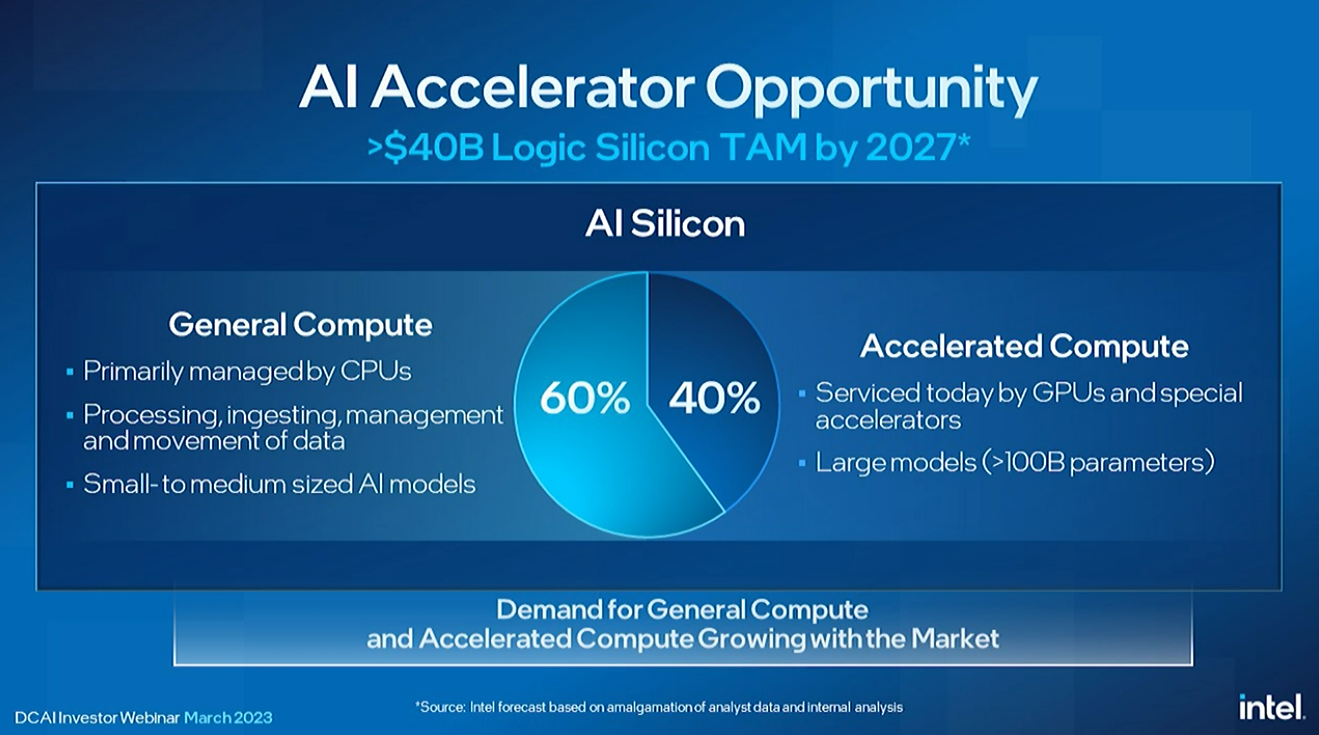

Rivera outlined Intel's broad efforts in the AI space. Intel predicts that AI workloads will continue to be run predominantly on CPUs, with 60% of all models, mainly the small- to medium-sized models, running on CPUs. Meanwhile, the large models will comprise roughly 40% of the workloads and run on GPUs and other custom accelerators.

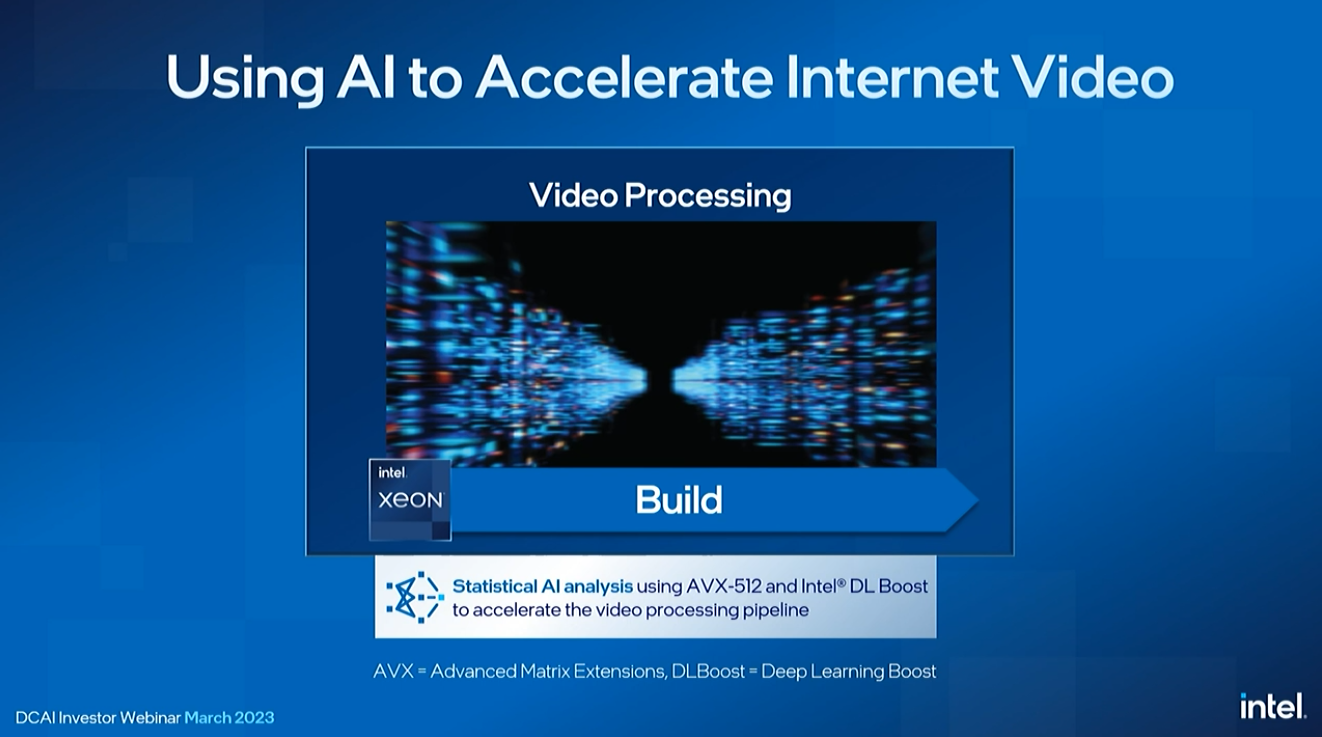

Intel is working with content providers to perform AI workloads on video streams, and AI-based compute can accelerate, compress and encrypt data moving across the network, all of which occurs on a single Sapphire Rapids CPU.

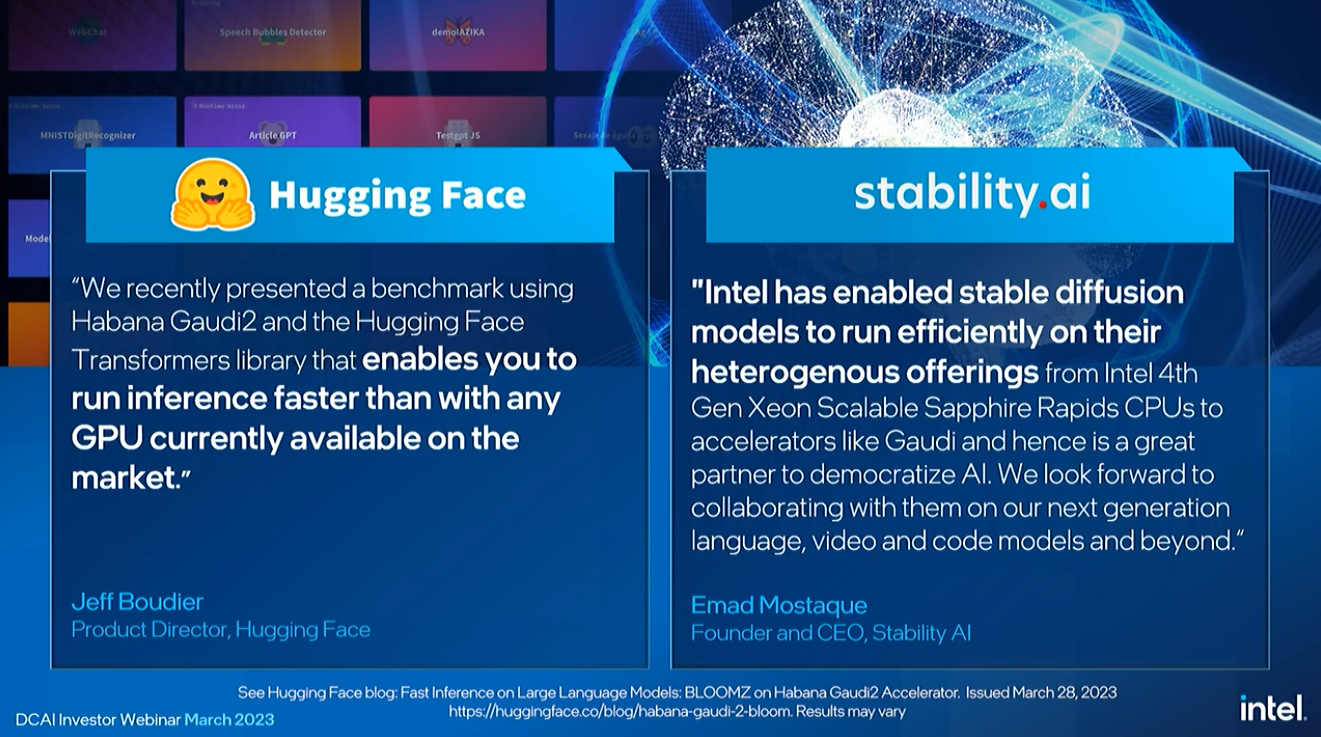

CPUs are also good for smaller inference models, but discrete accelerators are important for larger models. Intel uses its Gaudi accelerators and Ponte Vecchio GPUs to address this market. Hugging Face recently said Gaudi gave it 3X the performance in the Hugging Face Transformers library.

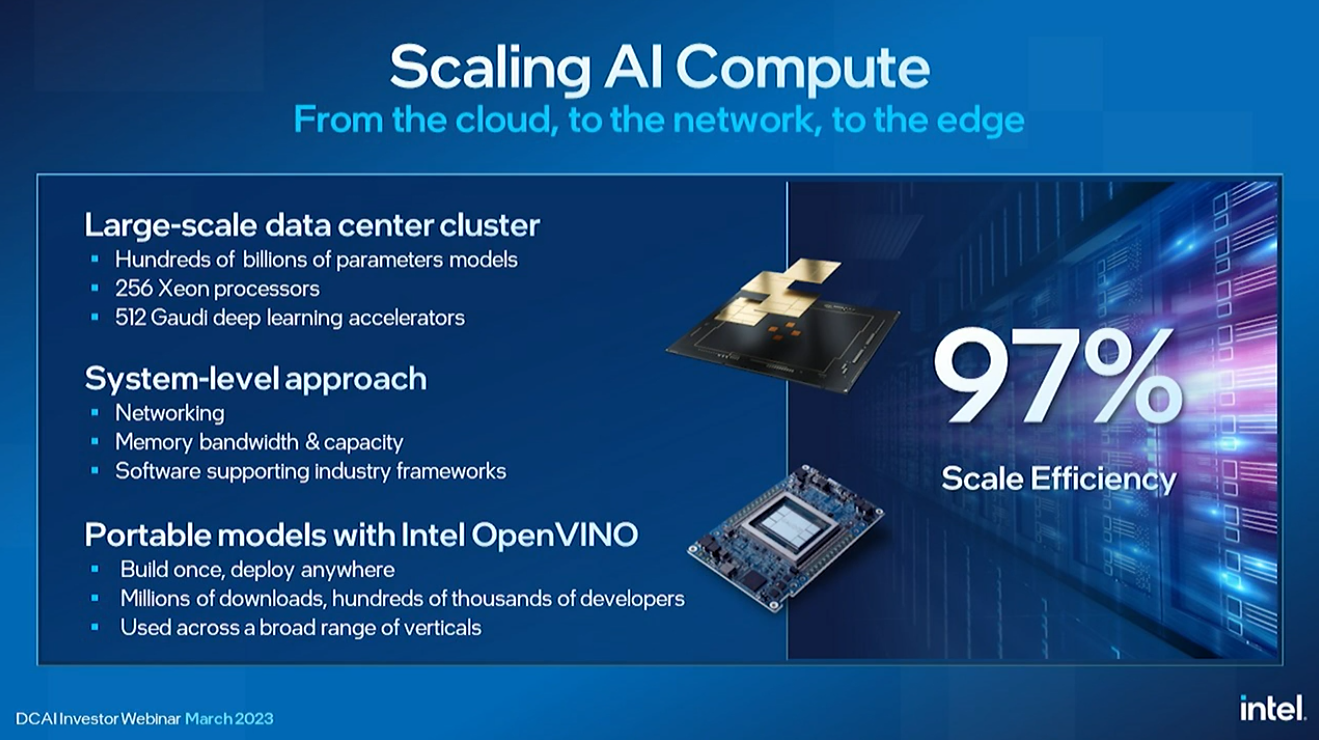

Rivera touted Intel's 97% scale efficiency in a cluster benchmark.

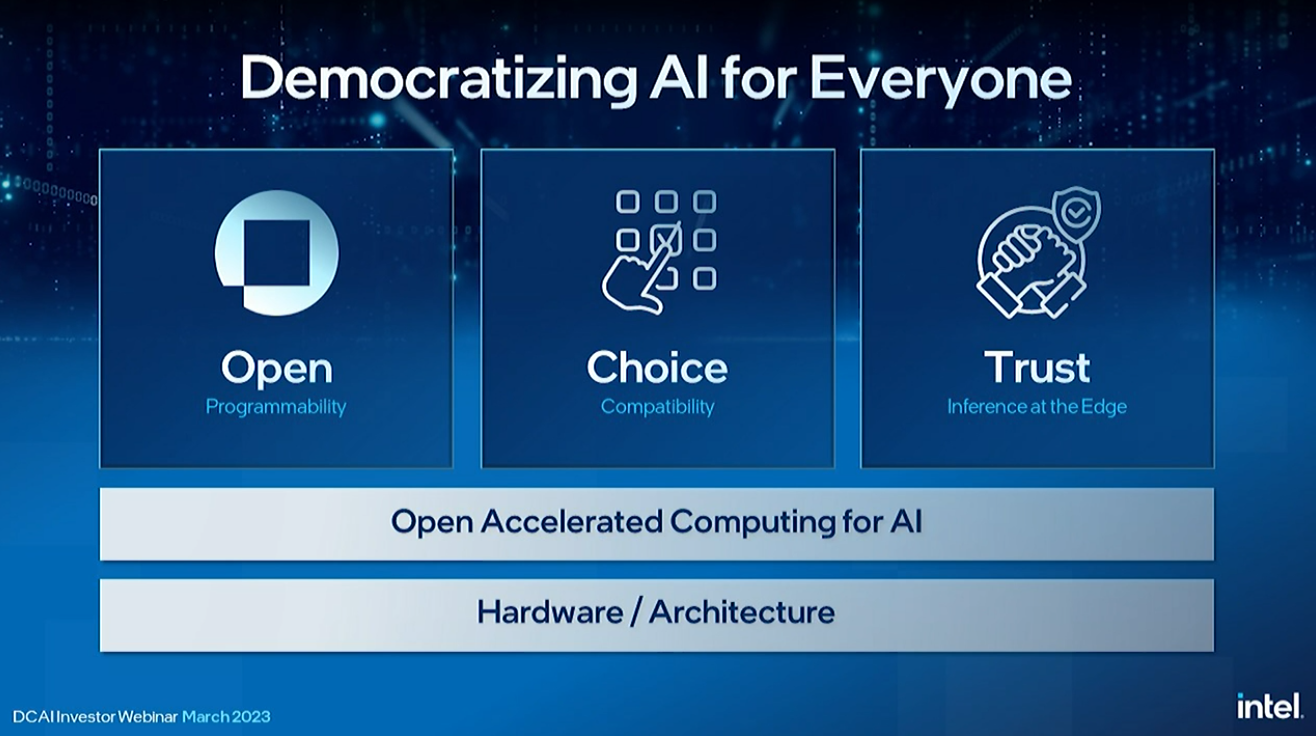

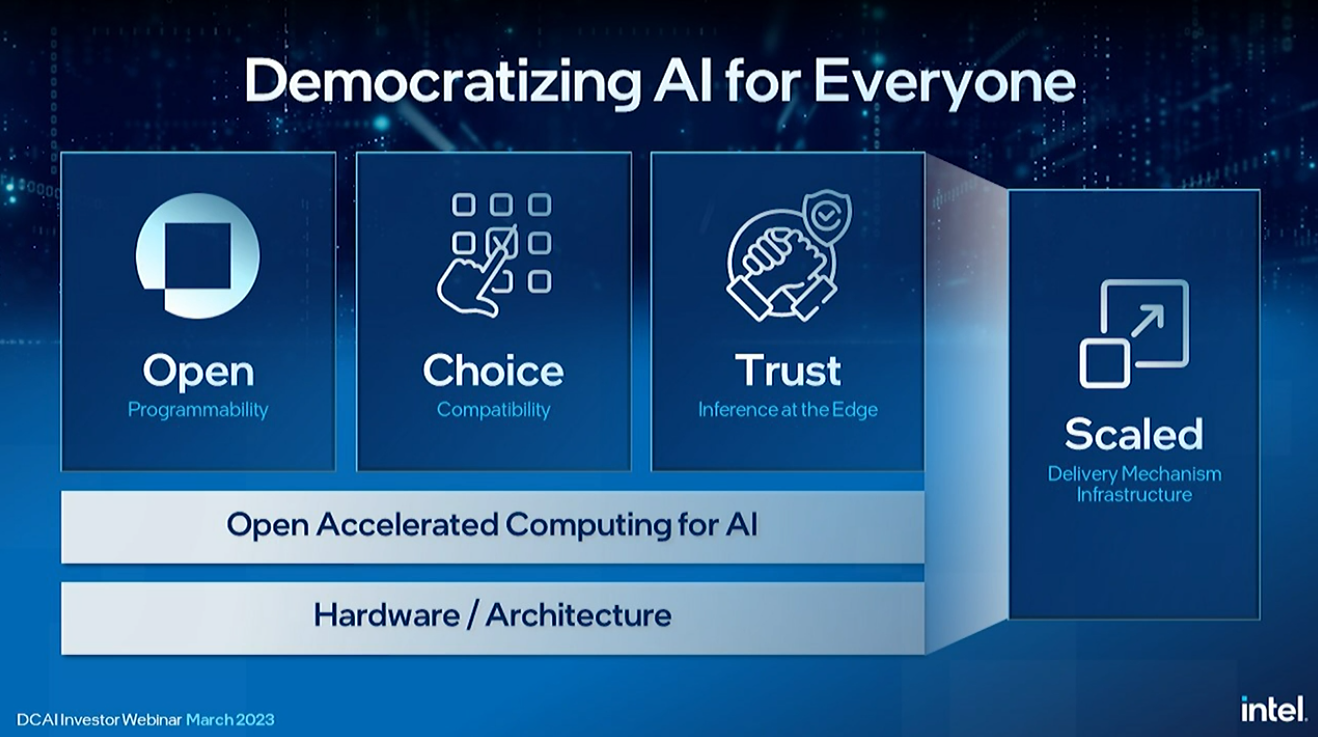

Greg Lavender, Intel's SVP and CTO, joined the webcast to discuss the democratization of AI.

Intel is also working to build out a software ecosystem for AI that rivals Nvidia’s CUDA. This also includes taking an end-to-end approach that includes silicon, software, security, confidentiality, and trust mechanisms at every point in the stack.

Intel aims for an open, multi-vendor approach to providing an alternative to Nvidia's CUDA.

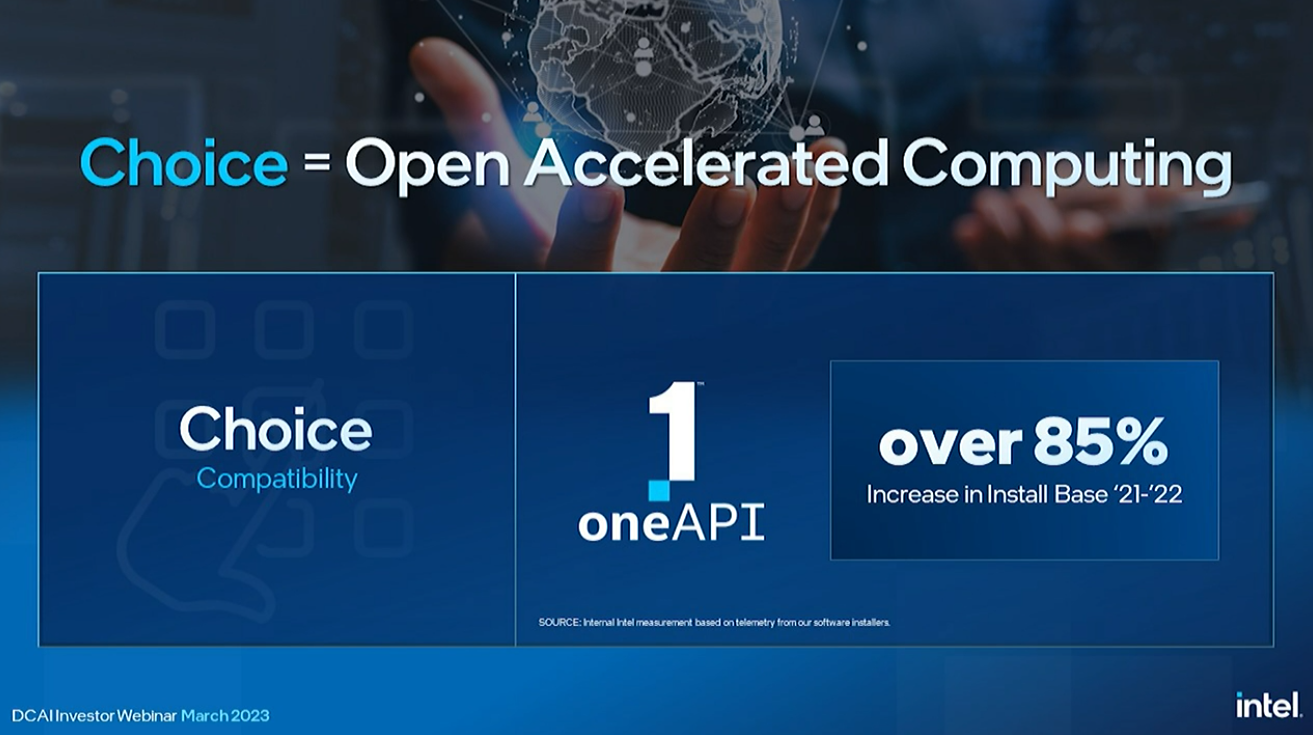

Intel's efforts with oneAPI continue, with 6.2 million active developers using the Intel tools.

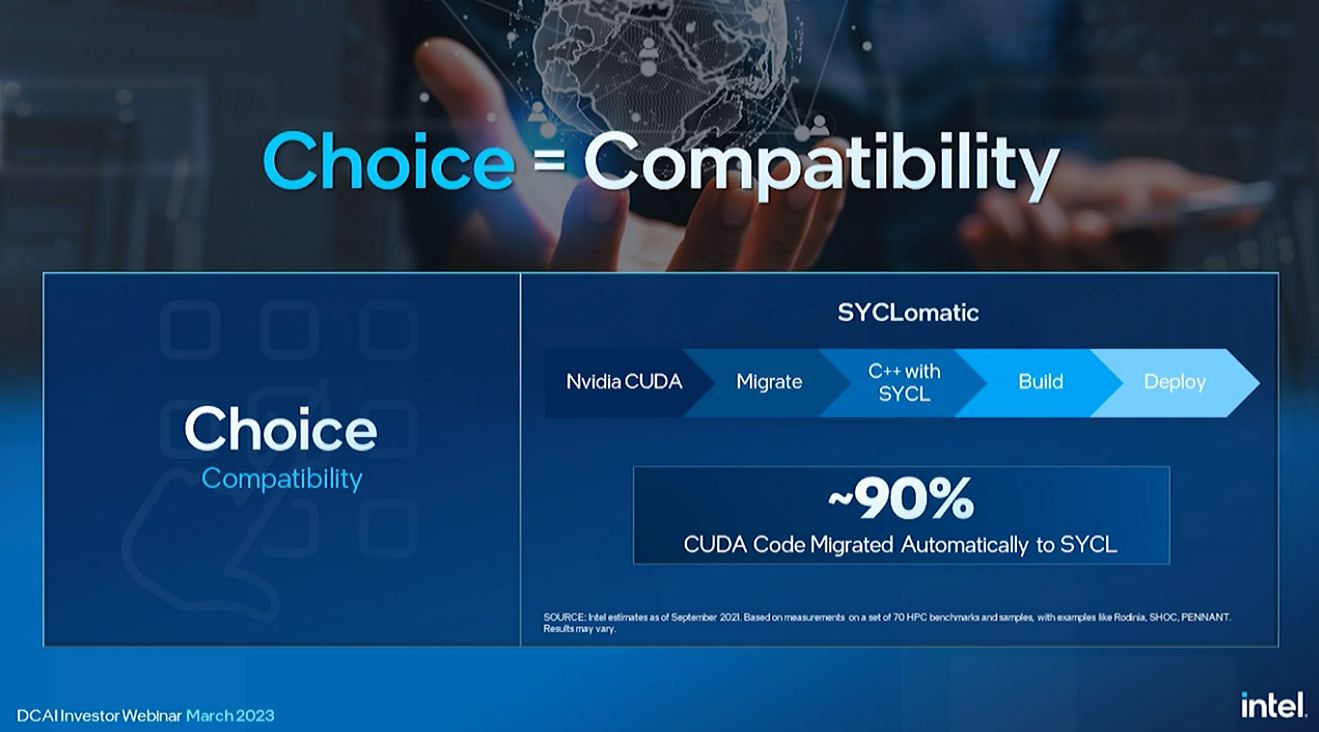

Intel launched SYCLomatic to automatically migrate CUDA code to SYCL.

Lavender also outlined the company's efforts to provide scale and accelerate development through the Intel Developer Cloud. The number of users has quadrupled since Intel announced the program in 2021. And with that, he passed the baton back to Rivera.

Rivera thanked the audience for joining the webinar and also shared a summary of the major announcements.

In summary, Intel announced that Sierra Forest, its first-gen efficiency Xeon, will come with an incredible 144 cores, thus offering better core density than AMD’s competing 128-core EPYC Bergamo chips. The company also teased the chip in a demo. Intel also revealed the first details of Clearwater Forest, its second-gen efficiency Xeon that will debut in 2025. Intel skipped over its 20A process node for the more performant 18A for this new chip, which speaks volumes about its faith in the health of its future node.

Intel also presented several demos, including head-to-head AI benchmarks against AMD’s EPYC Genoa that show a 3.9X performance advantage for Xeon in a comparison of two 48-core chips, and a memory throughput benchmark that showed the next-gen Granite Rapids Xeon delivering an incredible 1.5 TB/s of bandwidth in a dual-socket server.

This is an investor event, so the company will now conduct a Q&A that focuses on the financial side of the presentation. We will not cover the Q&A section here unless the answers are especially pertinent to the hardware that is our forte. If you're more interested in the financial side of the conversation, you can see the webinar here.