Intel officially announced the Arc B580 and B570 'Battlemage' GPUs on December 3, 2024. The Arc B580 launched on December 13, delivering a potent blend of performance, features, and value, and it has been mostly sold out in the weeks since. The B570 will arrive on January 16, 2025, and we expect more Battlemage GPUs are still to come.

Intel won't comment on future products, but these are the first two of what should eventually be a full range of discrete GPUs for the Battlemage family, designed for both desktop and mobile markets. The Arc B580 with 12GB of VRAM debuts at $249, while the B570 comes equipped with 10GB of VRAM and will retail for $219.

Here are the known specs for the B580 and B570, with speculation on what we might see from future Arc Battlemage GPUs.

Battlemage Specifications

We've known the Battlemage name officially for a long time, and in fact, we know the next two GPU families Intel plans to release in the coming years: Celestial and Druid. But Intel has now officially spilled the beans on the specifications, pricing, features, and more for the first two Battlemage (BMG) graphics cards, the B580 and B570.

Most of the details line up with recent leaks, and we also have our own performance results from the B580 along with Intel's own performance estimates. These are mainstream to budget graphics cards that deliver a good value, especially in games where the drivers work as expected. If you play older games or esoteric stuff, you may encounter more issues.

The other three GPUs in the above table are, for now, speculative on our part. There have been rumors of a BMG-G10 GPU for a while now, and there's potentially a third BMG-31 GPU in the works as well, but no hard details have been given so far. It's not even clear whether BMG-G10 will be the biggest chip, or if BMG-31 will be larger.

If Intel sticks with the naming pattern established with Arc Alchemist, we anticipate seeing at least an Arc B770 and B750 from the largest chip, and then the B380 from whatever ends up as the smallest chip. However, an alternate rumor says we could see a 48 Xe-core Battlemage GPU with a 384-bit memory interface and 24GB of VRAM. Large grains of salt are in order, and, as indicated in the table, we suspect the largest Battlemage GPU will stick with 32 Xe-cores.

Given the B580 lands about 10% ahead of the RTX 4060 overall based on our testing, a B770 with 60% more GPU cores and 33% more memory and bandwidth should be about 50% faster. That would potentially put it as high as the RTX 4070 Super, maybe even the RTX 4070 Ti Super, based on our GPU benchmarks hierarchy. But again, drivers and support tend to be more of a wildcard with Intel, so some games may not run as well.
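The ratios behind that estimate are easy to reproduce. This is a minimal sketch that assumes a hypothetical B770 with 32 Xe-cores, 16GB, and a 256-bit bus at the same 19 Gbps as the B580; none of those specs are confirmed. It simply shows that a roughly 50% estimate sits between pure bandwidth scaling and pure compute scaling.

```python
from dataclasses import dataclass


@dataclass
class GpuConfig:
    name: str
    xe_cores: int
    vram_gb: int
    bus_width_bits: int
    data_rate_gbps: float

    @property
    def bandwidth_gbs(self) -> float:
        # Bus width in bytes times the per-pin data rate.
        return self.bus_width_bits / 8 * self.data_rate_gbps


def scaling_ratios(big: GpuConfig, small: GpuConfig) -> dict[str, float]:
    """Resource ratios (big / small) that bound a naive speedup estimate."""
    return {
        "compute": big.xe_cores / small.xe_cores,
        "capacity": big.vram_gb / small.vram_gb,
        "bandwidth": big.bandwidth_gbs / small.bandwidth_gbs,
    }


b580 = GpuConfig("Arc B580", xe_cores=20, vram_gb=12, bus_width_bits=192, data_rate_gbps=19.0)
# Hypothetical part: these specs are assumptions, not announced figures.
b770_guess = GpuConfig("Arc B770 (hypothetical)", xe_cores=32, vram_gb=16, bus_width_bits=256, data_rate_gbps=19.0)

ratios = scaling_ratios(b770_guess, b580)
for resource, ratio in ratios.items():
    print(f"{resource:>9}: {ratio:.2f}x")
# A ~1.5x estimate lands between the bandwidth-bound (~1.33x) and compute-bound (1.6x) cases.
```

Real scaling also depends on clocks, cache sizes, and drivers, so treat this as a bounding exercise rather than a prediction.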

The middle-tier B750, on the other hand, represents a lot of unknowns. Could it have 16GB of memory as well? Yes. Or Intel could disable one memory channel and give it 14GB, just as the B570 ends up with 10GB from the same BMG-G21 GPU used in the B580. And finally, there's the question of an even lower-tier B380, which may or may not exist. The potential profits from sub-$150 GPUs have all but vanished these days.

We anticipate any remaining Battlemage GPUs will launch in 2025, and sooner rather than later would be prudent since Nvidia and AMD are also launching new GPUs, but we'll have to wait and see what Intel can manage to put together.

Battlemage Performance

Next, let's talk about performance, both using our own test results as well as what Intel provided prior to the B580 launch. We'll start with our results, as they're independent and we know precisely how everything was tested.

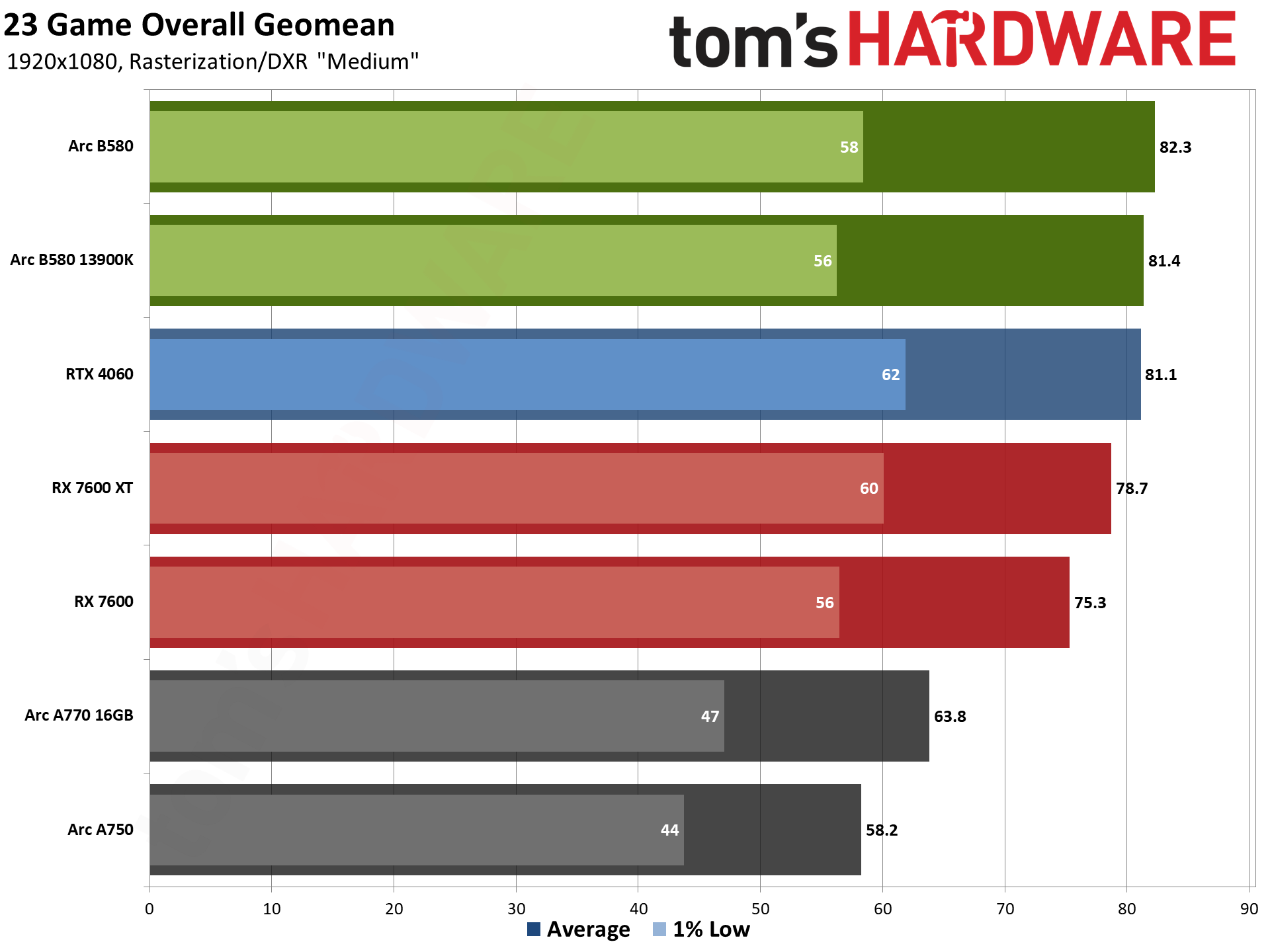

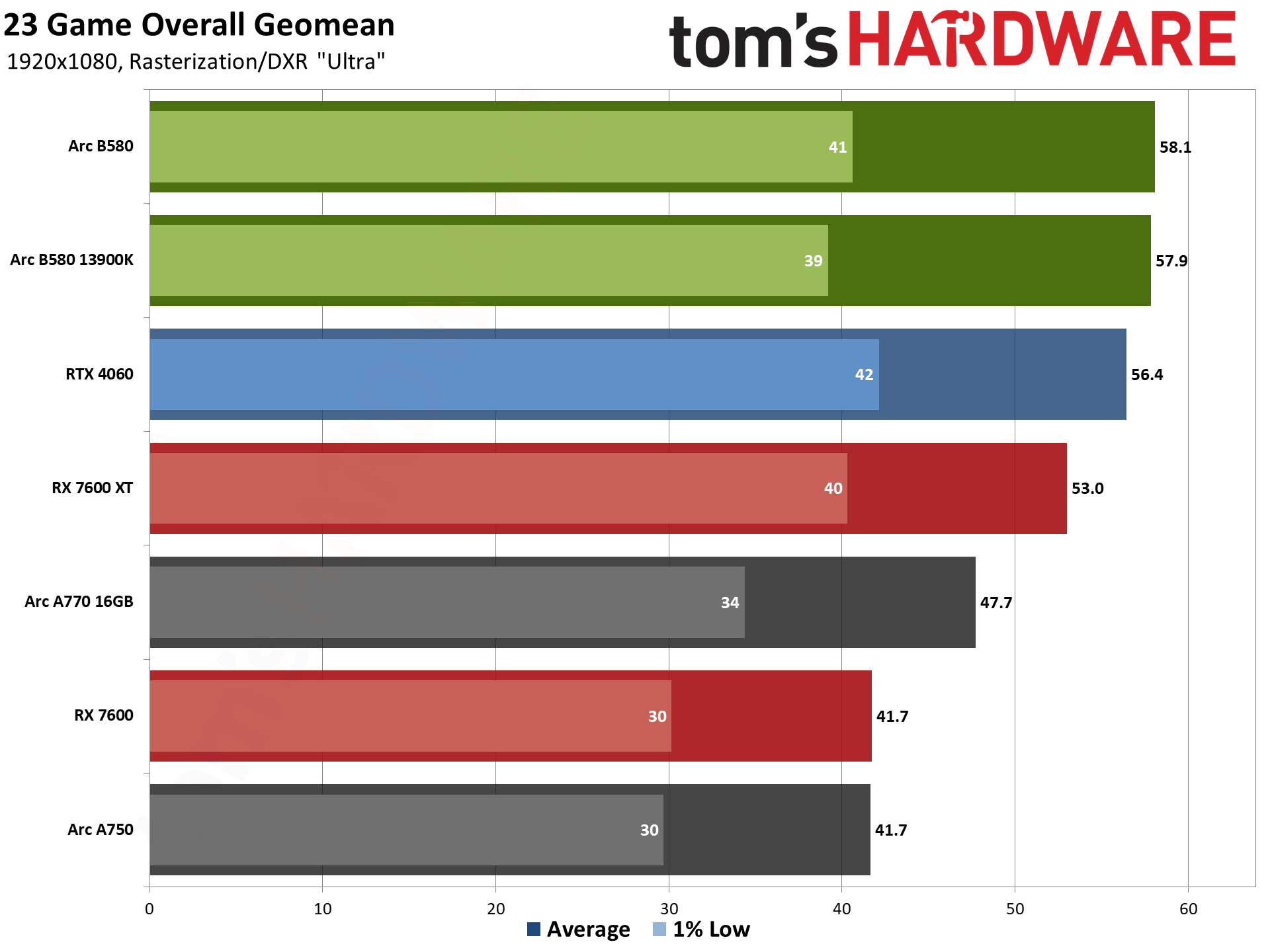

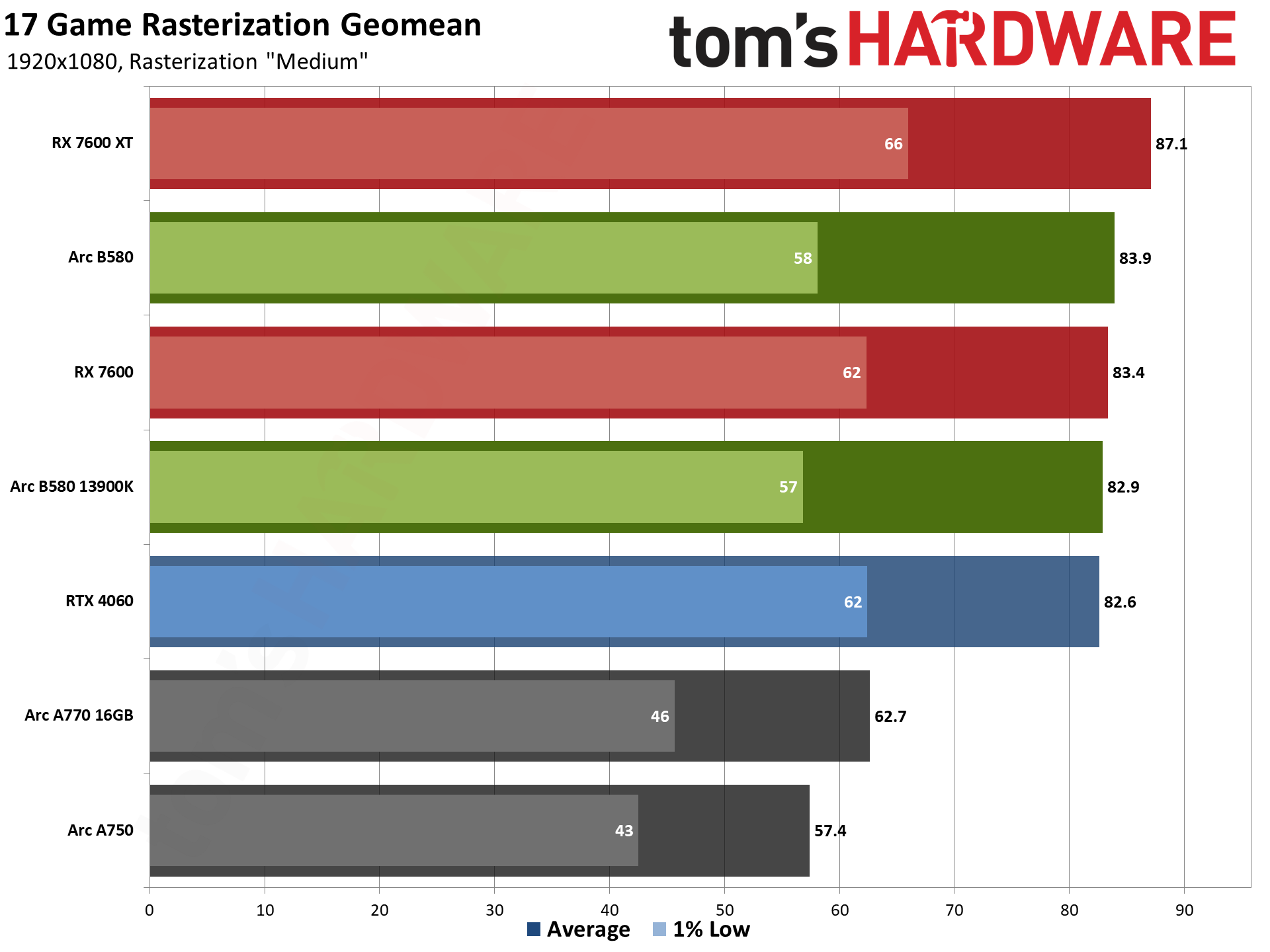

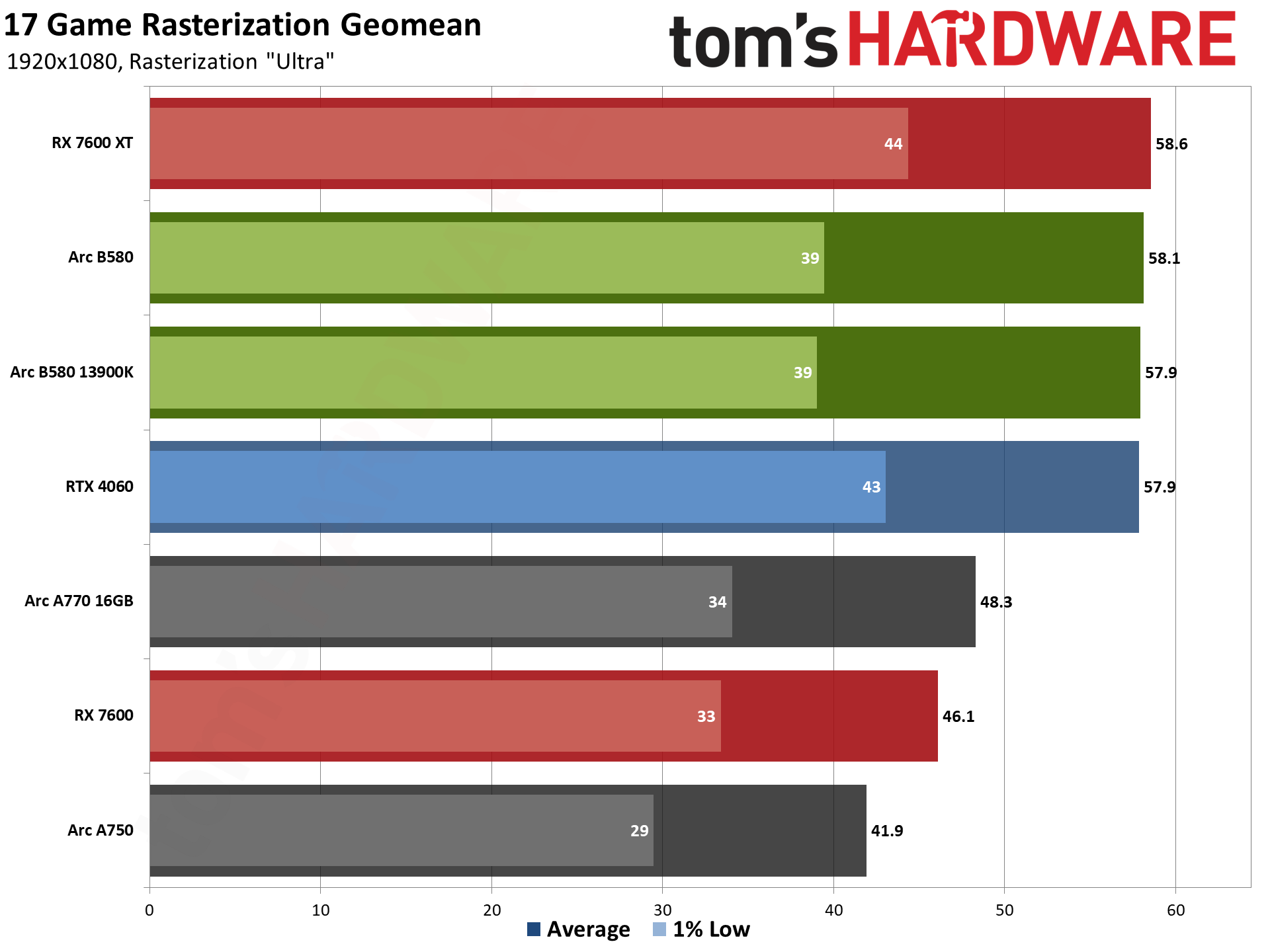

Here's our high-level overview of Arc B580, using the geometric mean of performance in 23 different games. Overall, at 1080p medium, the B580 trades blows with Nvidia's existing RTX 4060, but that's not really pushing either GPU very hard. Stepping up to 1080p ultra, the B580 claims a still-negligible 3% lead over the 4060, with a larger 10% lead over AMD's more expensive RX 7600 XT.
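For readers unfamiliar with the method, here's a minimal sketch of how a geometric-mean summary and a relative lead can be computed. The per-game FPS values are made-up placeholders, not our test data.

```python
import math


def geometric_mean(values: list[float]) -> float:
    """Geometric mean computed in the log domain to avoid overflow on long lists."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("geometric mean needs a non-empty list of positive values")
    return math.exp(sum(math.log(v) for v in values) / len(values))


def relative_lead(fps_a: list[float], fps_b: list[float]) -> float:
    """Percent by which card A's geomean exceeds card B's (negative if A is slower)."""
    return (geometric_mean(fps_a) / geometric_mean(fps_b) - 1) * 100


# Placeholder per-game results (same games, same order) for two hypothetical cards.
card_a = [60.0, 90.0, 45.0, 120.0]
card_b = [58.0, 88.0, 44.0, 115.0]
print(f"Card A leads by {relative_lead(card_a, card_b):.1f}%")
```

The geometric mean weights each game by its relative change, so a title running at 200 fps doesn't drown out one running at 40 fps the way an arithmetic average would.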

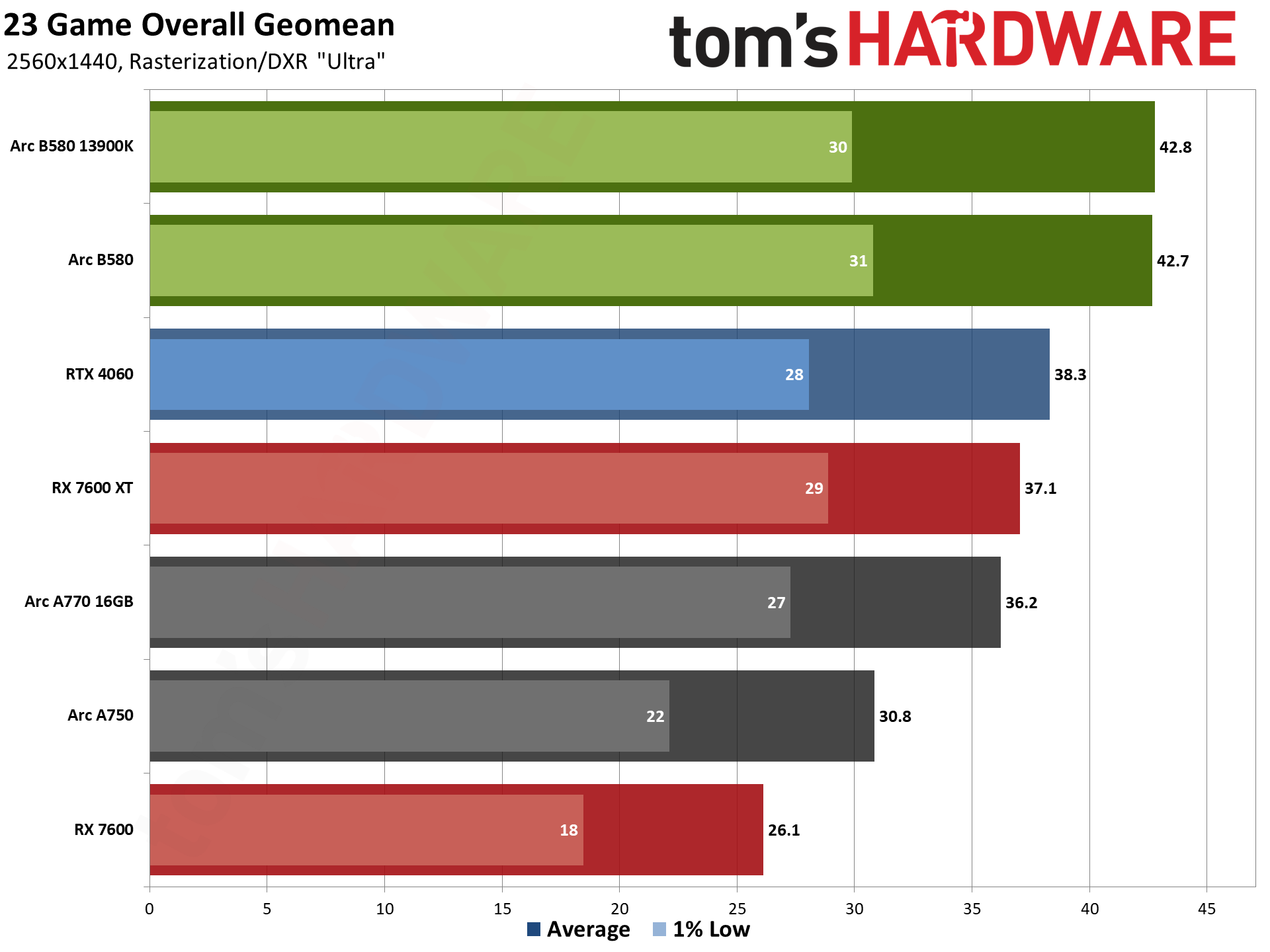

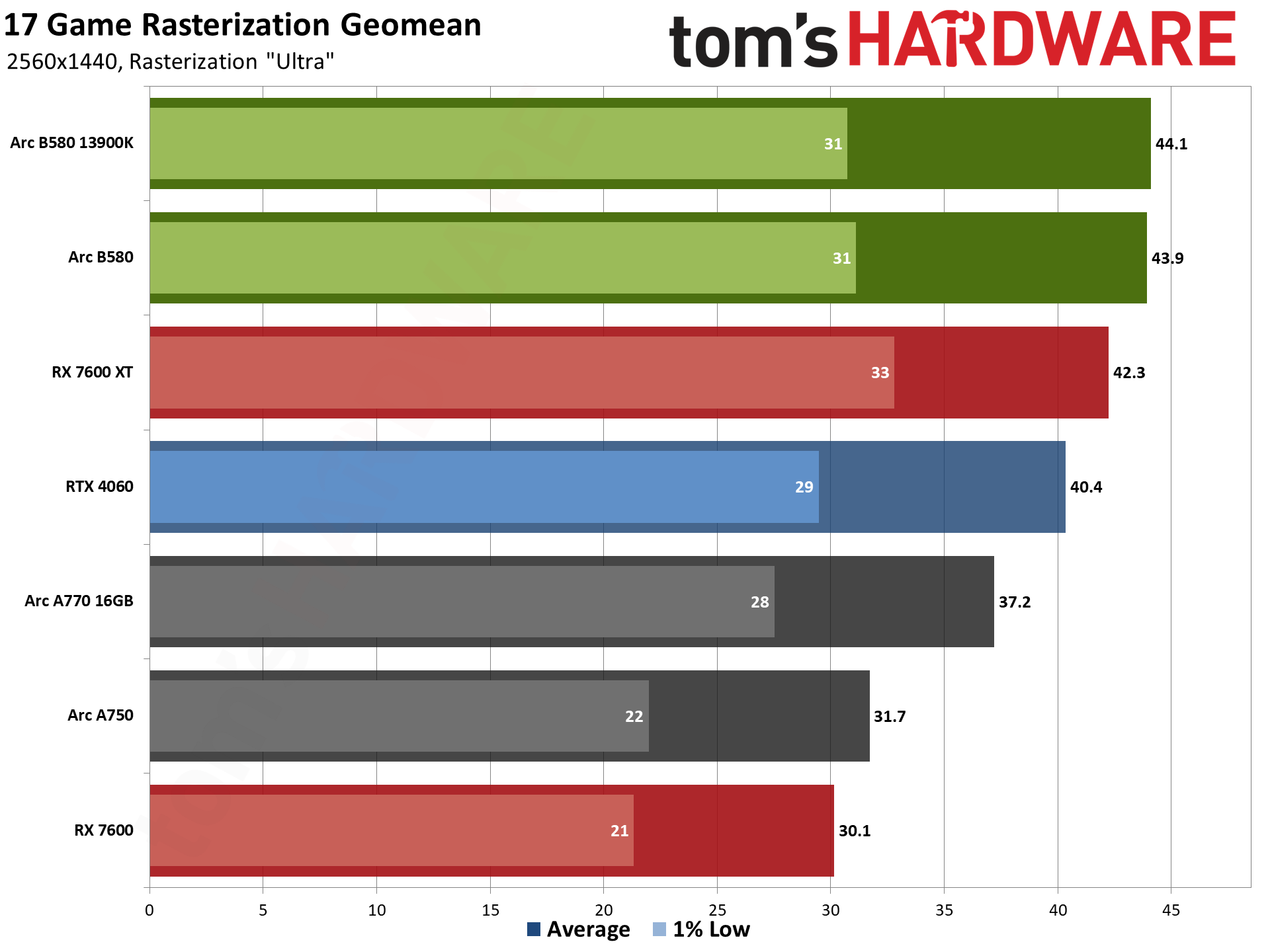

Where things get interesting is at 1440p ultra. That starts to exceed the VRAM capacity of the 8GB cards, at least in some games, and the result is that the B580 nets an 11% lead over the 4060 and a 15% lead over the 7600 XT. Since the 7600 XT has 16GB of VRAM, it's clearly not just about VRAM capacity but also about VRAM bandwidth.

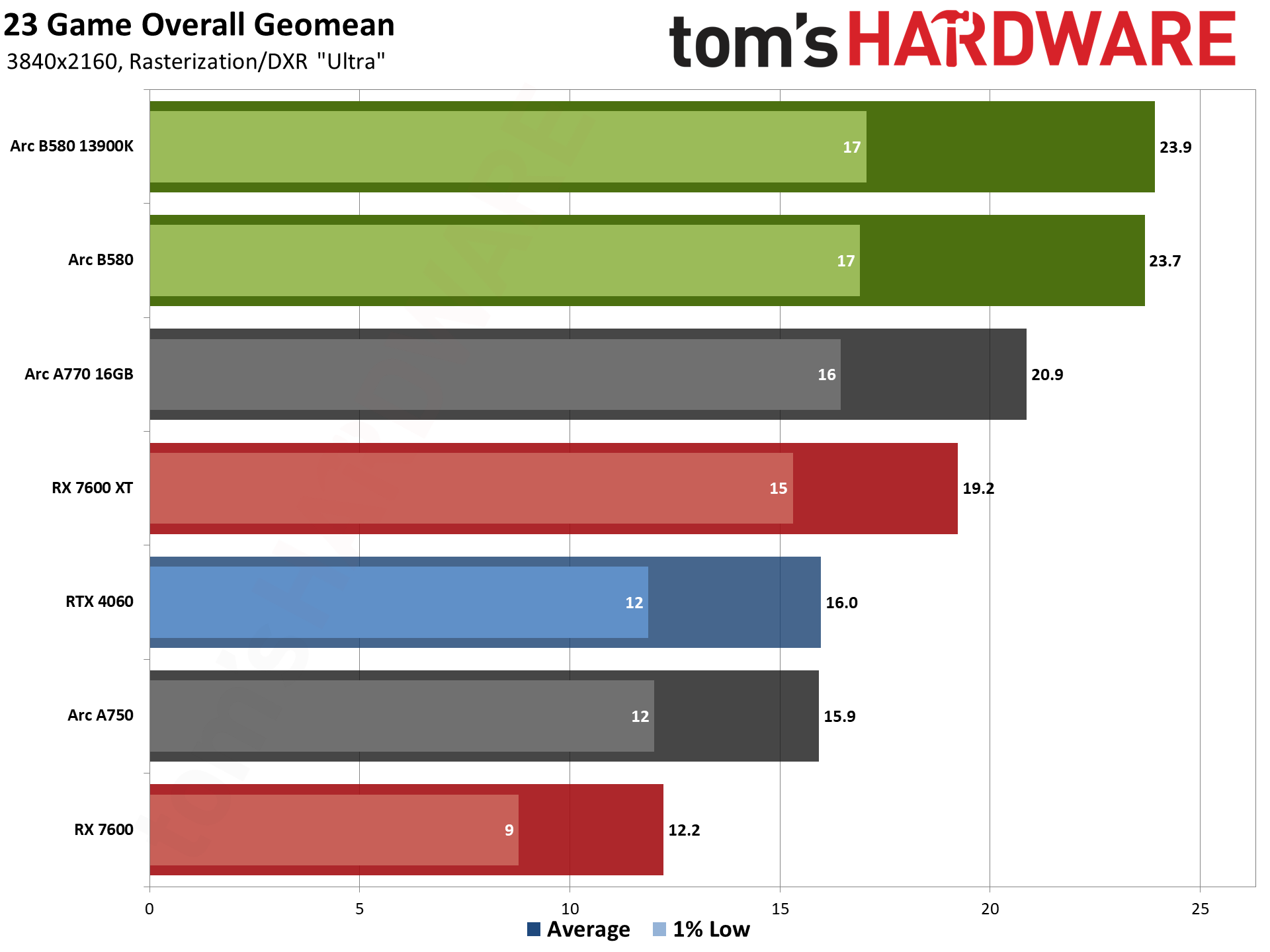

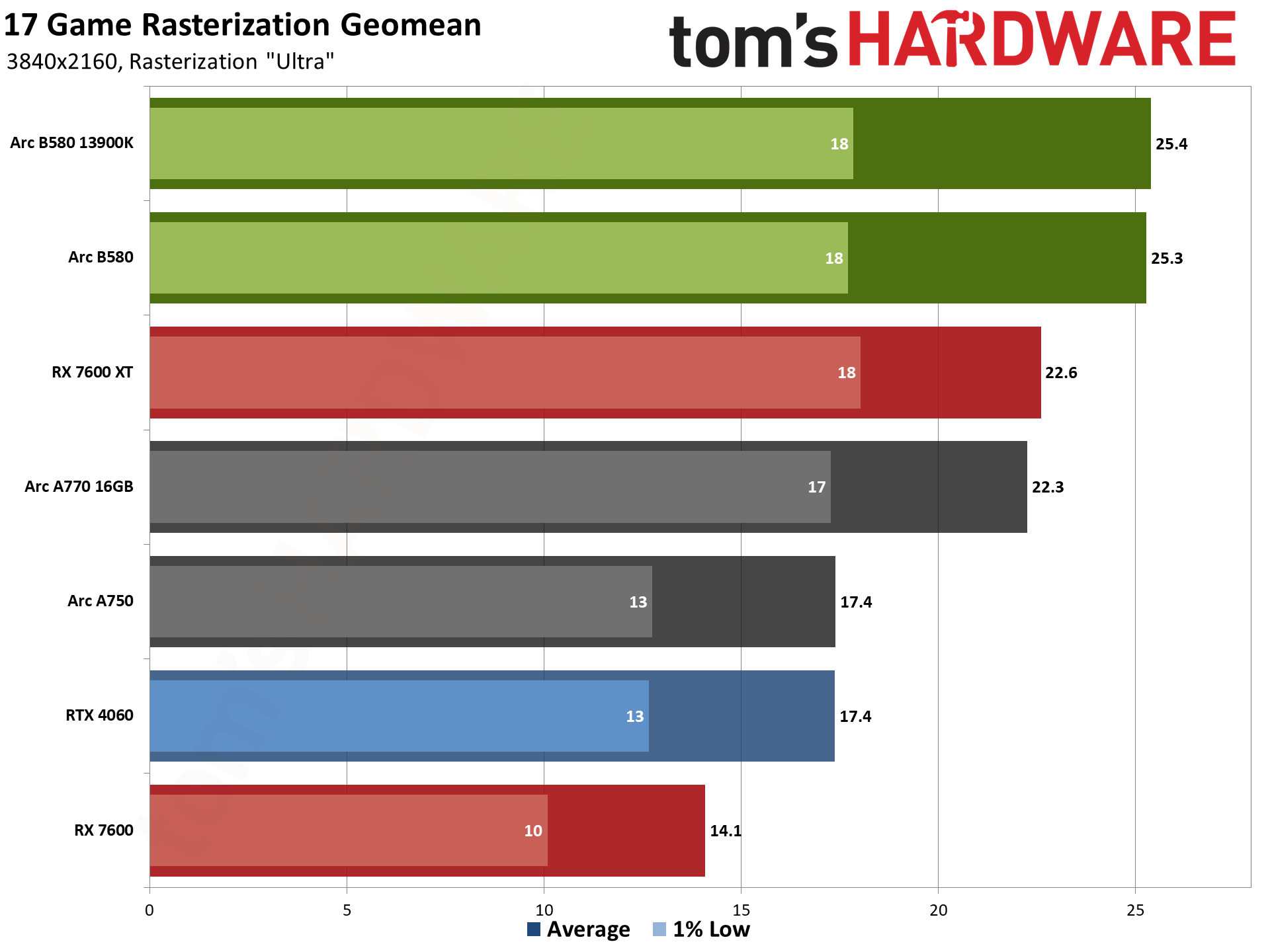

There's also 4K ultra, where the B580 takes home a 48% victory versus Nvidia's 4060, and a 23% lead over the 7600 XT, but none of the GPUs are very playable at these settings. You can look to the 1440p native results as a proxy for 4K with upscaling as well, which would be more manageable.
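To see why 1440p native works as a stand-in, consider the internal render resolution. This sketch assumes a 1.5x per-axis upscaling factor, the ratio DLSS and FSR use for their Quality modes; other upscalers and presets use other ratios, so treat it as an approximation.

```python
def render_resolution(out_w: int, out_h: int, scale: float) -> tuple[int, int]:
    """Internal render resolution for a given per-axis upscaling factor."""
    return round(out_w / scale), round(out_h / scale)


# 4K output with an assumed 1.5x per-axis factor renders internally at 1440p.
print(render_resolution(3840, 2160, 1.5))  # (2560, 1440)
```

Upscaling itself costs some GPU time, so native 1440p results are only a rough proxy for 4K with upscaling.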

But the above charts include a mix of ray tracing and rasterization benchmarks. If we eliminate ray tracing, we get a slightly different view of performance. Even six years after hardware RT arrived with the RTX 20-series, there's still a dearth of games that meaningfully benefit from the tech, and in our view a $250 GPU really doesn't need to worry so much about RT.

Without the heavier RT games, Arc B580 drops a bit in the rankings. It's slightly slower than the 7600 XT at 1080p medium and basically ties it at 1080p ultra. It's also effectively tied with the RTX 4060 at 1080p, but with worse minimum FPS — there's more microstutter, particularly in a few of the games we tested. Chalk that up to drivers.

B580 does take the top spot at 1440p ultra, though, leading the 4060 by 9% and the 7600 XT by 4%. It does so with a lower price as well, which is a big factor to consider. And then finally at 4K ultra, it's 12% ahead of the 7600 XT and 45% ahead of the 4060, but as before none of the GPUs are really doing well at 4K native. How does that compare with Intel's own advertised numbers?

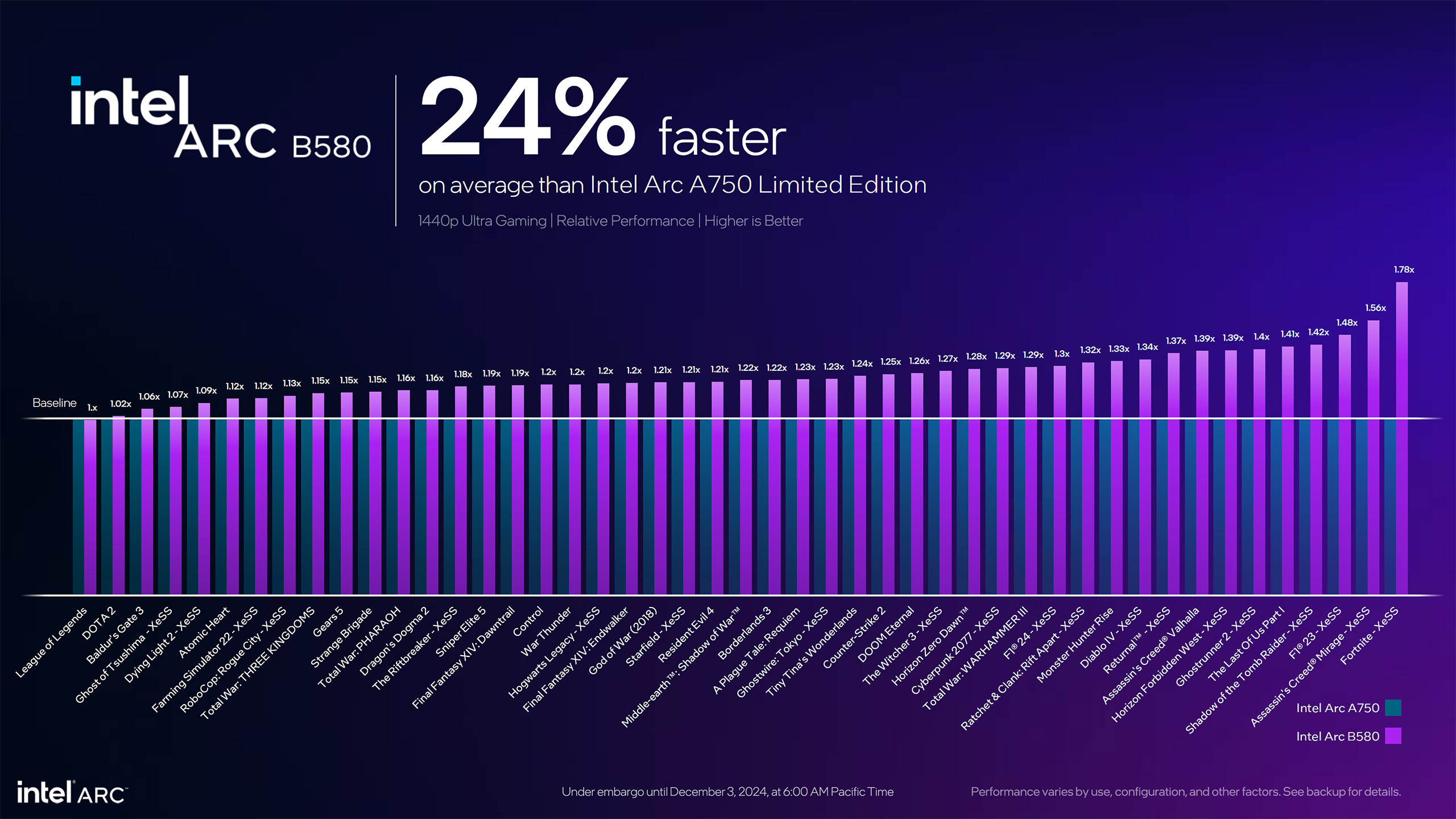

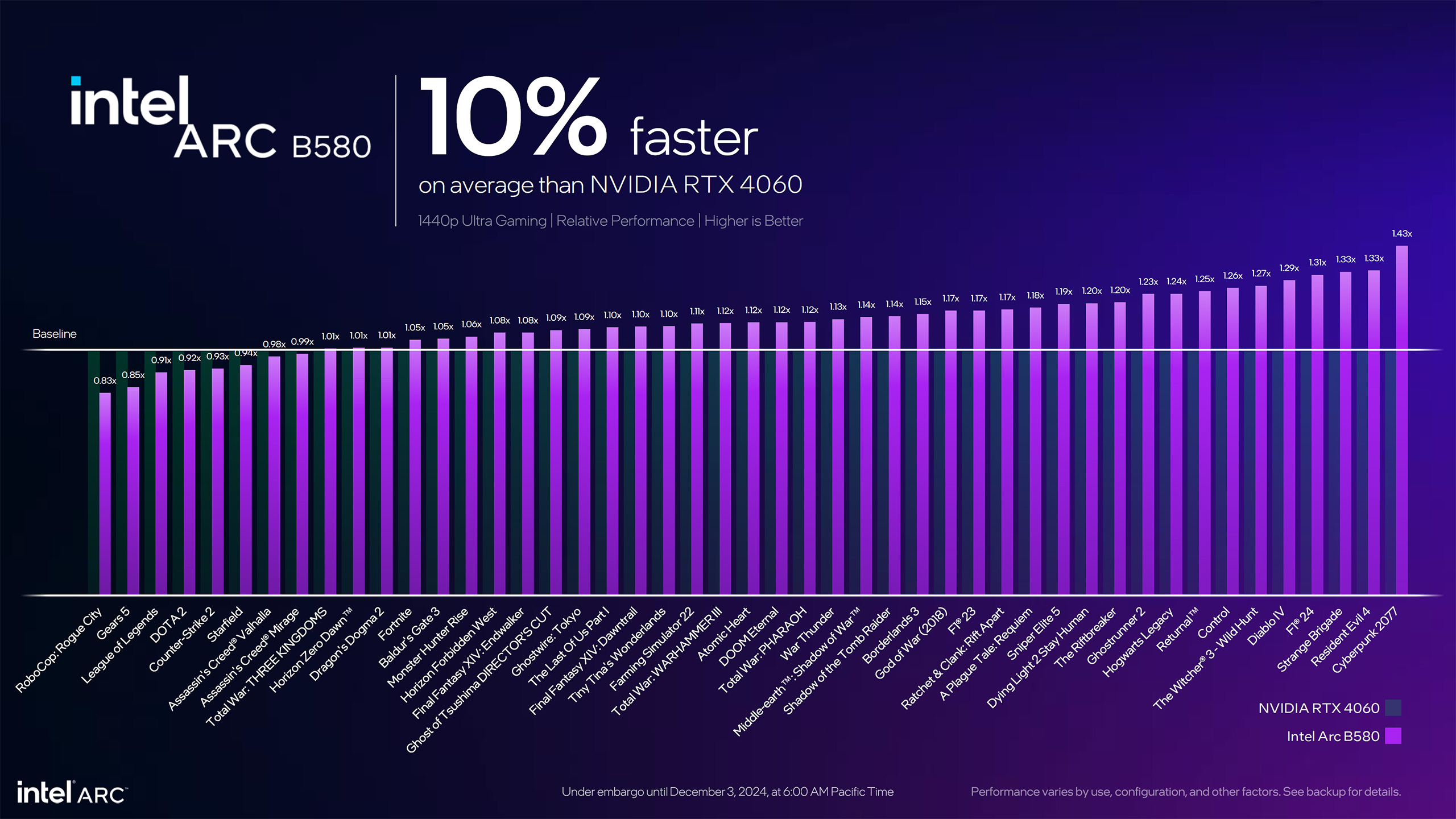

Not surprisingly, Intel opted to only show 1440p performance data — the best overall choice for making B580 look stronger than the competition without going into the realm of the ridiculous with 4K results. Intel provided two points of comparison: How it stacks up against the Arc A750, and how it compares with the Nvidia RTX 4060.

Intel showed a 24% average performance uplift across an extensive 47-game test suite when comparing Arc A750 with Arc B580. Our own similar testing (using different games) showed a 38% lead in rasterization games and a 39% lead in our full test suite, but we used games that are slanted more toward newer and more demanding releases, where the A750's 8GB of VRAM proves a serious liability.

Against the RTX 4060, Intel showed a 10% performance advantage across the same 47-game test suite. We measured an 11% difference overall, and 9% in rasterization games, so the numbers Intel provided agree with our own results — but again, that's without accounting for the less impressive 1080p results.

What's interesting is that Arc B580 with 20 Xe-cores and 14.6 teraflops of FP32 compute ends up besting the 28 Xe-core A750 with 17.2 teraflops of compute by a rather large margin, whether you want to use Intel's figures or our own results. That shows the significant gains made with the Battlemage architecture, and those gains should extend to future Arc B-series GPUs.
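Normalizing the results by theoretical compute makes the architectural gain clearer. This sketch uses only figures quoted above (14.6 vs 17.2 TFLOPS, 20 vs 28 Xe-cores, Intel's 24% uplift and the 39% from our full suite); it is back-of-the-envelope math, not a measured per-core result.

```python
def normalized_gain(perf_ratio: float, new_units: float, old_units: float) -> float:
    """Relative performance per unit (TFLOPS, Xe-core, ...) of the new GPU vs the old one."""
    return perf_ratio * old_units / new_units


B580_TFLOPS, A750_TFLOPS = 14.6, 17.2
B580_CORES, A750_CORES = 20, 28

for label, uplift in (("Intel, 47 games", 1.24), ("Our full suite", 1.39)):
    per_tflop = normalized_gain(uplift, B580_TFLOPS, A750_TFLOPS)
    per_core = normalized_gain(uplift, B580_CORES, A750_CORES)
    print(f"{label}: {per_tflop:.2f}x per TFLOPS, {per_core:.2f}x per Xe-core")
```

Intel's own 24% figure works out to roughly 1.74x per Xe-core, in the same ballpark as the 70% per-Xe-core improvement Intel claims; our heavier test suite pushes the ratio higher.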

An Arc B770 with 32 Xe-cores should be pretty compelling, based on these results. As mentioned above, we anticipate it would deliver about 50% more performance than the B580, which would put it at roughly the level of the RTX 4070 Super. Give Intel a bit more time for the "fine wine" drivers effect and it could look even better. But pricing and future competition from Nvidia's Blackwell RTX 50-series GPUs will ultimately decide where it fits into the GPU hierarchy.

Battlemage Release Dates and Pricing

Perhaps just as important as the performance is the intended pricing. With performance data in tow, the $249 launch price for the B580 looks great; the B570 at $219 seems a bit less compelling, though we'll withhold final judgement until we've actually tested the GPU. On paper, the loss of 2GB of VRAM looks to be the bigger issue with the B570, and we've seen an increasing number of games push beyond 10GB of VRAM use.

The Arc B580 at present goes up against AMD's RX 7600 8GB card, while Nvidia doesn't have any current generation parts below the 4060 — you'd have to turn to the previous generation RTX 30-series, which doesn't make much sense as a comparison point these days as inventory of the last of those cards (RTX 3060 and 3050) has been disappearing in the past few months.

The bigger concern is that the RTX 4060 launched in mid-2023, over 18 months ago. It's due for replacement in mid-2025, give or take. Likewise, AMD's RX 7600 first appeared a month before the 4060, and it's also due for replacement in the near future. Regardless of what happens with the future AMD and Nvidia GPUs, beating up on what were arguably some of the weaker offerings — in specs and performance — isn't exactly difficult.

What we don't know, yet, are the release dates and prices for potentially higher tier Arc B-series GPUs. Arc B580 has so far received a warm reception, though it's not clear if the cards are truly popular and selling fast or if there simply weren't that many produced ahead of the launch. If Intel can put out some higher performance models at similarly compelling prices, we'd be equally pleased to see them.

Battlemage Architecture

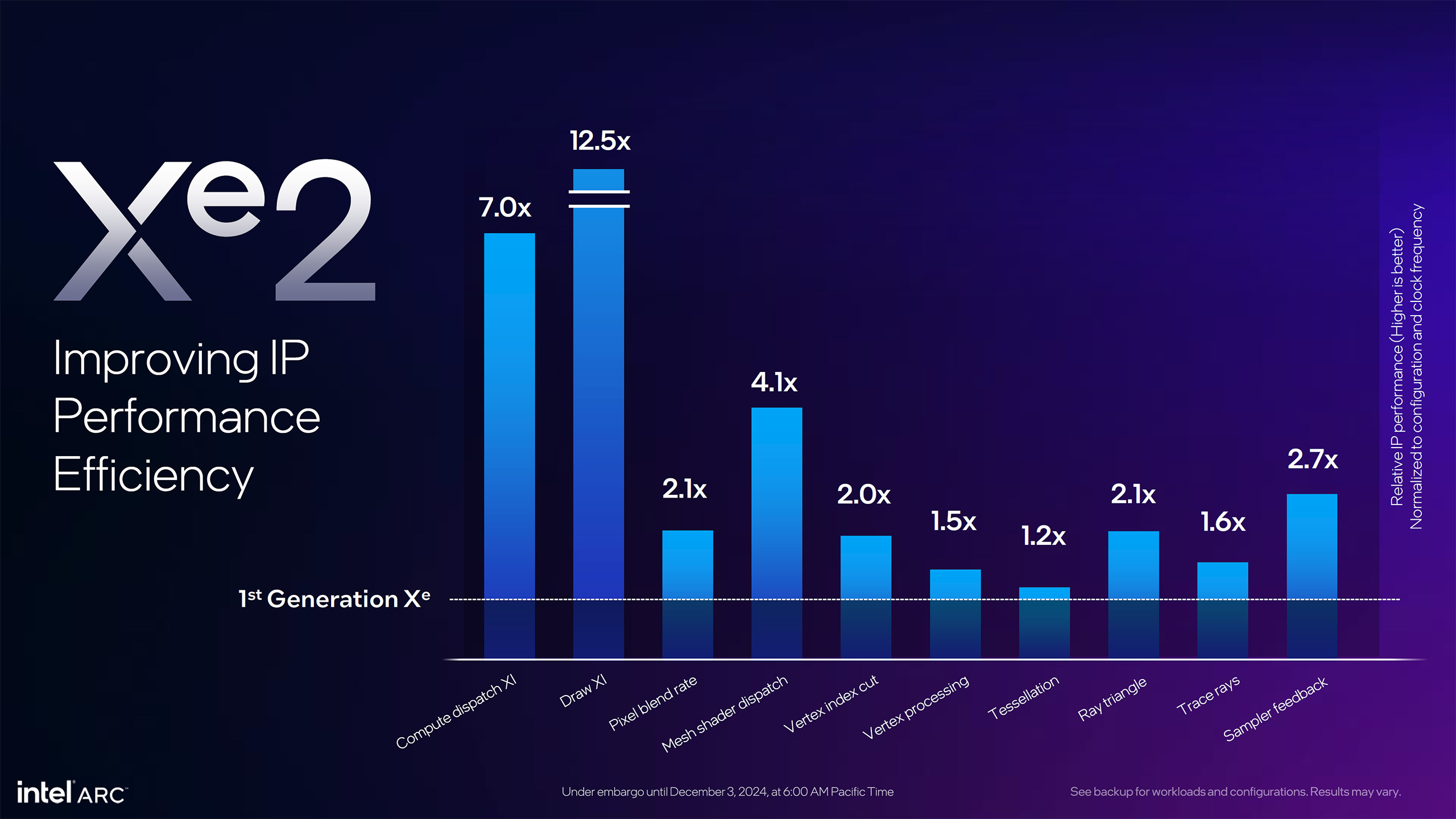

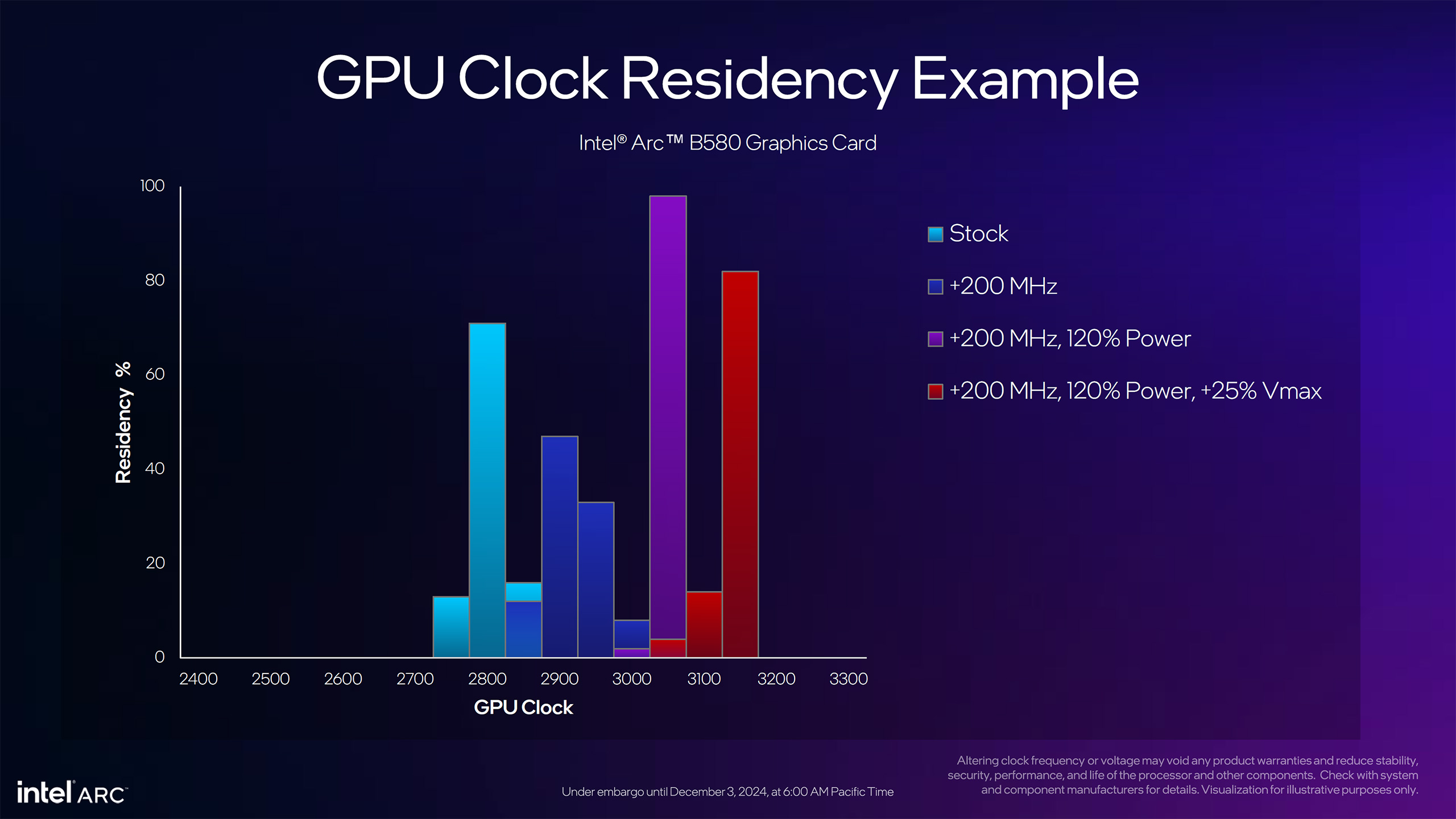

Intel Arc Alchemist was the first real attempt at scaling up the base architecture to significantly higher power and performance. And that came with a lot of growing pains, both in terms of the hardware and the software and drivers. Battlemage gets to take everything Intel learned from the prior generation, incorporating changes that can dramatically improve certain aspects of performance. Intel's graphics team set out to increase GPU core utilization, improve the distribution of workloads, and reduce software overhead.

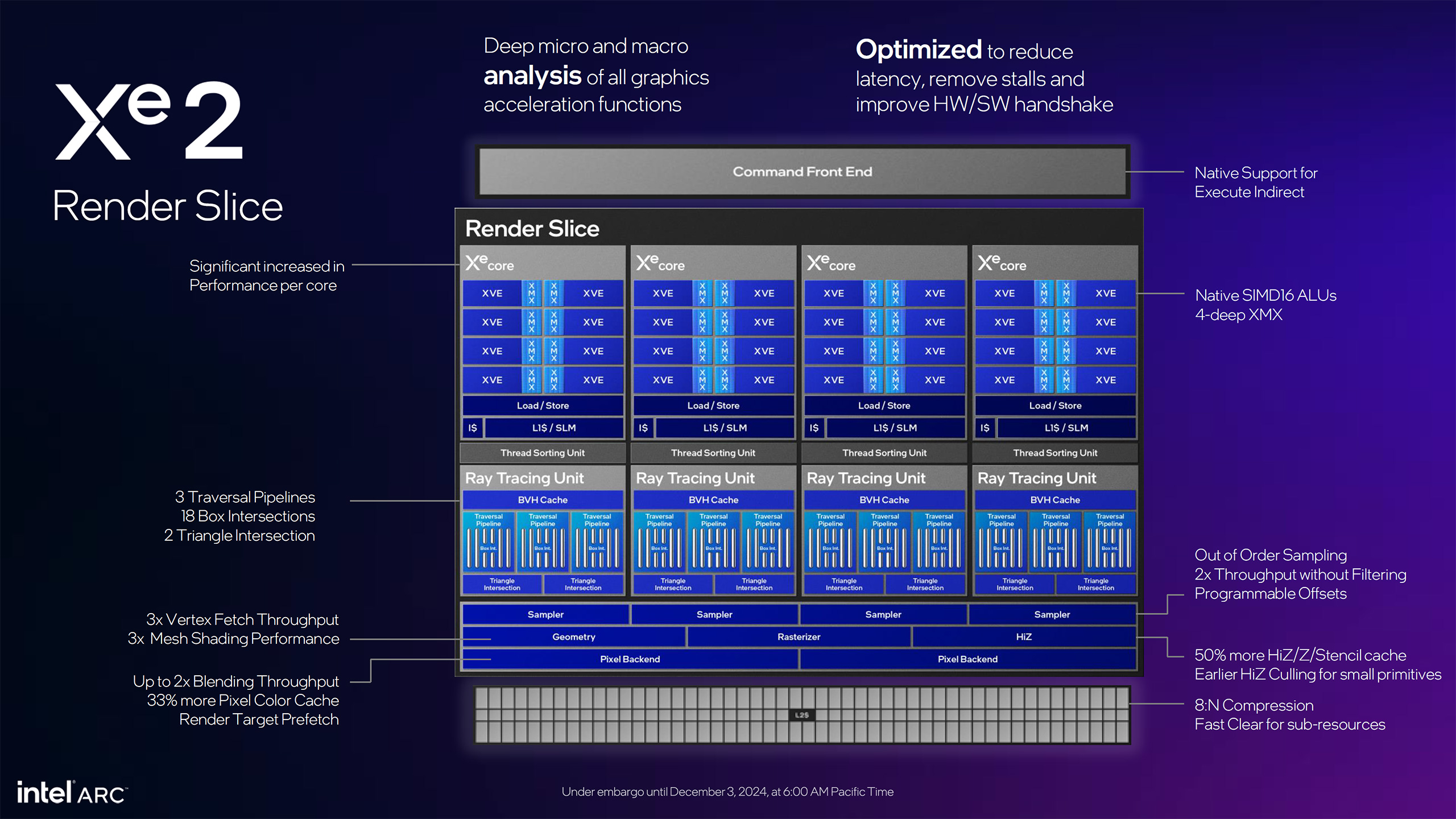

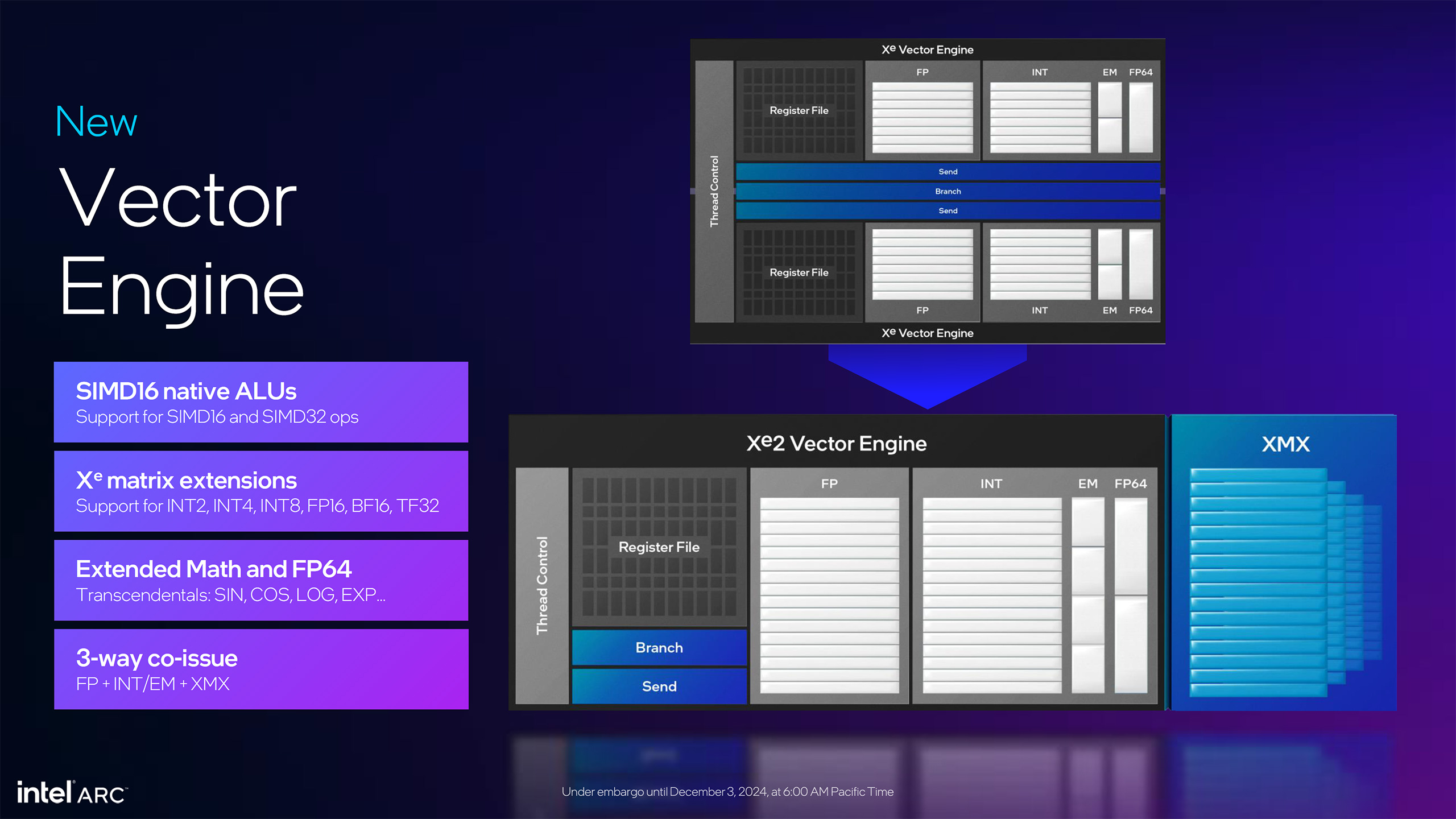

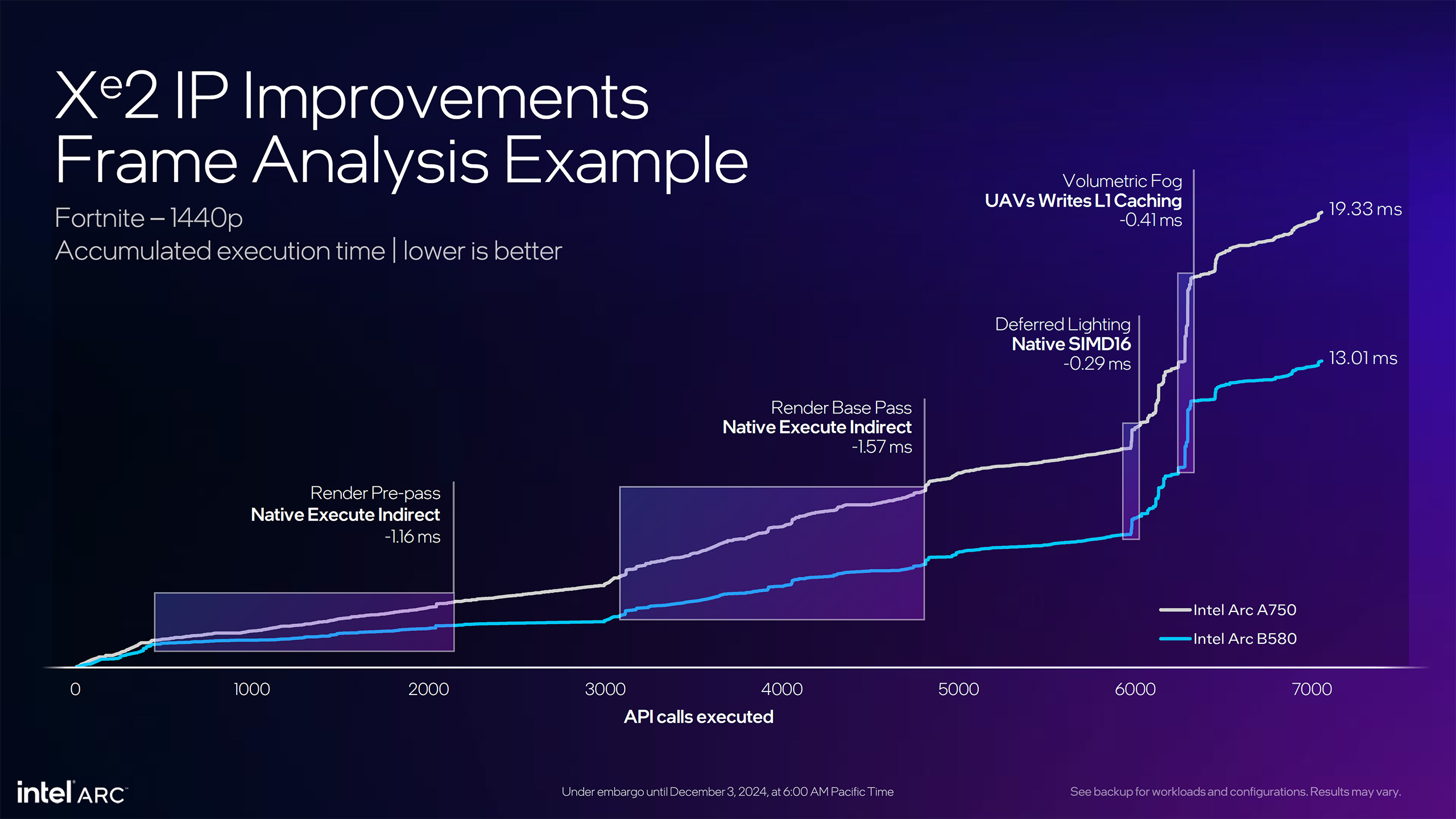

The fifth slide in the above set gives a high-level overview of all the changes. Intel added native support for Execute Indirect, significantly improving performance for certain tasks. One of the biggest changes is a switch from native SIMD32 execution units to SIMD16 units — SIMD stands for "single instruction multiple data," with the number being the concurrent pieces of data that are operated on. With SIMD32 units, Alchemist had to work on chunks of 32 values (typically from pixels), while SIMD16 only needs 16 values. Intel says this improves GPU utilization, as it's easier to fill 16 execution slots than 32. The net result is that Battlemage should deliver much better GPU utilization and thus better performance per theoretical TFLOPS than Alchemist.

Vertex and mesh shading performance per render slice is three times higher compared to Alchemist, and there are other improvements in the Z/stencil cache, earlier culling of primitives, and texture sampling.

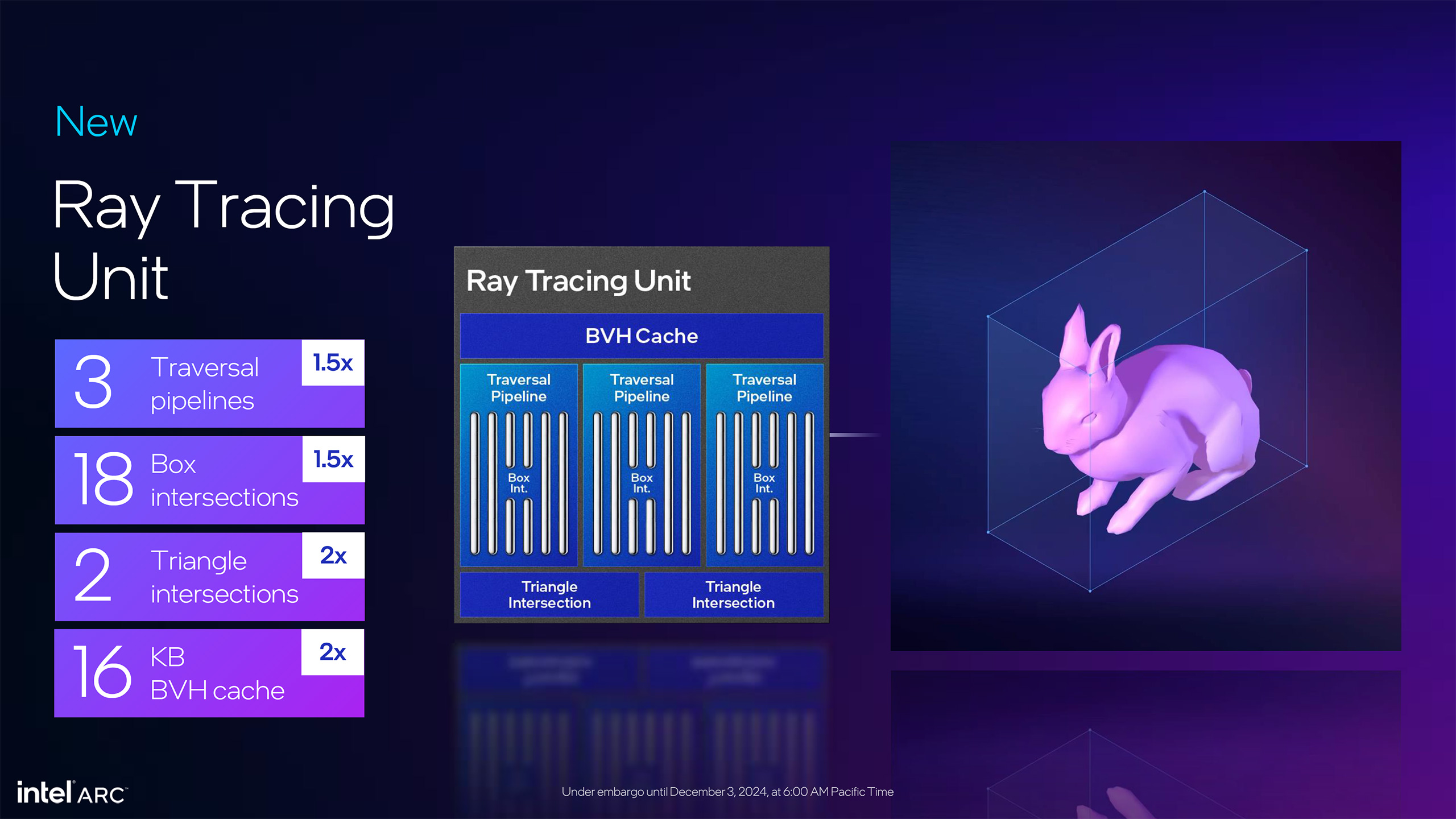

The ray tracing units also see some major upgrades, with each now having three traversal pipelines, the ability to compute 18 box intersections per cycle, and two triangle intersections. By way of reference, Alchemist had two BVH traversal pipelines and could do 12 box intersections and one triangle intersection per cycle. That means the ray tracing performance of each Battlemage RT unit is 50% higher on box intersections, with twice as many ray triangle intersections. There's also a 16KB dedicated BVH cache in Battlemage, twice the size of the BVH cache in Alchemist.
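Per-unit gains multiply with unit count, which matters because the B580 has fewer RT units than the A750. This sketch uses Intel's per-unit rates and assumes one RT unit per Xe-core on both architectures (20 on the B580, 28 on the A750). It ignores clock speed and cache behavior, so it compares peak work per clock only.

```python
def rt_tests_per_clock(rt_units: int, box_per_unit: int, tri_per_unit: int) -> tuple[int, int]:
    """Chip-wide peak box and triangle intersection tests per clock."""
    return rt_units * box_per_unit, rt_units * tri_per_unit


# Per-unit rates from Intel's figures; unit counts assume one RT unit per Xe-core.
b580 = rt_tests_per_clock(rt_units=20, box_per_unit=18, tri_per_unit=2)
a750 = rt_tests_per_clock(rt_units=28, box_per_unit=12, tri_per_unit=1)
print(f"B580: {b580[0]} box / {b580[1]} triangle tests per clock")
print(f"A750: {a750[0]} box / {a750[1]} triangle tests per clock")
```

Even with eight fewer RT units, the B580 comes out slightly ahead on peak box tests per clock and well ahead on triangle tests, before accounting for its higher clocks and larger BVH cache.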

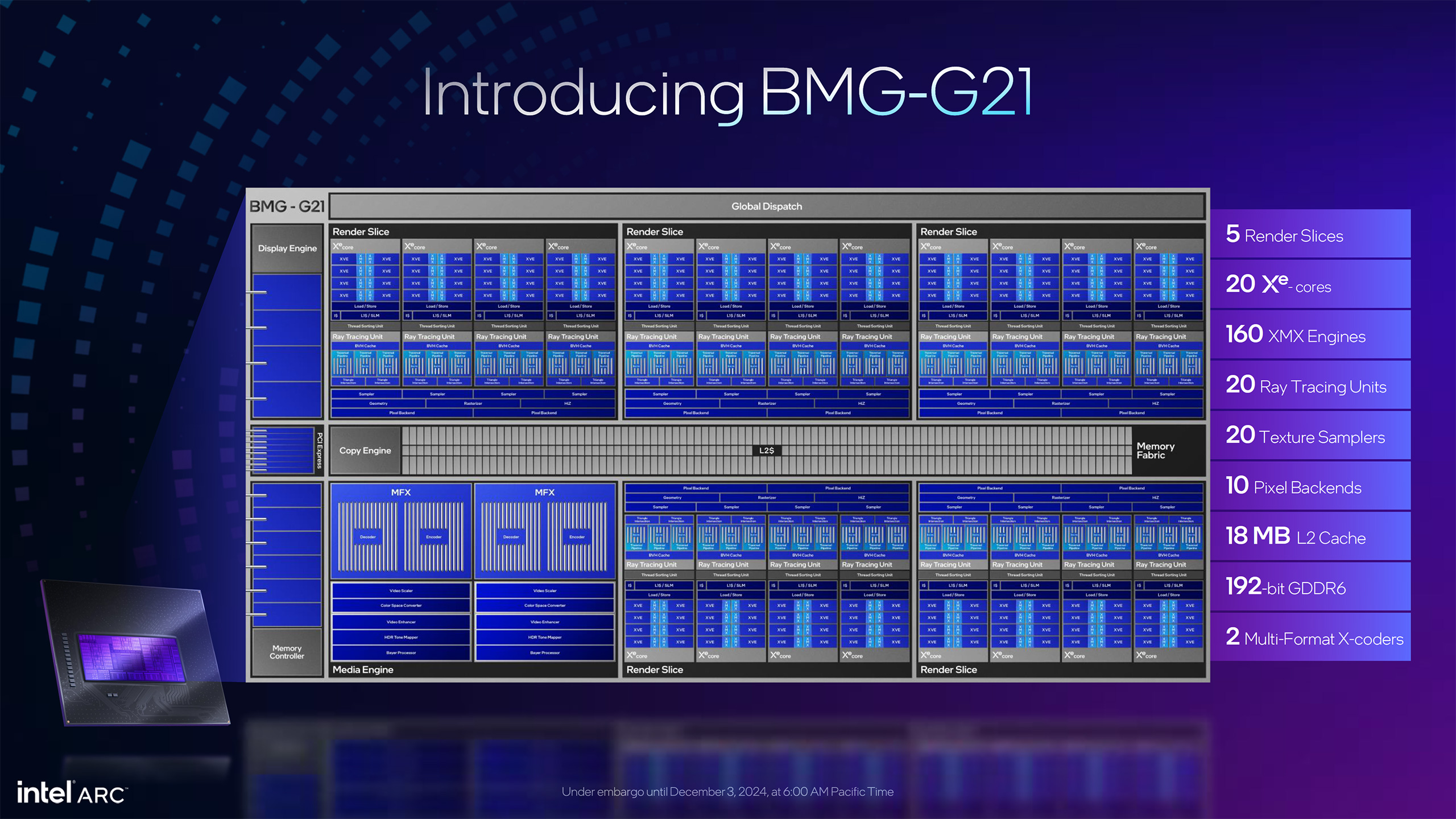

Battlemage has updates to the caching hierarchy for the memory subsystem as well. Each Xe-core comes with a shared 256KB L1/SLM cache, 33% larger than Alchemist's 192KB shared L1/SLM. The L2 cache gets a bump as well, though how much of a bump varies by the chosen comparison point. BMG-G21 has up to 18MB of L2 cache, while ACM-G10 had up to 16MB of L2 cache. However, the A580 cut that down to 8MB, and presumably, any future GPU — like BMG-G10 (or is it BMG-31?) for B770/B750 — would increase the amount of L2 cache. What that means in terms of effective memory bandwidth remains to be seen.

In terms of the memory subsystem, the B580 uses a 192-bit interface with 12GB of GDDR6 memory, while the B570 cuts that down to a 160-bit interface with 10GB of GDDR6 memory. In either case, the memory runs at a 19 Gbps effective data rate, a modest improvement over Alchemist's maximum 17.5 Gbps. That works out to 456 GB/s on the B580 and 380 GB/s on the B570, a slight reduction in total bandwidth relative to the A580 and A750 (both 512 GB/s) and the A770 (up to 560 GB/s). The good news is that these new budget/mainstream GPUs both have more than 8GB of VRAM, which has become a limiting factor in quite a few newer games.
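GDDR6 bandwidth and capacity both follow from the bus width. This sketch assumes one 32-bit GDDR6 device per channel (no clamshell mode) and 2GB devices unless stated otherwise; the 256-bit and 224-bit rows are the hypothetical B770/B750 configurations discussed earlier, not announced products.

```python
GDDR6_DEVICE_BITS = 32  # each GDDR6 package has a 32-bit interface


def memory_config(bus_width_bits: int, data_rate_gbps: float, device_gb: int = 2) -> tuple[int, float]:
    """Return (capacity in GB, peak bandwidth in GB/s), assuming one device per 32-bit channel."""
    devices = bus_width_bits // GDDR6_DEVICE_BITS
    capacity_gb = devices * device_gb
    bandwidth_gbs = bus_width_bits / 8 * data_rate_gbps  # bus width in bytes x per-pin rate
    return capacity_gb, bandwidth_gbs


configs = {
    "B580": (192, 19.0, 2),
    "B570": (160, 19.0, 2),
    "A750": (256, 16.0, 1),  # 8GB card, so 1GB devices
    "A770 16GB": (256, 17.5, 2),
    "Hypothetical 256-bit BMG": (256, 19.0, 2),
    "Hypothetical 224-bit BMG": (224, 19.0, 2),
}
for name, (bus, rate, device_gb) in configs.items():
    cap, bw = memory_config(bus, rate, device_gb)
    print(f"{name}: {cap}GB, {bw:.0f} GB/s")
```

Disabling one 32-bit channel removes one memory device, which is how a 192-bit 12GB design becomes a 160-bit 10GB one, and how a 256-bit 16GB part could become a 224-bit 14GB one.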

Most supported number formats remain the same as Alchemist, with INT8, INT4, FP16, and BF16 support. New to Battlemage are native INT2 and TF32 support. INT2 can double the throughput again (relative to INT4) for very small integers, while TF32 (TensorFloat-32) offers a middle ground between FP16, BF16, and full FP32. It's a 19-bit format with a sign bit, an 8-bit exponent, and a 10-bit mantissa (the fractional portion of the number). The net result is that it has the same dynamic range as FP32 (and BF16) with FP16-level precision, and it runs on the XMX cores (which don't support FP32) at half the rate of BF16/FP16. TF32 has proven effective for certain AI workloads.
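To illustrate the trade-off, here's a minimal sketch that emulates TF32 by zeroing the low 13 mantissa bits of an FP32 value. It uses truncation for simplicity, which is an assumption; hardware may round to nearest instead. It shows TF32 keeping FP32's range where FP16 overflows past 65504.

```python
import struct

FP32_MANTISSA_BITS = 23
TF32_MANTISSA_BITS = 10
# Keep the sign, all 8 exponent bits, and the top 10 mantissa bits; clear the low 13 bits.
TF32_MASK = (0xFFFFFFFF << (FP32_MANTISSA_BITS - TF32_MANTISSA_BITS)) & 0xFFFFFFFF


def to_tf32(x: float) -> float:
    """Emulate TF32 precision by truncating an FP32 value's mantissa to 10 bits."""
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    (truncated,) = struct.unpack("<f", struct.pack("<I", bits & TF32_MASK))
    return truncated


def fits_fp16(x: float) -> bool:
    """True if x is within FP16's finite range (max 65504)."""
    try:
        struct.pack("<e", x)
        return True
    except OverflowError:
        return False


print(to_tf32(1 + 2**-11))  # 1.0: the 11th fraction bit is dropped
print(to_tf32(1 + 2**-10))  # 1.0009765625: the 10th fraction bit survives
print(fits_fp16(1e5), to_tf32(1e5))  # False 99968.0: FP16 overflows, TF32 keeps the magnitude
```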

Battlemage now supports 3-way instruction co-issue, so it can independently issue one floating-point, one integer/extended math, and one XMX instruction each cycle. Alchemist also supported instruction co-issue and appeared to have the same 3-way capability, but Intel says Battlemage is more robust in this area.

The full BMG-G21 design has five render slices, each with four Xe-cores. That gives 160 vector and XMX engines and 20 ray tracing units and texture samplers. It also has 10 pixel backends, each capable of handling eight render outputs. Rumors are that a larger BMG-G10 could scale up the number of render slices and the memory interface. Will it top out at eight render slices and 32 Xe-cores like Alchemist? That seems likely, though there's no official word on other Battlemage GPUs at present.
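The unit counts above follow from multiplying out the hierarchy. This sketch derives the per-slice and per-core ratios from the BMG-G21 description (four Xe-cores and two pixel backends per slice, eight vector engines and one RT unit per Xe-core); the eight-slice layout is a hypothetical extrapolation that assumes the same ratios, not an announced chip. The `BattlemageLayout` name is our own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BattlemageLayout:
    render_slices: int
    xe_cores_per_slice: int = 4
    vector_engines_per_core: int = 8   # 160 engines / 20 Xe-cores on BMG-G21
    pixel_backends_per_slice: int = 2  # 10 backends / 5 slices
    rops_per_backend: int = 8

    @property
    def xe_cores(self) -> int:
        return self.render_slices * self.xe_cores_per_slice

    @property
    def vector_engines(self) -> int:
        return self.xe_cores * self.vector_engines_per_core

    @property
    def rt_units(self) -> int:
        return self.xe_cores  # one RT unit (and texture sampler) per Xe-core

    @property
    def rops(self) -> int:
        return self.render_slices * self.pixel_backends_per_slice * self.rops_per_backend


for label, layout in (("BMG-G21", BattlemageLayout(5)), ("8-slice (hypothetical)", BattlemageLayout(8))):
    print(label, layout.xe_cores, layout.vector_engines, layout.rt_units, layout.rops)
```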

Battlemage is also more power efficient: total graphics power is 190W on the B580 compared to 225W on the A750. That means Battlemage delivers higher performance (15 to 35 percent more, depending on the game and settings used) while using less power. In our own testing, the B580 came in far below its rated 190W TBP as well, averaging just 162W at 4K, 155W at 1440p, 146W at 1080p ultra, and 141W at 1080p medium. Combined with the performance improvements, at 1440p the B580 delivers 60% more performance per watt than the A770, and 70% more than the A750. That matches up nicely with Intel's claimed 70% improvement in performance per Xe-core, based on the architectural upgrades.
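Performance per watt is the performance ratio scaled by the inverse power ratio. This sketch plugs in Intel's 24% uplift and the rated 190W and 225W board power figures; because the B580's measured power sits well below its rating, this rated-power estimate comes out lower than the 70% we measured against the A750.

```python
def perf_per_watt_gain(perf_ratio: float, new_watts: float, old_watts: float) -> float:
    """Relative efficiency: (new perf / new power) / (old perf / old power)."""
    return perf_ratio * old_watts / new_watts


# Intel's 24% uplift over the A750, using rated total board power for both cards.
gain = perf_per_watt_gain(1.24, new_watts=190, old_watts=225)
print(f"{(gain - 1) * 100:.0f}% better performance per watt")  # 47% better performance per watt
```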

Part of the power efficiency improvement comes from the move to TSMC's N5 node versus the N6 node used on Alchemist. N5 offers substantial density and power benefits, and that's reflected in the die size as well. The ACM-G10 GPU used in the A770 has 21.7 billion transistors in a 406 mm^2 die, while BMG-G21 has 19.6 billion transistors in a 272 mm^2 die. That's an overall density of 72.1 MT/mm^2 for Battlemage compared to 53.4 MT/mm^2 for Alchemist.
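The density figures are transistor count divided by die area, as this short sketch shows; it also makes the roughly 35% density gain explicit.

```python
def density_mt_per_mm2(transistors_billion: float, die_mm2: float) -> float:
    """Transistor density in millions of transistors per mm^2."""
    return transistors_billion * 1000 / die_mm2


acm_g10 = density_mt_per_mm2(21.7, 406)
bmg_g21 = density_mt_per_mm2(19.6, 272)
print(f"ACM-G10: {acm_g10:.1f} MT/mm^2, BMG-G21: {bmg_g21:.1f} MT/mm^2 "
      f"({bmg_g21 / acm_g10 - 1:.0%} denser)")
```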

And finally, Intel uses a PCIe 4.0 x8 interface on the B580 and B570. Given their budget-mainstream nature, that's probably not going to be a major issue, and AMD and Nvidia have both opted for a narrower x8 interface on lower-tier parts. Presumably, Intel determined there was no need for a wider x16 interface and, likewise, that there wasn't enough benefit to moving to PCIe 5.0, which tends to require shorter trace lengths and more power.
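For a sense of scale, here's the per-direction link bandwidth math. It uses the PCIe transfer rates of 16 and 32 GT/s per lane for 4.0 and 5.0 with 128b/130b encoding, and ignores packet and protocol overhead, so real throughput is somewhat lower.

```python
GT_PER_LANE = {"4.0": 16, "5.0": 32}  # gigatransfers per second per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line coding


def pcie_gbytes_per_s(gen: str, lanes: int) -> float:
    """Approximate one-direction PCIe bandwidth in GB/s, before protocol overhead."""
    return GT_PER_LANE[gen] * ENCODING_EFFICIENCY * lanes / 8


for gen, lanes in (("4.0", 8), ("4.0", 16), ("5.0", 8)):
    print(f"PCIe {gen} x{lanes}: {pcie_gbytes_per_s(gen, lanes):.1f} GB/s")
```

A PCIe 5.0 x8 link would match a PCIe 4.0 x16 link at roughly 31.5 GB/s per direction, twice what the B580's PCIe 4.0 x8 connection provides.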

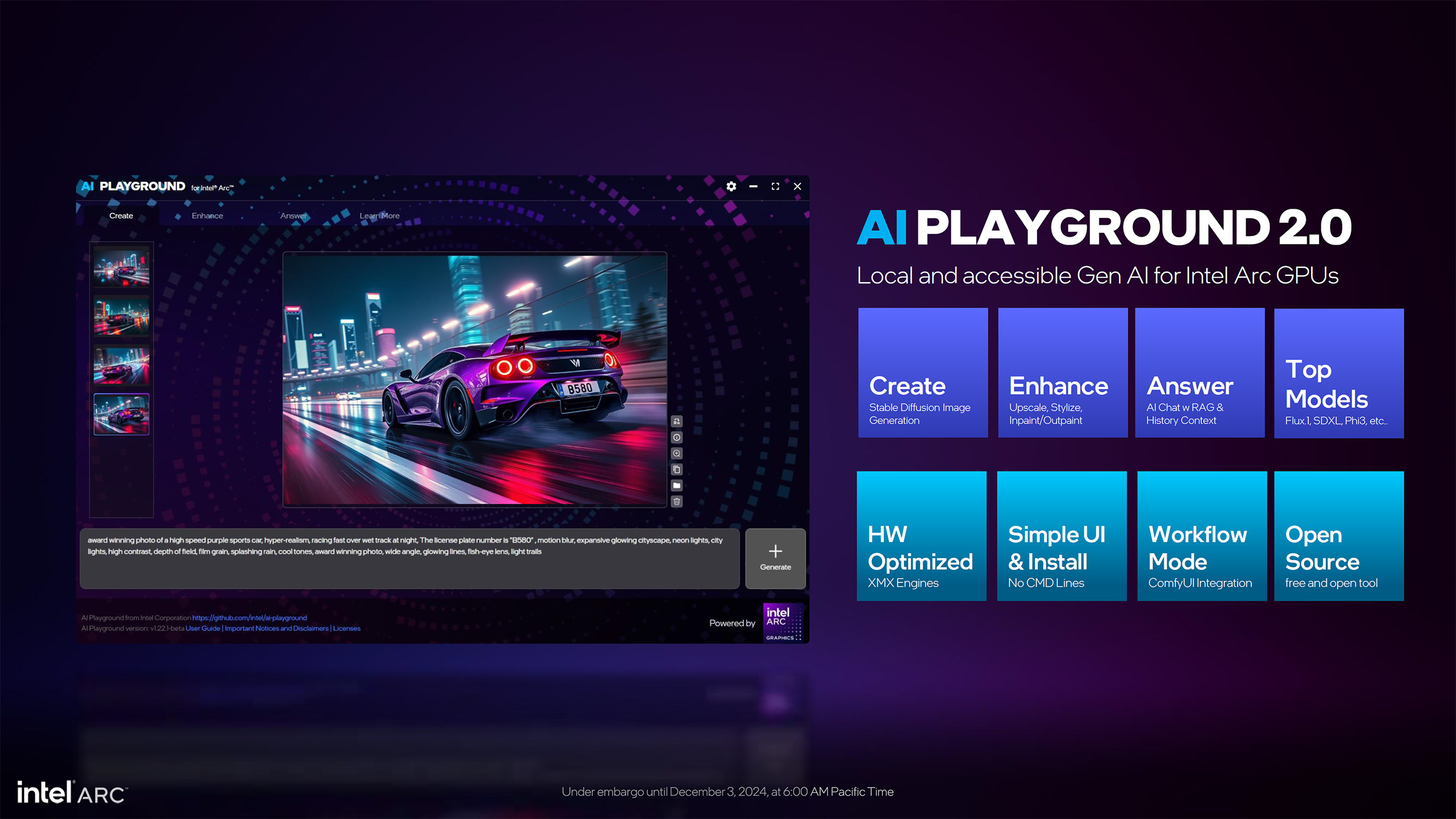

XeSS 2, with frame generation and latency reduction

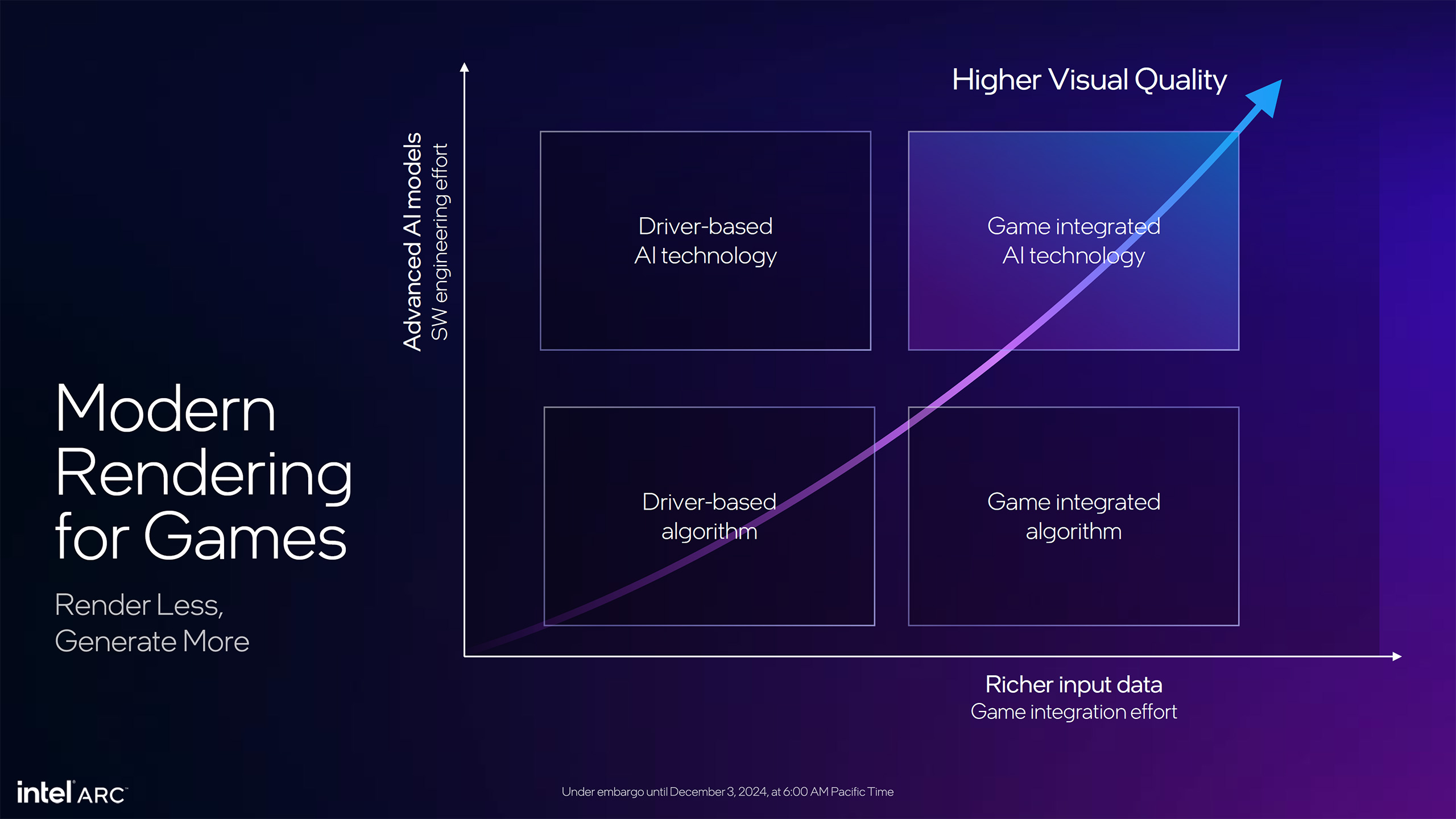

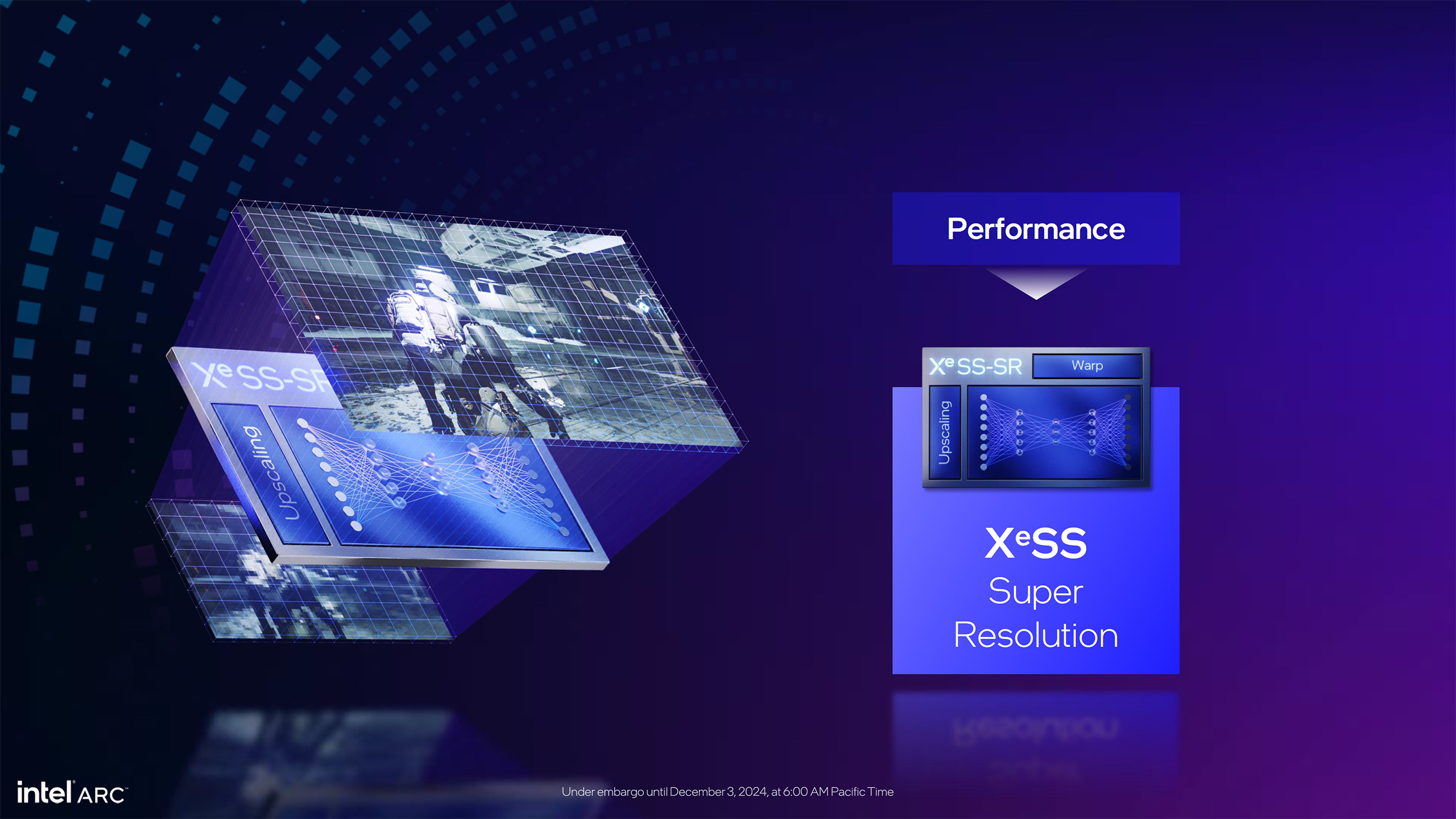

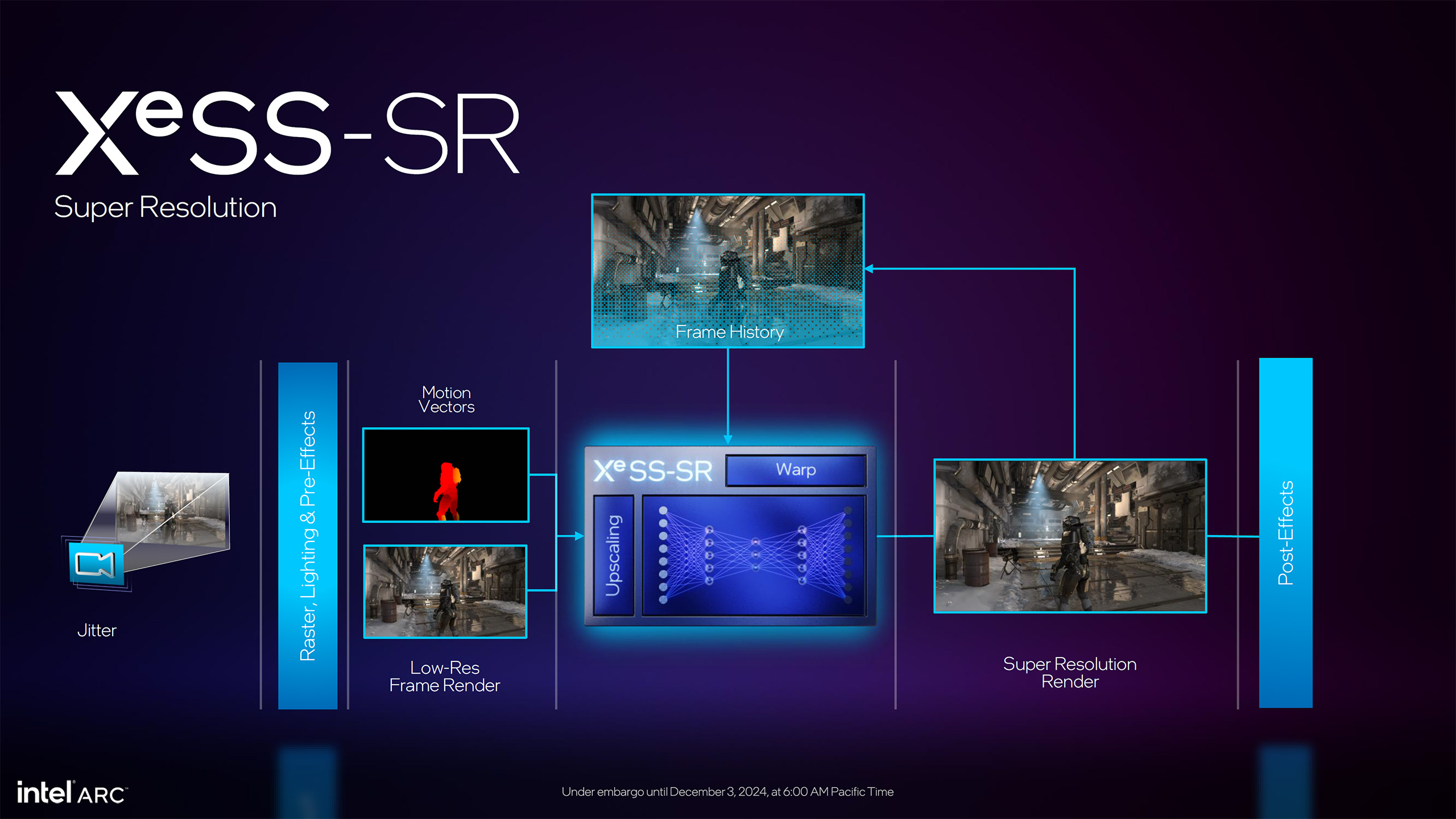

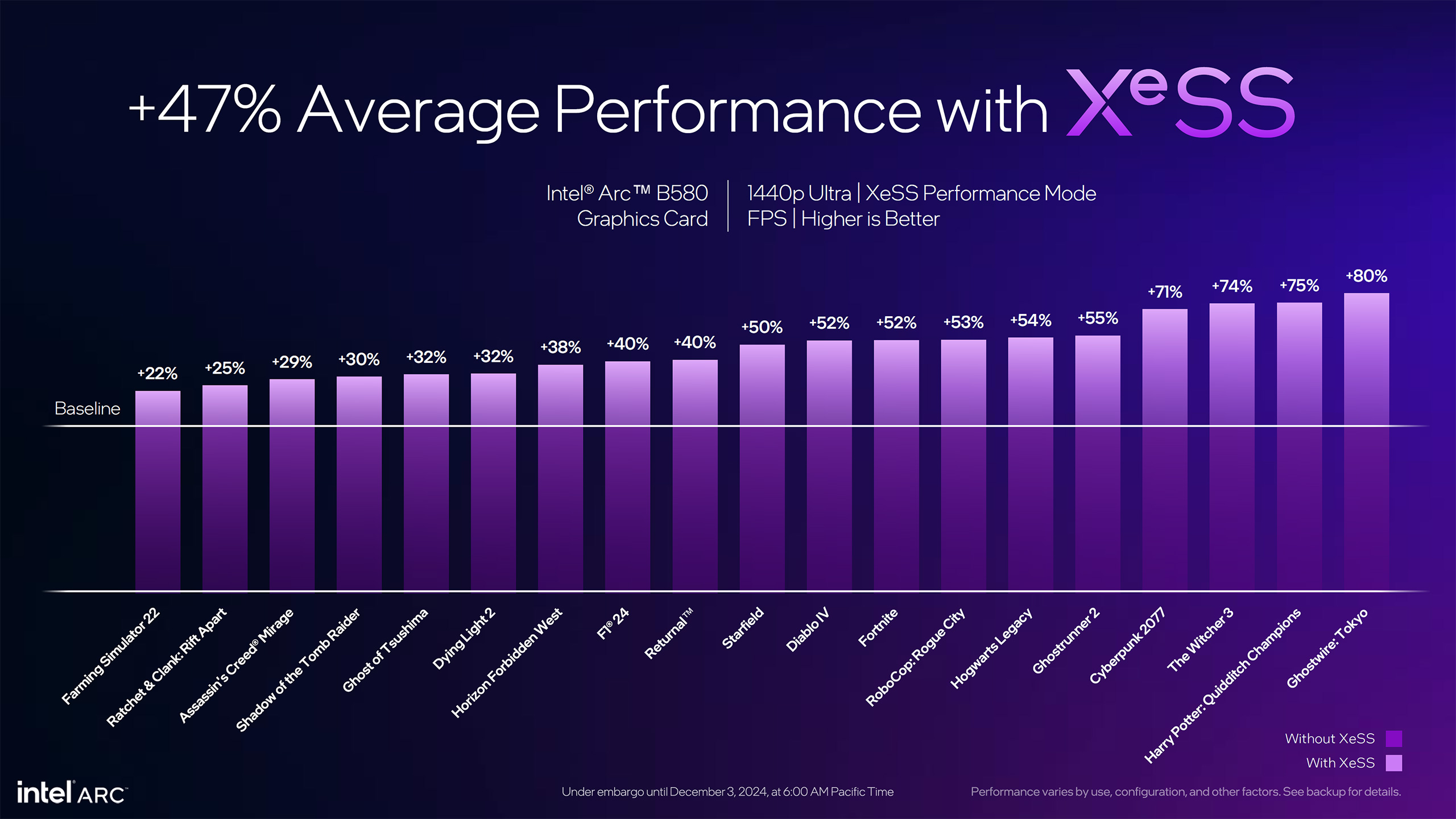

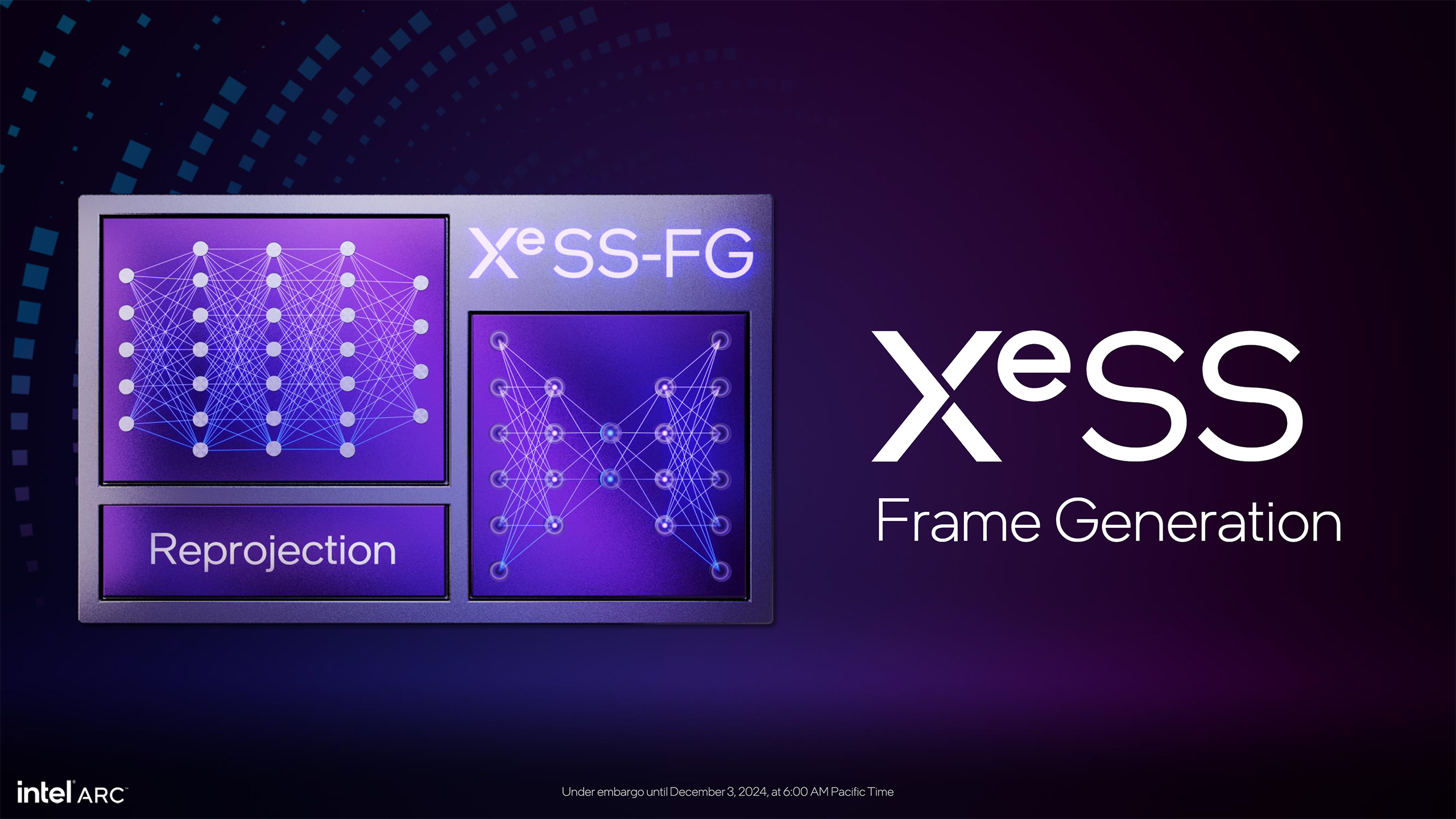

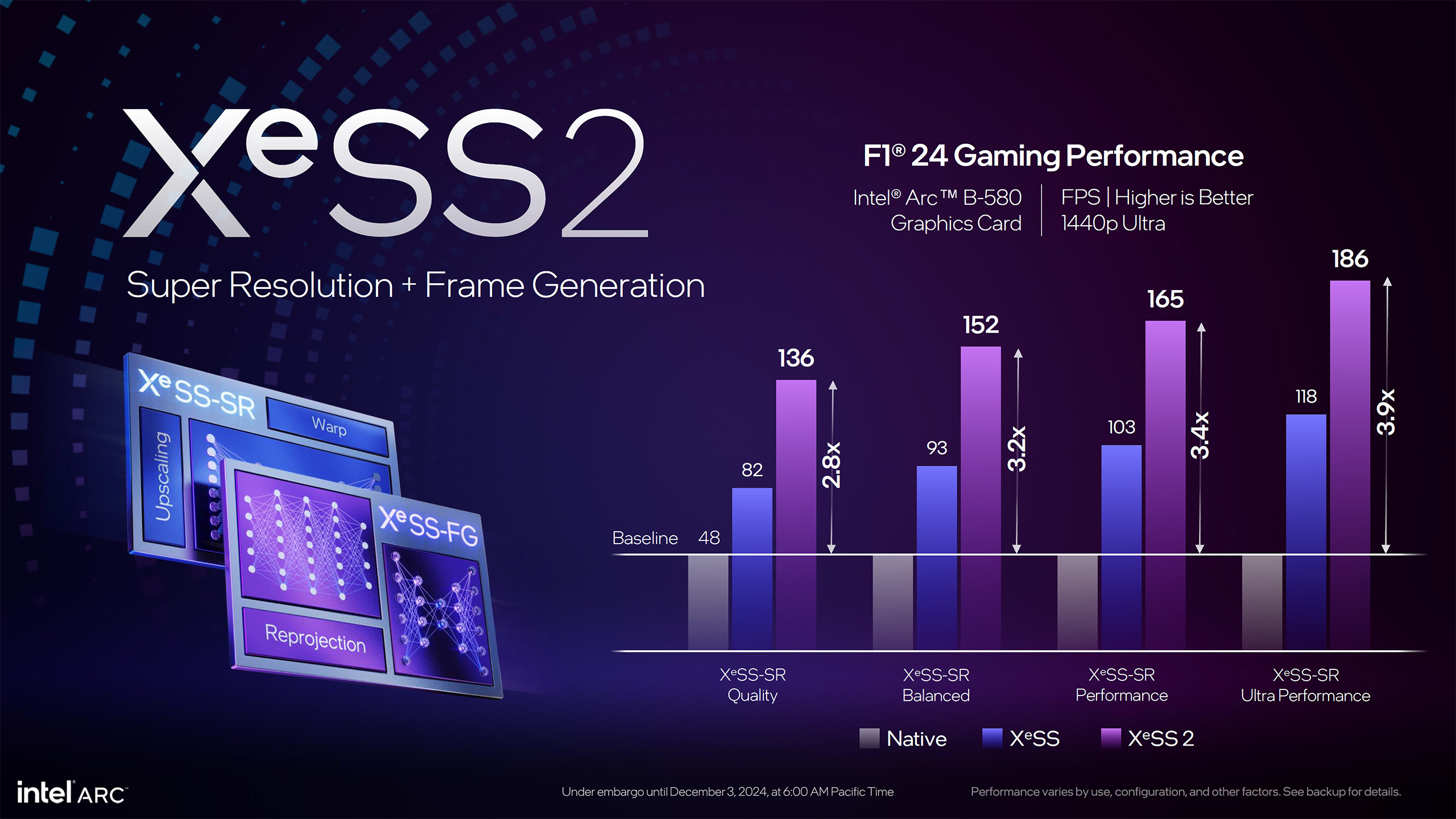

Besides the core hardware, Intel has also worked to improve its XeSS upscaling technology. It's not too surprising that Intel will now add frame generation and low-latency technology to XeSS. It puts all of these under the XeSS 2 brand, with XeSS-FG, XeSS-LL, and XeSS-SR sub-brands (for Frame Generation, Low Latency, and Super Resolution, respectively).

XeSS continues to follow a similar path to Nvidia's DLSS, with some notable differences. First, XeSS-SR supports non-Intel GPUs via DP4a instructions (basically optimized INT8 shaders). However, XeSS functions differently in DP4a mode than in XMX mode, with XMX requiring an Arc GPU — basically Alchemist, Lunar Lake, or Battlemage.

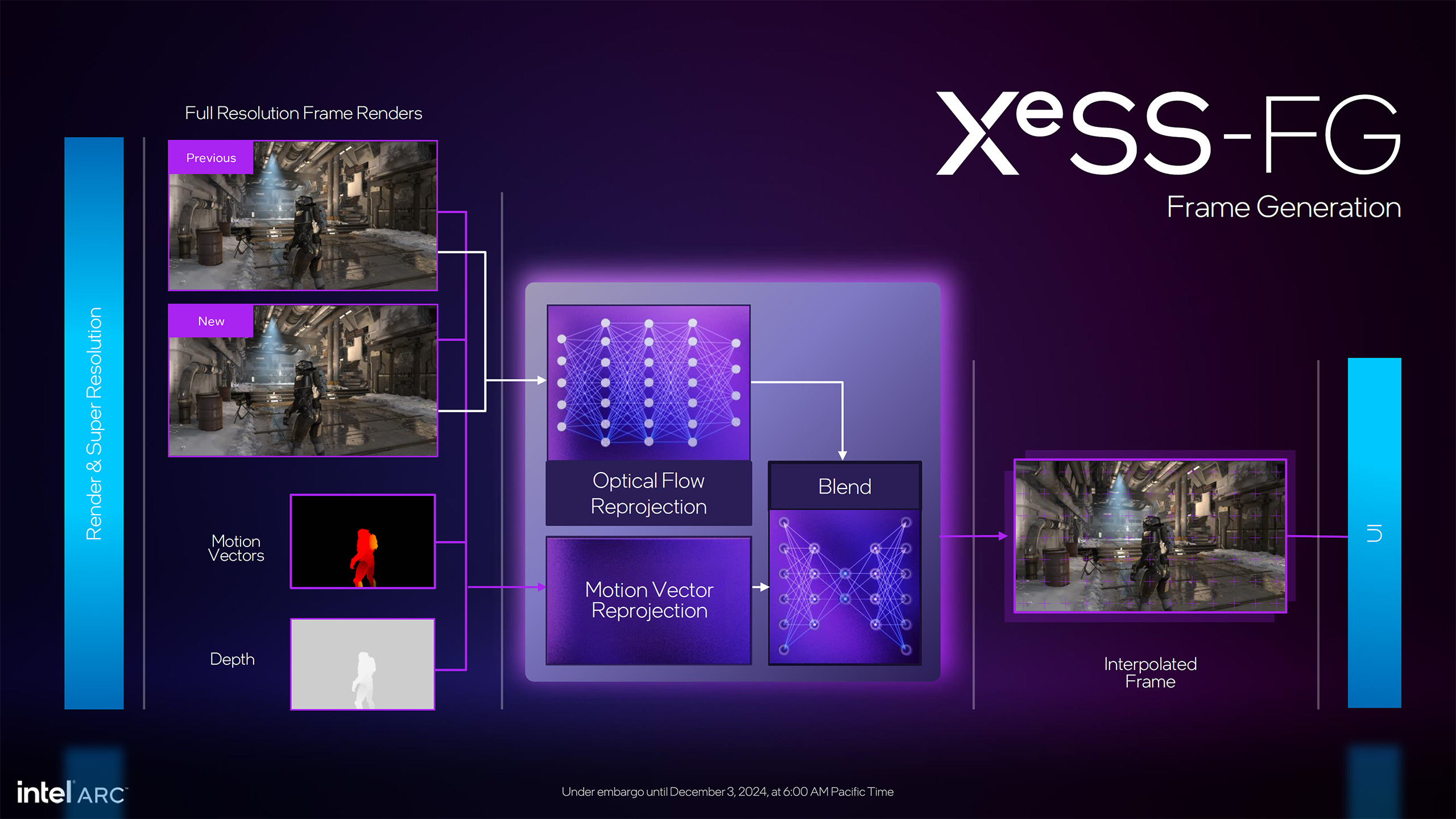

XeSS-FG frame generation interpolates an intermediate frame between two already rendered frames, pretty much in the same way that DLSS 3 and FSR 3 framegen interpolate. However, where Nvidia requires the RTX 40-series with its newer OFA (Optical Flow Accelerator) to do framegen, Intel does all the necessary optical flow reprojection via its XMX cores. It also does motion vector reprojection, and then uses another AI network to blend the two to get an 'optimal' output.

This means that XeSS-FG will run on all Arc GPUs — but not on Meteor Lake's iGPU, as that lacks XMX support. It also means that XeSS-FG will not run on non-Arc GPUs, at least for the time being. It's possible Intel could figure out a way to make it work with other GPUs, similar to what it did with XeSS-SR and DP4a mode, but we suspect that won't ever happen due to performance requirements.

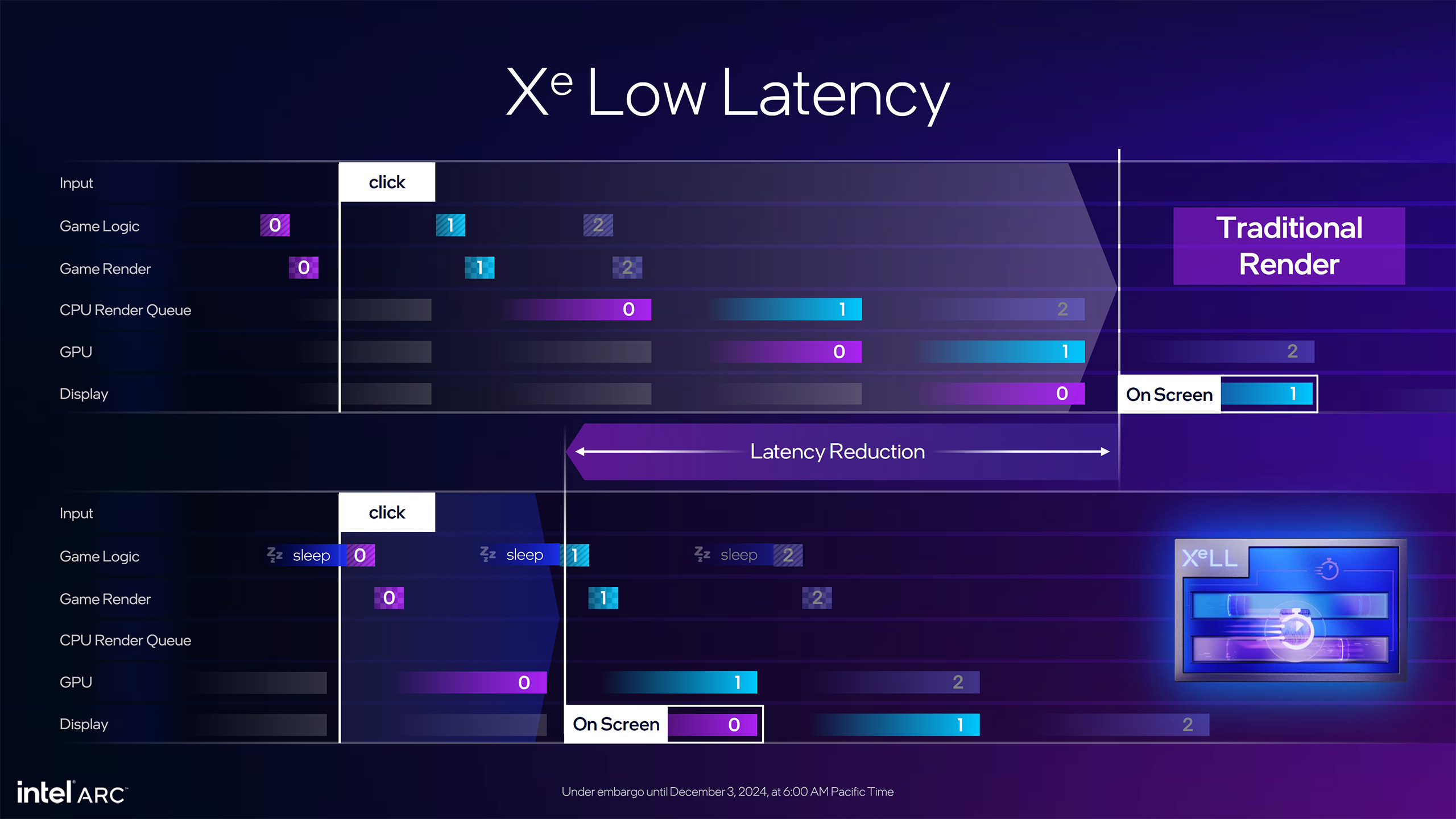

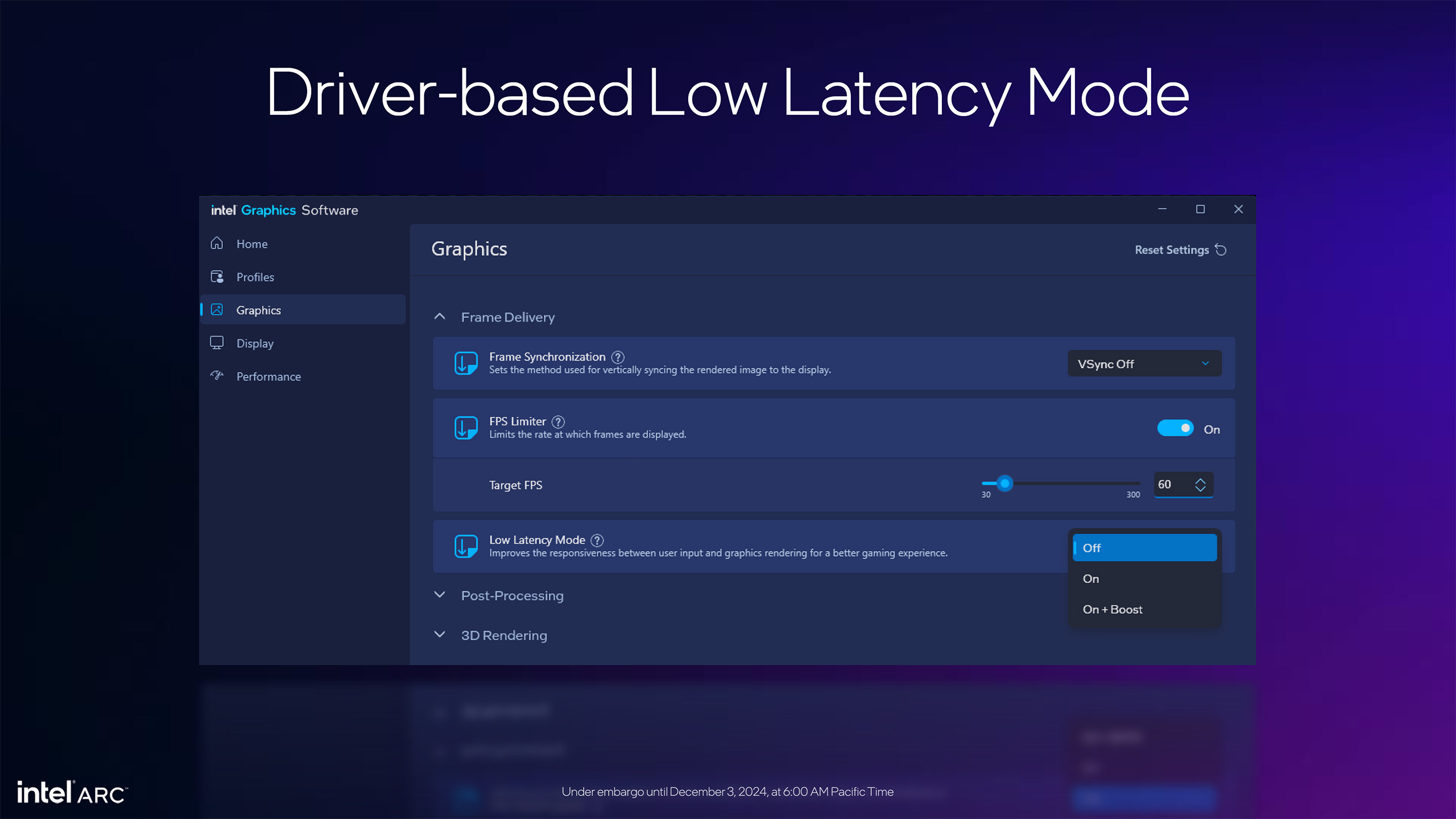

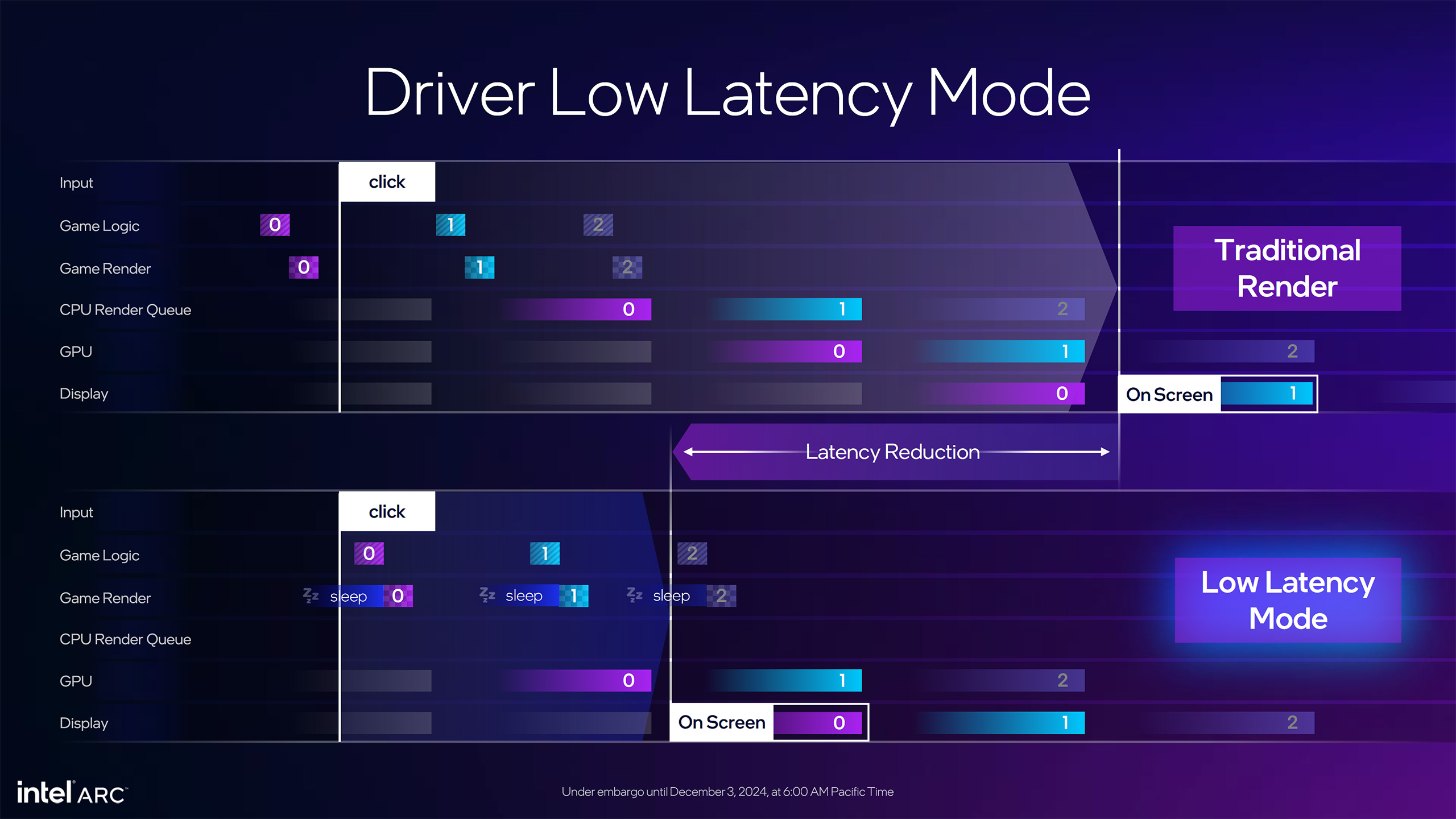

XeSS-LL pairs with framegen to help reduce the added latency created by framegen interpolation. In short, it moves certain work ahead of additional game logic calculations to reduce the latency between user input and having that input be reflected on the display. It's roughly equivalent to Nvidia's Reflex and AMD's Anti-Lag 2 in principle, though the exact implementations aren't necessarily identical.

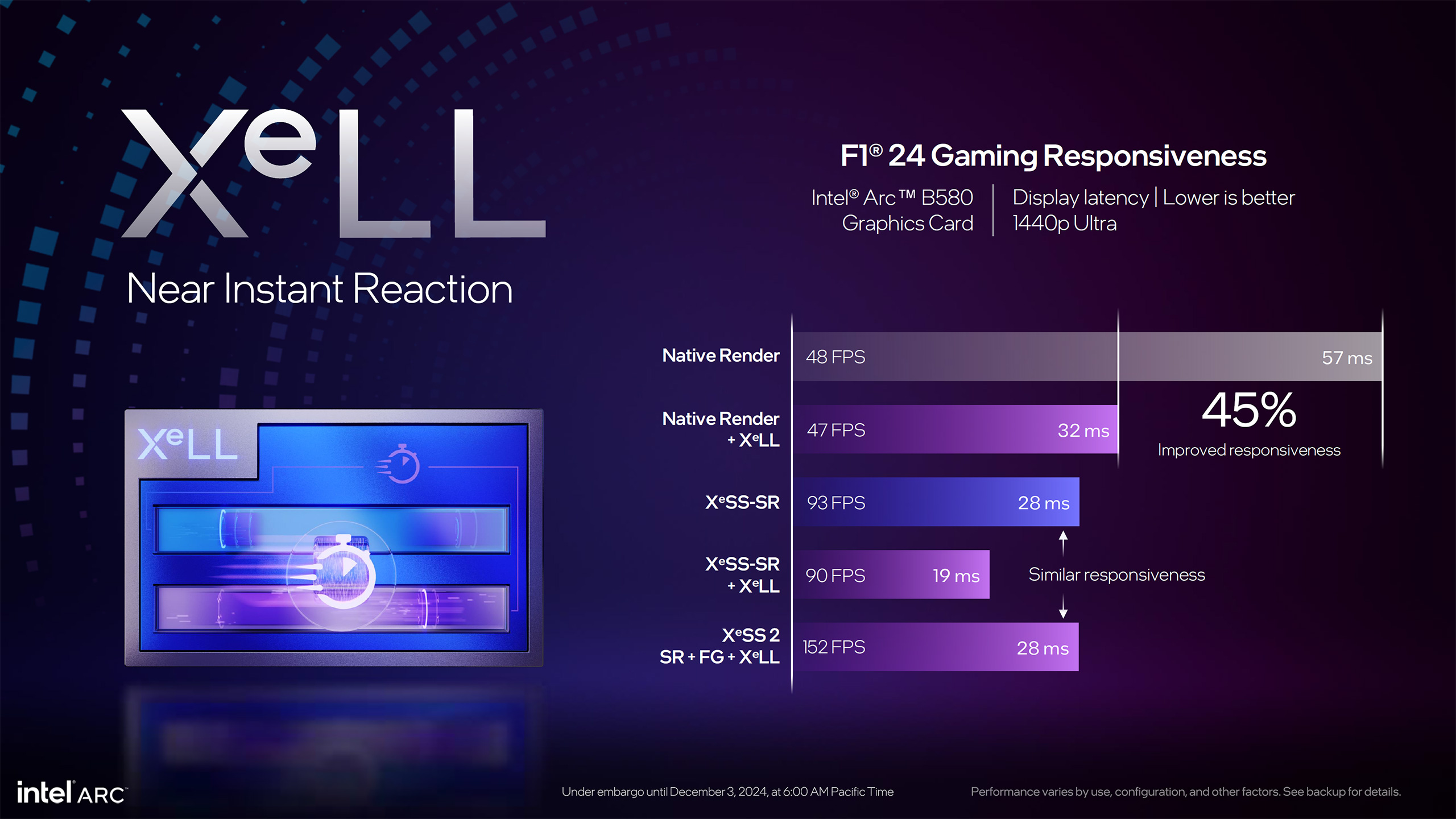

Like DLSS 3 with Reflex and FSR 3 with Anti-Lag 2, Intel says you can get the same latency with XeSS 2 running SR, FG, and LL as with standard XeSS-SR. It gave an example using F1 24 where the base latency at native rendering was 57ms, which dropped to 32ms with XeSS-LL. Turning on XeSS-SR upscaling instead dropped latency to 28ms, and SR plus LL resulted in 19ms of latency. Finally, XeSS SR + FG + LL ends up at the same 28ms of latency as just doing SR, but with 152 fps instead of 93 fps. So, you potentially get the same level of responsiveness but with higher (smoother) framerates.
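Converting Intel's F1 24 frame rates into frame times helps put the latency figures in context. This sketch assumes XeSS-FG inserts one generated frame per rendered frame, so the rendered rate is roughly half the displayed rate; Intel didn't break that number out.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds between displayed frames at a given frame rate."""
    return 1000.0 / fps


def rendered_fps(displayed_fps: float, generated_per_rendered: int = 1) -> float:
    """Frames the game actually renders when each rendered frame is followed by generated ones."""
    return displayed_fps / (1 + generated_per_rendered)


sr_only, sr_fg_ll = 93, 152  # Intel's F1 24 example, both at 28ms latency
print(f"SR only: {frame_time_ms(sr_only):.1f} ms per frame")
print(f"SR + FG + LL: {frame_time_ms(sr_fg_ll):.1f} ms per displayed frame, "
      f"~{rendered_fps(sr_fg_ll):.0f} rendered fps")
```

Under that assumption the game renders around 76 fps with framegen versus 93 fps without, which suggests framegen itself costs some rendering throughput, while XeSS-LL brings latency back to the SR-only 28ms.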

XeSS has seen plenty of uptake by game developers since it first launched in 2022. There are now over 150 games that support some version of XeSS 1.x. However, as with FSR 3 and DLSS 3, developers will need to shift to XeSS 2 if they want to add framegen and low latency support. Some existing games that already support XeSS will almost certainly get upgraded, but at present Intel only named eight games that will have XeSS 2 in the coming months — with more to come.

And no, you can't get XeSS 2 in a game with XeSS 1.x support by swapping in a newer XeSS DLL, as there are other requirements for XeSS 2 that the game wouldn't support. But as we've seen with FSR 3 and DLSS 3, it should be possible for modders to hack in support with a bit of creativity.

Battlemage's AI Aspirations

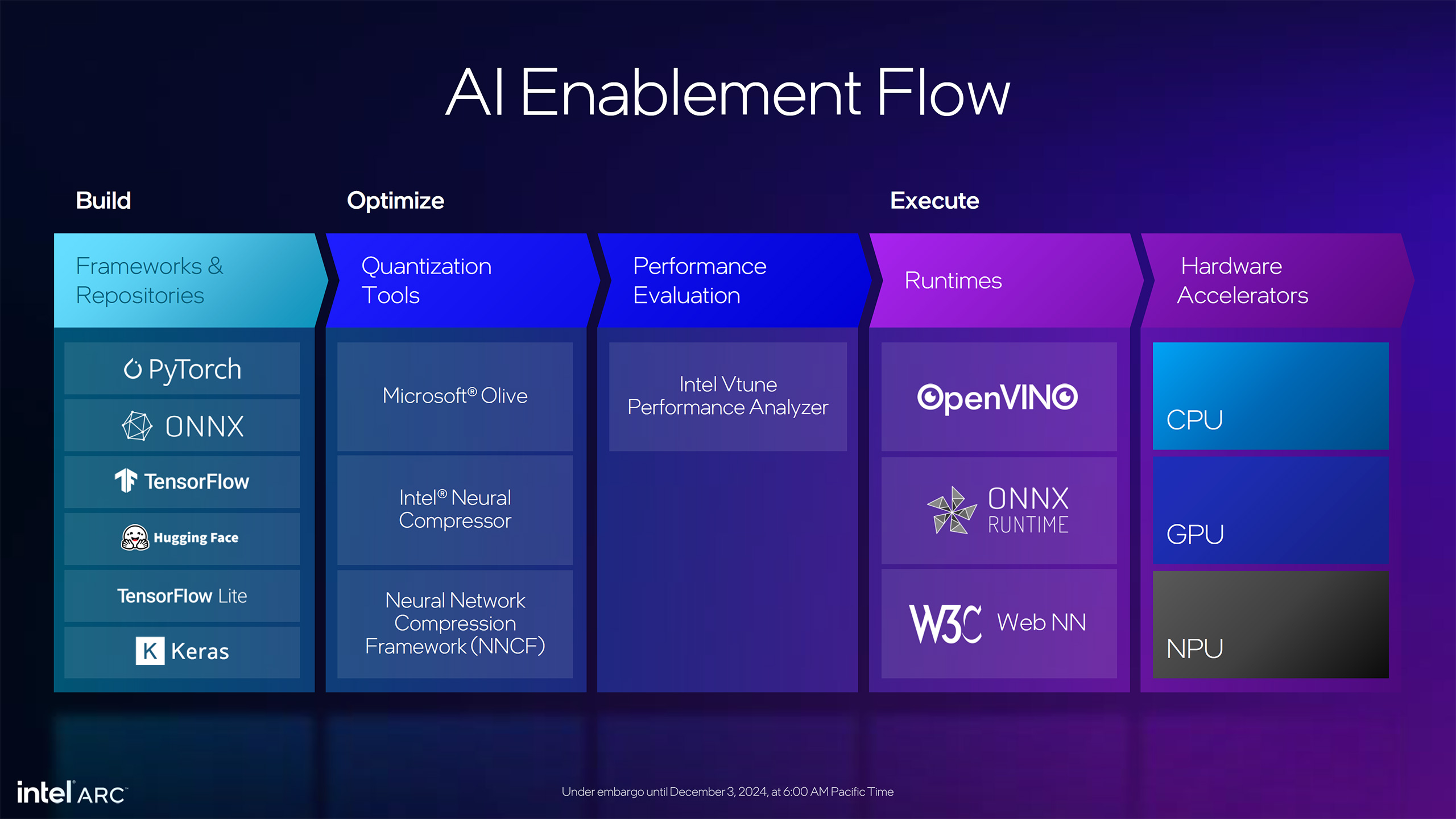

Intel also updated the XMX engines, which are mostly used for AI workloads — including XeSS upscaling. The above slides provide most of the details, and people interested in AI should already be familiar with what's going on in this fast-moving field.

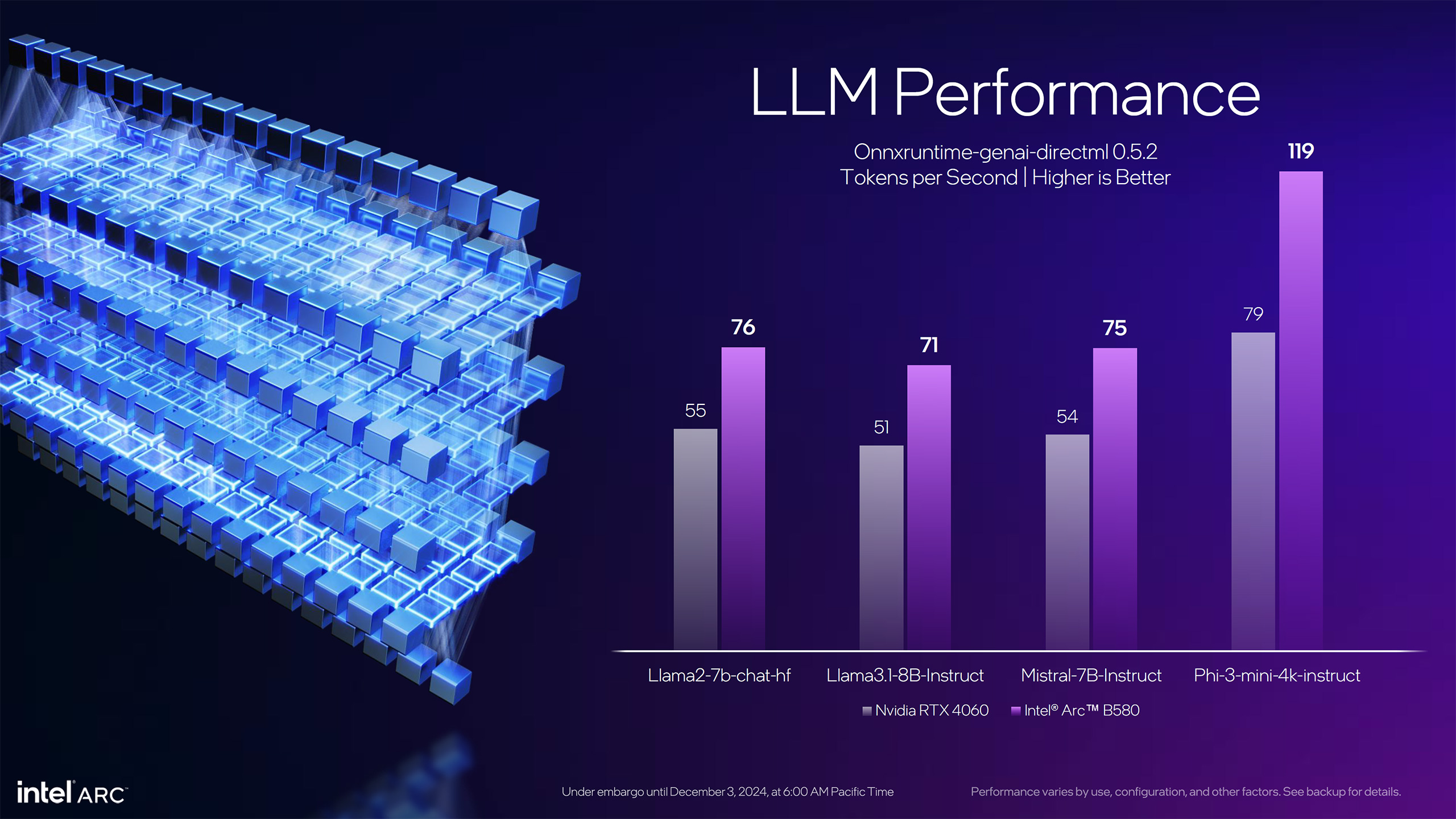

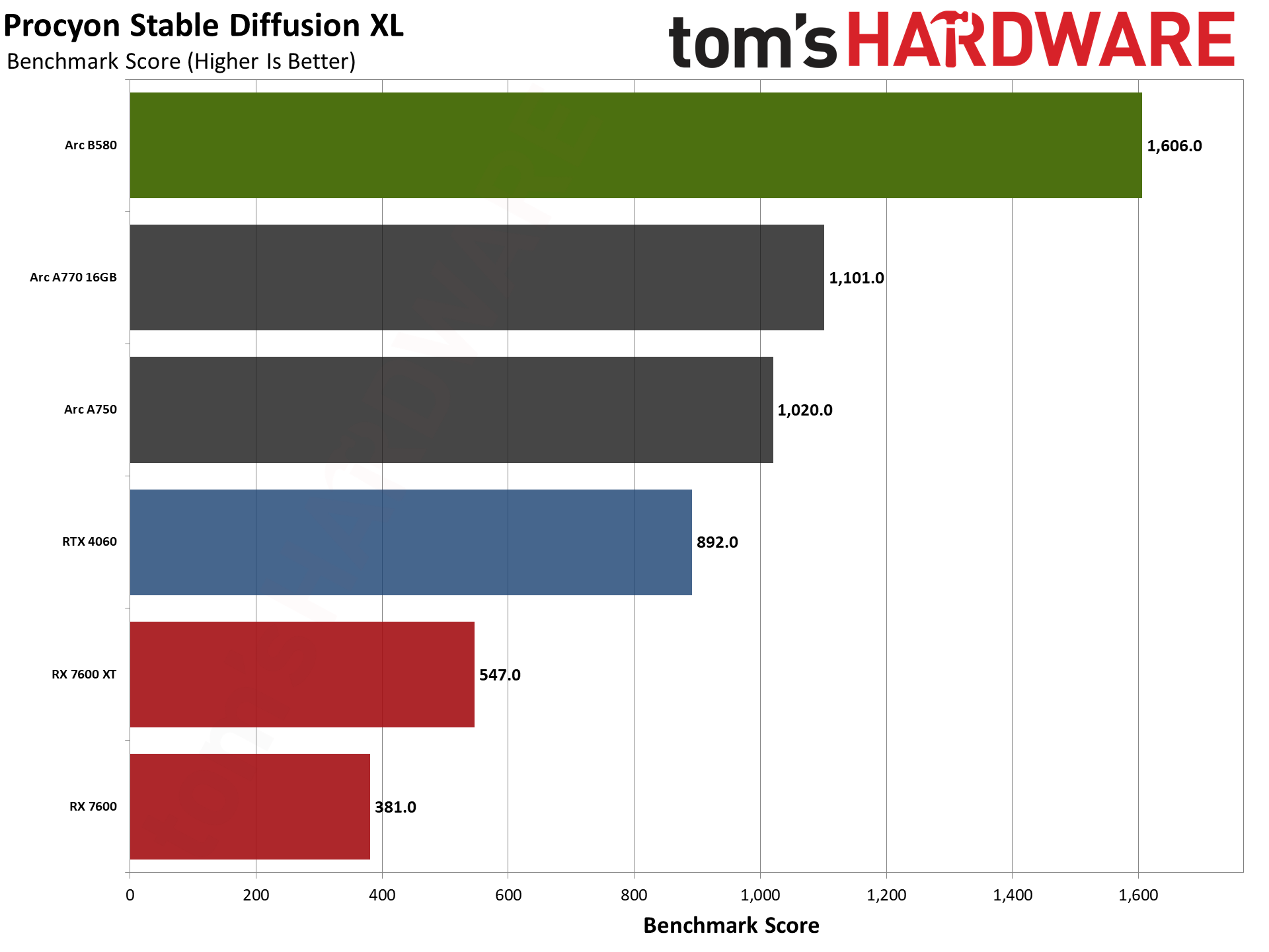

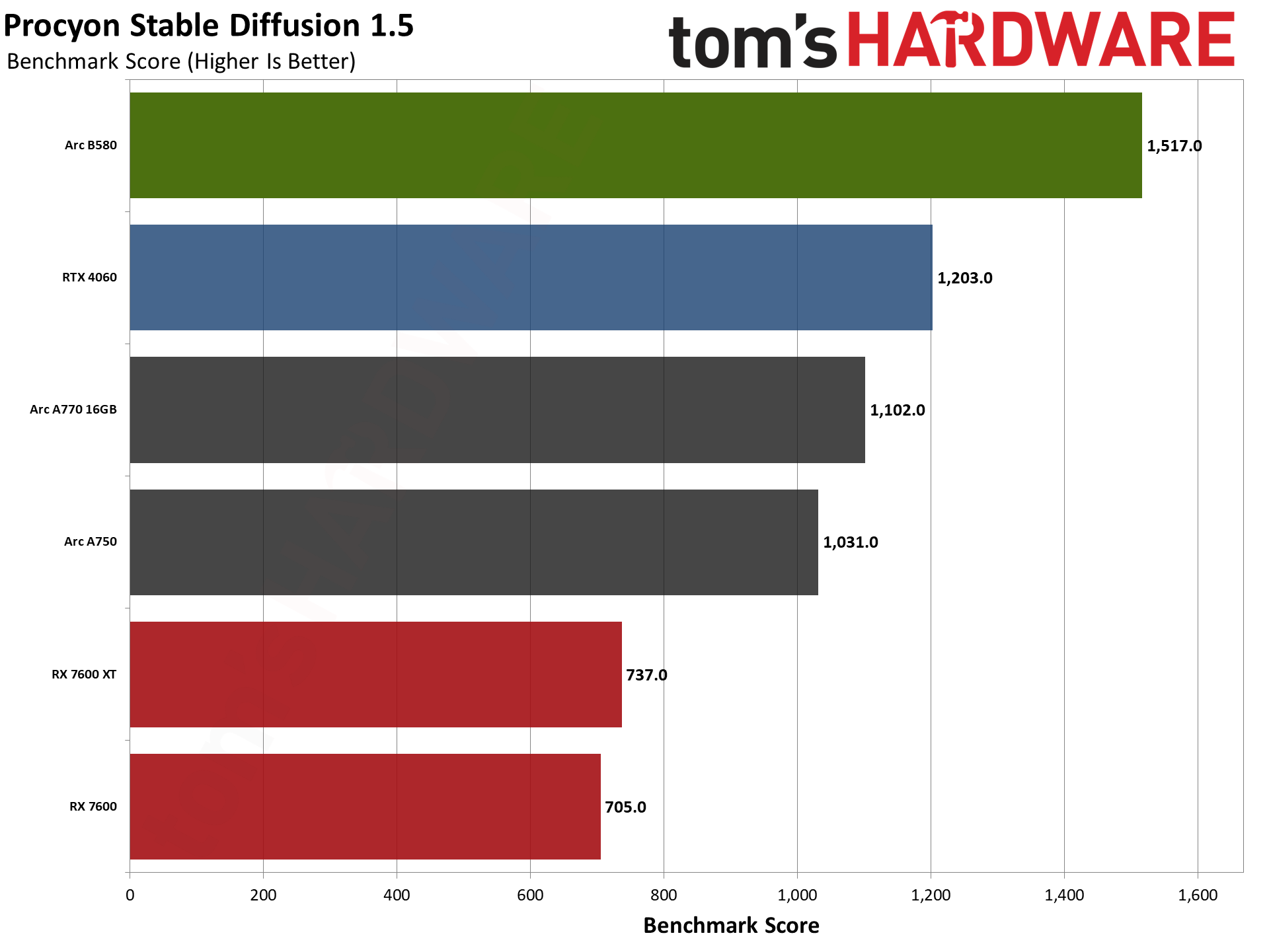

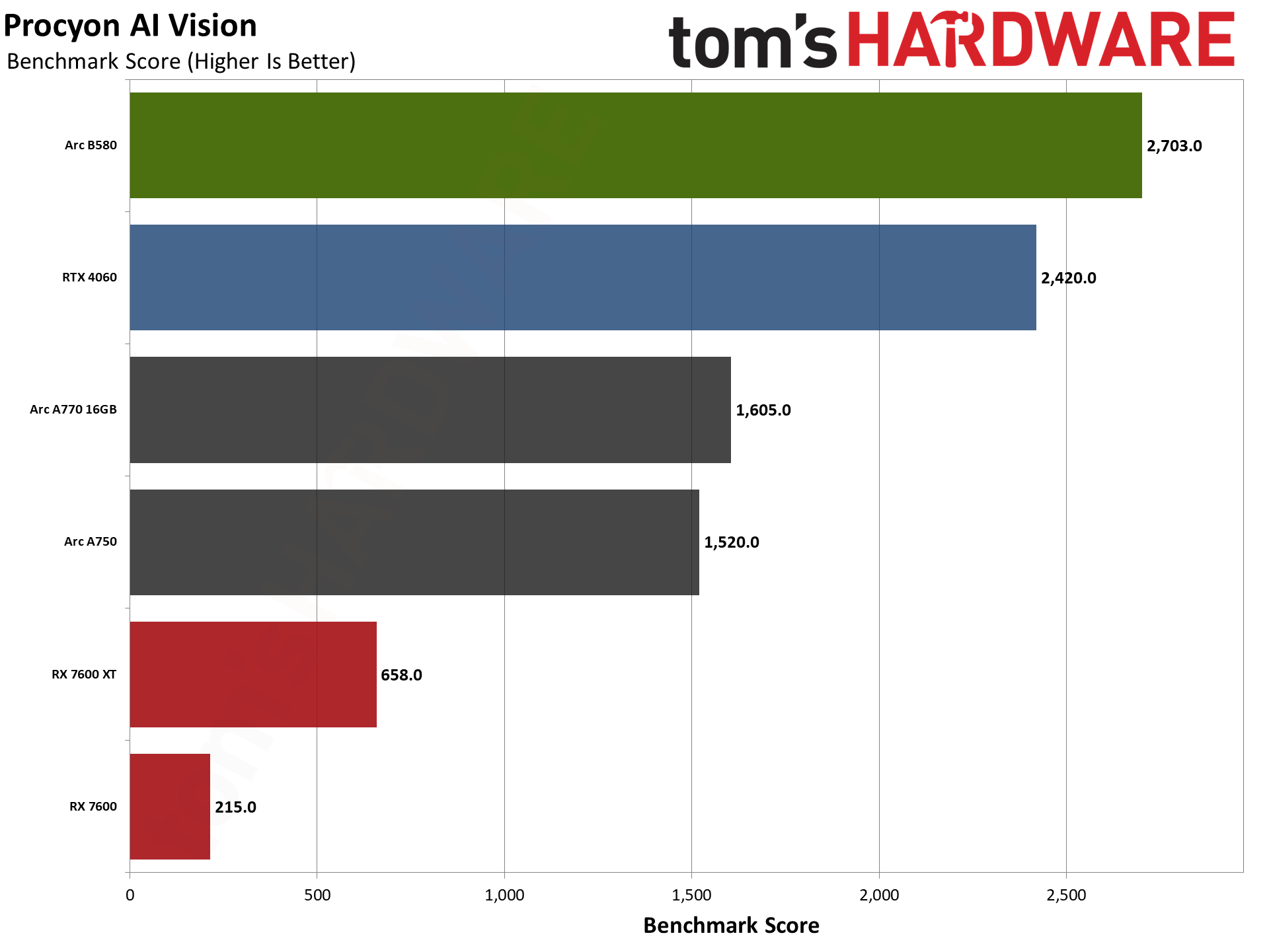

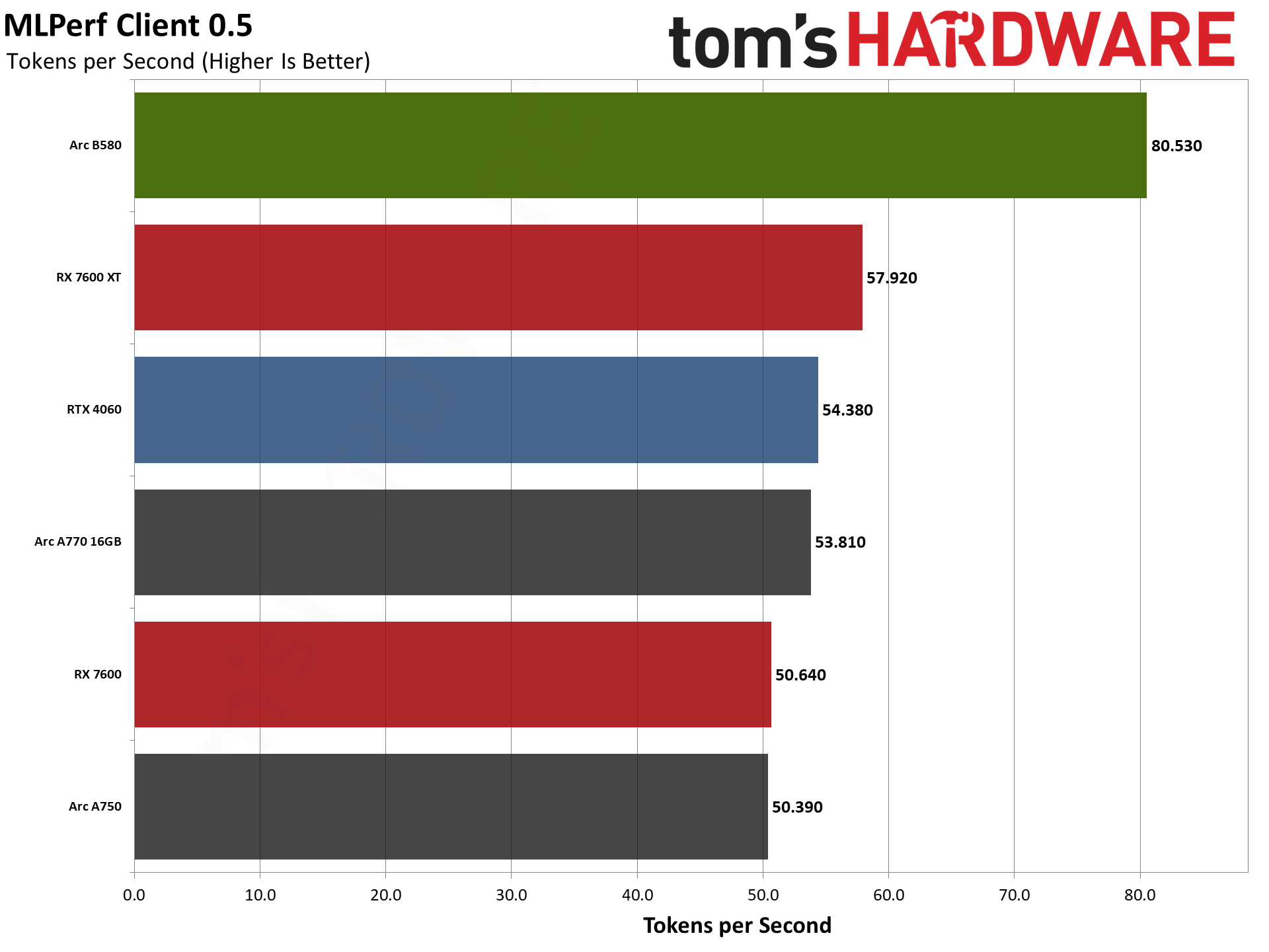

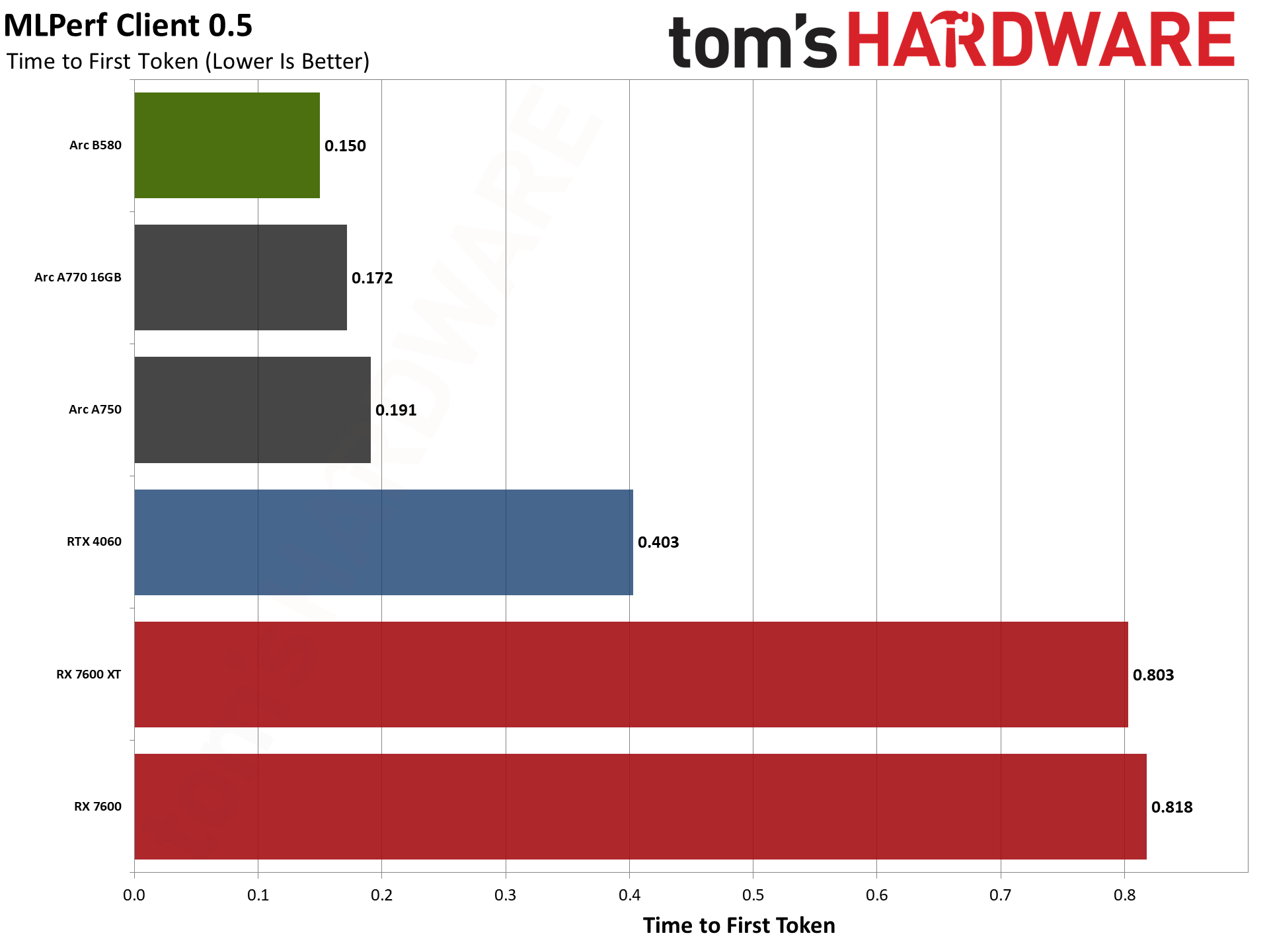

Intel says it's getting better LLM performance in terms of tokens per second via several text generation models. Depending on the model, Intel says Arc B580 delivers around 40–50 percent higher AI performance than the RTX 4060. That's going after some pretty low-hanging fruit, as the RTX 4060 isn't exactly an AI powerhouse. The B580 should also outpace AMD's RDNA 3 offerings in the AI realm.

But we don't just have Intel's word on the subject of AI. We also tested the B580 in several AI workloads.

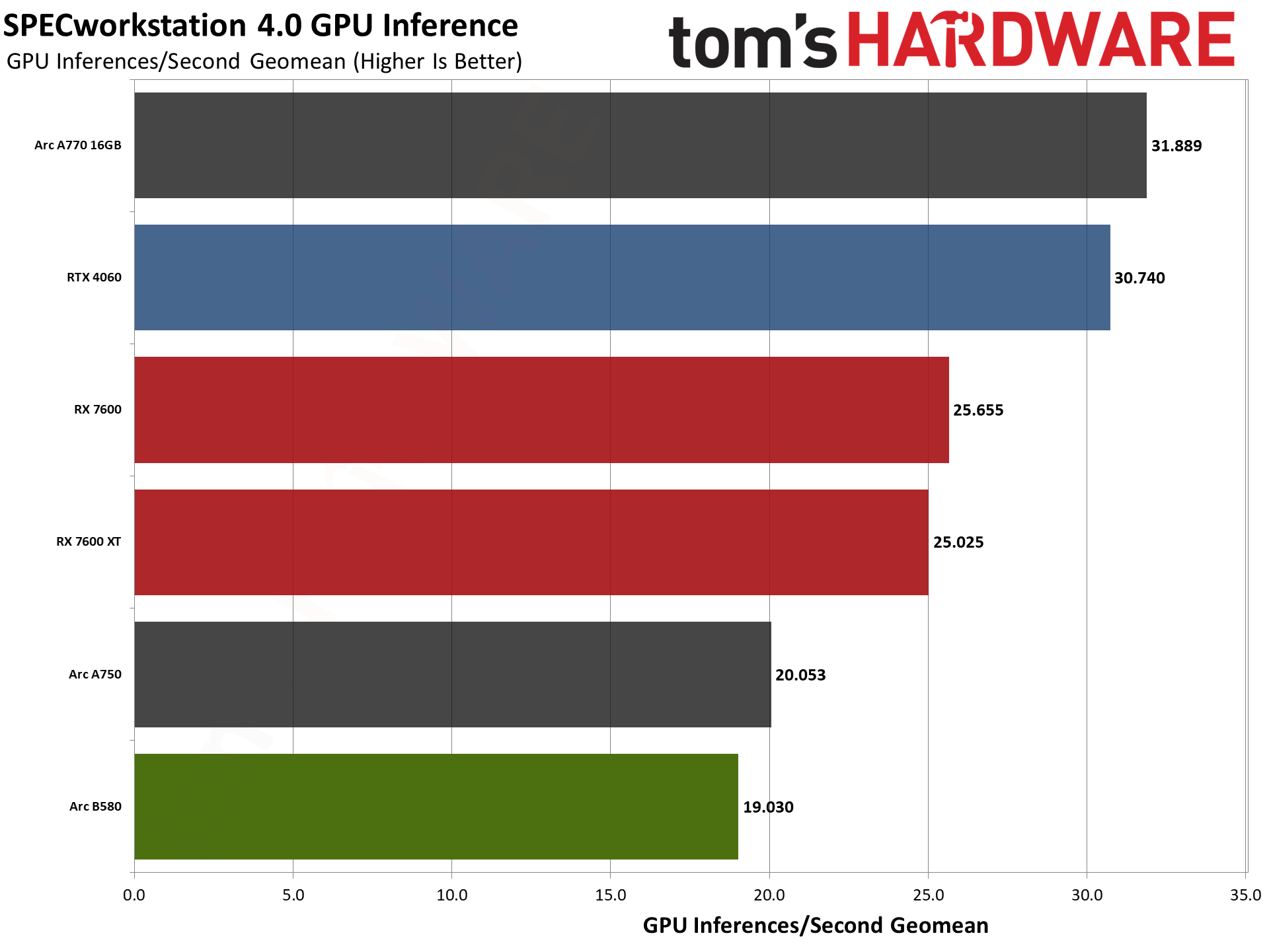

Intel's B580 takes a clear lead in the Procyon Stable Diffusion workloads, both SD1.5 and SDXL. It also wins in the AI Vision test, but only by a small amount. MLPerf Client also looks very good, while the SPECworkstation 4.0 inference results fall to the bottom of the chart.

And that leads us to the final subject regarding Arc Battlemage...

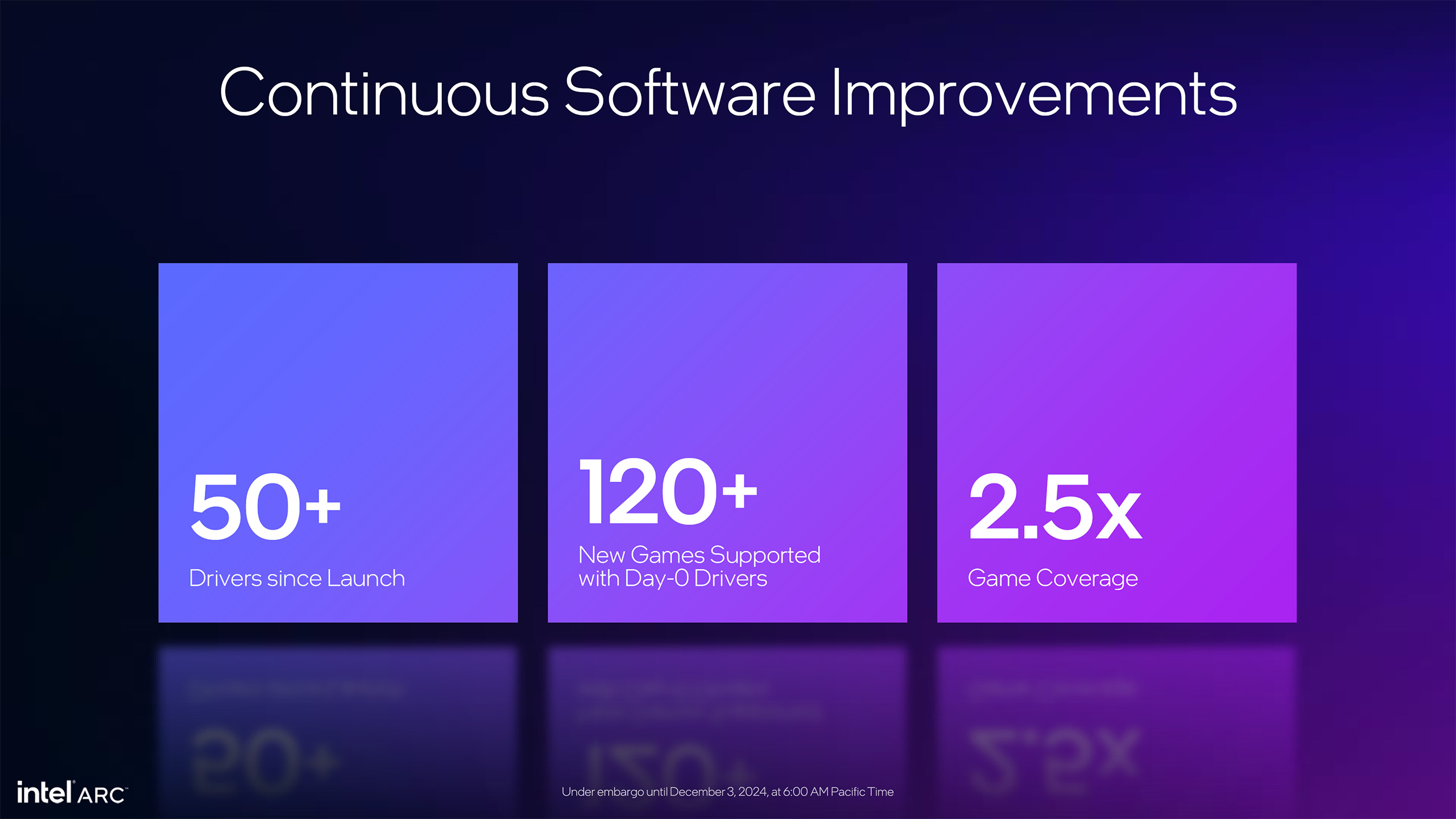

Battlemage Drivers

More than anything else, the biggest sticking point with Intel Arc GPUs was and is the graphics drivers. Things have improved a lot since the first Arc GPUs launched in 2022, which Intel likes to refer to as the "fine wine" aspect of driver development. While it's true that drivers have improved and continue to improve, there are still plenty of cases where the B580, at least, hasn't quite lived up to expectations.

Looking at the results of our 23-game test suite, seven of the games appear to underperform on the B580 relative to the A770. More broadly, while it's not always an outright loss, Minecraft (with RT enabled), Black Myth: Wukong, Baldur's Gate 3, Call of Duty: Black Ops 6, Dragon Age: The Veilguard, Final Fantasy XVI, God of War Ragnarök, Hogwarts Legacy, The Last of Us Part 1, Stalker 2, Starfield, and Warhammer 40,000: Space Marine 2 all exhibited at least some level of sub-par performance. That's basically half of the games.

Some of the issues relate to either poor 1080p performance relative to the competition or poor minimum FPS (i.e., more microstutter), and in some cases both. Intel is aware of the concerns, and the driver team appears to be working feverishly to address problems. But for now, there are quite a few "known issues" with the latest drivers.

It also doesn't help that the Arc B580 currently exists on a separate driver branch from the other GPUs. The latest (as of Jan. 2, 2025) drivers have version 6449 for Alchemist and other Intel GPUs, or 6256 for Battlemage. At some point in the hopefully near future, the drivers will get merged into a single path and version, but it might take another month or two for that to happen. We suspect that some of the issues we encountered are due to B580 being on an older code path for newer releases like Stalker 2.

Support for major launches has been hit and miss for Intel over the past two years. Some games have worked great with a day zero driver release, others have had minor to moderate rendering errors, and a few even failed to work until a driver fix became available. Generally speaking, we don't experience this level of problems with AMD and Nvidia GPUs. Older games can also be hit and miss.

And while the "fine wine" stuff might be sort of funny on the surface, in practice no gamer wants to wait potentially months or more to get the expected level of performance from their graphics card.

I'm also disappointed that the Studio video capture and streaming component of Intel's driver package was removed. It wasn't as good as the Nvidia and AMD options, but it at least worked, and it shouldn't have been too difficult to fix: mostly, all it really needed was to capture videos with a process name and time stamp. Now you have to use a third-party app like OBS, and my experience with capturing via OBS using the B580 has been problematic so far.

Intel's video encoding is otherwise roughly on par with Nvidia's, so it's a shame that this has apparently been deemed less important going forward. AV1 and VP9 support are still present, and should work at least as well as on Alchemist, but there haven't been any significant upgrades on the video codec side of things.

Closing Thoughts

Battlemage is here, at least the first mainstream model, with compelling performance and value. It's been routinely sold out in the month or so since launch, indicating some pent-up demand, or perhaps just that the supply of cards hasn't been very high; we don't know for certain. What remains to be seen is how the rest of the Intel GPU family fleshes things out, and where AMD and Nvidia land with their next-gen graphics cards. Beating the older existing architectures is a good start, but the competition isn't standing still.

Virtually every aspect of the Arc B580 represents a healthy step forward for Intel. It's significantly faster than the previous generation A750 and A580, even beating the A770 card. There are new features and a reworked architecture to help it compete, and it offers a good improvement in power efficiency as well. Intel drivers are better now than when the first Arc GPUs arrived two years ago, and in just the past three weeks Intel has released four new driver versions.

With all the good, we can't declare Battlemage a universal success yet, mostly because we haven't seen precisely where the new Nvidia and AMD GPUs will land. AMD has hinted that it's also planning to go after the mainstream markets, while Nvidia appears to be taking its usual approach of a top-down release, starting with extreme RTX 5090 and 5080 chips that will likely cost 4X to 8X more than the B580. Will Nvidia and AMD even try to compete with Intel's $220–$250 offerings? Perhaps not, but that remains to be seen.

We also need to see the rest of the Battlemage family. B580 looks good so far, and B570 will probably be 10–15 percent slower while shaving 12% off the price. If that proves accurate, most people would be better served by spending the additional $30: there's plenty of cost in the rest of any gaming PC already, so getting around 15% higher performance for a price bump of under 15% is the more sensible choice. But Battlemage chips above the B580 level haven't been officially announced and will almost certainly have newer competition from AMD to deal with once they arrive.
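Here is that value math written out. The B570 performance deficit is our 10–15 percent guess, not a measurement, and the $800 rest-of-PC cost is a hypothetical placeholder.

```python
B580_PRICE, B570_PRICE = 249, 219


def upgrade_value(b570_slowdown: float, rest_of_pc: float = 0.0) -> tuple[float, float]:
    """Return (% more performance, % more cost) for choosing the B580 over the B570.

    b570_slowdown is the assumed fraction by which the B570 trails the B580
    (e.g. 0.15); rest_of_pc is a hypothetical cost for the other components.
    """
    perf_gain = 1 / (1 - b570_slowdown) - 1
    cost_gain = (B580_PRICE + rest_of_pc) / (B570_PRICE + rest_of_pc) - 1
    return perf_gain * 100, cost_gain * 100


for slowdown in (0.10, 0.15):
    for rest in (0, 800):
        perf, cost = upgrade_value(slowdown, rest)
        print(f"B570 {slowdown:.0%} slower, ${rest} other parts: +{perf:.1f}% perf for +{cost:.1f}% cost")
```

At the card level the picture is mixed (about 11–18% more performance for about 14% more money), but once the rest of the system is counted, the extra $30 is a much smaller share of the total cost.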