Instagram helped define the look of social media: aesthetic feeds, filtered selfies and perfectly curated grid posts. But according to Instagram head Adam Mosseri, that era is over — and AI is to blame. Could social media be becoming too fake?

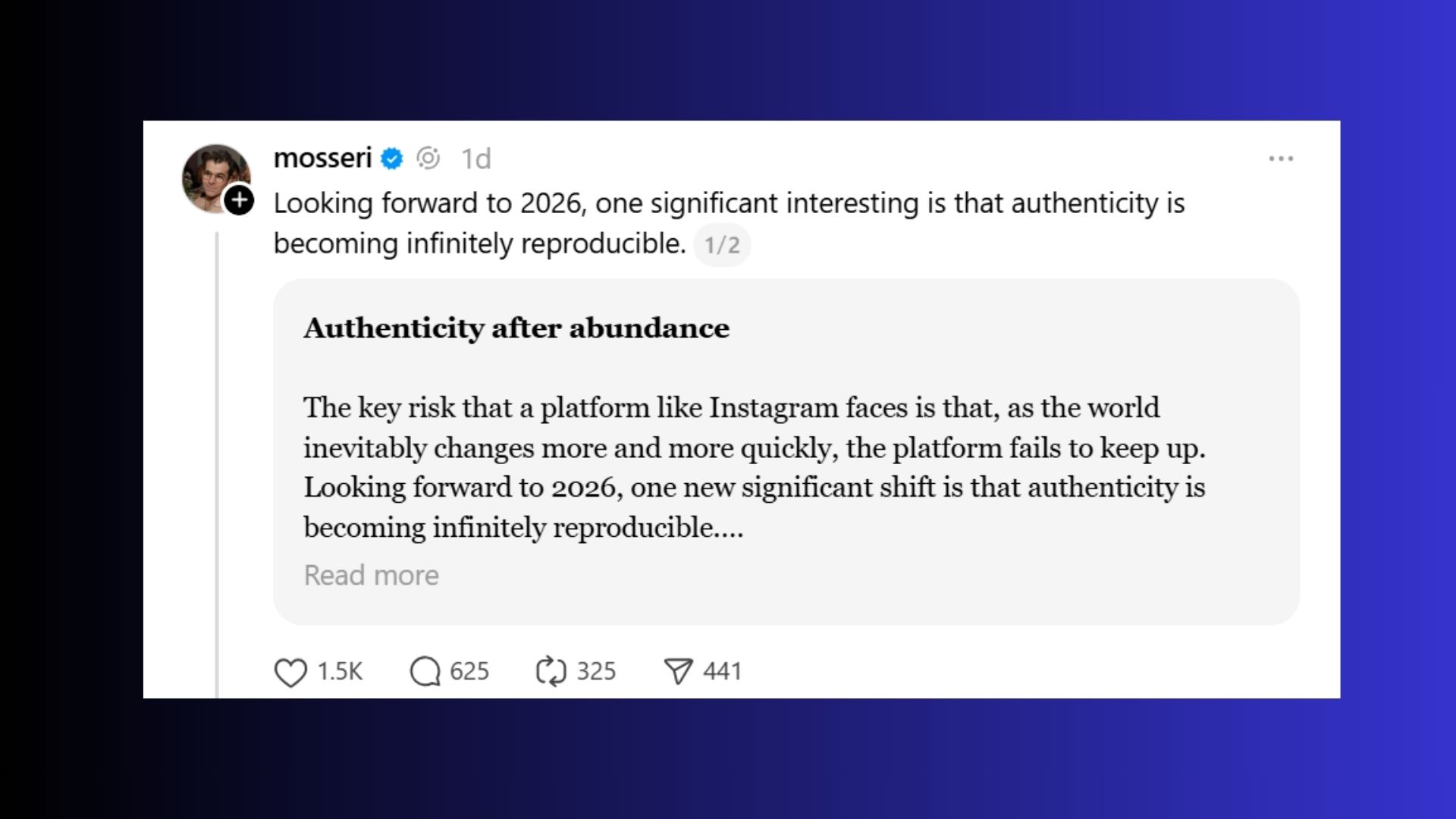

In a year-end essay posted on Threads, Mosseri wrote that AI-generated content has shattered the visual trust that once powered the platform. When images can be generated in seconds, a perfectly lit photo no longer proves that something happened. In fact, he says, it now sparks "suspicion."

It's an interesting sentiment, considering that Meta, Instagram's parent company, just purchased Manus AI.

It's clear that the most trustworthy content has shifted to what looks the least produced — blurry candid photos, random DMs and anything that feels too messy to fake. But as we begin 2026, AI is only becoming more integrated across the web.

Gen Z has already moved on from the grid

Mosseri points out that most users under 25 no longer care about posting to their public grid. Instead, they’re sharing “unflattering candids” in private group chats or disappearing stories — a quiet rebellion against algorithm-chasing perfection.

That shift isn't just about preference. It reflects a deeper behavioral change: people don’t trust how things look anymore. They trust who’s posting them.

If a photo is too smooth, too symmetrical or too sharp, younger users assume it's AI. Visual perfection now reads like a red flag. Immediately assuming a post was created with AI might be the theme of 2026.

Considering the source

The irony of Instagram’s authenticity crisis is that social media was the birthplace of filters. It's almost poetic how the platform is now calling time on the aesthetic era it helped create. But Mosseri’s take feels accurate: we’ve hit peak polish, and it no longer feels personal.

AI-generated influencers, product shots and aspirational scenes dominate the feed. The things that once signaled effort now signal automation. And the only thing that still feels undeniably human? A blurry, badly lit photo your friend sent in a group chat.

Rather than trying to verify every individual image, Mosseri says the platform is now focused on verifying people, as the question shifts from "is this real?" to "who shared this?"

For this reason, Instagram plans to:

- Label AI-generated content more clearly

- Show more context about accounts

- Build new tools to help human creators stay competitive in a synthetic media flood

Bottom line

In one of his more forward-looking ideas, Mosseri suggested that camera manufacturers should cryptographically sign images at capture, embedding proof that they were taken by a real device, at a real time, in a real place.

This kind of hardware-level authenticity would mark a major change, moving beyond detecting fakes after the fact to preventing doubt before it begins. Detection tools already exist: Google's Nano Banana Pro can detect AI images, and if you upload an image to ChatGPT, it can point out signs of AI generation within it.
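For the curious, the capture-time signing idea Mosseri describes can be sketched in a few lines of Python. This is only an illustration, not how any camera maker or Instagram actually does it. The function names, the `DEVICE_KEY` value and the manifest fields are all made up for the example, and it uses a shared-secret HMAC as a stand-in for the public-key signature a real device would produce with a key held in secure hardware.

```python
import hashlib
import hmac
import json

# Hypothetical per-device secret, for illustration only. A real camera would
# keep a private key in secure hardware and use a public-key signature.
DEVICE_KEY = b"demo-device-key"


def sign_capture(image_bytes: bytes, device_id: str, timestamp: str, location: str) -> dict:
    """Bind the image to its capture claims and return a signed manifest."""
    claims = {
        "device_id": device_id,
        "timestamp": timestamp,
        "location": location,
        "image_sha256": hashlib.sha256(image_bytes).hexdigest(),
    }
    # Sort keys so the same claims always serialize to the same bytes.
    payload = json.dumps(claims, sort_keys=True).encode("utf-8")
    signature = hmac.new(DEVICE_KEY, payload, hashlib.sha256).hexdigest()
    return {"claims": claims, "signature": signature}


def verify_capture(image_bytes: bytes, manifest: dict) -> bool:
    """Return True only if the image and its claims are unchanged since signing."""
    claims = manifest["claims"]
    # The pixels must still match the hash recorded at capture.
    if hashlib.sha256(image_bytes).hexdigest() != claims["image_sha256"]:
        return False
    # The claims must still match the signature recorded at capture.
    payload = json.dumps(claims, sort_keys=True).encode("utf-8")
    expected = hmac.new(DEVICE_KEY, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, manifest["signature"])
```

In this sketch, changing a single byte of the image or editing the recorded time or place makes `verify_capture` return `False`. That is the property a signed-at-capture photo would rely on: the proof travels with the file instead of being guessed at later.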

It's a clear signal that even platform leaders don’t believe content moderation alone can keep up with AI. In 2026, the mess might be the message.
