There are several concerning aspects of generative AI, and AI image generation in particular. There's the potential impact on creative jobs, and the fear that human creative work as we know it may cease to exist, replaced by a nightmarish jumble of poor-quality content deemed 'good enough'. And then there's the fear of a complete collapse of human knowledge and science as it becomes impossible to know what to believe because so much material is nonsense made up by AI.

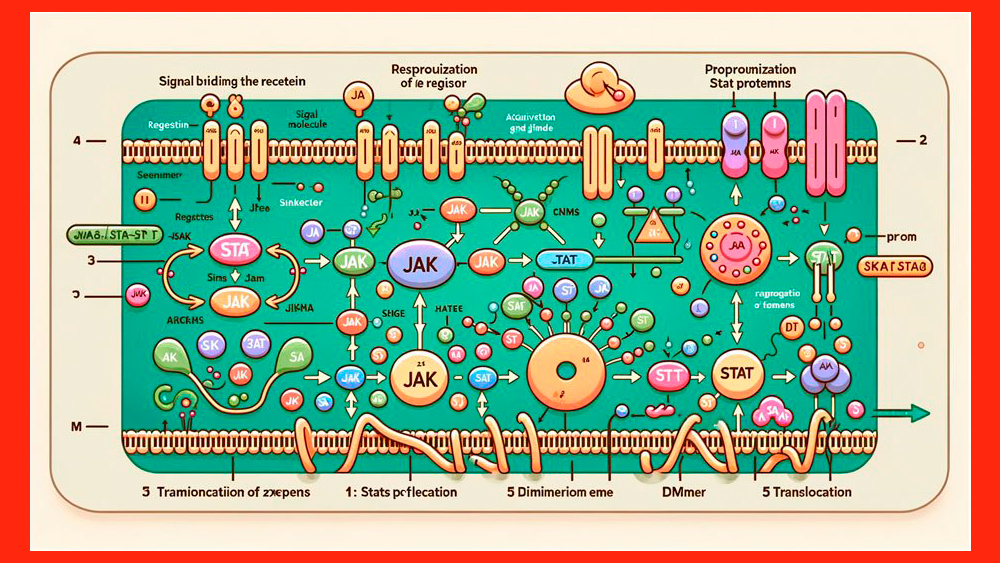

One place where AI imagery would never pass muster, of course, is in a scientific article. Scientific work is peer reviewed to ensure its validity, so a journal would never be able to print a paper full of obvious AI-generated nonsense, right? Only that's what's just happened (see our pick of the best AI image generators if you want to create your own phoney paper).

"We thank the readers for their scrutiny of our articles: when we get it wrong, the crowdsourcing dynamic of open science means that community feedback helps us to quickly correct the record." (February 15, 2024)

As pointed out by a member of the public on X, a now-retracted study published in the supposedly peer-reviewed Frontiers in Cell and Developmental Biology featured images that can immediately be identified as the work of AI. There are vaguely 'sciency-looking' diagrams labelled with nonsense words. Oh, and then there's the impossibly well-endowed rat.

The authors of the paper were actually quite honest about the use of AI, crediting the images to Midjourney. Incredibly, the journal still published the piece.

"This actually demonstrates that peer reviews are useless, and only contributes to the growing distrust of the scientific community," one person pointed out on X. "Lack of scrutiny like this does incalculable harm to the public's trust in science, particularly at a time in which certain political forces are actively stoking such concerns," someone else added.

Perhaps even more outrageously, the journal tried to spin the failure of its peer review system into evidence of the merits of community-driven open science. "We thank the readers for their scrutiny of our articles: when we get it wrong, the crowdsourcing dynamic of open science means that community feedback helps us to quickly correct the record," the publication responded on X.

Such disasters will have many people wondering what the objective of generative AI is. This surely isn't it, but the fact is that the technology is going to be, and already is, everywhere, including in places where it really shouldn't be, such as Uber Eats menu images.