Like the products of all the big AI companies, Bing's Image Creator software has a content policy that prohibits the creation of images that encourage sexual abuse, suicide, graphic violence, hate speech, bullying, deception, and disinformation. Some of the rules are heavy-handed even by the usual "trust and safety" standards (hate speech is defined as speech that "excludes" individuals on the basis of any actual or perceived "characteristic that is consistently associated with systemic prejudice or marginalization"). Predictably, this definition will exclude a lot of perfectly anodyne images. But the rules are the least of it. The more impactful, and more interesting, question is how those rules are actually applied.

I now have a pinhole view of AI safety rules in action, and it sure looks as though Bing is taking very broad rules and training its engine to apply them even more broadly than anyone would expect.

Here's my experience. I have been using Bing Image Creator lately to create Cybertoonz (examples here, here, and here), despite my profound lack of artistic talent. It had the usual technical problems—too many fingers, weird faces—and some problems I suspected were designed to avoid "gotcha" claims of bias. For example, if I asked for a picture of members of the European Court of Justice, the engine almost always created images of more women and identifiable minorities than the CJEU is likely to have in the next fifty years. But if the AI engine's political correctness detracted from the message of the cartoon, it was easy enough to prompt for male judges, and Bing didn't treat this as "excluding" images by gender, as one might have feared.

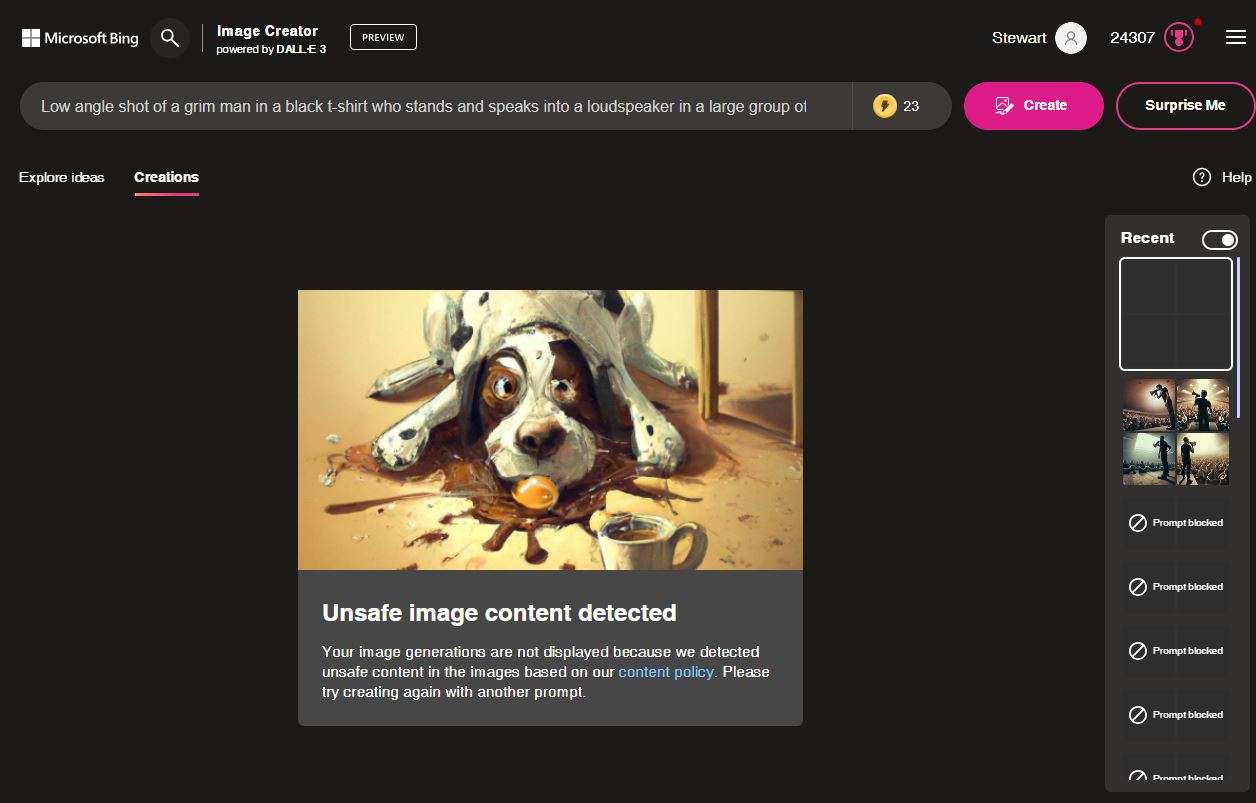

My more recent experience is a little more disturbing. I created this Cybertoonz cartoon to illustrate Silicon Valley's counterintuitive claim that social media platforms are engaged in protected speech when they suppress the speech of many of their users. My image prompt was some variant of "Low angle shot of a male authority figure in a black t-shirt who stands and speaks into a loudspeaker in a large group of seated people wearing gags or tape over their mouths. Digital art lo-fi".

As always, Bing's first attempt was surprisingly good but flawed, and getting a usable version required dozens of edits to the prompt. None of the images was quite right. I finally settled for the one that worked best, turned it into a Cybertoonz cartoon, and published it. But I hadn't given up on finding something better, so I went back the next day and ran the prompt again.

This time, Bing balked. It told me my prompt violated Bing's safety standards.

After some experimenting, it became clear that what Bing objected to was depicting an audience "wearing gags or tape over their mouths."

How does this violate Bing's safety rules? Are gags an incitement to violence? A marker for "[n]on-consensual intimate activity"? In context, those interpretations of the rules are ridiculous. But Bing isn't interpreting the rules in context. It's trying to write additional code to make sure there are no violations of the rules, come hell or high water. So if there's a chance that the image it produces might show non-consensual sex or violence, the trust and safety code is going to reject it.

This is almost certainly the future of AI trust and safety limits. It will start with overbroad rules written to satisfy left-leaning critics of Silicon Valley. Then those overbroad rules will be further broadened by hidden code written to block many perfectly compliant prompts just to ensure that it blocks a handful of noncompliant prompts.

In the Cybertoonz context, such limits on AI output are simply an annoyance. But AI isn't always going to be a toy. It's going to be used in medicine, hiring, and other critical contexts, and the same dynamic will be at work there. AI companies will be pressured to adopt trust and safety standards, and code implementing them, that aggressively bar outcomes that might offend the left half of American political discourse. In applications that affect people's lives, however, the code that ensures those results will have a host of unanticipated consequences, many of which no one can defend.

Given the stakes, my question is simple. How do we avoid those consequences, and who is working to prevent them?

The post Images that Bing Image Creator won't create appeared first on Reason.com.