Most species are transitory. They go extinct, branch into new species, or change over time due to random mutations and environmental shifts. A typical mammalian species can be expected to exist for a million years. Modern humans, Homo sapiens, have been around for roughly 300,000 years. So what will happen if we make it to a million years?

Science fiction author H.G. Wells was the first to realize that humans could evolve into something very alien. In his 1893 essay, “The Man of the Year Million,” he envisioned what has since become a cliché: big-brained, tiny-bodied creatures. Later, he speculated that humans could also split into two or more new species.

While Wells' evolutionary models have not stood the test of time, the three basic options he considered still hold. We could go extinct, turn into several species, or change.

An added ingredient is that we have biotechnology that could greatly increase the probability of each of these outcomes. Foreseeable future technologies such as human enhancement (making ourselves smarter, stronger, or in other ways better using drugs, microchips, genetics, or other technology), brain emulation (uploading our brains to computers), or artificial intelligence may produce technological forms of new species not seen in biology.

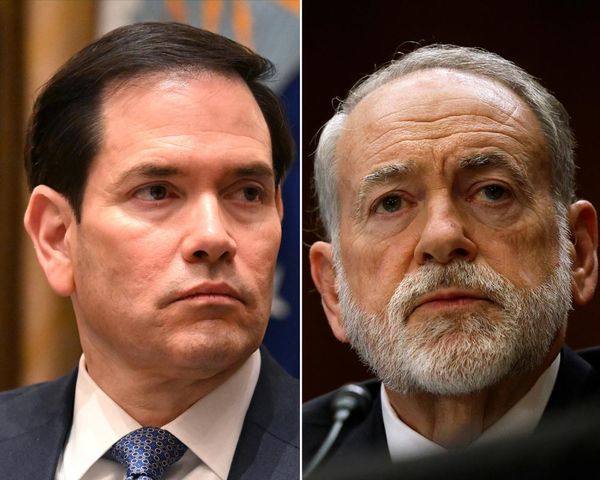

Artificial intelligence

It is impossible to predict the future perfectly. It depends on fundamentally random factors: ideas and actions, as well as currently unknown technological and biological limits. But it is my job to explore the possibilities, and I think the most likely outcome is vast "speciation," in which a species splits into several others.

There are many among us who want to improve the human condition by slowing or even abolishing aging, enhancing intelligence and mood, and changing bodies, potentially leading to new species.

These visions, however, leave many cold. It is plausible that even if these technologies become as cheap and ubiquitous as mobile phones, some people will refuse them on principle and build their self-image around being "normal" humans. In the long run, we should expect the most enhanced people, generation by generation (or upgrade after upgrade), to become one or more fundamentally different "posthuman" species, alongside a species of holdouts declaring themselves the "real humans."

Through brain emulation, a speculative technology in which one scans a brain at the cellular level and then reconstructs an equivalent neural network in a computer to create a "software intelligence," we could go even further. This would be more than mere speciation: it would mean leaving the animal kingdom for the mineral or, rather, the software kingdom.

There are many reasons some might want to do this, such as boosting their chances of immortality (by creating copies and backups) or traveling easily over the internet or by radio through space.

Software intelligence has other advantages, too. It can be very resource-efficient: a virtual being needs only energy from sunlight and some rock material to make microchips. It can also think and change on the timescales set by computation, probably millions of times faster than biological minds. And it can evolve in new ways; it just needs a software update.

Yet humanity is perhaps unlikely to remain the only intelligent species on the planet. Artificial intelligence is advancing rapidly right now. There are profound uncertainties and disagreements about whether or when it will become conscious, and about when artificial general intelligence (AI that can understand or learn any intellectual task a human can, rather than specializing in niche tasks) will arrive, but a sizeable fraction of experts think it is possible within this century or sooner.

If it can happen, it probably will. At some point, we will likely have a planet where humans have largely been replaced by software intelligence or AI — or some combination of the two.

Utopia or dystopia?

Eventually, it seems plausible that most minds will become software. Research suggests that computers will soon become much more energy efficient than they are now. Software minds also won't need to eat or drink, which are inefficient ways of obtaining energy, and they can save power by running more slowly during parts of the day. This means that in the far future we should be able to fit many more artificial minds than human minds per kilogram of matter and per watt of solar power. And since they can evolve fast, we should expect them to change tremendously from our current style of mind.

Physical beings have a distinct disadvantage compared with software beings: they move in the sluggish, quaint world of matter. Still, they are self-contained, unlike flitting software minds, which will evaporate if their data center is disrupted.

“Natural” humans may remain in traditional societies, unlike software people. This would resemble the Amish today, whose humble lifestyle is still made possible (and protected) by the surrounding United States. It is not a given that surrounding societies have to squash small and primitive societies: we have established human rights and legal protections, and something similar could continue for normal humans.

Is this a good future? Much depends on your values. A good life may involve having meaningful relations with other people and living sustainably in a peaceful and prosperous environment. From that perspective, weird posthumans are unnecessary; we just need to ensure that the quiet little village can function (perhaps protected by unseen automation).

Some may value “the human project,” an unbroken chain from our Paleolithic ancestors to our future selves, while remaining open to progress. They would probably regard software people and AI as going too far but be fine with humans evolving into strange new forms.

Others would argue that what matters is freedom of self-expression and following your life goals. They may think we should explore the posthuman world widely and see what it has to offer.

Still others may value happiness, thought, or other qualities that different entities possess, and want futures that maximize these. Some may be uncertain, arguing that we should hedge our bets by going down all paths to some extent.

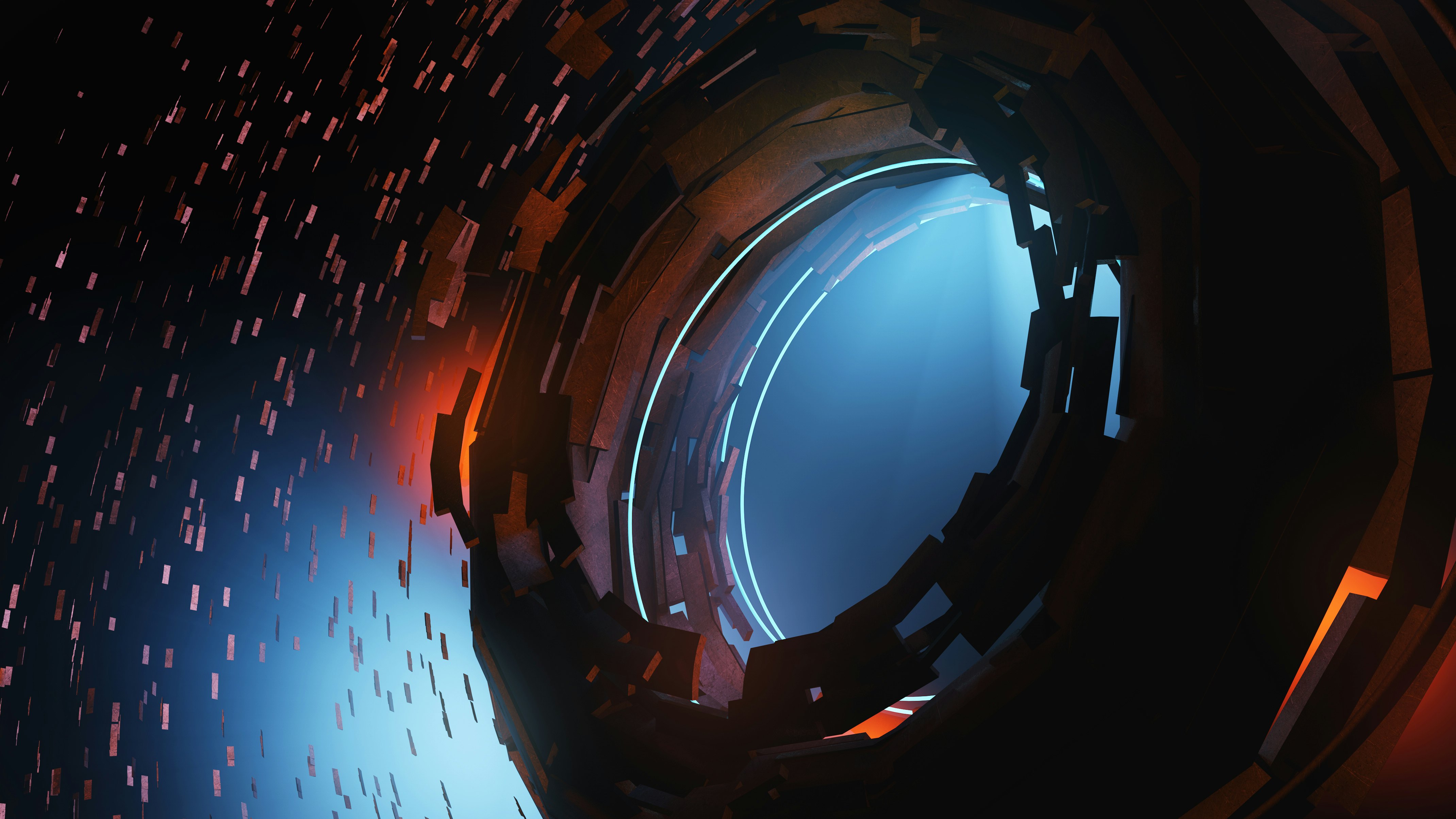

Dyson sphere

Here’s a prediction for the year 1 million. Some humans look more or less like us, but there are fewer of them than there are now. Much of Earth's surface is wilderness, having become a rewilding zone because there is far less need for agriculture and cities.

Here and there, cultural sites with vastly different ecosystems pop up, carefully preserved by robots for historical or aesthetic reasons.

Under silicon canopies in the Sahara, trillions of artificial minds teem. The vast, hot data centers that power these minds once threatened to overheat the planet. Now most of them orbit the Sun, forming a growing structure, a Dyson sphere, in which each watt of energy powers thought, consciousness, complexity, and other strange things we do not yet have words for.

If biological humans go extinct, the most likely reason (apart from the obvious and immediate threats) will be a lack of respect, tolerance, and binding contracts with other posthuman species. That may be a reason for us to start treating our own minorities better.

This article was originally published on The Conversation by Anders Sandberg at the University of Oxford.