ChatGPT and other generative AI tools which draw on large language models (LLMs) are a hot topic. Released in November 2022 by OpenAI, ChatGPT is a chatbot – it generates text output refined through user prompts.

What makes it special is just how sophisticated and impressive that output is. The stratospheric rise of generative AI tools has sparked much discussion over what it might mean for the future of education, the job market, humanity and society as a whole.

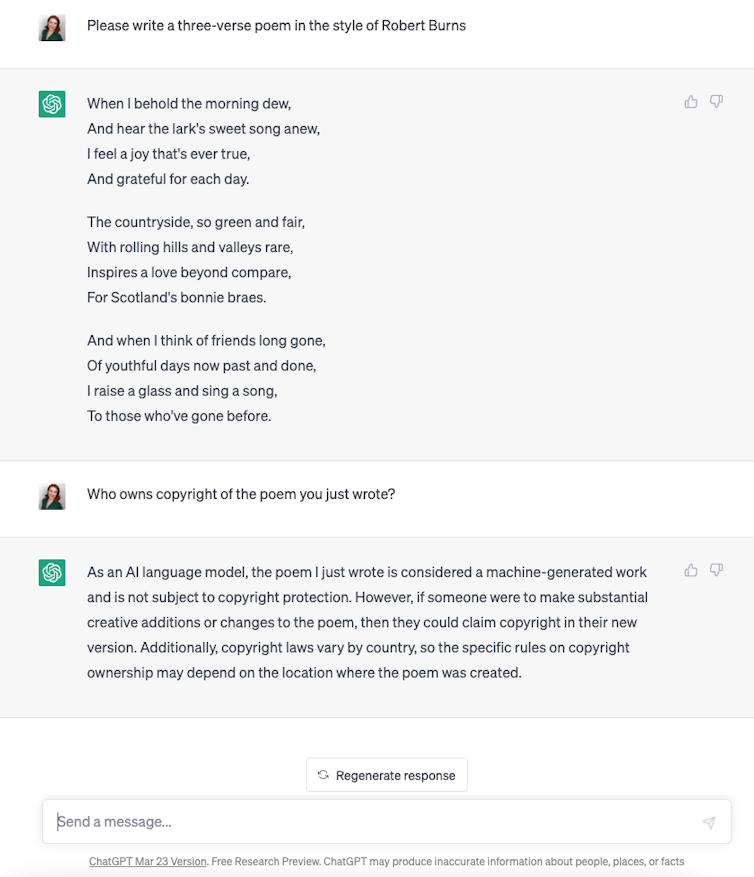

By now, you’ve likely interacted with a generative AI. But who owns copyright to the output, and how does copyright law apply?

Read more: Calls to regulate AI are growing louder. But how exactly do you regulate a technology like this?

Text output and the law

ChatGPT is powered by an LLM – a machine-learning algorithm which processes vast datasets, including text, websites, news articles and books. Through the use of billions of parameters, ChatGPT statistically analyses complex language structures and patterns to produce the output.

Some people might think OpenAI – the company responsible for ChatGPT – would have an authorship right in any output (the generated text), but this is not so. OpenAI’s terms assign the right, title and interest in output to a user. Anyone who uses such AI tools needs to know the copyright implications of generating output.

Putting aside ethical and moral issues regarding academic integrity, there are many copyright implications surrounding LLMs.

For example, when you use ChatGPT to produce output, under Australian law, would you own the copyright of that output? Can AI such as ChatGPT be considered a legal joint author of any LLM output? Do LLMs infringe others’ copyright through the use of data used to train these models?

Do you own your ChatGPT output?

Under Australian law, because the output is computer-generated code/text, it may be classified as a literary work for copyright purposes.

However, for you to own copyright in ChatGPT output as a literary work, requirements known as “subsistence criteria” must also be satisfied. When considering AI processes in light of the subsistence criteria, the analysis becomes challenging.

The most contentious subsistence criteria in the context of LLMs are those of authorship and originality. Seminal Australian cases dictate a literary work must originate through an author’s “independent intellectual effort”.

To determine potential copyright in ChatGPT output, a court would examine the underlying processes of creation in detail. Hypothetically, when considering how LLMs learn, although people prompt AI, a court would likely deem this prompting to be a separate, precursory act to the actual creation of the output. The court would likely find the output is produced by the AI. This would not meet the criteria for authorship, because the output was authored by an AI instead of a human.

Also, the output is unlikely to adequately express a person’s “independent intellectual effort” (another subsistence criterion) because AI produces it. Such a finding would be similar to the ruling in a seminal case about a computer-generated compilation. There, a valuable Telstra database was not protected by copyright due to lack of establishment of human authorship and originality.

For these reasons, it’s likely copyright would not come into effect on ChatGPT output as a literary work produced in Australia.

Meanwhile, under UK law, the result could be different. This is because UK law makes provision for a person who makes the arrangements for a computer-generated literary work to be considered an author for copyright purposes.

Can you be a joint author with ChatGPT?

In recent years, human authorship has been challenged in court a few times overseas, including the famous monkey selfie case in the United States.

In Australia, a work must originate with a human author, so AI doesn’t qualify for authorship. However, if AI were ever to achieve something akin to its own version of sentience, AI personhood debates will unleash many issues, including whether AI should be considered an author for copyright purposes.

Assuming one day AI can be considered an author, if a court was assessing joint authorship between a person and AI, each author’s contribution would be examined in detail. A “work of joint authorship” states that each author’s contribution must not be separate from the other. It’s likely that a person’s prompting of the AI would be deemed separate to what the AI system then does, so joint authorship would probably fail.

Do LLMs infringe on copyright?

A final issue is whether LLMs infringe others’ copyright through accessing data in training. Such data may be copyright-protected material. This requires an examination of the LLM training and output. Is a substantial portion of copyright-protected material reproduced? Or, is mass data synthesised without substantial reproduction?

If it is the earlier option, infringement may have occurred; if it’s the latter, there would be no infringement under current law. But even if output reproduces a portion of copyright-protected material, this might fall under a copyright exception. In Australia, this is called fair dealing.

Read more: Explainer: what is 'fair dealing' and when can you copy without permission?

Fair dealing permits particular purposes, such as research and study. In the US, similar fair use exceptions are broader in scope, so LLM output may be caught by this. Also, the European Union has a copyright exception for text and data mining which permits the use of data to train LLMs unless expressly prohibited by a rights-holder.

Seeing as AI is here to stay, a final point to ponder is whether amendments should be made to the Australian Copyright Act to allow an AI user to be considered an author for copyright purposes. Should we amend the law by following in the United Kingdom’s footsteps, or implement a text and data mining exception similar to that in the EU?

As AI initiatives continue advancing, Australian copyright law will likely grapple with these issues in the coming years.

Wellett Potter does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

This article was originally published on The Conversation. Read the original article.