AI art and the sudden rise of AI seeping into every area of our lives are, frankly, a little terrifying. At best, AI will enable us to do more, speed up our creativity and usher in a new kind of artistic vision. At worst, we're all out of jobs and creativity is heading down a cul-de-sac where AI will ask other AI, "is this art?"

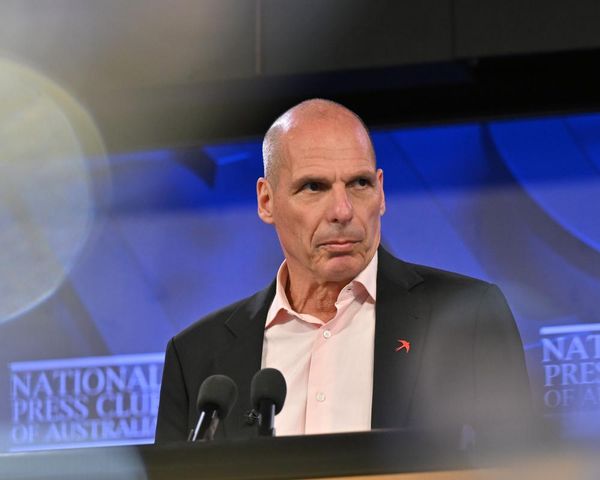

To try to fathom what is going on, and to see whether AI is to be feared now that the very name of an artist like D&D's Greg Rutkowski has become a prompt, I went to London's AI Summit. I wasn't sure what to expect. After all, we've been writing about weird DALL-E 2 AI art and reporting on the best AI art generators for some time, but this was a glimpse into every aspect of life AI will affect.

Things were kind of going well. The rooms at the AI Summit in London were a hum of excited voices for the future of AI, and for the most part the goal is to aid our lives, from IBM's impressive Watson AI chatbot, which can react to your queries realistically, to an AI that can transcribe your videos and turn them into ebooks.

Then AI YouTuber Samson Vowles said, with a smirk and a shrug, "Artists should just suck it up, frankly". He was responding to a question about digital artists' work and styles being 'scraped' to feed generative AI. The audience applauded. This, I soon found, wasn't the space to discuss putting the brakes on AI.

Yet this is the aim of such conferences: they are safe spaces to engage all views and ideas. Somewhere in the mire of excitement for the future and fringe views that trash artists' concerns is a pathway to real uses for generative AI. Below I list some of what I learned, what is facing AI and how it, perhaps, will help us all.

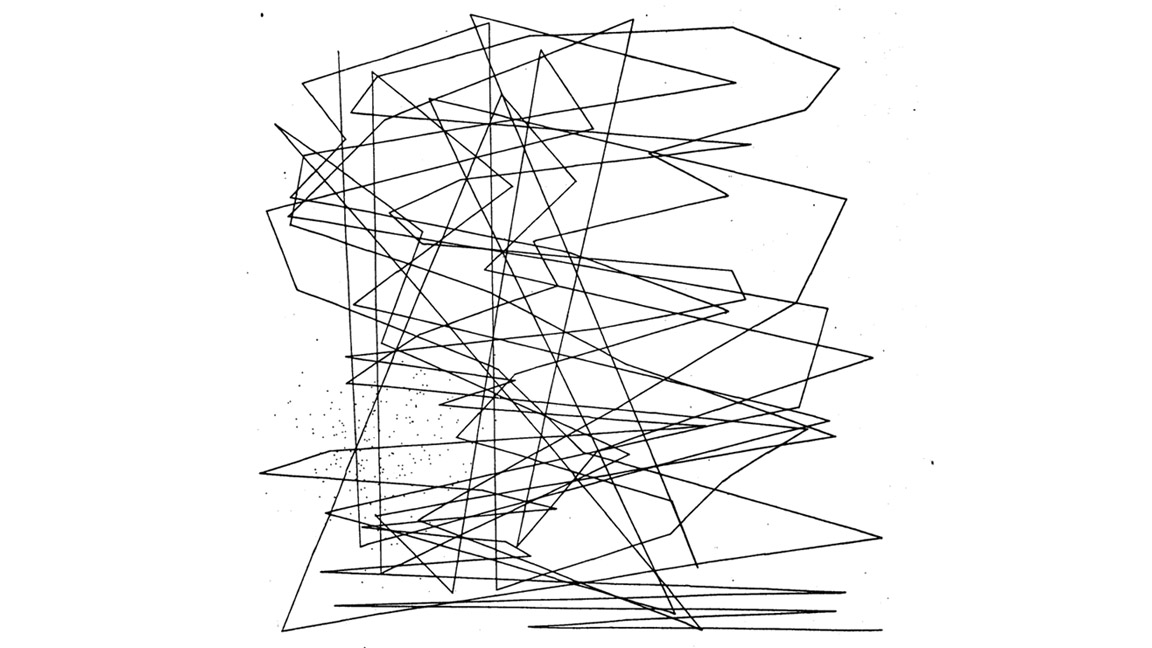

So, generative AI art isn't actually new

Generative AI art isn't new. Technologist Michael Noll created the first generative AI artwork in 1962, called simply Pattern One, followed by the first computer-generated art exhibit at the Howard Wise Gallery in 1965. Artists Manfred Mohr, Charles Csuri and Vera Molnár were making generative art through the 1970s and are still pioneering this art form.

So generative AI art didn't begin with DALL-E 2, Midjourney and ChatGPT, but it is now far more accessible, approachable and easy to use. Combined with NFTs, the blockchain and the emerging metaverse, AI-generated art has emerged as a realistic way to create and sell digital images, video and music. We're into the second 'boom' of AI art.

Will AI take my job?

During a panel discussion called 'Gen AI & the Future of Gaming' the spectre of AI taking our jobs reared its head, and Christine A. Mackay, CEO and founder of animation studio Salamandra.uk, uttered the dreaded word "efficiencies", corporate code for job cuts.

Mackay did clarify this, saying the use of AI needn't mean job cuts for many studios, as AI could deliver greater productivity. "It could mean the same studio can now make three projects instead of one," she commented. In this light, small studios can compete and expand faster than is currently possible. I can see how a small team could now create a film, video game and TV series when in the past it would need to focus on one of these aspects of a project.

While we just don't know what the future holds, there are some reassuring studies being published. For example, consultancy firm McKinsey & Company estimates generative AI could add up to $4.4 trillion a year to the global economy by improving productivity and creating new jobs. I'm ever an optimist, so I prefer to believe AI will enable us to do more and be more creative rather than replace what we do.

Regulation vs deregulation is the real concern

There are lots of views on whether AI will have a positive or negative impact on our lives, but AI is here and it's not going away. This means the real debate comes down to one question: should AI be regulated or deregulated?

The EU has already played its hand with the EU AI Act, which aims to tackle software. In other words, it's trying to regulate AI at the point of use, with a focus on CV scanning and the ethical use of AI by governments.

The other option is to regulate AI at source by targeting the hardware used by generative AI, which would likely ultimately mean hiking taxes and restrictions on render farms and GPUs. But is it feasible to restrict tech success stories like Nvidia?

Finally, we have deregulation. This is light-touch on restrictions and simply enables AI firms to monitor themselves, or hands control to existing regulators, which in the UK would mean the likes of the Advertising Standards Authority, Ofcom, the Video Standards Council and more. The problem? Generative AI won't always sit comfortably in one sector. A single AI app could be deployed across web creation, video editing, games, art, music, finance and more. So which regulator is responsible?

The real issue with deregulation or light-touch regulation, as Dr Andrew Rogoyski from The Surrey Institute for People-Centred Artificial Intelligence explained to me, is that "deregulation is a race to the bottom, no one wins".

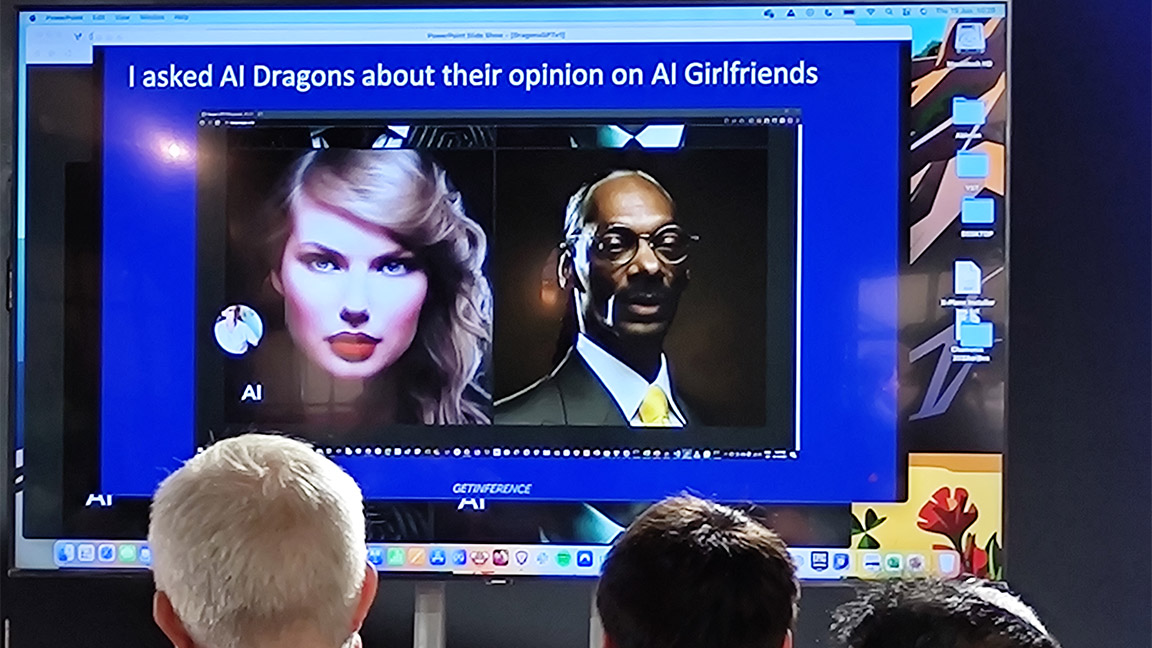

No copyright is safe

Something quite alarming happened. During a talk aimed at sharing advice on funding and stress-testing AI projects, a 'Dragon Chat GPT' appeared on screen and the room was offered insights from Taylor Swift and Snoop Dogg.

It was a bit of fun, but having their likenesses and voices co-opted for the talk pinpointed some major issues surrounding AI and its uses. My brain could only see a huge problem with copyright. Dragon Chat GPT may have licensed the celebs, it's not clear, but if Taylor Swift isn't safe from an over-excited AI developer then we're all at risk, and our work is at risk too.

My data is worth something now?

Generative AI needs data, lots of data. As major brands embrace AI our data will become even more valuable. Large brands are risk averse and won't release an AI onto their customers until assured the data it's training on is ethical. This will likely mean you opt in to having your discourse with an AI recorded and used for training.

Sounds fine; we all accept cookies without really thinking, right? Well, what if you secure your data on the blockchain and then charge a fee to aid a brand's AI training? This is pure speculation, but ultimately our data, how we interact with Web3 and generative AI, and the information we feed it will become more and more important.

Is AI more than ChatGPT?

Yes, oh yes. ChatGPT is the AI that forced the door open, and behind it are hundreds of interesting apps that can and will affect how you work and play. In many ways AI has been with us for some time and you've just not realised it, whether it's Spotify planning your playlists or how your passport is assessed.

The aim of generative AI is to make our lives easier and free up time to do more and be more productive. There are AIs to build websites and edit videos, but there are also AIs to plan agriculture and aid in complex surgery, or an AI platform like Aitem that helps vets diagnose our pets.

It's all about chatbots now, right?

It was hard to miss that the next AI battlefield will be the chatbot war; every major tech company is developing a chatbot AI (read our guide to how to build an AI chatbot). One of the most advanced and impressive is IBM's Watson AI, which can react in realistic ways to your questions. It will offer advice and even guide you around a website and take on everyday tasks.

One aspect of Watson AI that impressed me is how it can take on jobs like processing commissions and discussing rates with clients, meaning freelancers can add this AI to their blogs and sites and improve bookings.

There's money to be made here too. YouTuber Caryn Marjorie created an AI version of herself called CarynAI that you can 'hire' as your virtual girlfriend (don't laugh) for $1 a minute. Marjorie has 1.8 million followers and around 1,000 'boyfriends'. You do the maths… and who's laughing now?

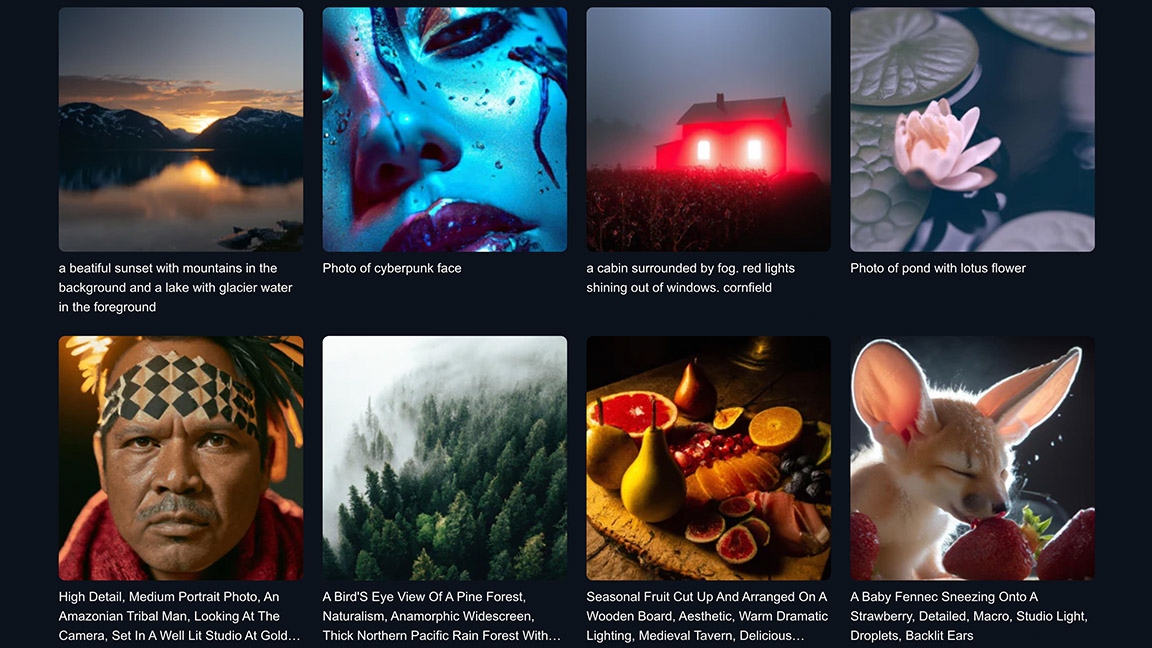

Is any of this AI use ethical?

The night before the AI Summit I attended Adobe MAX and toyed with its new ethical AI, Firefly. Adobe is keen to ensure its AI is only trained on images it owns the rights to, or images submitted under its AI policy. I love this, and it was heartening to find many talks at the AI Summit focused on the ethical use of generative AI. It was also a little disheartening to find some of these talks were half-empty.

There are brands taking the ethical issues associated with AI seriously. For example, Shutterstock AI takes a similar approach to Adobe: it only trains its AI on images it holds the copyright for, and it offers users the option to monetise their art when it is used by the AI or in generative art created by others on the Shutterstock AI platform.

AI has an identity problem

Unintended bias in tech isn't new, but generative AI could make it an even more damaging issue. One use of AI on the rise is CV scraping and gatekeeping for job interviews, which can also include recruiters using chatbots and pre-recorded interviews to let an AI decide if a person should progress to the next stage of an application.

An AI is trained on data: real-world data inputted by humans with human biases. An AI can also be trained by scraping information from a company's previous recruitment decisions. So, if a company has a history of not employing women, well, its AI won't change the status quo.

The issue of identity and bias in AI is being tackled, but it's a major concern. The artists Adaeze Okaro, Serwah Attafuah and Jah are part of Feral File's upcoming show, In/Visible, that aims to shine a light on the 'significant gaps in training data which precipitates a flattened representation of the Black identity'.

These are just some of my immediate thoughts after spending two days in the room with AI evangelists and devs from all industries. If you want to know a little more about generative AI, read my feature on how AI art generators are being used now, our guide to how to use Adobe Firefly and our deep dive into everything you need to know about Adobe Firefly.