I just had a conversation with an in-game NPC that could easily have passed for scripted. Except it wasn't, at all. I asked a question, the NPC answered, and it's all thanks to Nvidia's ACE technology and Convai.

You might have caught sight of Nvidia ACE in action during the company's Special Address stream. It's essentially a technology that allows in-game NPCs to react and respond to players in real-time, with voiced dialogue and animations. Nvidia's been showing off the same tech demo called Kairos, which takes place inside a ramen restaurant in a cyberpunk world, since ACE was announced back at Computex. Over at CES 2024, I got to try it out for myself.

In the tech demo, which is built in Unreal Engine 5 and uses a platform from AI startup Convai, you play as Kai, and you're able to speak with Jin and Nova, two NPCs in the demo's cyberpunk world.

First off, I gave a prompt that was entered into the demo's underlying AI system. This is used to direct the conversation between the two NPCs before I even interact with them. I picked the first thing that came into my head, which for whatever reason was skateboarding. That meant as I walked up to both characters, I could hear them discussing the finer points of skateboarding injuries.

That's a small part of the AI-driven system. Mostly it's about responding to you, as another character in the world. This requires a microphone to directly ask questions and speak with the NPCs.

Below is a video of me speaking with Jin and ordering some ramen. It's pretty weird to hold a conversation with an NPC like this, yet it's also pretty fun. There's a slight delay before the NPC responds, which comes across as an awkward pause, but honestly the delay was minimal and the general accuracy of the responses was pretty good.

@pcgamer_mag ♬ original sound - PC Gamer

I'm keen to see where this sort of technology could go. On the one hand, it could mean the end of NPCs wandering around open worlds muttering the same three phrases over and over again. It could breathe new life into often robotic NPCs and make for some stronger connections with major characters in games. What springs to mind are the few major characters you can hang out with in Cyberpunk 2077. Just uncovering their backstory through non-scripted conversation could be a far less formulaic way of experiencing all that.

On the other, if there's no purpose to these conversations (i.e. no quest building or story points to speak of), they could turn trivial and get stale pretty quickly. I don't feel that will ultimately be the case, since all that character background is designed and programmed in, but it will take some finessing on a developer's part to have these NPCs steer the conversation back to something pertinent to a game's story or a character's backstory.

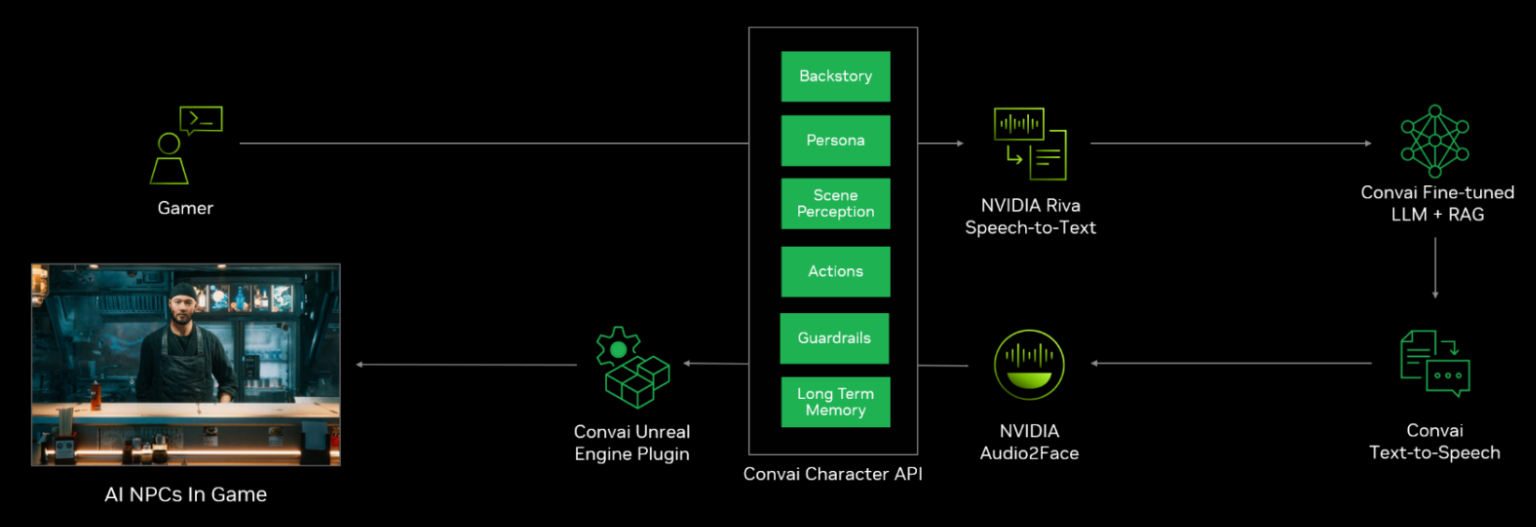

The Unreal Engine 5-powered demo uses a few key models, including Automatic Speech Recognition, Text-to-Speech, Audio2Face, and Nvidia NeMo, alongside a platform from an AI startup called Convai that integrates spatial awareness, actions, and NPC-to-NPC interactions, like the one I mentioned involving skateboarding.
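To picture how those pieces might fit together, here's a rough Python sketch of a single conversational turn. To be clear, this is my own illustration and not Nvidia's or Convai's code: the order of the stages (speech recognition, then a language model, then text-to-speech, then facial animation) is an assumption, and every name in it (`run_npc_turn`, `NpcReply`, the `fake_*` functions) is a hypothetical stand-in, not a real ACE or Convai API.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class NpcReply:
    text: str               # what the NPC says
    audio: bytes            # synthesized voice (placeholder bytes here)
    face_frames: List[str]  # placeholder facial animation cues


def run_npc_turn(
    mic_audio: bytes,
    speech_to_text: Callable[[bytes], str],
    generate_reply: Callable[[str], str],
    text_to_speech: Callable[[str], bytes],
    audio_to_face: Callable[[bytes], List[str]],
) -> NpcReply:
    """Chain the stages in an assumed order: ASR -> language model -> TTS -> face."""
    player_text = speech_to_text(mic_audio)
    reply_text = generate_reply(player_text)
    reply_audio = text_to_speech(reply_text)
    frames = audio_to_face(reply_audio)
    return NpcReply(reply_text, reply_audio, frames)


# Hypothetical stand-ins so the sketch runs without any Nvidia or Convai software.
def fake_asr(audio: bytes) -> str:
    # Pretend the microphone audio is already text.
    return audio.decode("utf-8")


def fake_llm(player_text: str) -> str:
    # A real system would call a language model with the NPC's persona.
    return f"Jin: You said '{player_text}'. One bowl of ramen, coming up."


def fake_tts(text: str) -> bytes:
    # Pretend the encoded text is the voice clip.
    return text.encode("utf-8")


def fake_a2f(audio: bytes) -> List[str]:
    # Alternate two mouth poses for four frames, regardless of the audio.
    return ["mouth_open" if i % 2 == 0 else "mouth_closed" for i in range(4)]


reply = run_npc_turn(
    b"Can I order some ramen?", fake_asr, fake_llm, fake_tts, fake_a2f
)
print(reply.text)
```

Each stage waits on the one before it, which is one plausible reason for the slight pause I noticed before Jin answered, though I can't say how the real demo schedules its work.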

The actions and spatial awareness are pretty cool, too. I could ask the AI characters about the world around them, and they'd respond with stories and information. I could also ask for another bowl of ramen and have Jin make one up. Or ask him to turn down the lights and he'd walk off and do it. These AI characters are actually incredibly obliging, but there'd surely need to be some sort of sass meter to create more realistic characters.

I'm keen to see how someone takes this tech and rolls it into a game that makes good use of it. A VR game would perhaps be a great fit. And I'm trying not to get bogged down thinking of how it might help make some pretty awful experiences, too. Then there's the matter of how voice actors' voices are used and how much say they get when it comes to AI.

Nvidia says you could hook this up to a Google search if you wanted and have the AI spit facts at you, but that feels like it would be underselling what could be a pretty engrossing technology with some effective game design and well-written personas. We'll just have to wait and see what comes out of ACE once the tech gets into developers' hands.

_____________________________________

PC Gamer's CES 2024 coverage is being published in association with Asus Republic of Gamers.