Google Gemini Pro 1.5 marks a game-changing moment for multimodal artificial intelligence. It allows you to feed it a video, audio or image file and ask questions about its contents.

To see how well it performed, I gave Gemini Pro 1.5 a completely silent video of the moment of totality from the recent total solar eclipse visible across North America.

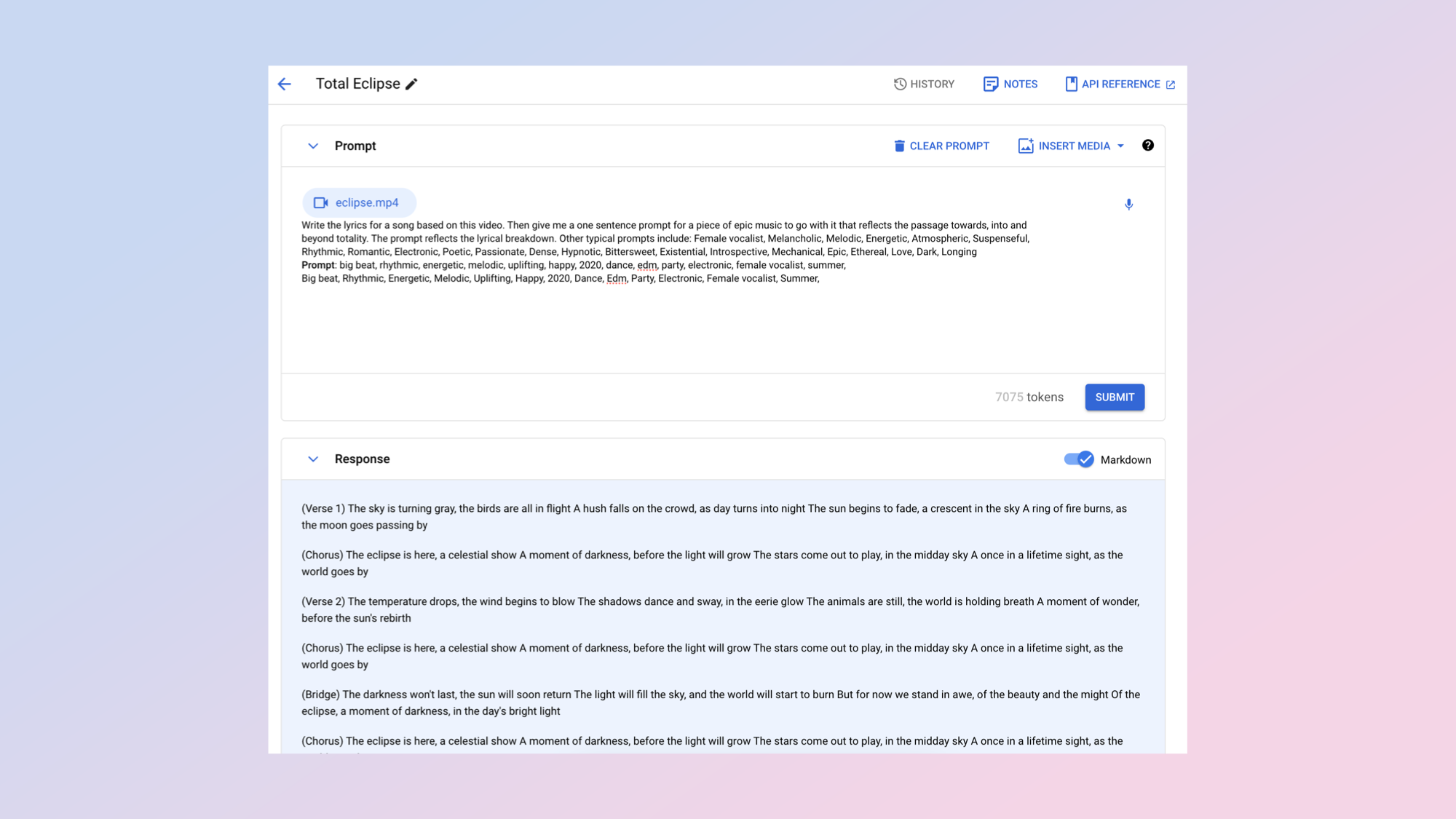

Working in Vertex AI, Google Cloud’s AI platform, I was also able to give Gemini instructions along with the video clip. I asked it to write lyrics and a prompt for an AI music generator to create a song inspired by the contents of the video.

I then put the prompt and lyrics into Udio to create the song, fed the complete track back into Gemini Pro 1.5 and asked it to listen to the track and plot out a music video.

What is Google Gemini Pro 1.5?

Google released its Gemini family of models in December, starting with the tiny Nano, which is available on some Android phones. It then dropped Pro, which now powers the Gemini chatbot, and finally, in February, released the powerful, GPT-4-level Gemini Ultra.

In February the search giant released its first update to the Gemini family, unveiling Gemini Pro 1.5, which has a massive one-million-token context window, a mixture-of-experts architecture to improve responsiveness and accuracy, and true multimodal capabilities.

While it is currently available to developers only through an API call or the Vertex AI cloud platform, this advanced functionality is expected to appear in the Gemini chatbot soon.

Among other things, you can upload an audio file, such as a song or a speech, or a video file, such as someone exercising or a solar eclipse, and ask Gemini questions about it.

Creating a song from a video

While you can’t directly generate a piece of music using Gemini Pro 1.5 (Google has other AI models for making both music and video), you can use it to create prompts and lyrics.

I gave the AI model a short 25-second clip showing the moment of totality from the recent total solar eclipse visible from the U.S. and asked it to give me both lyrics and a prompt I could feed into an AI music generator to create a song inspired by the video.
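For readers who want to try something similar outside the Vertex AI console, here is a minimal sketch that builds the JSON body for a Vertex AI `generateContent` REST request pairing a video stored in Cloud Storage with a text instruction. It only assembles the request and does not send it. The project ID, region, bucket path and model ID are placeholder assumptions, and the exact Gemini 1.5 model version available to you may be named differently.

```python
import json

# Placeholder values (assumptions): replace with your own project, region, model and file.
PROJECT_ID = "my-project"                           # hypothetical
LOCATION = "us-central1"                            # assumed region
MODEL_ID = "gemini-1.5-pro"                         # assumed model ID; check the Vertex AI model list
VIDEO_URI = "gs://my-bucket/eclipse-totality.mp4"   # hypothetical Cloud Storage path


def endpoint_url(project: str, location: str, model: str) -> str:
    """Build the Vertex AI REST endpoint for a Gemini generateContent call."""
    return (
        f"https://{location}-aiplatform.googleapis.com/v1/projects/{project}"
        f"/locations/{location}/publishers/google/models/{model}:generateContent"
    )


def build_request(file_uri: str, mime_type: str, instruction: str) -> dict:
    """Pair one media file with a text instruction in a single user turn."""
    return {
        "contents": [
            {
                "role": "user",
                "parts": [
                    {"fileData": {"mimeType": mime_type, "fileUri": file_uri}},
                    {"text": instruction},
                ],
            }
        ]
    }


if __name__ == "__main__":
    body = build_request(
        VIDEO_URI,
        "video/mp4",
        "Write song lyrics and a prompt for an AI music generator "
        "inspired by this video.",
    )
    print(endpoint_url(PROJECT_ID, LOCATION, MODEL_ID))
    print(json.dumps(body, indent=2))
```

The body would then be POSTed to that URL with an OAuth access token (for example one printed by `gcloud auth print-access-token`). Under the same assumptions, changing the MIME type to an audio type such as `audio/mpeg` would cover sending a finished song back to the model.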

New @Google developer launch today:
- Gemini 1.5 Pro is now available in 180+ countries via the Gemini API in public preview
- Supports audio (speech) understanding capability, and a new File API to make it easy to handle files
- New embedding model!
https://t.co/wJk1e1BG1E (April 9, 2024)

It gave me this as a prompt for Udio: “An epic orchestral piece with three distinct movements, the first building in suspense and anticipation as the eclipse approaches totality, the second slowing down and becoming ethereal and mysterious during totality, and the third building again to a triumphant crescendo as the sun emerges from behind the moon.”

This was the lyric for the chorus: “The eclipse is here, a celestial show / A moment of darkness, before the light will grow / The stars come out to play, in the midday sky / A once in a lifetime sight, as the world goes by.”

I don’t think Gemini Pro 1.5 is as creative as Claude 3, ChatGPT or Gemini Ultra; lyrics from those platforms tend to be more inventive. But the ability to analyze a video is huge. It was able to assess the different moments in the video, capture the changes and reflect them in the lyrics.

Creating a music video from a song

One of the most recent updates to Google Gemini 1.5 is the ability to take a song or any other piece of audio and analyze its content. I’ve found this particularly useful for planning ideas for a music video to go with that song, especially if I’m working quickly.

I took the song I generated in Udio using the Gemini Pro 1.5 prompt and lyrics and asked the AI model to plot out a shot-by-shot music video based on the audio file.

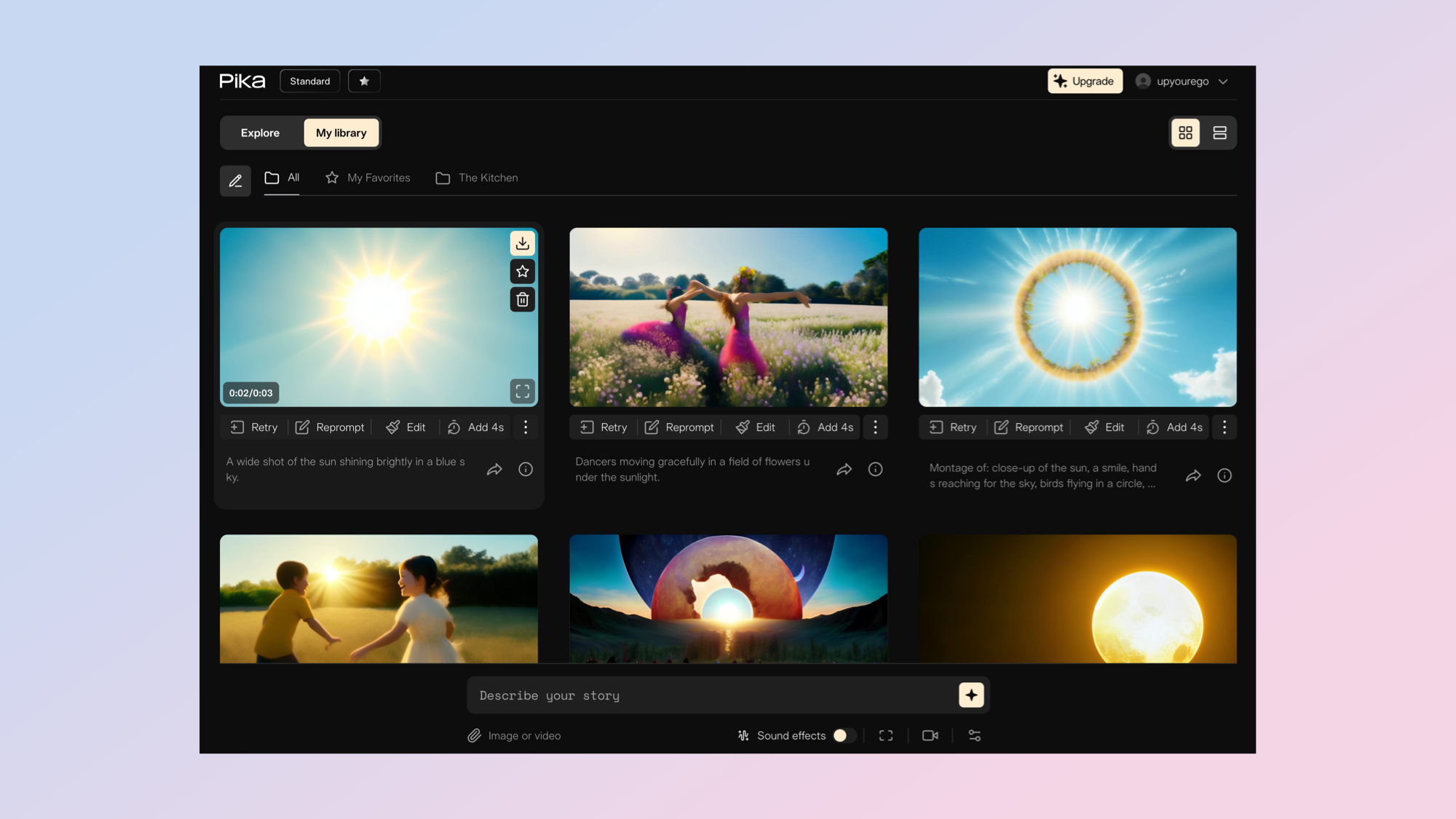

It gave me a series of five-second shots for each part of the song, including the intro and chorus. For each section it gave me a prompt such as "generate a beautiful landscape with sunrise and birds in flight". I then fed the prompts into Pika Labs.
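The shot list follows a simple pattern: each section of the song is cut into fixed five-second shots, each carrying a text-to-video prompt. A minimal sketch of that bookkeeping is below; the section names, timings and the first prompt are hypothetical, not taken from the actual Udio track or Gemini’s output.

```python
from dataclasses import dataclass


@dataclass
class Shot:
    section: str
    start: float  # seconds from the start of the track
    end: float
    prompt: str


def plan_shots(sections, shot_length=5.0):
    """Split (name, start, end, prompt) sections into fixed-length shots.

    The last shot in a section is shortened so it never runs past the
    section boundary.
    """
    shots = []
    for name, start, end, prompt in sections:
        t = start
        while t < end:
            shots.append(Shot(name, t, min(t + shot_length, end), prompt))
            t += shot_length
    return shots


if __name__ == "__main__":
    # Hypothetical song structure, for illustration only.
    sections = [
        ("intro", 0.0, 12.0, "a dark landscape just before an eclipse"),
        ("chorus", 12.0, 27.0, "a beautiful landscape with sunrise and birds in flight"),
    ]
    for shot in plan_shots(sections):
        print(f"{shot.start:>5.1f}-{shot.end:>5.1f}s [{shot.section}] {shot.prompt}")
```

Each prompt would then go into a text-to-video tool such as Pika Labs, one clip per shot, and the clips would be cut together in order.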


While this is an unusual example of how to use Gemini Pro 1.5, it indicates what could be possible, or what a third-party developer could build. For example, you could use Gemini Pro 1.5 as an intermediate layer between an AI music generator and an AI video platform like LTX Studio or Runway to create a song and music video with a single click.
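The "intermediate layer" idea can be sketched as a small orchestration function. Everything here is hypothetical: the three callables stand in for an AI music generator, a Gemini call that turns audio into a shot list, and a text-to-video service, and none of them corresponds to a real Udio, Gemini, LTX Studio or Runway API. The sketch only shows the shape of such a pipeline.

```python
from typing import Callable, List

# Hypothetical service signatures; real APIs will differ.
GenerateSong = Callable[[str, str], bytes]   # (style prompt, lyrics) -> audio
PlanShots = Callable[[bytes], List[str]]     # audio -> one prompt per shot
RenderClip = Callable[[str], bytes]          # shot prompt -> video clip


def song_and_video(style_prompt: str, lyrics: str,
                   generate_song: GenerateSong,
                   plan_shots: PlanShots,
                   render_clip: RenderClip):
    """Chain music generation, shot planning and clip rendering in one call."""
    audio = generate_song(style_prompt, lyrics)
    shot_prompts = plan_shots(audio)   # the step Gemini would handle
    clips = [render_clip(p) for p in shot_prompts]
    return audio, clips


if __name__ == "__main__":
    # Stub services so the sketch runs without any network access.
    audio, clips = song_and_video(
        "An epic orchestral piece",
        "The eclipse is here, a celestial show",
        generate_song=lambda style, words: b"fake-audio",
        plan_shots=lambda a: ["sunrise over a landscape", "birds in flight"],
        render_clip=lambda prompt: prompt.encode(),
    )
    print(len(clips))
```

Passing the services in as functions keeps the orchestration independent of any one vendor, so a different music or video generator could be swapped in without changing the pipeline.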

The real benefit of larger context windows will come as AI devices such as Meta’s smart glasses or the Rabbit R1 come onto the market. If Google can tackle the latency problem, the AI could analyze the mass of real-world data these devices capture and give live feedback and information.

This could be used to help a blind person see through audio descriptions, take the first step towards true driverless vehicles, or give a robot the ability to act completely independently using nothing more than a microphone and camera.