While Microsoft's Copilot+ PCs aren't the first AI PCs we've seen, they are the first laptops that rely heavily on a much more powerful onboard NPU. That leaves us with an interesting new challenge: How do we test AI PCs?

We'll naturally put them through a thorough review process, using the laptops in our daily work and lives, but Laptop Mag also relies on consistently reproducible tests in our mission to find the best laptops and best AI PCs for you. On that front, the short answer is that, as of now, we have only three viable synthetic benchmarks built to test NPU performance: Geekbench ML, UL's Procyon AI Image Generation, and UL's Procyon AI Computer Vision. Other available AI PC test workloads are prohibitively difficult and time-consuming to set up and run as part of a typical review process.

Let's get back to basics before we go any further. Before we can determine how to test an AI PC, we need to agree on what makes a laptop an AI PC. While all laptops can access some version of cloud-based AI, AI PCs are computers with a built-in NPU capable of handling on-device AI.

What is an NPU?

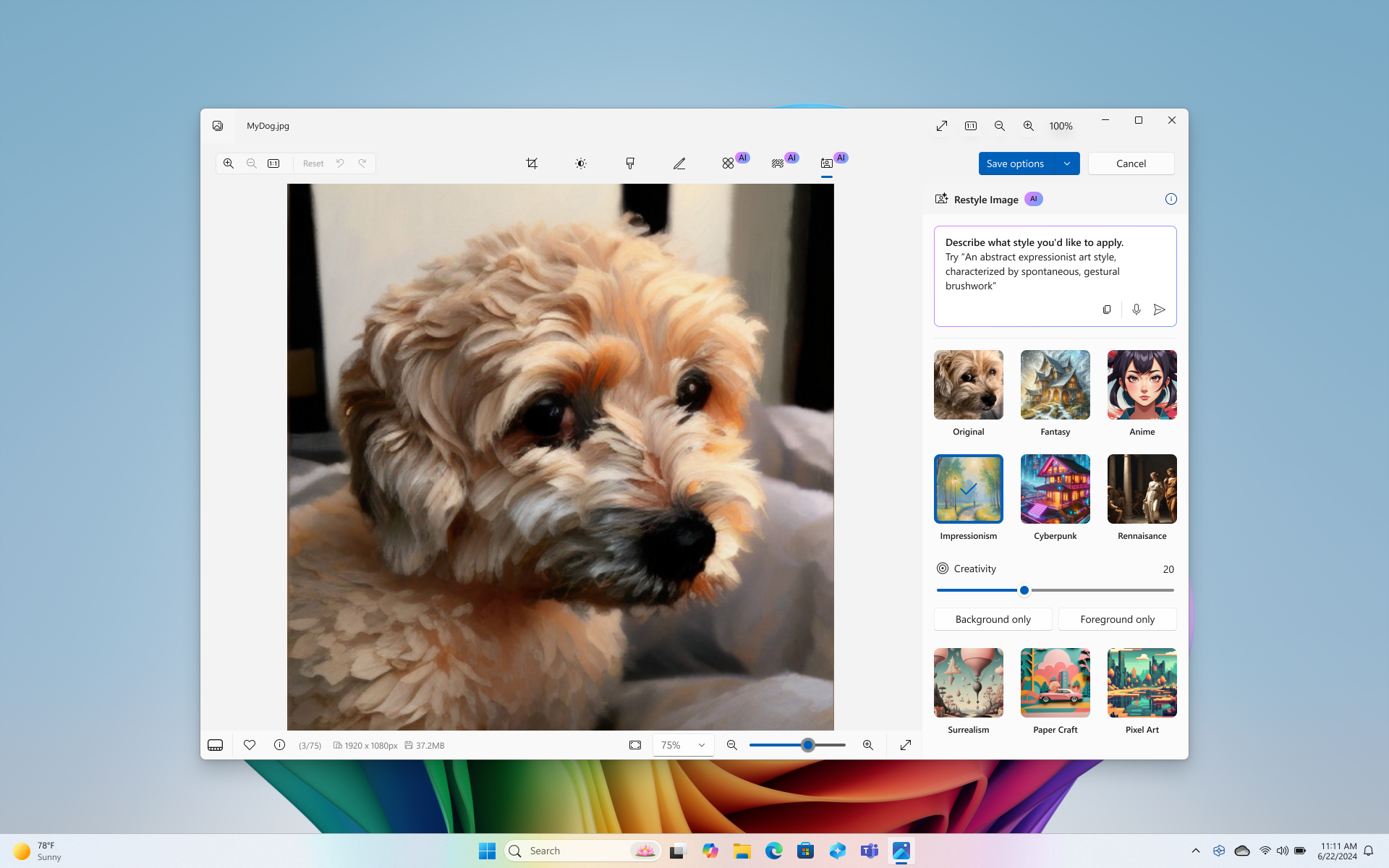

An NPU, or Neural Processing Unit, is a processor dedicated to neural network operations and AI tasks. A computer's CPU and GPU can also handle AI workloads; however, the NPU is optimized for parallel computing, which makes it better at handling AI tasks like image generation, speech recognition, background blurring in video calls, and object detection tasks in photo and video editing software.

While laptops without NPUs can access some of these features, they will struggle with more intensive AI tasks. That is why Microsoft's Copilot+ program requires a laptop's NPU to deliver at least 40 TOPS (trillions of operations per second).
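
For a rough sense of where a TOPS figure comes from, a common back-of-the-envelope estimate multiplies the number of multiply-accumulate (MAC) units by two operations per MAC and by the clock speed. The sketch below uses that estimate. The function names and the hardware numbers are hypothetical illustrations rather than any vendor's published specifications, and treating 40 TOPS as an inclusive minimum is an assumption.

```python
# Rough peak-TOPS estimate: each multiply-accumulate (MAC) counts as two operations.
# The MAC count and clock speed used below are hypothetical, not real chip specs.

# Threshold cited in the article; treating it as an inclusive minimum is an assumption.
COPILOT_PLUS_MIN_TOPS = 40


def estimate_tops(mac_units: int, clock_hz: float) -> float:
    """Return theoretical peak throughput in trillions of operations per second."""
    ops_per_second = 2 * mac_units * clock_hz
    return ops_per_second / 1e12


def meets_copilot_plus(tops: float) -> bool:
    """Check a TOPS figure against the Copilot+ threshold."""
    return tops >= COPILOT_PLUS_MIN_TOPS


if __name__ == "__main__":
    tops = estimate_tops(mac_units=16_384, clock_hz=1.4e9)
    print(f"{tops:.1f} TOPS, qualifies: {meets_copilot_plus(tops)}")
```

A figure like this is a theoretical peak. It says nothing about how well a given app or benchmark actually uses the NPU, which is why measured benchmarks still matter.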

So far, the only laptops that meet Microsoft's new requirement are those featuring Qualcomm Snapdragon X Elite and Snapdragon X Plus chipsets. At the time of publication, nine Copilot+ PCs were available to purchase. However, laptops with an AMD Ryzen AI "Strix Point" processor or Intel Core Ultra "Lunar Lake" processor will also become part of the Copilot+ platform when they are released later this year.

Existing AI Benchmarks

Geekbench ML

Geekbench ML is, naturally, brought to you by the same minds as the industry-standard benchmark Geekbench 6. This multi-platform benchmark measures your computer's machine learning performance on the CPU and GPU, and it can use the Core ML or NNAPI frameworks to test neural accelerators.

Much like Geekbench 6, Geekbench ML uses real-world workloads that utilize computer vision and natural language processing.

Because Geekbench ML combines multiple real-world tests into one benchmark, it returns a synthetic score. With NPU results ranging from under 500 on the OnePlus Nord to over 3,600 on the Apple iPhone 15 Pro, there is massive variability in scores. A higher number is preferred and indicates better NPU performance. However, it can be difficult to conceptualize what separates a Geekbench ML NPU score of 500 from one of 3,600.

We'll start to better understand this as we test more AI PCs and compare the synthetic results with those in real-world testing.

Geekbench ML is not optimized to test the NPU of Qualcomm's Snapdragon X Elite and X Plus chipsets, though the full version of this benchmark, Geekbench AI, has been optimized for the new Qualcomm hardware. For now, we will continue to run Geekbench ML on applicable AI PCs to compare older laptops, but this test will likely be phased out of our benchmark suite within the year.

Geekbench AI

Geekbench AI is a cross-platform AI benchmark built as a follow-up to Geekbench ML. This machine learning benchmark will measure NPU performance in on-device AI tasks rather than simply measuring NPU accelerators. Geekbench AI can also calculate the AI performance of a CPU and GPU, but the NPU benchmark is most important for testing AI PCs.

Like Geekbench 6 and Geekbench ML, Geekbench AI uses real-world AI workloads to measure the performance of a computer's CPU, GPU, or NPU when handling AI tasks. Geekbench AI can run on the Core ML, OpenVINO, ArmNN, Samsung ENN, and Qualcomm QNN AI frameworks.

Like the other Primate Labs benchmarks, Geekbench AI reports synthetic scores, in this case three of them: Single Precision, Half Precision, and Quantized. AI workloads cover a wide range of precision levels depending on the task, which increases the complexity of evaluating AI performance. While this can make it difficult to conceptualize what the Geekbench AI scores mean, a higher score is preferred across all three metrics.
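
To make the idea of precision levels more concrete, here is a minimal sketch of what happens to the same numbers when they are stored at lower precision. It assumes the three score types correspond to 32-bit floating point, 16-bit floating point, and 8-bit integer data. The sample weights and helper names are hypothetical, and this is not how Geekbench AI itself is implemented.

```python
import struct


def to_single(x: float) -> float:
    """Round-trip a value through IEEE 754 single precision (32-bit)."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def to_half(x: float) -> float:
    """Round-trip a value through IEEE 754 half precision (16-bit)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def quantize_int8(values, scale):
    """Symmetric int8 quantization: map floats to integers in [-127, 127] and back."""
    out = []
    for v in values:
        q = max(-127, min(127, round(v / scale)))
        out.append(q * scale)
    return out


# Hypothetical model weights, chosen only to show rounding behavior.
weights = [0.1234567, -0.9876543, 0.5, 0.0003]
# One scale for the whole list, sized so the largest weight maps to 127.
scale = max(abs(w) for w in weights) / 127

for w, q in zip(weights, quantize_int8(weights, scale)):
    print(
        f"original={w:+.7f} single={to_single(w):+.7f} "
        f"half={to_half(w):+.7f} int8={q:+.7f}"
    )
```

Lower precision trades accuracy for speed and power efficiency, which is part of why a chip can post very different results across the three scores.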

As this is the updated version of Geekbench ML, Geekbench AI will likely replace Geekbench ML in our testing suite within the year.

UL Procyon AI Image Generation

UL is the same company that produces 3DMark and PCMark 10, although the Procyon suite of benchmarks is geared more toward professional-grade software benchmarks than gaming or general productivity tests.

The UL Procyon AI Image Generation benchmark measures the inference performance of on-device AI accelerators in generating images using Stable Diffusion. The benchmark includes two tests for measuring the performance of mid-range and high-end discrete GPUs. The benchmark can be configured to work with Nvidia TensorRT, Intel OpenVINO, and ONNX inference engines.

While an NPU can assist with image generation, this Procyon benchmark does not target the NPU. This makes it tricky to use as an AI PC benchmark: a GPU can run AI workloads, but a GPU-focused test isn't specific to AI PCs.

Additionally, the Procyon AI Image Generation benchmark returns a synthetic benchmark score, much like Geekbench ML, which can make it difficult to understand the performance of your AI PC. While the challenge of relying on a test that isn't NPU-specific will remain, we should be able to home in on a better understanding of the results as we test more AI PCs.

UL Procyon AI Computer Vision

The second UL Procyon AI benchmark is the AI Computer Vision test, which can measure AI inference performance using the CPU, GPU, or dedicated AI accelerators like an NPU.

This cross-platform Windows and Mac benchmark can run Nvidia TensorRT, Intel OpenVINO, Qualcomm SNPE, Microsoft Windows ML, and Apple Core ML inference engines.

Much like the Procyon AI Image Generation and Geekbench ML tests, the Procyon AI Computer Vision benchmark produces synthetic scores. While higher scores are preferred, they will again be difficult to depend on as a sole indicator of AI performance. As with the previous test, this is compounded by the benchmark using more than just the NPU.

Other AI Test Workloads

There are a few other benchmark options, though they aren't as formalized as Geekbench ML or the UL Procyon suite. These benchmarks often involve installing software, making changes to the software, and then letting the test workload run.

Some tests involve using programs like Audacity to remove background noise from a video or require the NPU to auto-complete a program in Python. These tests provide a specific result, making them feel more "real-world" than the synthetic results generated from Geekbench or Procyon. However, these tests involve a lengthy setup period and take a long time to run. This makes it difficult for the tests to be repeatable enough to join our benchmarking suite. One attempt at running the Python workload left the review unit laptop unusable until it had been reset multiple times.

So, for now, our AI PC testing mostly involves running Geekbench ML and the two UL Procyon AI tests because they're much simpler to set up and run to obtain reproducible results.
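
One simple way to judge whether a benchmark gives reproducible results is to run it several times on the same machine and look at the run-to-run spread. The sketch below does that with the coefficient of variation. The scores, the function names, and the 3% cutoff are all hypothetical, and this is an illustration rather than a description of Laptop Mag's lab methodology.

```python
import statistics


def summarize_runs(scores):
    """Return mean, sample standard deviation, and coefficient of variation (%)."""
    mean = statistics.mean(scores)
    stdev = statistics.stdev(scores)
    return mean, stdev, 100 * stdev / mean


def is_reproducible(scores, max_cv_percent=3.0):
    """Treat a benchmark as reproducible if run-to-run spread stays under the cutoff."""
    _, _, cv = summarize_runs(scores)
    return cv <= max_cv_percent


# Hypothetical scores from five repeated runs on the same machine.
runs = [1510, 1495, 1502, 1520, 1488]
mean, stdev, cv = summarize_runs(runs)
print(f"mean={mean:.0f} stdev={stdev:.1f} cv={cv:.2f}%")
```

A workload whose results swing widely between runs, or that takes hours to set up each time, is hard to fold into a review process no matter how realistic the task feels.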

The Future of AI Benchmarking

Like most things to do with AI PCs, this list of benchmarks is subject to change. While we aren't on the first generation of AI processors, we are still in the early stage of AI PC development. Companies are still making a case for why you need an AI PC compared to a regular computer. This means we could end up with plenty of new AI PC benchmarks in the future.

Those benchmarks will likely become more varied as AI PC usage and AI PC chips evolve.

The Laptop Mag testing lab will continue to look at new tests, and our process will evolve to ensure that we bring you the best and most accurate representation of AI PC performance.
