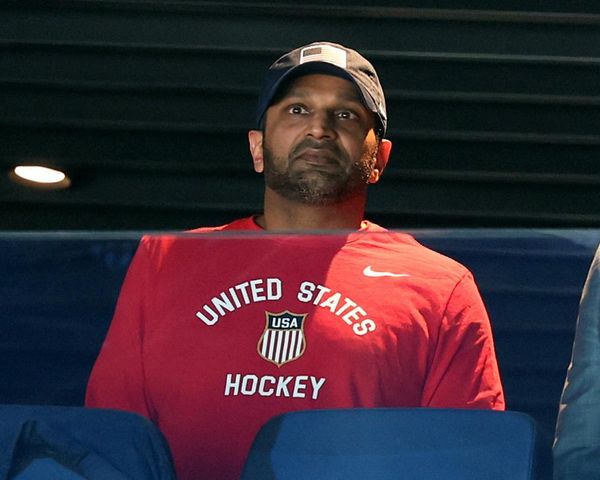

In his role as chief information and digital officer at pharmaceutical giant Merck, Dave Williams estimates that at least 20% of his time is dedicated to cybersecurity.

“It’s more than I would have anticipated before I came into the role,” says Williams. “But when you look at the escalating impact of threats and ransomware attacks, it’s something that we pay very, very close attention to.”

Since late 2019, Williams has led the technology organization for Merck’s research, manufacturing, and business units after previously serving as CIO at the company’s animal health division. He’s worked in the pharmaceuticals industry since 2007, when he was at Schering-Plough, which merged with Merck in a $41.4 billion deal in 2009.

Cybersecurity has always been a priority, but it got even more attention inside Merck after the company was stung by the NotPetya cyberattack in 2017, which reportedly damaged more than 30,000 of the company’s computers. The attack led to $1.4 billion in insurance claims; Merck reached a settlement with insurers only earlier this year.

Merck’s C-suite leadership sees cybersecurity as critical to the business, making it easy for Williams to get all the financing he needs to modernize the company’s tech. “I don’t feel like I ever have to oversell the importance of us staying modern and current,” says Williams. “The reputational risk is huge. We deal with a lot of patient data. And so, it is integrated into everything that we do.”

Cybersecurity risks have led Merck to invest in companywide cyber training programs and zero trust security, a framework that assumes no user, device, or communication can be trusted and thus demands continuous verification.

Merck’s strong cybersecurity controls are an important layer of protection as the company embraces generative artificial intelligence. Merck has built a proprietary platform, GPTeal, which uses generative AI models including OpenAI’s ChatGPT, Meta’s Llama, and Anthropic’s Claude. GPTeal gives employees access to four generative AI products, including an internal chatbot and document translation. The company says 43,000 employees are using GPTeal today and have thus far entered 80 million prompts.

“All of this happens within the guardrails that we’ve built, both technical, as well as policy and governance,” says Williams.

This interview has been edited and condensed for clarity.

How does cybersecurity fit into the overall technology strategy?

We were always invested in cyber, but [NotPetya] was an interesting experience and since that day, it has been an integral part of what we do as a company. From a leadership and cultural standpoint, this is a whole company effort. From the CEO to the executive team to our board, there are constant ongoing and active discussions around cyber. We spend a lot of time educating our entire workforce, because the entry point to companies is usually an unwitting click by someone that exposes the company. We do a lot around our zero trust strategy. And the last thing I would say is: testing, testing, testing. We invite in Mandiant, CrowdStrike, and others to constantly emulate threat actors and we learn a tremendous amount from that.

Can you tell me about your proprietary GPTeal platform and how that works?

We made the very conscious decision as a company to go on offense with generative AI. We viewed this as a game changer. We also understood that you have to be thoughtful about how you do this—you don’t just open up to all these public websites and let your business go in an unfettered fashion. GPTeal is the architecture we created, in partnership with Microsoft, so we have a private instance of OpenAI. We direct all of our internal employees to it, addressing all issues around IP [intellectual property], privacy, and security.

What were the guardrails that you established to ensure your usage of generative AI was safe and protects your data?

In partnership with Microsoft, these private instances of OpenAI ensure that no data is used for training these models and no data goes out to the public models. There are all sorts of technical guardrails that ensure that, and we audit to make sure everything is in place. We have a formal gen AI policy that talks about transparency, testing, and all the normal things you see related to AI ethics. And then even when users go into it, there are flash screens that remind people of what to do, and what not to do, in the models.

You mentioned you are leaning into a blend of generative AI models. What led you to take that approach?

The early bets we placed were that it was going to be transformative for knowledge workers, as well as business use cases. We didn’t want to bet on a model. We felt these models would continue to evolve, and some would be large foundational models, like OpenAI and Gemini, and some would be more special purpose foundational models, like around biology in our case. We have a formal testing process but if there’s a new model we want to bring in, we can do so fairly quickly.

Cybersecurity is constantly in the headlines and it is often described as a game of cat and mouse between fraudsters and corporations. Who is winning the game today?

The good guys are so disadvantaged because we have to be right all the time. The threat actor only has to be right one time and that can have pretty catastrophic impacts across an enterprise. You add to that the legacy we have to deal with: we’re a 130-year-old company. Modern compute platforms are a little easier to secure. When you look at a lot of the legacy systems and applications, some of these things are 10, 20, 30 years old and it’s really hard to secure them the way we’d like to secure them. We are extremely paranoid, humble, and never satisfied. You’ll never hear someone from my team say, ‘We’re in good shape.’ And now, you look at generative AI and all the technologies we like to use to drive value for our core businesses, and these same technologies are used by threat actors.

Beyond generative AI, are there other emerging technologies you look at for the cybersecurity space?

Companies like Zscaler, CrowdStrike, and Palo Alto Networks, those are three partners in this space and our zero trust journey. If you look at one of the CrowdStrike capabilities, we’ve leveraged their AI engines to identify anomalies and do automated alerting. So it’s the combination of automation, AI, cloud-based technologies, and inspection. All of these things come together, again, not to make us comfortable, because we’ll never be comfortable. But it gives us some comfort that we have maximized the visibility we have into our environment and have an ability to detect, react, and hopefully mitigate as much risk as possible.